Pub/Sub

Ingest events for streaming into BigQuery, data lakes or operational databases.

New customers get $300 in free credits to spend on Pub/Sub. All customers get up to 10 GB for ingestion or delivery of messages free per month, not charged against your credits.

Deploy an example data warehouse solution to explore, analyze, and visualize data using BigQuery and Looker Studio. Plus, apply generative AI to summarize the results of the analysis.

No-ops, secure, scalable messaging or queue system

In-order and any-order at-least-once message delivery with pull and push modes

Secure data with fine-grained access controls and always-on encryption

Benefits

High availability made simple

Synchronous, cross-zone message replication and per-message receipt tracking ensure reliable delivery at any scale.

No-planning, auto-everything

Auto-scaling and auto-provisioning with no partitions eliminate planning and ensure workloads are production ready from day one.

Easy, open foundation for real-time data systems

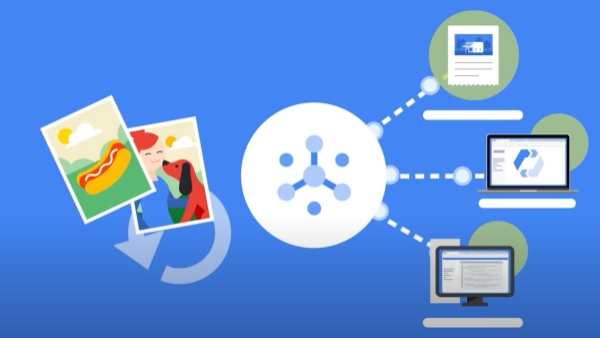

Pub/Sub is a fast, reliable way to land small records at any volume and an entry point for real-time and batch pipelines feeding BigQuery, data lakes, and operational databases. Use it with ETL/ELT pipelines in Dataflow.

Key features

Stream analytics and connectors

Native Dataflow integration enables reliable, expressive, exactly-once processing and integration of event streams in Java, Python, and SQL.

In-order delivery at scale

Optional per-key ordering simplifies stateful application logic without sacrificing horizontal scale—no partitions required.
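
As a rough illustration of what per-key ordering guarantees, the following sketch groups a mixed stream by ordering key using only the Python standard library. It is a local toy model, not the Pub/Sub client API: the function name `group_by_ordering_key` and the sample keys are hypothetical. Messages that share a key keep their publish order, while different keys stay independent and could be handled by separate workers.

```python
from collections import defaultdict


def group_by_ordering_key(messages):
    """Group (ordering_key, data) pairs by key, preserving per-key order.

    Toy model only: order is kept within a key, and nothing is
    promised about the relative order of different keys.
    """
    per_key = defaultdict(list)
    for ordering_key, data in messages:
        per_key[ordering_key].append(data)
    return dict(per_key)


# Interleaved events for two hypothetical users.
stream = [
    ("user-1", "login"),
    ("user-2", "login"),
    ("user-1", "purchase"),
    ("user-2", "logout"),
    ("user-1", "logout"),
]
grouped = group_by_ordering_key(stream)
```

Because each key's events arrive in sequence, a stateful consumer (for example, one tracking a session per user) does not need to buffer and re-sort them.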

Simplified streaming ingestion with native integrations

What's new

Sign up for Google Cloud newsletters to receive product updates, event information, special offers, and more.

Documentation

What is Pub/Sub?

Get a comprehensive overview of Pub/Sub, from core concepts and message flow to common use cases and integrations.

Introduction to Pub/Sub

Learn how to enable Pub/Sub in a Google Cloud project, create a Pub/Sub topic and subscription, publish messages to the topic, and pull them from the subscription.

Quickstart: Using client libraries

See how the Pub/Sub service allows applications to exchange messages reliably, quickly, and asynchronously.

In-order message delivery

Learn how scalable message ordering works and when to use it.

Choosing between Pub/Sub or Pub/Sub Lite

Understand how to make the most of both options.

Quickstart: Stream processing with Dataflow

Learn how to use Dataflow to read messages published to a Pub/Sub topic, window the messages by timestamp, and write the messages to Cloud Storage.
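
To make "window the messages by timestamp" concrete, here is a minimal sketch of fixed (tumbling) windows in plain Python. It is not Dataflow or Apache Beam code; the function names and the 60-second window size are assumptions chosen for illustration. Each message is assigned to the window whose start is its timestamp rounded down to a multiple of the window size.

```python
from collections import defaultdict


def window_start(timestamp_s, size_s):
    """Return the start (in seconds) of the fixed window containing timestamp_s."""
    return int(timestamp_s // size_s) * size_s


def assign_fixed_windows(messages, size_s=60):
    """Bucket (timestamp_s, payload) pairs into fixed windows of size_s seconds."""
    windows = defaultdict(list)
    for timestamp_s, payload in messages:
        windows[window_start(timestamp_s, size_s)].append(payload)
    return dict(windows)


# Hypothetical messages with event timestamps in seconds.
events = [(0, "a"), (59.9, "b"), (60, "c"), (125, "d")]
windowed = assign_fixed_windows(events, size_s=60)
```

In a pipeline, each window's contents would then be written out as a separate batch, for example one file per window.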

Guide: Publishing messages to topics

Learn how to create a message containing your data and send a request to the Pub/Sub server to publish the message to the desired topic.

Use cases

Stream analytics

Google’s stream analytics makes data more organized, useful, and accessible from the instant it’s generated. Built on Pub/Sub along with Dataflow and BigQuery, our streaming solution provisions the resources you need to ingest, process, and analyze fluctuating volumes of real-time data for real-time business insights. This abstracted provisioning reduces complexity and makes stream analytics accessible to both data analysts and data engineers.

Asynchronous microservices integration

Pub/Sub works as messaging middleware for traditional service integration or as a simple communication medium for modern microservices. Push subscriptions deliver events to serverless webhooks on Cloud Functions, App Engine, Cloud Run, or custom environments on Google Kubernetes Engine or Compute Engine. Low-latency pull delivery is available when exposing webhooks is not an option or for efficient handling of higher-throughput streams.
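
A webhook that receives push deliveries has to unpack the request body before it can use the event. The sketch below assumes the standard wrapped push format, a JSON envelope whose `message.data` field is base64-encoded, and assumes the payload is UTF-8 text. The function name `decode_push_body` and the sample resource names are hypothetical, and the surrounding web framework is left out.

```python
import base64
import json


def decode_push_body(body):
    """Decode a wrapped push request body into a plain dict.

    Assumes a JSON envelope with a "message" object whose "data"
    field is base64-encoded UTF-8 text.
    """
    envelope = json.loads(body)
    message = envelope["message"]
    data = base64.b64decode(message.get("data", "")).decode("utf-8")
    return {
        "data": data,
        "attributes": message.get("attributes", {}),
        "message_id": message.get("messageId"),
        "subscription": envelope.get("subscription"),
    }


# Hypothetical request body, as a webhook might receive it.
sample_body = json.dumps({
    "message": {
        "data": base64.b64encode(b"hello").decode("ascii"),
        "attributes": {"origin": "demo"},
        "messageId": "1",
    },
    "subscription": "projects/my-project/subscriptions/my-sub",
}).encode("utf-8")
decoded = decode_push_body(sample_body)
```

The webhook would then return a success status (such as 200 or 204) to acknowledge the message; a failure status causes the message to be redelivered.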

All features

| Feature | Description |
|---|---|
| At-least-once delivery | Synchronous, cross-zone message replication and per-message receipt tracking ensure at-least-once delivery at any scale. |
| Open | Open APIs and client libraries in seven languages support cross-cloud and hybrid deployments. |
| Exactly-once processing | Dataflow supports reliable, expressive, exactly-once processing of Pub/Sub streams. |
| No provisioning, auto-everything | Pub/Sub does not have shards or partitions. Just set your quota, publish, and consume. |
| Compliance and security | Pub/Sub is a HIPAA-compliant service, offering fine-grained access controls and end-to-end encryption. |
| Google Cloud–native integrations | Take advantage of integrations with multiple services, such as Cloud Storage and Gmail update events, and Cloud Functions for serverless event-driven computing. |
| Third-party and OSS integrations | Pub/Sub provides third-party integrations with Splunk and Datadog for logs, along with Striim and Informatica for data integration. Additionally, OSS integrations are available through Confluent Cloud for Apache Kafka and Knative Eventing for Kubernetes-based serverless workloads. |
| Seek and replay | Rewind your backlog to any point in time or to a snapshot to reprocess messages. Fast forward to discard outdated data. |
| Dead letter topics | Dead letter topics set aside messages that subscriber applications cannot process, for offline examination and debugging, so that other messages can be processed without delay. |
| Filtering | Pub/Sub can filter messages based on attributes to reduce delivery volumes to subscribers. |
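
To show how attribute-based filtering reduces delivery volume, the following sketch models it locally in plain Python. It is only a simulation: in the service, a filter is configured on the subscription, whereas here a hypothetical `required` dict of attribute values stands in for that filter, and only exact equality is modeled.

```python
def matches(attributes, required):
    """Return True if every required attribute is present with the same value."""
    return all(attributes.get(key) == value for key, value in required.items())


def deliver(messages, required):
    """Return only the messages whose attributes satisfy the filter."""
    return [m for m in messages if matches(m["attributes"], required)]


# Hypothetical messages; attribute names and values are made up.
published = [
    {"data": "order-1", "attributes": {"region": "eu", "type": "order"}},
    {"data": "order-2", "attributes": {"region": "us", "type": "order"}},
    {"data": "ping", "attributes": {"region": "eu", "type": "heartbeat"}},
]
delivered = deliver(published, {"region": "eu", "type": "order"})
```

Of the three published messages, only the one matching both attributes reaches the subscriber, so the subscriber neither receives nor processes the rest.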

Pricing

Pub/Sub pricing is calculated based upon monthly data volumes. The first 10 GB of data per month is offered at no charge.

| Monthly data volume¹ | Price per TB² |
|---|---|
| First 10 GB | $0.00 |
| Beyond 10 GB | $40.00 |

¹ For detailed pricing information, please consult the pricing guide.

² TB refers to a tebibyte, or 2^40 bytes.
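
As a worked example of the tiers above, this sketch estimates a monthly bill from a data volume in bytes. It makes two assumptions that the table does not state: that the free 10 GB is measured in binary units (10 × 2^30 bytes), to match the tebibyte definition, and that no other charges apply. The function name is hypothetical, and the pricing guide remains the authority on actual billing.

```python
FREE_BYTES = 10 * 2**30      # assumed: free tier of 10 GB in binary units
BYTES_PER_TIB = 2**40        # a tebibyte, per the footnote above
PRICE_PER_TIB_USD = 40.00    # price beyond the free tier


def estimate_monthly_cost_usd(volume_bytes):
    """Estimate the monthly charge in USD for a given data volume in bytes."""
    billable_bytes = max(0, volume_bytes - FREE_BYTES)
    return billable_bytes / BYTES_PER_TIB * PRICE_PER_TIB_USD


# Volume within the free tier.
cost_small = estimate_monthly_cost_usd(5 * 2**30)
# One tebibyte beyond the free tier.
cost_large = estimate_monthly_cost_usd(FREE_BYTES + BYTES_PER_TIB)
```

Under these assumptions, a month with 5 GB of traffic costs nothing, and each tebibyte beyond the free tier adds $40.00.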

If you pay in a currency other than USD, the prices listed in your currency on Cloud Platform SKUs apply.

Take the next step

Start building on Google Cloud with $300 in free credits and 20+ always free products.
