Learn to build and deploy your distributed applications easily to the cloud with Docker

Written and developed by Prakhar Srivastav

Introduction

What is Docker?

Wikipedia defines Docker as

an open-source project that automates the deployment of software applications inside containers by providing an additional layer of abstraction and automation of OS-level virtualization on Linux.

Wow! That's a mouthful. In simpler words, Docker is a tool that allows developers, sys-admins etc. to easily deploy their applications in a sandbox (called containers) to run on the host operating system, i.e. Linux. The key benefit of Docker is that it allows users to package an application with all of its dependencies into a standardized unit for software development. Unlike virtual machines, containers do not have high overhead and hence enable more efficient usage of the underlying system and resources.

What are containers?

The industry standard today is to use Virtual Machines (VMs) to run software applications. VMs run applications inside a guest Operating System, which runs on virtual hardware powered by the server’s host OS.

VMs are great at providing full process isolation for applications: there are very few ways a problem in the host operating system can affect the software running in the guest operating system, and vice-versa. But this isolation comes at great cost — the computational overhead spent virtualizing hardware for a guest OS to use is substantial.

Containers take a different approach: by leveraging the low-level mechanics of the host operating system, containers provide most of the isolation of virtual machines at a fraction of the computing power.

Why use containers?

Containers offer a logical packaging mechanism in which applications can be abstracted from the environment in which they actually run. This decoupling allows container-based applications to be deployed easily and consistently, regardless of whether the target environment is a private data center, the public cloud, or even a developer’s personal laptop. This gives developers the ability to create predictable environments that are isolated from the rest of the applications and can be run anywhere.

From an operations standpoint, apart from portability, containers also give more granular control over resources, which makes your infrastructure more efficient and can result in better utilization of your compute resources.

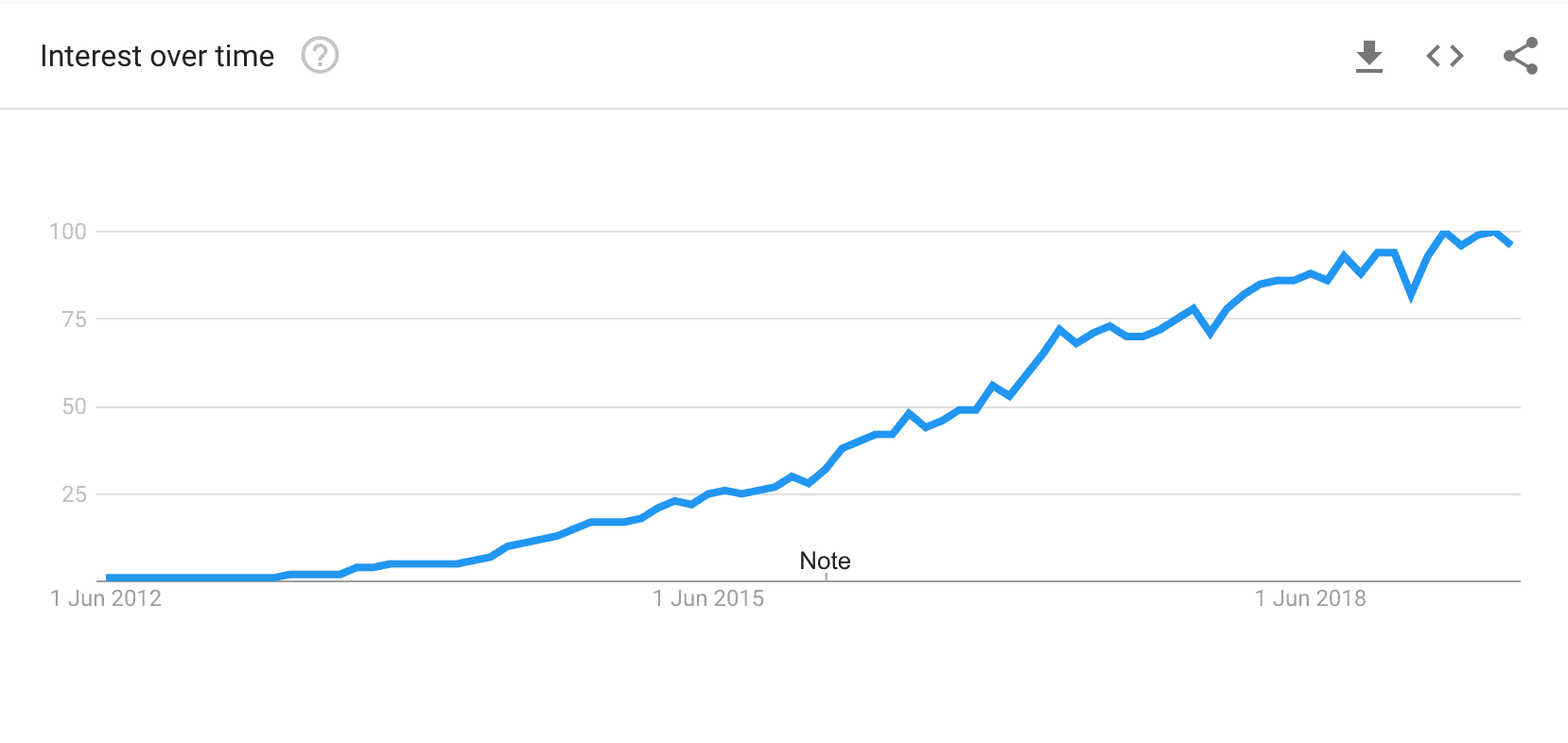

Google Trends for Docker

Due to these benefits, containers (& Docker) have seen widespread adoption. Companies like Google, Facebook, Netflix and Salesforce leverage containers to make large engineering teams more productive and to improve utilization of compute resources. In fact, Google credited containers for eliminating the need for an entire data center.

What will this tutorial teach me?

This tutorial aims to be the one-stop shop for getting your hands dirty with Docker. Apart from demystifying the Docker landscape, it'll give you hands-on experience with building and deploying your own webapps on the Cloud. We'll be using Amazon Web Services to deploy a static website, and two dynamic webapps on EC2 using Elastic Beanstalk and Elastic Container Service. Even if you have no prior experience with deployments, this tutorial should be all you need to get started.

Getting Started

This document contains a series of sections, each of which explains a particular aspect of Docker. In each section, we will be typing commands (or writing code). All the code used in the tutorial is available in the GitHub repo.

Note: This tutorial uses version 18.05.0-ce of Docker. If you find any part of the tutorial incompatible with a future version, please raise an issue. Thanks!

Prerequisites

There are no specific skills needed for this tutorial beyond a basic comfort with the command line and using a text editor. This tutorial uses git clone to clone the repository locally. If you don't have Git installed on your system, either install it or remember to manually download the zip files from GitHub. Prior experience in developing web applications will be helpful but is not required. As we proceed further along the tutorial, we'll make use of a few cloud services. If you're interested in following along, please create an account on each of the services used later in this tutorial: Docker Hub and Amazon Web Services.

Setting up your computer

Getting all the tooling set up on your computer can be a daunting task, but thankfully, as Docker has become stable, getting Docker up and running on your favorite OS has become very easy.

Until a few releases ago, running Docker on OSX and Windows was quite a hassle. Lately, however, Docker has invested significantly in improving the on-boarding experience for its users on these OSes, so running Docker now is a cakewalk. The getting started guide on Docker has detailed instructions for setting up Docker on Mac, Linux and Windows.

Once you are done installing Docker, test your Docker installation by running the following:

$ docker run hello-world

Hello from Docker.

This message shows that your installation appears to be working correctly.

...

Hello World

Playing with Busybox

Now that we have everything set up, it's time to get our hands dirty. In this section, we are going to run a Busybox container on our system and get a taste of the docker run command.

To get started, let's run the following in our terminal:

$ docker pull busybox

Note: Depending on how you've installed Docker on your system, you might see a permission denied error after running the above command. If you're on a Mac, make sure the Docker engine is running. If you're on Linux, then prefix your docker commands with sudo. Alternatively, you can create a docker group to get rid of this issue.

The pull command fetches the busybox image from the Docker registry and saves it to our system. You can use the docker images command to see a list of all images on your system.

$ docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

busybox latest c51f86c28340 4 weeks ago 1.109 MB

Docker Run

Great! Let's now run a Docker container based on this image. To do that we are going to use the almighty docker run command.

$ docker run busybox

$

Wait, nothing happened! Is that a bug? Well, no. Behind the scenes, a lot of stuff happened. When you call run, the Docker client finds the image (busybox in this case), loads up the container and then runs a command in that container. When we ran docker run busybox, we didn't provide a command, so the container booted up, ran its default command (a shell, which exits right away when no terminal is attached) and then exited. Well, yeah - kind of a bummer. Let's try something more exciting.

$ docker run busybox echo "hello from busybox"

hello from busybox

Nice - finally we see some output. In this case, the Docker client dutifully ran the echo command in our busybox container and then exited it. If you've noticed, all of that happened pretty quickly. Imagine booting up a virtual machine, running a command and then killing it. Now you know why they say containers are fast! Ok, now it's time to see the docker ps command. The docker ps command shows you all containers that are currently running.

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Since no containers are running, we see only the header row. Let's try a more useful variant: docker ps -a

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

305297d7a235 busybox "uptime" 11 minutes ago Exited (0) 11 minutes ago distracted_goldstine

ff0a5c3750b9 busybox "sh" 12 minutes ago Exited (0) 12 minutes ago elated_ramanujan

14e5bd11d164 hello-world "/hello" 2 minutes ago Exited (0) 2 minutes ago thirsty_euclid

So what we see above is a list of all containers that we ran. Notice that the STATUS column shows that these containers exited a few minutes ago.

You're probably wondering if there is a way to run more than just one command in a container. Let's try that now:

$ docker run -it busybox sh

/ # ls

bin dev etc home proc root sys tmp usr var

/ # uptime

05:45:21 up 5:58, 0 users, load average: 0.00, 0.01, 0.04

Running the run command with the -it flags attaches us to an interactive tty in the container. Now we can run as many commands in the container as we want. Take some time to run your favorite commands.

Danger Zone: If you're feeling particularly adventurous you can try rm -rf bin in the container. Make sure you run this command in the container and not on your laptop/desktop. Doing this will stop other commands like ls and uptime from working. Once everything stops working, you can exit the container (type exit and press Enter) and then start it up again with the docker run -it busybox sh command. Since Docker creates a new container every time, everything should start working again.

That concludes a whirlwind tour of the mighty docker run command, which will most likely be the command you use most often. It makes sense to spend some time getting comfortable with it. To find out more about run, use docker run --help to see a list of all flags it supports. As we proceed further, we'll see a few more variants of docker run.

Before we move ahead though, let's quickly talk about deleting containers. We saw above that we can still see remnants of the container even after we've exited by running docker ps -a. Throughout this tutorial, you'll run docker run multiple times and leaving stray containers will eat up disk space. Hence, as a rule of thumb, I clean up containers once I'm done with them. To do that, you can run the docker rm command. Just copy the container IDs from above and paste them alongside the command.

$ docker rm 305297d7a235 ff0a5c3750b9

305297d7a235

ff0a5c3750b9

On deletion, you should see the IDs echoed back to you. If you have a bunch of containers to delete in one go, copy-pasting IDs can be tedious. In that case, you can simply run -

$ docker rm $(docker ps -a -q -f status=exited)

This command deletes all containers that have a status of exited. In case you're wondering, the -q flag returns only the container IDs and -f filters output based on the conditions provided. One last thing that'll be useful is the --rm flag that can be passed to docker run, which automatically deletes the container once it exits. For one-off docker runs, the --rm flag is very useful.

In later versions of Docker, the docker container prune command can be used to achieve the same effect.

$ docker container prune

WARNING! This will remove all stopped containers.

Are you sure you want to continue? [y/N] y

Deleted Containers:

4a7f7eebae0f63178aff7eb0aa39f0627a203ab2df258c1a00b456cf20063

f98f9c2aa1eaf727e4ec9c0283bcaa4762fbdba7f26191f26c97f64090360

Total reclaimed space: 212 B

Lastly, you can also delete images that you no longer need by running docker rmi.
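
If you'd rather script the bulk cleanup than type it, the two-step pattern above (list the exited IDs, then hand them to docker rm) could be sketched in Python roughly like this. The function names are made up for illustration and are not part of Docker; the last function assumes the docker CLI is on your PATH.

```python
import subprocess


def exited_container_ids(ps_output: str) -> list[str]:
    """Split the output of `docker ps -a -q -f status=exited` into container IDs."""
    return [line.strip() for line in ps_output.splitlines() if line.strip()]


def build_rm_command(container_ids: list[str]) -> list[str]:
    """Return the argv for `docker rm <id> ...`, or an empty list if there is nothing to delete."""
    if not container_ids:
        return []  # `docker rm` with no arguments is an error, so skip it
    return ["docker", "rm", *container_ids]


def remove_exited_containers() -> None:
    """Run both steps against a local Docker install (hypothetical helper, needs the docker CLI)."""
    listing = subprocess.run(
        ["docker", "ps", "-a", "-q", "-f", "status=exited"],
        capture_output=True, text=True, check=True,
    )
    command = build_rm_command(exited_container_ids(listing.stdout))
    if command:
        subprocess.run(command, check=True)
```

Keeping the command-building separate from the subprocess call means you can check what would be deleted before anything actually runs.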

Terminology

In the last section, we used a lot of Docker-specific jargon which might be confusing to some. So before we go further, let me clarify some terminology that is used frequently in the Docker ecosystem.

- Images - The blueprints of our application which form the basis of containers. In the demo above, we used the docker pull command to download the busybox image.

- Containers - Created from Docker images and run the actual application. We create a container using docker run, which we did using the busybox image that we downloaded. A list of running containers can be seen using the docker ps command.

- Docker Daemon - The background service running on the host that manages building, running and distributing Docker containers. The daemon is the process that runs in the operating system which clients talk to.

- Docker Client - The command line tool that allows the user to interact with the daemon. More generally, there can be other forms of clients too, such as Kitematic, which provides a GUI to the users.

- Docker Hub - A registry of Docker images. You can think of the registry as a directory of all available Docker images. If required, one can host their own Docker registries and can use them for pulling images.

Webapps with Docker

Great! So we have now looked at docker run, played with a Docker container and also got the hang of some terminology. Armed with all this knowledge, we are now ready to get to the real stuff, i.e. deploying web applications with Docker!

Static Sites

Let's start by taking baby-steps. The first thing we're going to look at is how we can run a dead-simple static website. We're going to pull a Docker image from Docker Hub, run the container and see how easy it is to run a webserver.

Let's begin. The image that we are going to use is a single-page website that I've already created for the purpose of this demo and hosted on the registry - prakhar1989/static-site. We can download and run the image directly in one go using docker run. As noted above, the --rm flag automatically removes the container when it exits and the -it flag specifies an interactive terminal which makes it easier to kill the container with Ctrl+C (on Windows).

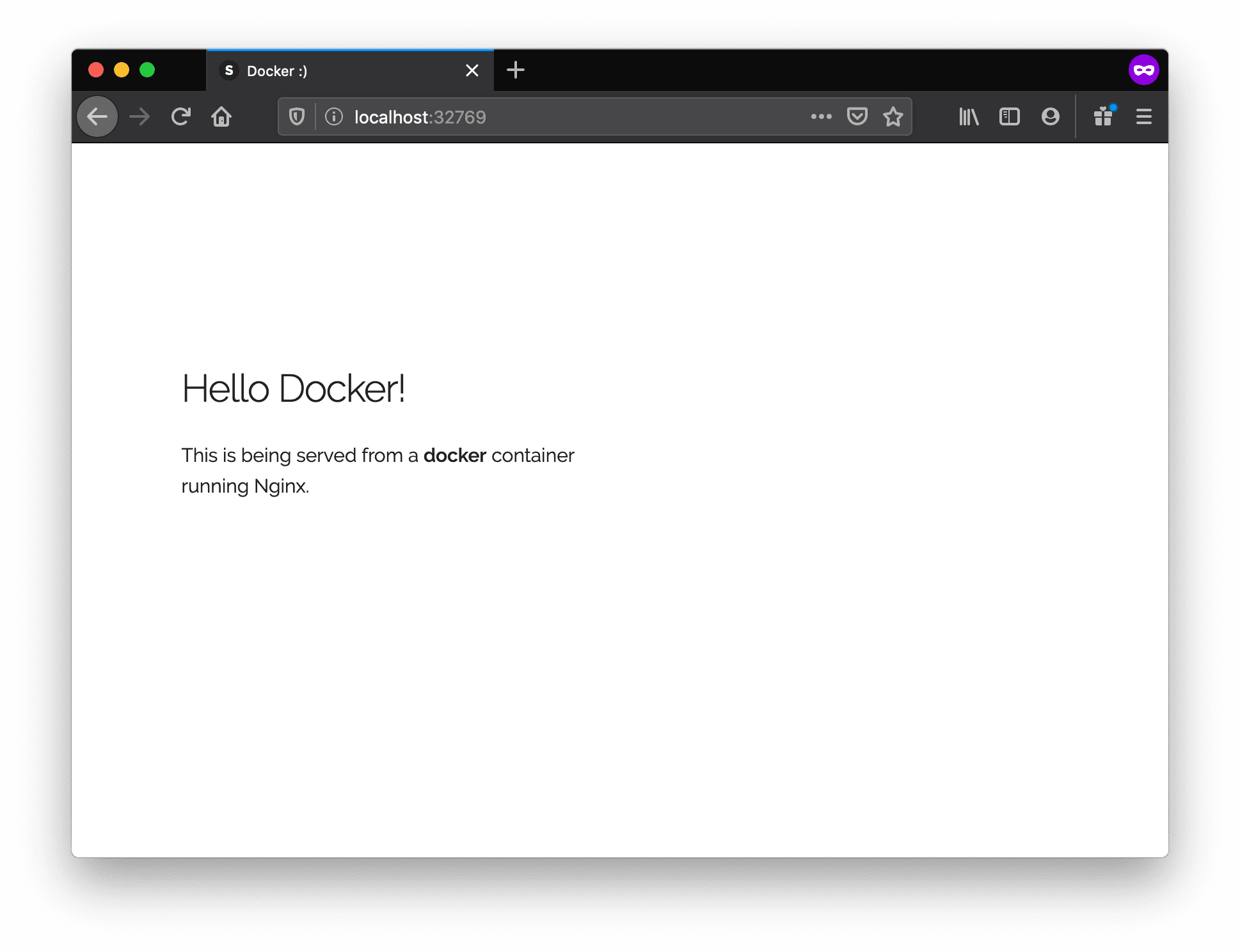

$ docker run --rm -it prakhar1989/static-site

Since the image doesn't exist locally, the client will first fetch the image from the registry and then run the image. If all goes well, you should see a Nginx is running... message in your terminal. Okay, now that the server is running, how do we see the website? What port is it running on? And more importantly, how do we access the container directly from our host machine? Hit Ctrl+C to stop the container.

Well, in this case, the client is not exposing any ports, so we need to re-run the docker run command to publish ports. While we're at it, we should also find a way so that our terminal is not attached to the running container. This way, you can happily close your terminal and keep the container running. This is called detached mode.

$ docker run -d -P --name static-site prakhar1989/static-site

e61d12292d69556eabe2a44c16cbd54486b2527e2ce4f95438e504afb7b02810

In the above command, -d will detach our terminal, -P will publish all exposed ports to random ports and finally --name corresponds to a name we want to give. Now we can see the ports by running the docker port [CONTAINER] command

$ docker port static-site

80/tcp -> 0.0.0.0:32769

443/tcp -> 0.0.0.0:32768

You can open http://localhost:32769 in your browser.
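
Since -P picks random host ports, a script can't know them ahead of time. Here's a minimal sketch of reading them back from the docker port output, assuming the `container_port -> host_ip:host_port` line format shown above. The function name is hypothetical, and if Docker prints more than one line for the same container port (for example one per address family), this simple version keeps only the last one.

```python
def parse_docker_port(output: str) -> dict[str, tuple[str, int]]:
    """Map each container port (e.g. "80/tcp") to its (host_ip, host_port) pair."""
    mappings = {}
    for line in output.splitlines():
        if "->" not in line:
            continue  # skip blank or unexpected lines
        container_port, host = (part.strip() for part in line.split("->", 1))
        # Split on the last ":" so that a host address containing colons still works.
        host_ip, _, host_port = host.rpartition(":")
        mappings[container_port] = (host_ip, int(host_port))
    return mappings


# Sample text copied from the output above.
sample = "80/tcp -> 0.0.0.0:32769\n443/tcp -> 0.0.0.0:32768"
ports = parse_docker_port(sample)
print(f"http://localhost:{ports['80/tcp'][1]}")
```

In a real script, the sample string would be replaced by the captured output of docker port static-site.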

Note: If you're using docker-toolbox, then you might need to use docker-machine ip default to get the IP.

You can also specify a custom port to which the client will forward connections to the container.

$ docker run -p 8888:80 prakhar1989/static-site

Nginx is running...
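
To recap the flags we've met so far (-d, -P, -p, --name and --rm), here's a small sketch that assembles a docker run command line from keyword arguments. The helper and its parameter names are invented for this example; only the flags themselves come from the commands above.

```python
from typing import Optional


def docker_run_args(
    image: str,
    *,
    detach: bool = False,
    publish_all: bool = False,
    publish: Optional[str] = None,
    name: Optional[str] = None,
    remove: bool = False,
) -> list[str]:
    """Build the argv for `docker run` using the flags covered in this section."""
    args = ["docker", "run"]
    if detach:
        args.append("-d")            # run in the background (detached mode)
    if remove:
        args.append("--rm")          # delete the container when it exits
    if publish_all:
        args.append("-P")            # publish every exposed port on a random host port
    if publish:
        args += ["-p", publish]      # publish one mapping, written as "host:container"
    if name:
        args += ["--name", name]     # give the container a friendly name
    args.append(image)
    return args


print(" ".join(docker_run_args("prakhar1989/static-site", detach=True, publish_all=True, name="static-site")))
print(" ".join(docker_run_args("prakhar1989/static-site", publish="8888:80")))
```

The resulting list can be passed straight to subprocess.run if you want to launch the container from a script.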

To stop a detached container, run docker stop by giving the container ID. In this case, we can use the name static-site we used to start the container.

$ docker stop static-site

static-site

I'm sure you agree that was super simple. To deploy this on a real server you would just need to install Docker, and run the above Docker command. Now that you've seen how to run a webserver from a Docker image, you must be wondering - how do I create my own Docker image? This is the question we'll be exploring in the next section.

Docker Images

We've looked at images before, but in this section we'll dive deeper into what Docker images are and build our own image! Lastly, we'll also use that image to run our application locally and finally deploy on AWS to share it with our friends! Excited? Great! Let's get started.

Docker images are the basis of containers. In the previous example, we pulled the Busybox image from the registry and asked the Docker client to run a container based on that image. To see the list of images that are available locally, use the docker images command.

$ docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

prakhar1989/catnip latest c7ffb5626a50 2 hours ago 697.9 MB

prakhar1989/static-site latest b270625a1631 21 hours ago 133.9 MB

python 3-onbuild cf4002b2c383 5 days ago 688.8 MB

martin/docker-cleanup-volumes latest b42990daaca2 7 weeks ago 22.14 MB

ubuntu latest e9ae3c220b23 7 weeks ago 187.9 MB

busybox latest c51f86c28340 9 weeks ago 1.109 MB

hello-world latest 0a6ba66e537a 11 weeks ago 960 B

The above gives a list of images that I've pulled from the registry, along with ones that I've created myself (we'll shortly see how). The TAG refers to a particular snapshot of the image and the IMAGE ID is the corresponding unique identifier for that image.

For simplicity, you can think of an image as akin to a git repository - images can be committed with changes and have multiple versions. If you don't provide a specific version number, the client defaults to latest. For example, you can pull a specific version of the ubuntu image

$ docker pull ubuntu:18.04

To get a new Docker image you can either get it from a registry (such as the Docker Hub) or create your own. There are tens of thousands of images available on Docker Hub. You can also search for images directly from the command line using docker search.

An important distinction to be aware of when it comes to images is the difference between base and child images.

Base images are images that have no parent image, usually images with an OS like ubuntu, busybox or debian.

Child images are images that build on base images and add additional functionality.

Then there are official and user images, which can be both base and child images.

Official images are images that are officially maintained and supported by the folks at Docker. These are typically one word long. In the list of images above, the python, ubuntu, busybox and hello-world images are official images.

User images are images created and shared by users like you and me. They build on base images and add additional functionality. Typically, these are formatted as user/image-name.
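
The naming rules above (a missing tag means latest, one-word names are typically official, user/image-name belongs to a user) can be captured in a few lines of Python. This is only a sketch of the convention as described here, not how the Docker client actually resolves names, and the function and key names are made up.

```python
def describe_image(reference: str) -> dict:
    """Split a reference like "ubuntu:18.04" or "user/image" into its parts."""
    name, sep, tag = reference.rpartition(":")
    if not sep or "/" in tag:
        # No tag was given. (A ":" followed later by "/" belongs to a registry
        # host:port prefix rather than to a tag.)
        name, tag = reference, "latest"
    return {
        "repository": name,
        "tag": tag,
        # Heuristic from the text above: official images are typically one word long.
        "looks_official": "/" not in name,
    }


for ref in ("ubuntu:18.04", "busybox", "prakhar1989/static-site"):
    print(ref, "->", describe_image(ref))
```

The looks_official flag is a guess based on the name alone, so treat it as a hint rather than a guarantee.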

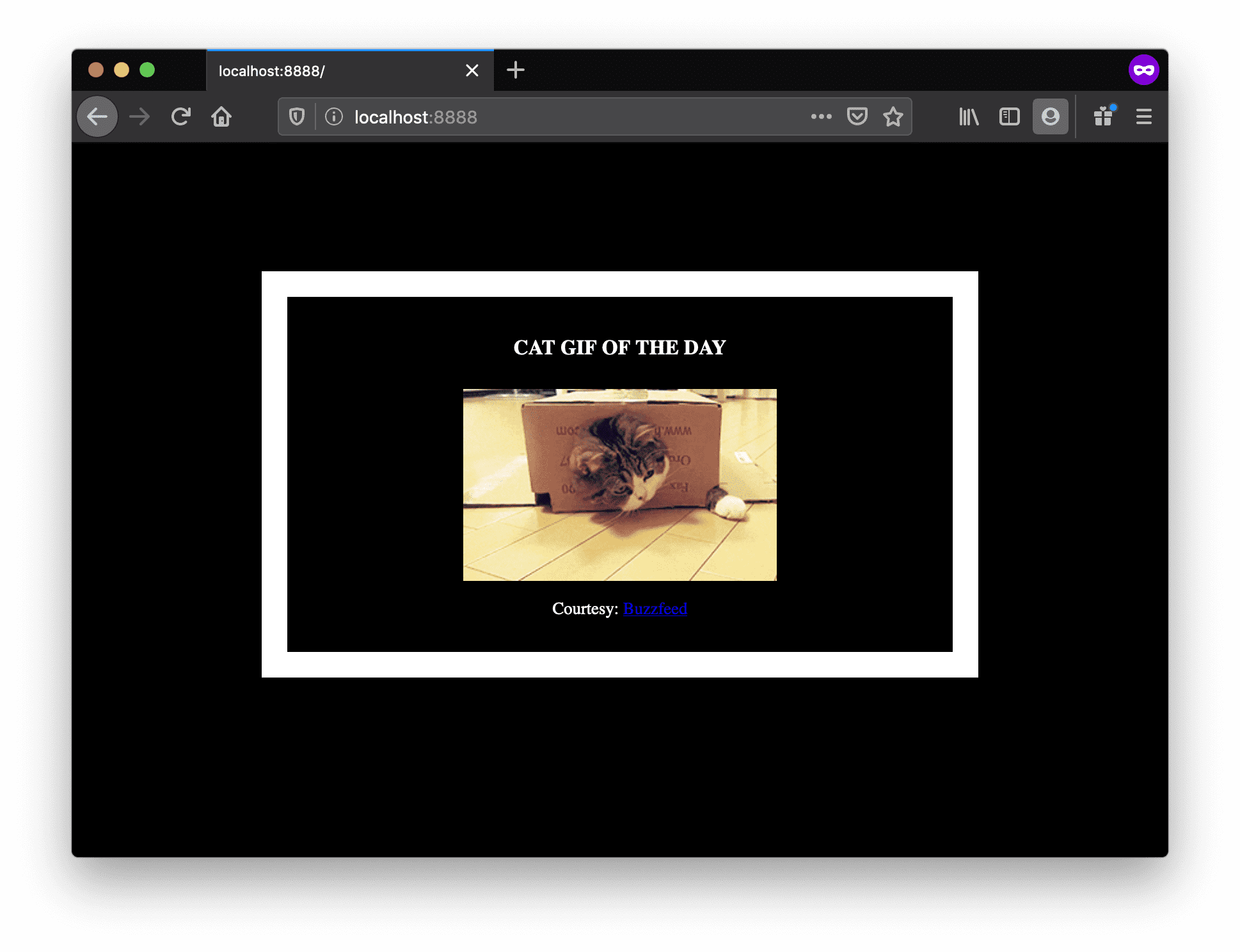

Our First Image

Now that we have a better understanding of images, it's time to create our own. Our goal in this section will be to create an image that sandboxes a simple Flask application. For the purposes of this workshop, I've already created a fun little Flask app that displays a random cat .gif every time it is loaded - because you know, who doesn't like cats? If you haven't already, please go ahead and clone the repository locally like so -

$ git clone https://github.com/prakhar1989/docker-curriculum.git

$ cd docker-curriculum/flask-app

This should be cloned on the machine where you are running the docker commands and not inside a docker container.

The next step now is to create an image with this web app. As mentioned above, all user images are based on a base image. Since our application is written in Python, the base image we're going to use will be Python 3.

Dockerfile

A Dockerfile is a simple text file that contains a list of commands that the Docker client calls while creating an image. It's a simple way to automate the image creation process. The best part is that the commands you write in a Dockerfile are almost identical to their equivalent Linux commands. This means you don't really have to learn new syntax to create your own Dockerfiles.

The application directory does contain a Dockerfile but since we're doing this for the first time, we'll create one from scratch. To start, create a new blank file in your favorite text editor and save it in the same folder as the flask app by the name of Dockerfile.

We start with specifying our base image. Use the FROM keyword to do that -

FROM python:3.8

The next step usually is to write the commands for copying the files and installing the dependencies. First, we set a working directory and then copy all the files for our app.

# set a directory for the app

WORKDIR /usr/src/app

# copy all the files to the container

COPY . .

Now that we have the files, we can install the dependencies.

# install dependencies

RUN pip install --no-cache-dir -r requirements.txt

The next thing we need to specify is the port number that needs to be exposed. Since our flask app is running on port 5000, that's what we'll indicate.

EXPOSE 5000

The last step is to write the command for running the application, which is simply python ./app.py. We use the CMD command to do that -

CMD ["python", "./app.py"]

The primary purpose of CMD is to tell the container which command it should run when it is started. With that, our Dockerfile is now ready. This is how it looks -

FROM python:3.8

# set a directory for the app

WORKDIR /usr/src/app

# copy all the files to the container

COPY . .

# install dependencies

RUN pip install --no-cache-dir -r requirements.txt

# define the port number the container should expose

EXPOSE 5000

# run the command

CMD ["python", "./app.py"]

Now that we have our Dockerfile, we can build our image. The docker build command does the heavy lifting of creating a Docker image from a Dockerfile.

The section below shows you the output of running the same. Before you run the command yourself (don't forget the period), make sure to replace my username with yours. This username should be the same one you created when you registered on Docker Hub. If you haven't done that yet, please go ahead and create an account. The docker build command is quite simple - it takes an optional tag name with -t and a location of the directory containing the Dockerfile.

$ docker build -t yourusername/catnip .

Sending build context to Docker daemon 8.704 kB

Step 1: FROM python:3.8

# Executing 3 build triggers...

Step 1: COPY requirements.txt /usr/src/app/

---> Using cache

Step 1: RUN pip install --no-cache-dir -r requirements.txt

---> Using cache

Step 1: COPY . /usr/src/app

---> 1d61f639ef9e

Removing intermediate container 4de6ddf5528c

Step 2: EXPOSE 5000

---> Running in 12cfcf6d67ee

---> f423c2f179d1

Removing intermediate container 12cfcf6d67ee

Step 3: CMD python ./app.py

---> Running in f01401a5ace9

---> 13e87ed1fbc2

Removing intermediate container f01401a5ace9

Successfully built 13e87ed1fbc2

If you don't have the python:3.8 image, the client will first pull the image and then create your image. Hence, your output from running the command will look different from mine. If everything went well, your image should be ready! Run docker images and see if your image shows.

The last step in this section is to run the image and see if it actually works (replacing my username with yours).

$ docker run -p 8888:5000 yourusername/catnip

* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

The command we just ran used port 5000 for the server inside the container and published it on port 8888 of the host. Head over to the URL with port 8888 (for example, http://localhost:8888), where your app should be live.
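
To make the link between EXPOSE in the Dockerfile and the -p flag a bit more concrete, here's a rough sketch that reads the exposed port out of the Dockerfile text and builds the matching host:container mapping. The helper names are made up, the host port 8888 is simply the one used above, and the parser assumes EXPOSE is followed by plain numbers (no variables).

```python
DOCKERFILE = """\
FROM python:3.8
# set a directory for the app
WORKDIR /usr/src/app
# copy all the files to the container
COPY . .
# install dependencies
RUN pip install --no-cache-dir -r requirements.txt
# define the port number the container should expose
EXPOSE 5000
# run the command
CMD ["python", "./app.py"]
"""


def exposed_ports(dockerfile_text: str) -> list[int]:
    """Collect port numbers from EXPOSE lines, ignoring comments and blank lines."""
    ports = []
    for raw_line in dockerfile_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)  # instruction, then the rest of the line
        if len(parts) == 2 and parts[0].upper() == "EXPOSE":
            for token in parts[1].split():
                ports.append(int(token.split("/")[0]))  # drop a "/tcp" or "/udp" suffix
    return ports


def publish_flag(host_port: int, container_port: int) -> str:
    """Format the value passed to `docker run -p`."""
    return f"{host_port}:{container_port}"


container_port = exposed_ports(DOCKERFILE)[0]
print("docker run -p", publish_flag(8888, container_port), "yourusername/catnip")
```

The number on the right of the colon has to match the port the app listens on inside the container; the number on the left is whatever free port you want on your machine.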

Congratulations! You have successfully created your first docker image.

Docker on AWS

What good is an application that can't be shared with friends, right? So in this section we are going to see how we can deploy our awesome application to the cloud so that we can share it with our friends! We're going to use AWS Elastic Beanstalk to get our application up and running in a few clicks. We'll also see how easy it is to make our application scalable and manageable with Beanstalk!

Docker push

The first thing that we need to do before we deploy our app to AWS is to publish our image on a registry which can be accessed by AWS. There are many different Docker registries you can use (you can even host your own). For now, let's use Docker Hub to publish the image.

If this is the first time you are pushing an image, the client will ask you to log in. Provide the same credentials that you used for logging into Docker Hub.

$ docker login

Login with your Docker ID to push and pull images from Docker Hub. If you do not have a Docker ID, head over to https://hub.docker.com to create one.

Username: yourusername

Password:

WARNING! Your password will be stored unencrypted in /Users/yourusername/.docker/config.json

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/credential-store

Login Succeeded

To publish, just type the command below, remembering to replace the name of the image tag above with yours. It is important to have the format of yourusername/image_name so that the client knows where to publish.

$ docker push yourusername/catnip

Once that is done, you can view your image on Docker Hub. For example, here's the web page for my image.

Note: One thing that I'd like to clarify before we go ahead is that it is not imperative to host your image on a public registry (or any registry) in order to deploy to AWS. In case you're writing code for the next million-dollar unicorn startup you can totally skip this step. The reason why we're pushing our images publicly is that it makes deployment super simple by skipping a few intermediate configuration steps.

Now that your image is online, anyone who has docker installed can play with your app by typing just a single command.

$ docker run -p 8888:5000 yourusername/catnip

If you've pulled your hair out in setting up local dev environments / sharing application configuration in the past, you very well know how awesome this sounds. That's why Docker is so cool!

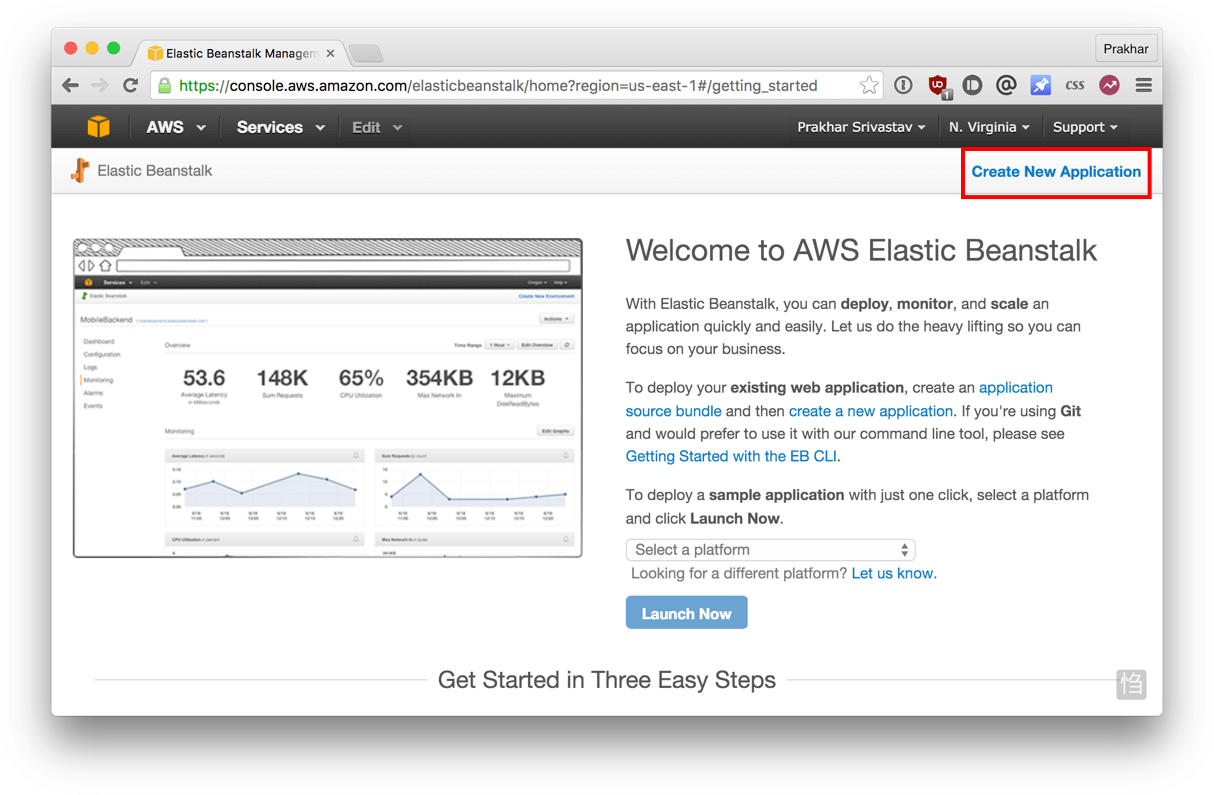

Beanstalk

AWS Elastic Beanstalk (EB) is a PaaS (Platform as a Service) offered by AWS. If you've used Heroku, Google App Engine etc. you'll feel right at home. As a developer, you just tell EB how to run your app and it takes care of the rest - including scaling, monitoring and even updates. In April 2014, EB added support for running single-container Docker deployments, which is what we'll use to deploy our app. Although EB has a very intuitive CLI, it does require some setup, and to keep things simple we'll use the web UI to launch our application.

To follow along, you need a functioning AWS account. If you haven't already, please go ahead and create one now - you will need to enter your credit card information. But don't worry, it's free and anything we do in this tutorial will also be free! Let's get started.

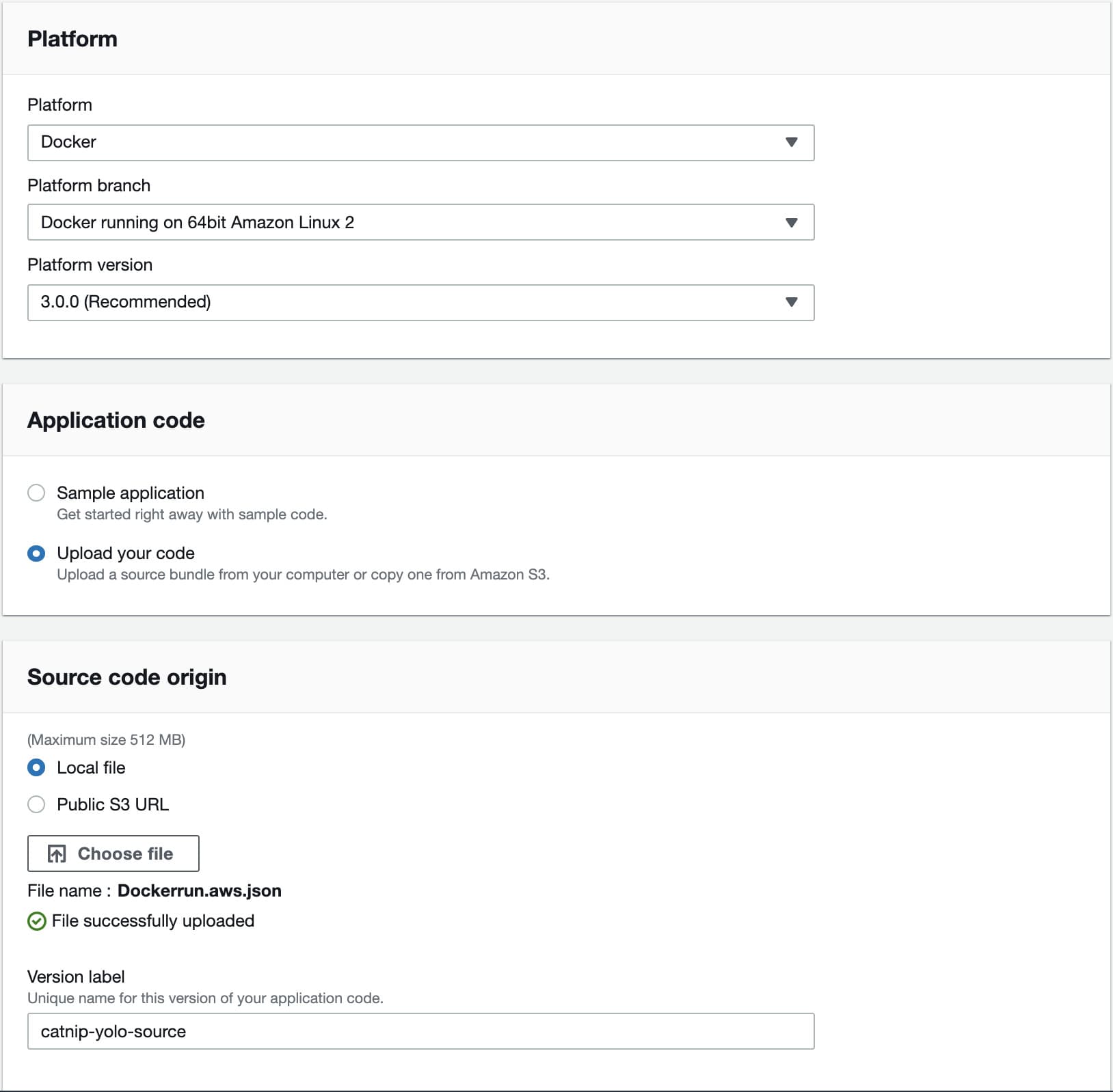

Here are the steps:

- Log in to your AWS console.

- Click on Elastic Beanstalk. It will be in the compute section on the top left. Alternatively, you can access the Elastic Beanstalk console.

- Click on "Create New Application" in the top right.

- Give your app a memorable (but unique) name and provide an (optional) description.

- In the New Environment screen, create a new environment and choose the Web Server Environment.

- Fill in the environment information by choosing a domain. This URL is what you'll share with your friends so make sure it's easy to remember.

- Under the base configuration section, choose Docker from the predefined platform.

- Now we need to upload our application code. But since our application is packaged in a Docker container, we just need to tell EB about our container. Open the Dockerrun.aws.json file located in the flask-app folder and edit the Name of the image to your image's name. Don't worry, I'll explain the contents of the file shortly. When you are done, click on the radio button for "Upload your Code", choose this file, and click on "Upload".

- Now click on "Create environment". The final screen that you see will have a few spinners indicating that your environment is being set up. It typically takes around 5 minutes for the first-time setup.

While we wait, let's quickly see what the Dockerrun.aws.json file contains. This file is basically an AWS-specific file that tells EB details about our application and docker configuration.

{

"AWSEBDockerrunVersion":"1",

"Image":{

"Name":"prakhar1989/catnip",

"Update":"true"

},

"Ports":[

{

"ContainerPort":5000,

"HostPort":8000

}

],

"Logging":"/var/log/nginx"

}

The file should be pretty self-explanatory, but you can always reference the official documentation for more information. We provide the name of the image that EB should use along with a port that the container should open.
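
If you'd rather generate this file than edit it by hand, a small Python sketch along these lines would do. It only mirrors the fields shown above and is not a full description of the format; the function name and its default host port are assumptions made for this example.

```python
import json


def make_dockerrun(image_name: str, container_port: int, host_port: int = 8000) -> str:
    """Return Dockerrun.aws.json content with the same fields as the file above."""
    config = {
        "AWSEBDockerrunVersion": "1",
        "Image": {"Name": image_name, "Update": "true"},
        "Ports": [{"ContainerPort": container_port, "HostPort": host_port}],
        "Logging": "/var/log/nginx",
    }
    return json.dumps(config, indent=2)


# Replace yourusername with your own Docker Hub username before uploading.
print(make_dockerrun("yourusername/catnip", 5000))
```

Writing the returned string to a file named Dockerrun.aws.json gives you something to upload in the step above.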

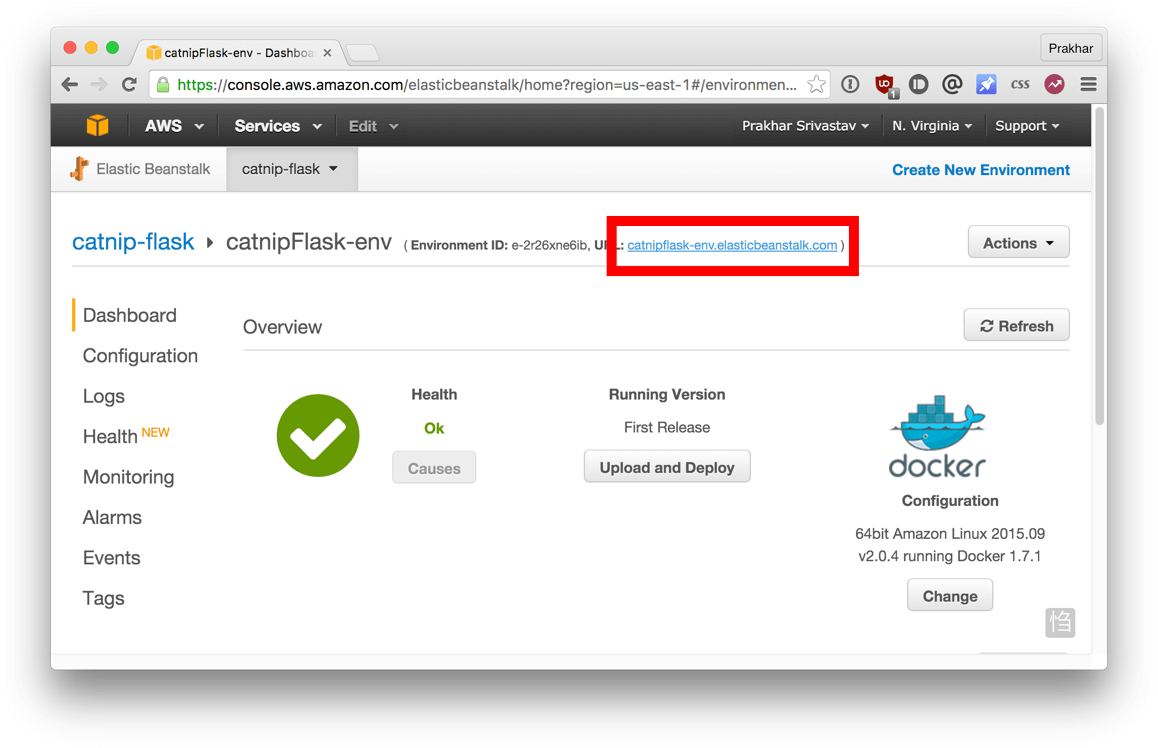

Hopefully by now, our instance should be ready. Head over to the EB page and you should see a green tick indicating that your app is alive and kicking.

Go ahead and open the URL in your browser and you should see the application in all its glory. Feel free to email / IM / snapchat this link to your friends and family so that they can enjoy a few cat gifs, too.

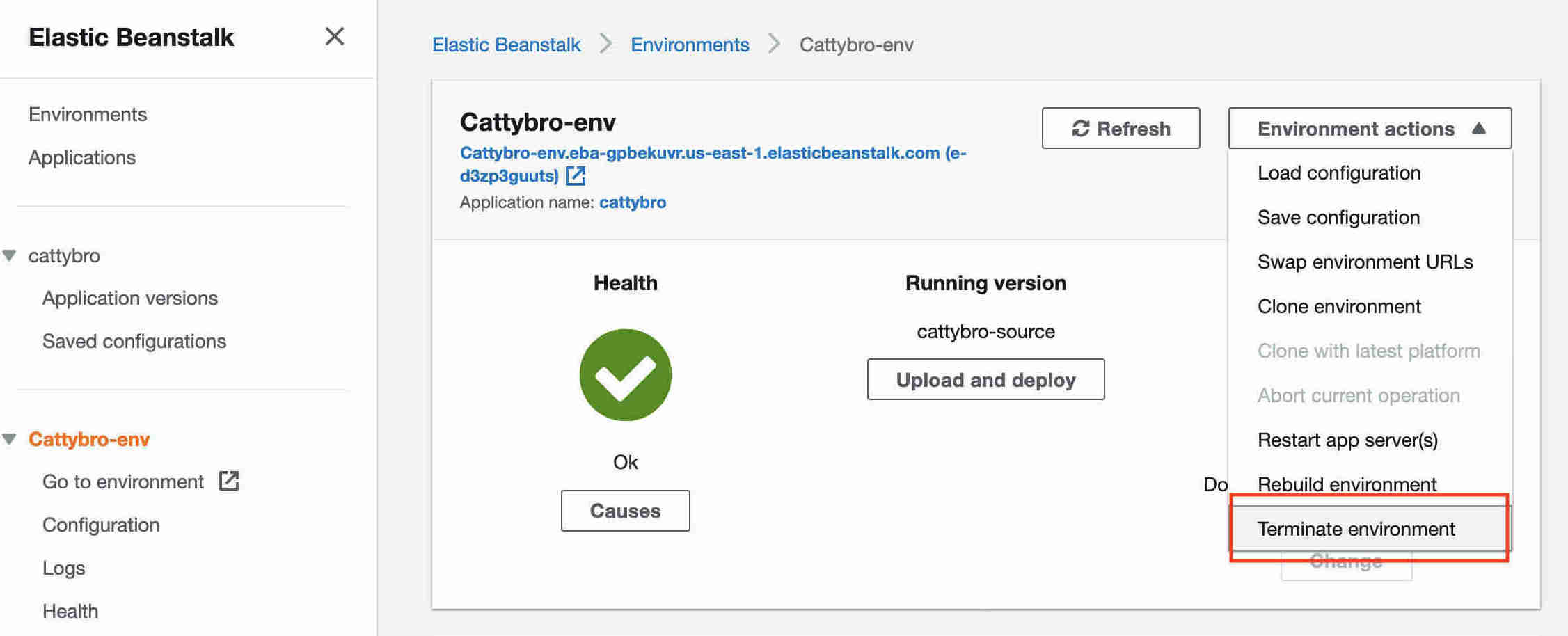

Cleanup

Once you're done basking in the glory of your app, remember to terminate the environment so that you don't end up getting charged for extra resources.

Congratulations! You have deployed your first Docker application! That might seem like a lot of steps, but with the command-line tool for EB you can almost mimic the functionality of Heroku in a few keystrokes! Hopefully, you agree that Docker takes away a lot of the pains of building and deploying applications in the cloud. I would encourage you to read the AWS documentation on single-container Docker environments to get an idea of what features exist.

In the next (and final) part of the tutorial, we'll up the ante a bit and deploy an application that mimics the real-world more closely; an app with a persistent back-end storage tier. Let's get straight to it!

Multi-container Environments

In the last section, we saw how easy and fun it is to run applications with Docker. We started with a simple static website and then tried a Flask app, both of which we could run locally and in the cloud with just a few commands. One thing both these apps had in common was that they were running in a single container.

Those of you who have experience running services in production know that apps nowadays are usually not that simple. There's almost always a database (or any other kind of persistent storage) involved. Systems such as Redis and Memcached have become de rigueur in most web application architectures. Hence, in this section we are going to spend some time learning how to Dockerize applications which rely on different services to run.

In particular, we are going to see how we can run and manage multi-container docker environments. Why multi-container, you might ask? Well, one of the key points of Docker is the way it provides isolation. The idea of bundling a process with its dependencies in a sandbox (called containers) is what makes this so powerful.

Just like it's a good strategy to decouple your application tiers, it is wise to keep containers for each of the services separate. Each tier is likely to have different resource needs and those needs might grow at different rates. By separating the tiers into different containers, we can compose each tier using the most appropriate instance type based on different resource needs. This also plays in very well with the whole microservices movement, which is one of the main reasons why Docker (or any other container technology) is at the forefront of modern microservices architectures.

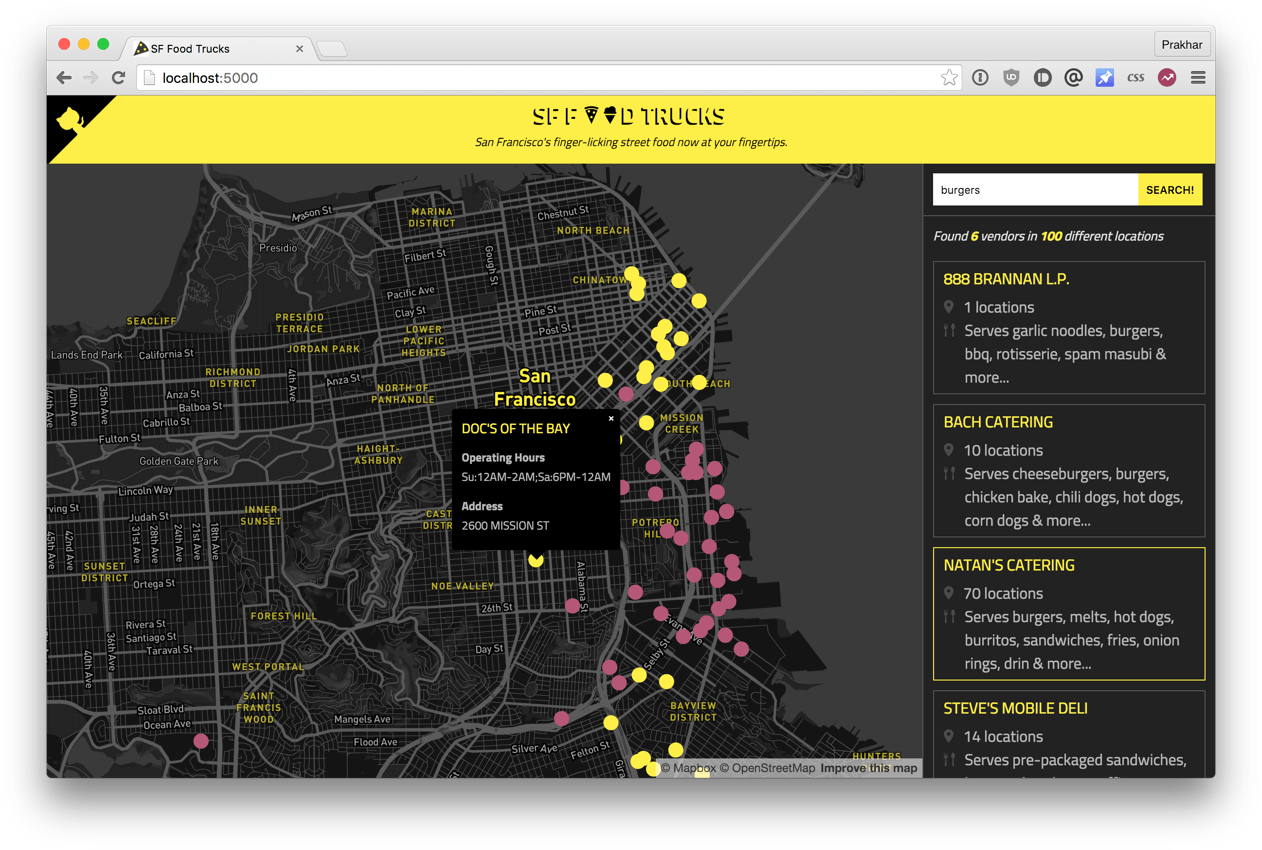

SF Food Trucks

The app that we're going to Dockerize is called SF Food Trucks. My goal in building this app was to have something that is useful (in that it resembles a real-world application), relies on at least one service, but is not too complex for the purpose of this tutorial. This is what I came up with.

The app's backend is written in Python (Flask) and for search it uses Elasticsearch. Like everything else in this tutorial, the entire source is available on GitHub. We'll use this as our candidate application for learning how to build, run and deploy a multi-container environment.

First up, let's clone the repository locally.

$ git clone https://github.com/prakhar1989/FoodTrucks

$ cd FoodTrucks

$ tree -L 2
.
├── Dockerfile
├── README.md
├── aws-compose.yml
├── docker-compose.yml
├── flask-app
│   ├── app.py
│   ├── package-lock.json
│   ├── package.json
│   ├── requirements.txt
│   ├── static
│   ├── templates
│   └── webpack.config.js
├── setup-aws-ecs.sh
├── setup-docker.sh
├── shot.png
└── utils
    ├── generate_geojson.py
    └── trucks.geojson

The flask-app folder contains the Python application, while the utils folder has some utilities to load the data into Elasticsearch. The directory also contains some YAML files and a Dockerfile, all of which we'll see in greater detail as we progress through this tutorial. If you are curious, feel free to take a look at the files.

Now that you're excited (hopefully), let's think of how we can Dockerize the app. We can see that the application consists of a Flask backend server and an Elasticsearch service. A natural way to split this app would be to have two containers - one running the Flask process and another running the Elasticsearch (ES) process. That way if our app becomes popular, we can scale it by adding more containers depending on where the bottleneck lies.

Great, so we need two containers. That shouldn't be hard, right? We've already built our own Flask container in the previous section. And for Elasticsearch, let's see if we can find something on the hub.

$ docker search elasticsearch

NAME                              DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
elasticsearch                     Elasticsearch is a powerful open source se...   697     [OK]
itzg/elasticsearch                Provides an easily configurable Elasticsea...   17                 [OK]
tutum/elasticsearch               Elasticsearch image - listens in port 9200.     15                 [OK]
barnybug/elasticsearch            Latest Elasticsearch 1.7.2 and previous re...   15                 [OK]
digitalwonderland/elasticsearch   Latest Elasticsearch with Marvel & Kibana       12                 [OK]
monsantoco/elasticsearch          ElasticSearch Docker image                      9                  [OK]

Quite unsurprisingly, there exists an officially supported image for Elasticsearch. To get ES running, we can simply use docker run and have a single-node ES container running locally within no time.

Note: Elastic, the company behind Elasticsearch, maintains its own registry for Elastic products. It's recommended to use the images from that registry if you plan to use Elasticsearch.

Let's first pull the image

$ docker pull docker.elastic.co/elasticsearch/elasticsearch:6.3.2

and then run it in development mode by specifying ports and setting an environment variable that configures the Elasticsearch cluster to run as a single-node.

$ docker run -d --name es -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:6.3.2

277451c15ec183dd939e80298ea4bcf55050328a39b04124b387d668e3ed3943

Note: If your container runs into memory issues, you might need to tweak some JVM flags to limit its memory consumption.

As seen above, we use --name es to give our container a name, which makes it easy to use in subsequent commands. Once the container is started, we can inspect its logs by running docker container logs with the container name (or ID). You should see logs similar to below if Elasticsearch started successfully.

Note: Elasticsearch takes a few seconds to start so you might need to wait before you see initialized in the logs.

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

277451c15ec1   docker.elastic.co/elasticsearch/elasticsearch:6.3.2   "/usr/local/bin/dock…"   2 minutes ago   Up 2 minutes   0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp   es

$ docker container logs es

[2018-07-29T05:49:09,304][INFO ][o.e.n.Node ] [] initializing...

[2018-07-29T05:49:09,385][INFO ][o.e.e.NodeEnvironment ] [L1VMyzt] using [1] data paths, mounts [[/ (overlay)]], net usable_space [54.1gb], net total_space [62.7gb], types [overlay]

[2018-07-29T05:49:09,385][INFO ][o.e.e.NodeEnvironment ] [L1VMyzt] heap size [990.7mb], compressed ordinary object pointers [true]

[2018-07-29T05:49:11,979][INFO ][o.e.p.PluginsService ] [L1VMyzt] loaded module [x-pack-security]

[2018-07-29T05:49:11,980][INFO ][o.e.p.PluginsService ] [L1VMyzt] loaded module [x-pack-sql]

[2018-07-29T05:49:11,980][INFO ][o.e.p.PluginsService ] [L1VMyzt] loaded module [x-pack-upgrade]

[2018-07-29T05:49:11,980][INFO ][o.e.p.PluginsService ] [L1VMyzt] loaded module [x-pack-watcher]

[2018-07-29T05:49:11,981][INFO ][o.e.p.PluginsService ] [L1VMyzt] loaded plugin [ingest-geoip]

[2018-07-29T05:49:11,981][INFO ][o.e.p.PluginsService ] [L1VMyzt] loaded plugin [ingest-user-agent]

[2018-07-29T05:49:17,659][INFO ][o.e.d.DiscoveryModule ] [L1VMyzt] using discovery type [single-node]

[2018-07-29T05:49:18,962][INFO ][o.e.n.Node ] [L1VMyzt] initialized

[2018-07-29T05:49:18,963][INFO ][o.e.n.Node ] [L1VMyzt] starting...

[2018-07-29T05:49:19,218][INFO ][o.e.t.TransportService ] [L1VMyzt] publish_address {172.17.0.2:9300}, bound_addresses {0.0.0.0:9300}

[2018-07-29T05:49:19,302][INFO ][o.e.x.s.t.n.SecurityNetty4HttpServerTransport] [L1VMyzt] publish_address {172.17.0.2:9200}, bound_addresses {0.0.0.0:9200}

[2018-07-29T05:49:19,303][INFO ][o.e.n.Node ] [L1VMyzt] started

[2018-07-29T05:49:19,439][WARN ][o.e.x.s.a.s.m.NativeRoleMappingStore] [L1VMyzt] Failed to clear cache for realms [[]]

[2018-07-29T05:49:19,542][INFO ][o.e.g.GatewayService ] [L1VMyzt] recovered [0] indices into cluster_state

Now, let's see if we can send a request to the Elasticsearch container. We use port 9200 to send a cURL request to the container.

$ curl 0.0.0.0:9200

{

"name":"ijJDAOm",

"cluster_name":"docker-cluster",

"cluster_uuid":"a_nSV3XmTCqpzYYzb-LhNw",

"version":{

"number":"6.3.2",

"build_flavor":"default",

"build_type":"tar",

"build_hash":"053779d",

"build_date":"2018-07-20T05:20:23.451332Z",

"build_snapshot":false,

"lucene_version":"7.3.1",

"minimum_wire_compatibility_version":"5.6.0",

"minimum_index_compatibility_version":"5.0.0"

},

"tagline":"You Know, for Search"

}

Sweet! It's looking good! While we are at it, let's get our Flask container running too. But before we get to that, we need a Dockerfile. In the last section, we used the python:3.8 image as our base image. This time, however, apart from installing Python dependencies via pip, we want our application to also generate our minified Javascript file for production. For this, we'll require Nodejs. Since we need a custom build step, we'll start from the ubuntu base image to build our Dockerfile from scratch.

Note: if you find that an existing image doesn't cater to your needs, feel free to start from another base image and tweak it yourself. For most of the images on Docker Hub, you should be able to find the corresponding Dockerfile on Github. Reading through existing Dockerfiles is one of the best ways to learn how to roll your own.

Our Dockerfile for the flask app looks like below -

# start from base
FROM ubuntu:18.04

MAINTAINER Prakhar Srivastav <[email protected]>

# install system-wide deps for Python and node
RUN apt-get -yqq update
RUN apt-get -yqq install python3-pip python3-dev curl gnupg
RUN curl -sL https://deb.nodesource.com/setup_10.x | bash
RUN apt-get install -yq nodejs

# copy our application code
ADD flask-app /opt/flask-app
WORKDIR /opt/flask-app

# fetch app specific deps
RUN npm install
RUN npm run build
RUN pip3 install -r requirements.txt

# expose port
EXPOSE 5000

# start app
CMD [ "python3", "./app.py" ]

Quite a few new things here so let's quickly go over this file. We start off with the Ubuntu LTS base image and use the package manager apt-get to install the dependencies, namely Python and Node. The -yqq flag is used to suppress output and assumes "Yes" to all prompts.

We then use the ADD command to copy our application into a new directory in the container - /opt/flask-app. This is where our code will reside. We also set this as our working directory, so that the following commands will be run in the context of this location. Now that our system-wide dependencies are installed, we get around to installing app-specific ones. First off we tackle Node by installing the packages from npm and running the build command as defined in our package.json file. We finish the file off by installing the Python packages, exposing the port and defining the CMD to run as we did in the last section.

Finally, we can go ahead, build the image and run the container (replace yourusername with your username below).

$ docker build -t yourusername/foodtrucks-web .

In the first run, this will take some time as the Docker client will download the ubuntu image, run all the commands and prepare your image. Re-running docker build after any subsequent changes you make to the application code will be much faster, as Docker reuses cached layers for the steps that did not change. Now let's try running our app.

$ docker run -P --rm yourusername/foodtrucks-web

Unable to connect to ES. Retying in 5 secs...

Unable to connect to ES. Retying in 5 secs...

Unable to connect to ES. Retying in 5 secs...

Out of retries. Bailing out...

Oops! Our flask app was unable to run since it was unable to connect to Elasticsearch. How do we tell one container about the other container and get them to talk to each other? The answer lies in the next section.
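The retry messages above hint at how the app waits for Elasticsearch: it tries a fixed number of times, pausing between attempts, and then gives up. A minimal Python sketch of that pattern follows. It is not the code from app.py; the function name wait_for_service, its parameters, and the ping callable are assumptions made for illustration.

```python
import time
from typing import Callable


def wait_for_service(ping: Callable[[], bool], retries: int = 3,
                     delay: float = 5.0,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call ping() up to `retries` times, pausing `delay` seconds after each failure."""
    for _ in range(retries):
        if ping():
            return True
        print(f"Unable to connect. Retrying in {delay:g} secs...")
        sleep(delay)
    print("Out of retries. Bailing out...")
    return False


if __name__ == "__main__":
    # Hypothetical stand-in for a real connectivity check: fails twice, then succeeds.
    attempts = iter([False, False, True])
    ok = wait_for_service(lambda: next(attempts), retries=3, delay=0)
    print("connected" if ok else "gave up")
```

In a real app, ping would wrap an actual connectivity check against the ES host; a stand-in is used here so the sketch runs without Docker.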

Docker Network

Before we talk about the features Docker provides especially to deal with such scenarios, let's see if we can figure out a way to get around the problem. Hopefully, this should give you an appreciation for the specific feature that we are going to study.

Okay, so let's run docker container ls (which is the same as docker ps) and see what we have.

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

277451c15ec1   docker.elastic.co/elasticsearch/elasticsearch:6.3.2   "/usr/local/bin/dock…"   17 minutes ago   Up 17 minutes   0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp   es

So we have one ES container running on 0.0.0.0:9200, which we can directly access. If we can tell our Flask app to connect to this URL, it should be able to connect and talk to ES, right? Let's dig into our Python code and see how the connection details are defined.

es = Elasticsearch(host='es')

To make this work, we need to tell the Flask container that the ES container is running on the 0.0.0.0 host (the port by default is 9200) and that should make it work, right? Unfortunately, that is not correct since the IP 0.0.0.0 is the IP to access the ES container from the host machine i.e. from my Mac. Another container will not be able to access this on the same IP address. Okay, if not that IP, then which IP address should the ES container be accessible by? I'm glad you asked this question.

Now is a good time to start our exploration of networking in Docker. When docker is installed, it creates three networks automatically.

$ docker network ls

NETWORK ID          NAME                DRIVER              SCOPE
c2c695315b3a        bridge              bridge              local
a875bec5d6fd        host                host                local
ead0e804a67b        none                null                local

The bridge network is the network in which containers are run by default. So that means that when I ran the ES container, it was running in this bridge network. To validate this, let's inspect the network.

$ docker network inspect bridge

[

{

"Name":"bridge",

"Id":"c2c695315b3aaf8fc30530bb3c6b8f6692cedd5cc7579663f0550dfdd21c9a26",

"Created":"2018-07-28T20:32:39.405687265Z",

"Scope":"local",

"Driver":"bridge",

"EnableIPv6":false,

"IPAM":{

"Driver":"default",

"Options":null,

"Config":[

{

"Subnet":"172.17.0.0/16",

"Gateway":"172.17.0.1"

}

]

},

"Internal":false,

"Attachable":false,

"Ingress":false,

"ConfigFrom":{

"Network":""

},

"ConfigOnly":false,

"Containers":{

"277451c15ec183dd939e80298ea4bcf55050328a39b04124b387d668e3ed3943":{

"Name":"es",

"EndpointID":"5c417a2fc6b13d8ec97b76bbd54aaf3ee2d48f328c3f7279ee335174fbb4d6bb",

"MacAddress":"02:42:ac:11:00:02",

"IPv4Address":"172.17.0.2/16",

"IPv6Address":""

}

},

"Options":{

"com.docker.network.bridge.default_bridge":"true",

"com.docker.network.bridge.enable_icc":"true",

"com.docker.network.bridge.enable_ip_masquerade":"true",

"com.docker.network.bridge.host_binding_ipv4":"0.0.0.0",

"com.docker.network.bridge.name":"docker0",

"com.docker.network.driver.mtu":"1500"

},

"Labels":{}

}

]

You can see that our container 277451c15ec1 is listed under the Containers section in the output. What we also see is the IP address this container has been allotted - 172.17.0.2. Is this the IP address that we're looking for? Let's find out by running our flask container and trying to access this IP.

$ docker run -it --rm yourusername/foodtrucks-web bash

root@35180ccc206a:/opt/flask-app# curl 172.17.0.2:9200

{

"name":"Jane Foster",

"cluster_name":"elasticsearch",

"version":{

"number":"2.1.1",

"build_hash":"40e2c53a6b6c2972b3d13846e450e66f4375bd71",

"build_timestamp":"2015-12-15T13:05:55Z",

"build_snapshot":false,

"lucene_version":"5.3.1"

},

"tagline":"You Know, for Search"

}

root@35180ccc206a:/opt/flask-app# exit

This should be fairly straightforward to you by now. We start the container in the interactive mode with the bash process. The --rm is a convenient flag for running one-off commands since the container gets cleaned up when its work is done. We try a curl (which our Dockerfile already installed) and see that we can indeed talk to ES on 172.17.0.2:9200. Awesome!
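Picking the IP address out of the docker network inspect output by eye works, but the output is plain JSON, so it can also be read programmatically. The sketch below is only an illustration: the embedded sample is a trimmed copy of the output shown earlier, and the helper name container_ips is an assumption, not part of Docker.

```python
import json

# Trimmed copy of the `docker network inspect bridge` output shown earlier.
INSPECT_OUTPUT = """
[
  {
    "Name": "bridge",
    "Containers": {
      "277451c15ec183dd939e80298ea4bcf55050328a39b04124b387d668e3ed3943": {
        "Name": "es",
        "IPv4Address": "172.17.0.2/16"
      }
    }
  }
]
"""


def container_ips(inspect_json: str) -> dict:
    """Map container name -> IPv4 address (without the /prefix suffix)."""
    ips = {}
    for network in json.loads(inspect_json):
        # "Containers" may be missing or empty when nothing is attached.
        for info in (network.get("Containers") or {}).values():
            ips[info["Name"]] = info["IPv4Address"].split("/")[0]
    return ips


if __name__ == "__main__":
    print(container_ips(INSPECT_OUTPUT))  # {'es': '172.17.0.2'}
```

In practice the JSON text would come from running the inspect command (for example via subprocess) rather than from a hard-coded string.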

Although we have figured out a way to make the containers talk to each other, there are still two problems with this approach -

- How do we tell the Flask container that the es hostname stands for 172.17.0.2 or some other IP, since the IP can change?
- Since the bridge network is shared by every container by default, this method is not secure. How do we isolate our network?

The good news is that Docker has a great answer to our questions. It allows us to define our own networks while keeping them isolated using the docker network command.

Let's first go ahead and create our own network.

$ docker network create foodtrucks-net

0815b2a3bb7a6608e850d05553cc0bda98187c4528d94621438f31d97a6fea3c

$ docker network ls

NETWORK ID          NAME                DRIVER              SCOPE
c2c695315b3a        bridge              bridge              local
0815b2a3bb7a        foodtrucks-net      bridge              local
a875bec5d6fd        host                host                local
ead0e804a67b        none                null                local

The network create command creates a new bridge network, which is what we need at the moment. In terms of Docker, a bridge network uses a software bridge which allows containers connected to the same bridge network to communicate, while providing isolation from containers which are not connected to that bridge network. The Docker bridge driver automatically installs rules in the host machine so that containers on different bridge networks cannot communicate directly with each other. There are other kinds of networks that you can create, and you are encouraged to read about them in the official docs.
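To make the separation between the two bridge networks a little more concrete, here is a small sketch using Python's standard ipaddress module. The 172.17.0.0/16 subnet comes from the inspect output of the default bridge; 172.18.0.0/16 is an assumption based on the address range Docker assigned to foodtrucks-net on the author's machine (yours may differ). Separate address ranges are only part of the story: the actual isolation comes from the rules the bridge driver installs on the host.

```python
import ipaddress
from typing import Optional

# Subnets as reported by `docker network inspect`; the second one is an
# assumption (what Docker assigned on the author's machine) and may differ.
NETWORKS = {
    "bridge": ipaddress.ip_network("172.17.0.0/16"),
    "foodtrucks-net": ipaddress.ip_network("172.18.0.0/16"),
}


def network_of(ip: str) -> Optional[str]:
    """Return the name of the network whose subnet contains `ip`, if any."""
    address = ipaddress.ip_address(ip)
    for name, subnet in NETWORKS.items():
        if address in subnet:
            return name
    return None


if __name__ == "__main__":
    print(network_of("172.17.0.2"))   # bridge
    print(network_of("172.18.0.2"))   # foodtrucks-net
    print(NETWORKS["bridge"].overlaps(NETWORKS["foodtrucks-net"]))  # False
```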

Now that we have a network, we can launch our containers inside this network using the --net flag. Let's do that - but first, in order to launch a new container with the same name, we will stop and remove our ES container that is running in the bridge (default) network.

$ docker container stop es

es

$ docker container rm es

es

$ docker run -d --name es --net foodtrucks-net -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:6.3.2

13d6415f73c8d88bddb1f236f584b63dbaf2c3051f09863a3f1ba219edba3673

$ docker network inspect foodtrucks-net

[

{

"Name":"foodtrucks-net",

"Id":"0815b2a3bb7a6608e850d05553cc0bda98187c4528d94621438f31d97a6fea3c",

"Created":"2018-07-30T00:01:29.1500984Z",

"Scope":"local",

"Driver":"bridge",

"EnableIPv6":false,

"IPAM":{

"Driver":"default",

"Options":{},

"Config":[

{

"Subnet":"172.18.0.0/16",

"Gateway":"172.18.0.1"

}

]

},

"Internal":false,

"Attachable":false,

"Ingress":false,

"ConfigFrom":{

"Network":""

},

"ConfigOnly":false,

"Containers":{

"13d6415f73c8d88bddb1f236f584b63dbaf2c3051f09863a3f1ba219edba3673":{

"Name":"es",

"EndpointID":"29ba2d33f9713e57eb6b38db41d656e4ee2c53e4a2f7cf636bdca0ec59cd3aa7",

"MacAddress":"02:42:ac:12:00:02",

"IPv4Address":"172.18.0.2/16",

"IPv6Address":""

}

},

"Options":{},

"Labels":{}

}

]

As you can see, our es container is now running inside the foodtrucks-net bridge network. Now let's inspect what happens when we launch our Flask container in the foodtrucks-net network.

$ docker run -it --rm --net foodtrucks-net yourusername/foodtrucks-web bash

root@9d2722cf282c:/opt/flask-app# curl es:9200

{

"name":"wWALl9M",

"cluster_name":"docker-cluster",

"cluster_uuid":"BA36XuOiRPaghPNBLBHleQ",

"version":{

"number":"6.3.2",

"build_flavor":"default",

"build_type":"tar",

"build_hash":"053779d",

"build_date":"2018-07-20T05:20:23.451332Z",

"build_snapshot":false,

"lucene_version":"7.3.1",

"minimum_wire_compatibility_version":"5.6.0",

"minimum_index_compatibility_version":"5.0.0"

},

"tagline":"You Know, for Search"

}

root@53af252b771a:/opt/flask-app# ls

app.py node_modules package.json requirements.txt static templates webpack.config.js

root@53af252b771a:/opt/flask-app# python3 app.py

Index not found...

Loading data in elasticsearch...

Total trucks loaded: 733

* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

root@53af252b771a:/opt/flask-app# exit

Wohoo! That works! On user-defined networks like foodtrucks-net, containers can not only communicate by IP address, but can also resolve a container name to an IP address. This capability is called automatic service discovery. Great! Let's launch our Flask container for real now -

$ docker run -d --net foodtrucks-net -p 5000:5000 --name foodtrucks-web yourusername/foodtrucks-web

852fc74de2954bb72471b858dce64d764181dca0cf7693fed201d76da33df794

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

852fc74de295   yourusername/foodtrucks-web   "python3 ./app.py"   About a minute ago   Up About a minute   0.0.0.0:5000->5000/tcp   foodtrucks-web

13d6415f73c8   docker.elastic.co/elasticsearch/elasticsearch:6.3.2   "/usr/local/bin/dock…"   17 minutes ago   Up 17 minutes   0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp   es

$ curl -I 0.0.0.0:5000

HTTP/1.0 200 OK

Content-Type: text/html; charset=utf-8

Content-Length: 3697

Server: Werkzeug/0.11.2 Python/2.7.6

Date: Sun, 10 Jan 2016 23:58:53 GMT

Head over to http://0.0.0.0:5000 and see your glorious app live! Although that might have seemed like a lot of work, we actually just typed 4 commands to go from zero to running. I've collated the commands in a bash script.

#!/bin/bash

# build the flask container
docker build -t yourusername/foodtrucks-web .

# create the network
docker network create foodtrucks-net

# start the ES container
docker run -d --name es --net foodtrucks-net -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:6.3.2

# start the flask app container
docker run -d --net foodtrucks-net -p 5000:5000 --name foodtrucks-web yourusername/foodtrucks-web

Now imagine you are distributing your app to a friend, or running on a server that has docker installed. You can get a whole app running with just one command!

$ git clone https://github.com/prakhar1989/FoodTrucks

$ cd FoodTrucks

$ ./setup-docker.sh

And that's it! If you ask me, I find this to be an extremely awesome and powerful way of sharing and running your applications!
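The same four steps could also be driven from Python, which may be handy if the setup needs to grow beyond a few lines of shell. This is only a sketch, not something shipped in the repository: the function names setup_commands and run_all are hypothetical, and by default the commands are printed rather than executed.

```python
import subprocess

ES_IMAGE = "docker.elastic.co/elasticsearch/elasticsearch:6.3.2"


def setup_commands(username: str, network: str = "foodtrucks-net") -> list:
    """Return the four docker invocations from the script, as argument lists."""
    web_image = f"{username}/foodtrucks-web"
    return [
        ["docker", "build", "-t", web_image, "."],
        ["docker", "network", "create", network],
        ["docker", "run", "-d", "--name", "es", "--net", network,
         "-p", "9200:9200", "-p", "9300:9300",
         "-e", "discovery.type=single-node", ES_IMAGE],
        ["docker", "run", "-d", "--net", network, "-p", "5000:5000",
         "--name", "foodtrucks-web", web_image],
    ]


def run_all(commands: list, dry_run: bool = True) -> None:
    """Print each command; execute it too unless dry_run is set."""
    for cmd in commands:
        print("$", " ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    run_all(setup_commands("yourusername"))  # dry run: only prints the commands
```

Passing dry_run=False would actually invoke Docker, so it should be run from the repository root, where the Dockerfile lives.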

Docker Compose

Till now we've spent all our time exploring the Docker client. In the Docker ecosystem, however, there are a bunch of other open-source tools which play very nicely with Docker. A few of them are -

- Docker Machine - Create Docker hosts on your computer, on cloud providers, and inside your own data center
- Docker Compose - A tool for defining and running multi-container Docker applications.
- Docker Swarm - A native clustering solution for Docker
- Kubernetes - Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

In this section, we are going to look at one of these tools, Docker Compose, and see how it can make dealing with multi-container apps easier.

The background story of Docker Compose is quite interesting. Roughly around January 2014, a company called OrchardUp launched a tool called Fig. The idea behind Fig was to make isolated development environments work with Docker. The project was very well received on Hacker News - I oddly remember reading about it but didn't quite get the hang of it.

The first comment on the forum actually does a good job of explaining what Fig is all about.

So really at this point, that's what Docker is about: running processes. Now Docker offers a quite rich API to run the processes: shared volumes (directories) between containers (i.e. running images), forward port from the host to the container, display logs, and so on. But that's it: Docker as of now, remains at the process level.

While it provides options to orchestrate multiple containers to create a single "app", it doesn't address the management of such group of containers as a single entity. And that's where tools such as Fig come in: talking about a group of containers as a single entity. Think "run an app" (i.e. "run an orchestrated cluster of containers" ) instead of "run a container".

It turns out that a lot of people using docker agree with this sentiment. Slowly and steadily as Fig became popular, Docker Inc. took notice, acquired the company and re-branded Fig as Docker Compose.

So what is Compose used for? Compose is a tool that is used for defining and running multi-container Docker apps in an easy way. It provides a configuration file called docker-compose.yml that can be used to bring up an application and the suite of services it depends on with just one command. Compose works in all environments: production, staging, development, testing, as well as CI workflows, although Compose is ideal for development and testing environments.

Let's see if we can create a docker-compose.yml file for our SF-Foodtrucks app and evaluate whether Docker Compose lives up to its promise.

The first step, however, is to install Docker Compose. If you're running Windows or Mac, Docker Compose is already installed as it comes in the Docker Toolbox. Linux users can easily get their hands on Docker Compose by following the instructions on the docs. Since Compose is written in Python, you can also simply do pip install docker-compose. Test your installation with -

$ docker-compose --version

docker-compose version 1.21.2, build a133471

Now that we have it installed, we can jump on the next step i.e. the Docker Compose file docker-compose.yml. The syntax for YAML is quite simple and the repo already contains the docker-compose file that we'll be using.

version: "3"
services:
  es:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.3.2
    container_name: es
    environment:
      - discovery.type=single-node
    ports:
      - 9200:9200
    volumes:
      - esdata1:/usr/share/elasticsearch/data
  web:
    image: yourusername/foodtrucks-web
    command: python3 app.py
    depends_on:
      - es
    ports:
      - 5000:5000
    volumes:
      - ./flask-app:/opt/flask-app
volumes:
  esdata1:
    driver: local

Let me break down what the file above means. At the parent level, we define the names of our services - es and web. For each service that we want Docker to run, the image parameter names the image to use (a service needs either image or build), and we can add additional parameters. For es, we just refer to the elasticsearch image available on the Elastic registry. For our Flask app, we refer to the image that we built at the beginning of this section.

Other parameters such as command and ports provide more information about the container. The volumes parameter specifies a mount point in our web container where the code will reside. This is purely optional and is useful if you need access to logs, etc. We'll later see how this can be useful during development. Refer to the online reference to learn more about the parameters this file supports. We also add volumes for the es container so that the data we load persists between restarts. We also specify depends_on, which tells docker to start the es container before web. You can read more about it on docker compose docs.
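One way to picture depends_on is as a small dependency graph that is sorted before anything is started. The sketch below mimics that idea with a hand-written dictionary that mirrors the two services above; it is not how Compose is implemented, and start_order is a hypothetical helper. Note that depends_on only controls start order: it does not wait for Elasticsearch to be ready to accept connections, which is why the retry behaviour we saw earlier in the app still matters.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hand-written dict mirroring the services in the Compose file above.
SERVICES = {
    "es": {"image": "docker.elastic.co/elasticsearch/elasticsearch:6.3.2"},
    "web": {"image": "yourusername/foodtrucks-web", "depends_on": ["es"]},
}


def start_order(services: dict) -> list:
    """Order services so that every dependency comes before its dependents."""
    for name, spec in services.items():
        for dep in spec.get("depends_on", []):
            if dep not in services:
                raise ValueError(f"{name} depends on unknown service {dep!r}")
    # TopologicalSorter expects a mapping of node -> nodes it depends on.
    graph = {name: spec.get("depends_on", []) for name, spec in services.items()}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(start_order(SERVICES))  # ['es', 'web']
```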

Note: You must be inside the directory with the docker-compose.yml file in order to execute most Compose commands.

Great! Now the file is ready, let's see docker-compose in action. But before we start, we need to make sure the ports and names are free. So if you have the Flask and ES containers running, let's turn them off.

$ docker stop es foodtrucks-web

es

foodtrucks-web

$ docker rm es foodtrucks-web

es

foodtrucks-web

Now we can run docker-compose. Navigate to the food trucks directory and run docker-compose up.

$ docker-compose up

Creating network "foodtrucks_default" with the default driver

Creating foodtrucks_es_1

Creating foodtrucks_web_1

Attaching to foodtrucks_es_1, foodtrucks_web_1

es_1 | [2016-01-11 03:43:50,300][INFO ][node ] [Comet] version[2.1.1], pid[1], build[40e2c53/2015-12-15T13:05:55Z]

es_1 | [2016-01-11 03:43:50,307][INFO ][node ] [Comet] initializing...

es_1 | [2016-01-11 03:43:50,366][INFO ][plugins ] [Comet] loaded [], sites []

es_1 | [2016-01-11 03:43:50,421][INFO ][env ] [Comet] using [1] data paths, mounts [[/usr/share/elasticsearch/data (/dev/sda1)]], net usable_space [16gb], net total_space [18.1gb], spins? [possibly], types [ext4]

es_1 | [2016-01-11 03:43:52,626][INFO ][node ] [Comet] initialized

es_1 | [2016-01-11 03:43:52,632][INFO ][node ] [Comet] starting...

es_1 | [2016-01-11 03:43:52,703][WARN ][common.network ] [Comet] publish address: {0.0.0.0} is a wildcard address, falling back to first non-loopback: {172.17.0.2}

es_1 | [2016-01-11 03:43:52,704][INFO ][transport ] [Comet] publish_address {172.17.0.2:9300}, bound_addresses {[::]:9300}

es_1 | [2016-01-11 03:43:52,721][INFO ][discovery ] [Comet] elasticsearch/cEk4s7pdQ-evRc9MqS2wqw

es_1 | [2016-01-11 03:43:55,785][INFO ][cluster.service ] [Comet] new_master {Comet}{cEk4s7pdQ-evRc9MqS2wqw}{172.17.0.2}{172.17.0.2:9300}, reason: zen-disco-join(elected_as_master, [0] joins received)

es_1 | [2016-01-11 03:43:55,818][WARN ][common.network ] [Comet] publish address: {0.0.0.0} is a wildcard address, falling back to first non-loopback: {172.17.0.2}

es_1 | [2016-01-11 03:43:55,819][INFO ][http ] [Comet] publish_address {172.17.0.2:9200}, bound_addresses {[::]:9200}

es_1 | [2016-01-11 03:43:55,819][INFO ][node ] [Comet] started

es_1 | [2016-01-11 03:43:55,826][INFO ][gateway ] [Comet] recovered [0] indices into cluster_state

es_1 | [2016-01-11 03:44:01,825][INFO ][cluster.metadata ] [Comet] [sfdata] creating index, cause [auto(index api)], templates [], shards [5]/[1], mappings [truck]

es_1 | [2016-01-11 03:44:02,373][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:02,510][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:02,593][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:02,708][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:03,047][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

web_1 | * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

Head over to http://0.0.0.0:5000 to see your app live. That was amazing, wasn't it? Just a few lines of configuration and we have two Docker containers running successfully in unison. Let's stop the services and re-run in detached mode.

web_1 | * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

Killing foodtrucks_web_1 ... done

Killing foodtrucks_es_1 ... done

$ docker-compose up -d

Creating es ... done

Creating foodtrucks_web_1 ... done

$ docker-compose ps

Name               Command                         State   Ports
--------------------------------------------------------------------------------------------
es                 /usr/local/bin/docker-entr...   Up      0.0.0.0:9200->9200/tcp, 9300/tcp
foodtrucks_web_1   python3 app.py                  Up      0.0.0.0:5000->5000/tcp

Unsurprisingly, we can see both the containers running successfully. Where do the names come from? Those were created automatically by Compose. But does Compose also create the network automatically? Good question! Let's find out.

First off, let us stop the services from running. We can always bring them back up in just one command. Data volumes will persist, so it's possible to start the cluster again with the same data using docker-compose up. To destroy the cluster and the data volumes, just type docker-compose down -v.

$ docker-compose down -v

Stopping foodtrucks_web_1...done

Stopping es...done

Removing foodtrucks_web_1...done

Removing es...done

Removing network foodtrucks_default

Removing volume foodtrucks_esdata1

While we're at it, we'll also remove the foodtrucks-net network that we created last time.

$ docker network rm foodtrucks-net

$ docker network ls

NETWORK ID          NAME                DRIVER              SCOPE
c2c695315b3a        bridge              bridge              local
a875bec5d6fd        host                host                local
ead0e804a67b        none                null                local

Great! Now that we have a clean slate, let's re-run our services and see if Compose does its magic.

$ docker-compose up -d

Recreating foodtrucks_es_1

Recreating foodtrucks_web_1

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f50bb33a3242   yourusername/foodtrucks-web   "python3 app.py"   14 seconds ago   Up 13 seconds   0.0.0.0:5000->5000/tcp   foodtrucks_web_1

e299ceeb4caa   elasticsearch   "/docker-entrypoint.s"   14 seconds ago   Up 14 seconds   9200/tcp, 9300/tcp   foodtrucks_es_1

So far, so good. Time to see if any networks were created.

$ docker network ls

NETWORK ID          NAME                  DRIVER              SCOPE
c2c695315b3a        bridge                bridge              local
f3b80f381ed3        foodtrucks_default    bridge              local
a875bec5d6fd        host                  host                local
ead0e804a67b        none                  null                local

You can see that compose went ahead and created a new network called foodtrucks_default and attached both the new services to that network so that each is discoverable by the other. Each container for a service joins the default network and is both reachable by other containers on that network, and discoverable by them at a hostname identical to the container name.
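Hostname-based discovery is easy to check from inside a container with Python's standard socket module. The sketch below is an illustration under assumptions: the helper name resolve is made up, and a name such as es will only resolve when the code runs in a container attached to the same network as the es container.

```python
import socket
import sys
from typing import Optional


def resolve(hostname: str) -> Optional[str]:
    """Return the IPv4 address a hostname resolves to, or None if it does not resolve."""
    try:
        return socket.gethostbyname(hostname)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None


if __name__ == "__main__":
    # Pass hostnames on the command line, e.g. `python3 resolve.py es`
    # from inside the web container; defaults to localhost otherwise.
    for name in sys.argv[1:] or ["localhost"]:
        print(name, "->", resolve(name))
```

Run on the host machine, es would most likely print None, which mirrors the point made earlier: container names are only meaningful inside the Docker network they belong to.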

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8c6bb7e818ec   docker.elastic.co/elasticsearch/elasticsearch:6.3.2   "/usr/local/bin/dock…"   About a minute ago   Up About a minute   0.0.0.0:9200->9200/tcp, 9300/tcp   es

7640cec7feb7   yourusername/foodtrucks-web   "python3 app.py"   About a minute ago   Up About a minute   0.0.0.0:5000->5000/tcp   foodtrucks_web_1

$ docker network inspect foodtrucks_default

[

{

"Name":"foodtrucks_default",

"Id":"f3b80f381ed3e03b3d5e605e42c4a576e32d38ba24399e963d7dad848b3b4fe7",

"Created":"2018-07-30T03:36:06.0384826Z",

"Scope":"local",

"Driver":"bridge",

"EnableIPv6":false,

"IPAM":{

"Driver":"default",

"Options":null,

"Config":[

{

"Subnet":"172.19.0.0/16",

"Gateway":"172.19.0.1"

}

]

},

"Internal":false,

"Attachable":true,

"Ingress":false,

"ConfigFrom":{

"Network":""

},

"ConfigOnly":false,

"Containers":{

"7640cec7feb7f5615eaac376271a93fb8bab2ce54c7257256bf16716e05c65a5":{

"Name":"foodtrucks_web_1",

"EndpointID":"b1aa3e735402abafea3edfbba605eb4617f81d94f1b5f8fcc566a874660a0266",

"MacAddress":"02:42:ac:13:00:02",

"IPv4Address":"172.19.0.2/16",

"IPv6Address":""

},

"8c6bb7e818ec1f88c37f375c18f00beb030b31f4b10aee5a0952aad753314b57":{

"Name":"es",

"EndpointID":"649b3567d38e5e6f03fa6c004a4302508c14a5f2ac086ee6dcf13ddef936de7b",

"MacAddress":"02:42:ac:13:00:03",

"IPv4Address":"172.19.0.3/16",

"IPv6Address":""

}

},

"Options":{},

"Labels":{

"com.docker.compose.network":"default",

"com.docker.compose.project":"foodtrucks",

"com.docker.compose.version":"1.21.2"

}

}

]

Development Workflow

Before we jump to the next section, there's one last thing I wanted to cover about docker-compose. As stated earlier, docker-compose is really great for development and testing. So let's see how we can configure compose to make our lives easier during development.

Throughout this tutorial, we've worked with readymade docker images. While we've built images from scratch, we haven't touched any application code yet and mostly restricted ourselves to editing Dockerfiles and YAML configurations. One thing that you must be wondering is how does the workflow look during development? Is one supposed to keep creating Docker images for every change, then publish it and then run it to see if the changes work as expected? I'm sure that sounds super tedious. There has to be a better way. In this section, that's what we're going to explore.

Let's see how we can make a change in the Foodtrucks app we just ran. Make sure you have the app running,

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5450ebedd03c   yourusername/foodtrucks-web   "python3 app.py"   9 seconds ago   Up 6 seconds   0.0.0.0:5000->5000/tcp   foodtrucks_web_1

05d408b25dfe   docker.elastic.co/elasticsearch/elasticsearch:6.3.2   "/usr/local/bin/dock…"   10 hours ago   Up 10 hours   0.0.0.0:9200->9200/tcp, 9300/tcp   es

Now let's see if we can change this app to display a Hello world! message when a request is made to the /hello route. Currently, the app responds with a 404.

$ curl -I 0.0.0.0:5000/hello

HTTP/1.0 404 NOT FOUND

Content-Type: text/html

Content-Length: 233

Server: Werkzeug/0.11.2 Python/2.7.15rc1

Date: Mon, 30 Jul 2018 15:34:38 GMT

Why does this happen? Since ours is a Flask app, we can look at app.py for answers. In Flask, routes are defined with the @app.route syntax. In the file, you'll see that we only have three routes defined - /, /debug and /search. The / route renders the main app, the /debug route is used to return some debug information and finally /search is used by the app to query elasticsearch.
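If the decorator syntax is new to you, the idea behind it can be sketched in a few lines of plain Python: a decorator records which function handles which path, and any path that was never registered results in a 404. This toy router is not Flask's implementation; ROUTES, route and dispatch are names invented for the illustration.

```python
from typing import Callable, Dict, Tuple

# Maps a URL path to the function that handles it.
ROUTES: Dict[str, Callable[[], str]] = {}


def route(path: str):
    """Decorator that registers a function as the handler for `path`."""
    def register(handler: Callable[[], str]) -> Callable[[], str]:
        ROUTES[path] = handler
        return handler
    return register


@route("/")
def index() -> str:
    return "main page"


@route("/debug")
def debug() -> str:
    return "debug info"


def dispatch(path: str) -> Tuple[int, str]:
    """Return (status, body) for a path; unknown paths yield a 404."""
    handler = ROUTES.get(path)
    if handler is None:
        return 404, "NOT FOUND"
    return 200, handler()


if __name__ == "__main__":
    print(dispatch("/debug"))  # (200, 'debug info')
    print(dispatch("/hello"))  # (404, 'NOT FOUND')
```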

$ curl 0.0.0.0:5000/debug

{

"msg":"yellow open sfdata Ibkx7WYjSt-g8NZXOEtTMg 5 1 618 0 1.3mb 1.3mb\n",

"status":"success"

}

Given that context, how would we add a new route for hello? You guessed it! Let's open flask-app/app.py in our favorite editor and make the following change:

@app.route('/')

def index():

    return render_template("index.html")

# add a new hello route

@app.route('/hello')

def hello():

    return "hello world!"

Now let's try making a request again:

$ curl -I 0.0.0.0:5000/hello

HTTP/1.0 404 NOT FOUND

Content-Type: text/html

Content-Length: 233

Server: Werkzeug/0.11.2 Python/2.7.15rc1

Date: Mon, 30 Jul 2018 15:34:38 GMT

Oh no! That didn't work! What did we do wrong? While we did make the change in app.py, the file resides on our machine (the host machine), but since Docker is running our containers based off the yourusername/foodtrucks-web image, it doesn't know about this change. To validate this, let's try the following -

$ docker-compose run web bash

Starting es... done

root@581e351c82b0:/opt/flask-app# ls

app.py package-lock.json requirements.txt templates

node_modules package.json static webpack.config.js

root@581e351c82b0:/opt/flask-app# grep hello app.py

root@581e351c82b0:/opt/flask-app# exit

What we're trying to do here is to validate that our changes are not in the app.py that's running in the container. We do this by running the command docker-compose run, which is similar to its cousin docker run but takes additional arguments for the service (which is web in our case). As soon as we run bash, the shell opens in /opt/flask-app as specified in our Dockerfile. From the grep command we can see that our changes are not in the file.
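As a loose analogy only (Docker images are layered filesystems, not plain directory copies), the situation resembles copying a folder once and then editing the original. The sketch below uses temporary directories as stand-ins. The names host_dir and image_dir are made up for illustration.

```python
import shutil
import tempfile
from pathlib import Path


def contains(path, needle):
    """Return True if the text file at `path` contains `needle`."""
    return needle in Path(path).read_text()


with tempfile.TemporaryDirectory() as workdir:
    host_dir = Path(workdir) / "flask-app"    # stands in for the source folder on the host
    image_dir = Path(workdir) / "image-copy"  # stands in for the files baked into the image
    host_dir.mkdir()
    (host_dir / "app.py").write_text("def index(): ...\n")

    # "Building the image": the files are copied once, at build time.
    shutil.copytree(host_dir, image_dir)

    # Later we edit the host file, much like adding the hello route.
    with open(host_dir / "app.py", "a") as f:
        f.write("def hello(): ...\n")

    host_has_change = contains(host_dir / "app.py", "hello")
    image_has_change = contains(image_dir / "app.py", "hello")

print(host_has_change, image_has_change)
```

The edit shows up in the original folder but not in the copy, which is the same thing the grep inside the container reported.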

Let's see how we can fix it. First off, we need to tell Docker Compose to not use the image and instead use the files locally. We'll also set debug mode to true so that Flask knows to reload the server when app.py changes. Replace the web portion of the docker-compose.yml file like so:

version: "3"

services:

  es:

    image: docker.elastic.co/elasticsearch/elasticsearch:6.3.2

    container_name: es

    environment:

      - discovery.type=single-node

    ports:

      - 9200:9200

    volumes:

      - esdata1:/usr/share/elasticsearch/data

  web:

    build: . # replaced image with build

    command: python3 app.py

    environment:

      - DEBUG=True # set an env var for flask

    depends_on:

      - es

    ports:

      - "5000:5000"

    volumes:

      - ./flask-app:/opt/flask-app

volumes:

  esdata1:

    driver: local

With that change (diff), let's stop and start the containers.

$ docker-compose down -v

Stopping foodtrucks_web_1... done

Stopping es... done

Removing foodtrucks_web_1... done

Removing es... done

Removing network foodtrucks_default

Removing volume foodtrucks_esdata1

$ docker-compose up -d

Creating network "foodtrucks_default" with the default driver

Creating volume "foodtrucks_esdata1" with local driver

Creating es... done

Creating foodtrucks_web_1... done

As a final step, let's make the change in app.py by adding a new route. Now we try to curl

$ curl 0.0.0.0:5000/hello

hello world

Wohoo! We get a valid response! Try playing around by making more changes in the app.
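The DEBUG=True entry in the compose file only has an effect if the application reads that variable. A minimal sketch of how an app might turn it into a boolean is shown here. The env_flag helper is a hypothetical name, and the tutorial's own app.py may handle this differently.

```python
import os


def env_flag(name, default=False):
    """Interpret an environment variable as a boolean (hypothetical helper)."""
    value = os.environ.get(name)
    if value is None:
        return default
    return value.strip().lower() in {"1", "true", "yes", "on"}


# Simulate what the compose file's `DEBUG=True` entry would provide.
os.environ["DEBUG"] = "True"
debug_enabled = env_flag("DEBUG")
print(debug_enabled)
```

In a Flask app, a value like this could be passed along as app.run(debug=debug_enabled). Debug mode is what makes Flask restart the server when a source file changes.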

That concludes our tour of Docker Compose. With Docker Compose, you can also pause your services, run a one-off command on a container and even scale the number of containers. I also recommend you check out a few other use-cases of Docker Compose. Hopefully, I was able to show you how easy it is to manage multi-container environments with Compose. In the final section, we are going to deploy our app to AWS!

AWS Elastic Container Service

In the last section we used docker-compose to run our app locally with a single command: docker-compose up. Now that we have a functioning app we want to share this with the world, get some users, make tons of money and buy a big house in Miami. Executing the last three is beyond the scope of the tutorial, so we'll spend our time instead on figuring out how we can deploy our multi-container apps on the cloud with AWS.

If you've read this far you are pretty much convinced that Docker is a pretty cool technology. And you are not alone. Seeing the meteoric rise of Docker, almost all Cloud vendors started working on adding support for deploying Docker apps on their platform. As of today, you can deploy containers on Google Cloud Platform, AWS, Azure and many others. We already got a primer on deploying single container apps with Elastic Beanstalk and in this section we are going to look at Elastic Container Service (or ECS) by AWS.

AWS ECS is a scalable and super flexible container management service that supports Docker containers. It allows you to operate a Docker cluster on top of EC2 instances via an easy-to-use API. Where Beanstalk came with reasonable defaults, ECS allows you to completely tune your environment as per your needs. This makes ECS, in my opinion, quite complex to get started with.

Luckily for us, ECS has a friendly CLI tool that understands Docker Compose files and automatically provisions the cluster on ECS! Since we already have a functioning docker-compose.yml it should not take a lot of effort in getting up and running on AWS. So let's get started!

The first step is to install the CLI. Instructions to install the CLI on both Mac and Linux are explained very clearly in the official docs. Go ahead, install the CLI and when you are done, verify the install by running

$ ecs-cli --version

ecs-cli version 1.18.1 (7e9df84)

Next, we'll be working on configuring the CLI so that we can talk to ECS. We'll be following the steps as detailed in the official guide on AWS ECS docs. In case of any confusion, please feel free to refer to that guide.

The first step will involve creating a profile that we'll use for the rest of the tutorial. To continue, you'll need your AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. To obtain these, follow the steps as detailed under the section titled Access Key and Secret Access Key on this page.

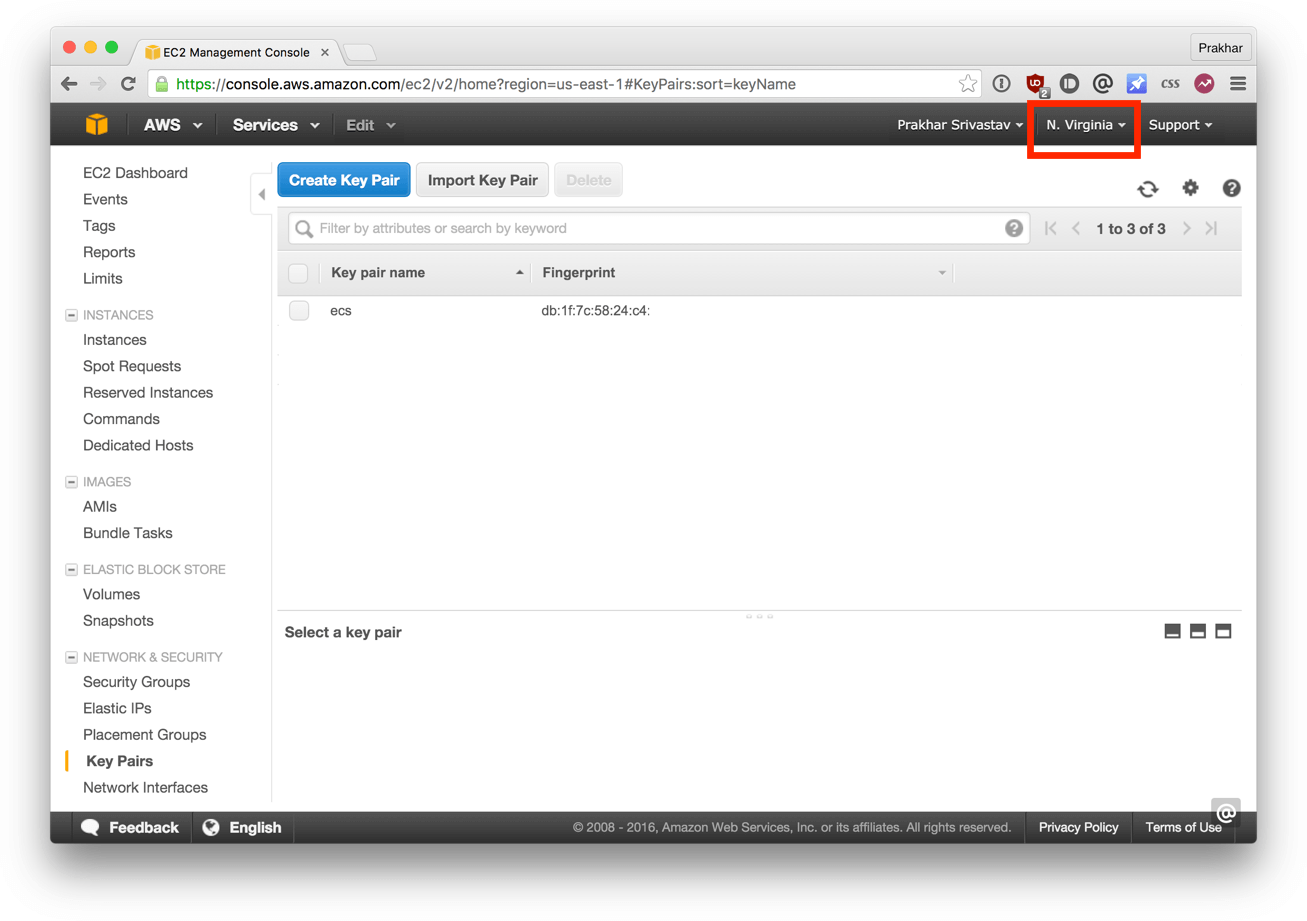

$ ecs-cli configure profile --profile-name ecs-foodtrucks --access-key $AWS_ACCESS_KEY_ID --secret-key $AWS_SECRET_ACCESS_KEY

Next, we need to get a keypair which we'll be using to log into the instances. Head over to your EC2 Console and create a new keypair. Download the keypair and store it in a safe location. Another thing to note before you move away from this screen is the region name. In my case, I have named my key - ecs and set my region as us-east-1. This is what I'll assume for the rest of this walkthrough.

The next step is to configure the CLI.

$ ecs-cli configure --region us-east-1 --cluster foodtrucks

INFO[0000] Saved ECS CLI configuration for cluster (foodtrucks)

We provide the configure command with the region name we want our cluster to reside in and a cluster name. Make sure you provide the same region name that you used when creating the keypair. If you've not configured the AWS CLI on your computer before, you can use the official guide, which explains everything in great detail on how to get everything going.

The next step enables the CLI to create a CloudFormation template.

$ ecs-cli up --keypair ecs --capability-iam --size 1 --instance-type t2.medium

INFO[0000] Using recommended Amazon Linux 2 AMI with ECS Agent 1.39.0 and Docker version 18.09.9-ce

INFO[0000] Created cluster cluster=foodtrucks

INFO[0001] Waiting for your cluster resources to be created

INFO[0001] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0062] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0122] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0182] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0242] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

VPC created: vpc-0bbed8536930053a6

Security Group created: sg-0cf767fb4d01a3f99

Subnet created: subnet-05de1db2cb1a50ab8

Subnet created: subnet-01e1e8bc95d49d0fd

Cluster creation succeeded.

Here we provide the name of the keypair we downloaded initially (ecs in my case), the number of instances that we want to use (--size) and the type of instances that we want the containers to run on. The --capability-iam flag tells the CLI that we acknowledge that this command may create IAM resources.

The last and final step is where we'll use our docker-compose.yml file. We'll need to make a few minor changes, so instead of modifying the original, let's make a copy of it. The contents of this file (after making the changes) look like this:

version: '2'

services:

  es:

    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2

    cpu_shares: 100

    mem_limit: 3621440000

    environment:

      - discovery.type=single-node

      - bootstrap.memory_lock=true

      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"

    logging:

      driver: awslogs

      options:

        awslogs-group: foodtrucks

        awslogs-region: us-east-1

        awslogs-stream-prefix: es

  web:

    image: yourusername/foodtrucks-web

    cpu_shares: 100

    mem_limit: 262144000

    ports:

      - "80:5000"

    links:

      - es

    logging:

      driver: awslogs

      options:

        awslogs-group: foodtrucks

        awslogs-region: us-east-1

        awslogs-stream-prefix: web

The only changes we made from the original docker-compose.yml are providing the mem_limit (in bytes) and cpu_shares values for each container and adding some logging configuration. This allows us to view logs generated by our containers in AWS CloudWatch. Head over to CloudWatch to create a log group called foodtrucks. Note that since ElasticSearch typically ends up taking more memory, we've given it a memory limit of around 3.4 GB. Another thing we need to do before we move onto the next step is to publish our image on Docker Hub.

$ docker push yourusername/foodtrucks-web

Great! Now let's run the final command that will deploy our app on ECS!
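Before running it, it can be worth double-checking the mem_limit values from the compose file, since raw byte counts are easy to mistype. This is a small sketch, assuming binary units (1 MiB = 1024 * 1024 bytes). The describe_bytes helper is a hypothetical name.

```python
MIB = 1024 ** 2  # bytes in one mebibyte
GIB = 1024 ** 3  # bytes in one gibibyte


def describe_bytes(n):
    """Return a byte count as rounded (MiB, GiB) figures."""
    return round(n / MIB, 1), round(n / GIB, 2)


es_limit = 3621440000   # mem_limit of the es service
web_limit = 262144000   # mem_limit of the web service

print("es :", describe_bytes(es_limit))
print("web:", describe_bytes(web_limit))
```

The web limit works out to exactly 250 MiB. The es limit comes to roughly 3.37 GiB, in line with the "around 3.4 GB" mentioned above.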

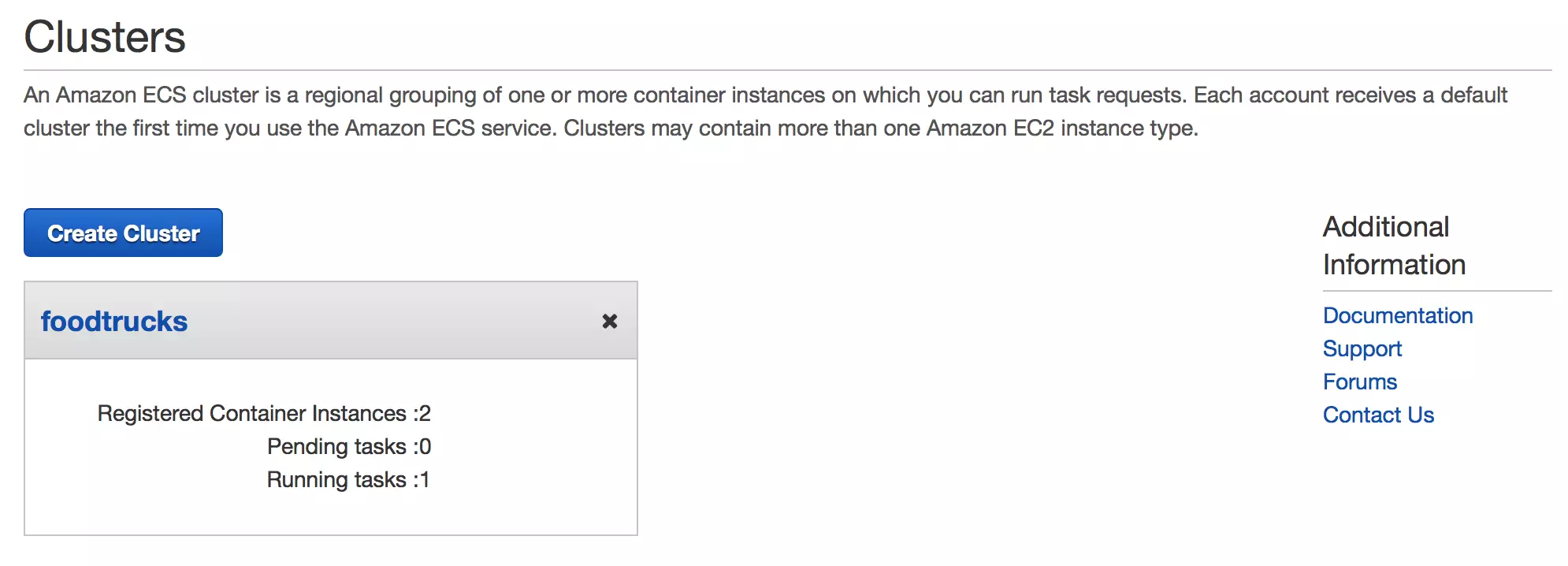

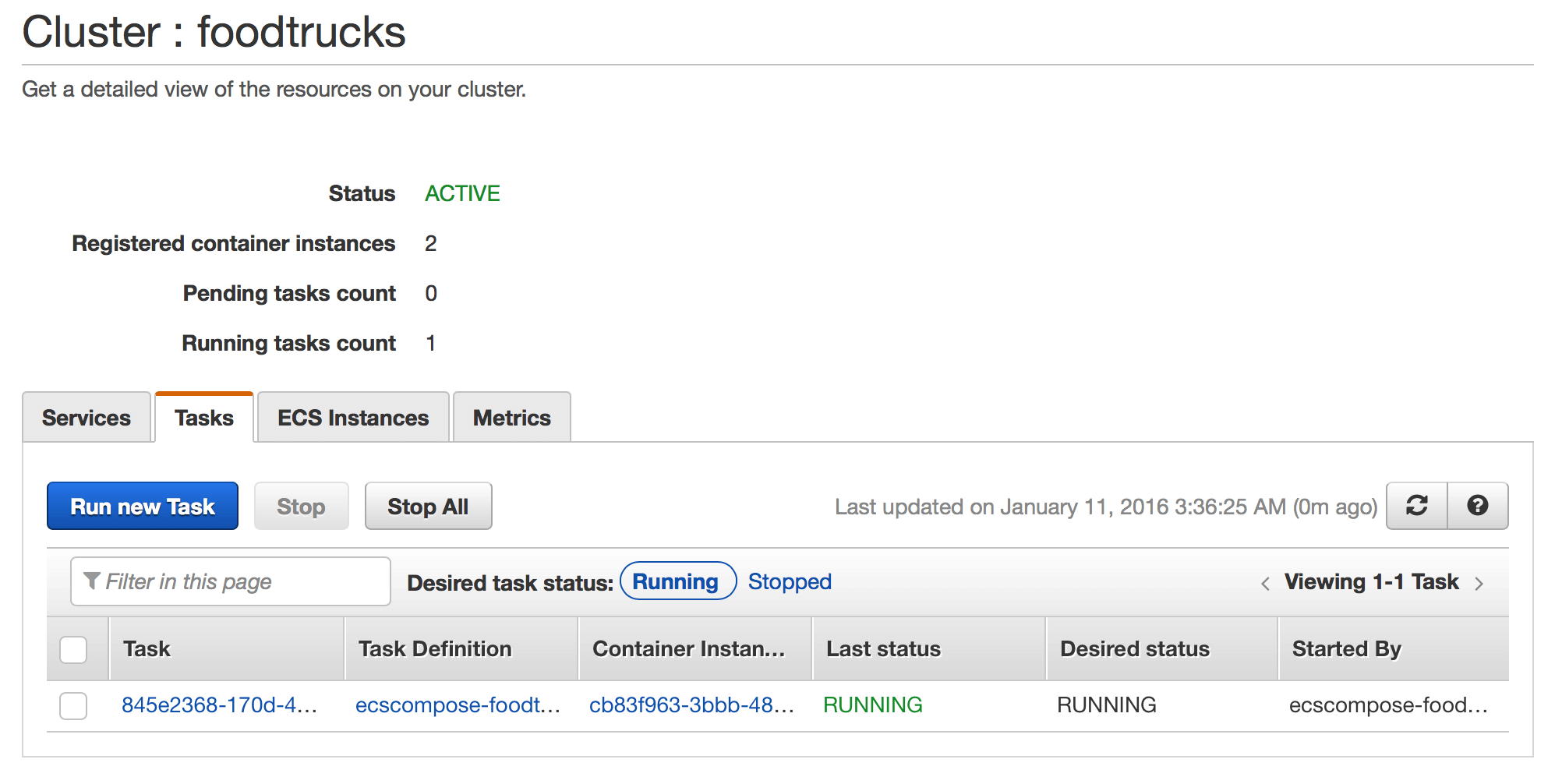

$ cd aws-ecs

$ ecs-cli compose up

INFO[0000] Using ECS task definition TaskDefinition=ecscompose-foodtrucks:2

INFO[0000] Starting container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es

INFO[0000] Starting container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web

INFO[0000] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0000] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0036] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0048] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0048] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0060] Started container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web desiredStatus=RUNNING lastStatus=RUNNING taskDefinition=ecscompose-foodtrucks:2

INFO[0060] Started container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=RUNNING taskDefinition=ecscompose-foodtrucks:2

It's not a coincidence that the invocation above looks similar to the one we used with Docker Compose. If everything went well, you should see a desiredStatus=RUNNING lastStatus=RUNNING as the last line.
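If you capture this output in a script, the check can be automated. The sketch below assumes the key=value layout seen in the sample output above, which may not be a stable or documented format. The helper names parse_fields and is_running are made up for illustration.

```python
def parse_fields(line):
    """Collect the key=value tokens of one log line into a dict."""
    fields = {}
    for token in line.split():
        key, sep, value = token.partition("=")
        if sep and key:
            fields[key] = value
    return fields


def is_running(line):
    """True when both the desired and the last reported status are RUNNING."""
    fields = parse_fields(line)
    return (
        fields.get("desiredStatus") == "RUNNING"
        and fields.get("lastStatus") == "RUNNING"
    )


# Two lines copied from the sample output above.
pending_line = (
    "INFO[0048] Describe ECS container status "
    "container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es "
    "desiredStatus=RUNNING lastStatus=PENDING "
    "taskDefinition=ecscompose-foodtrucks:2"
)
last_line = (
    "INFO[0060] Started container... "
    "container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es "
    "desiredStatus=RUNNING lastStatus=RUNNING "
    "taskDefinition=ecscompose-foodtrucks:2"
)

print(is_running(pending_line), is_running(last_line))
```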

Awesome! Our app is live, but how can we access it?

$ ecs-cli ps

Name State Ports TaskDefinition

845e2368-170d-44a7-bf9f-84c7fcd9ae29/web RUNNING 54.86.14.14:80->5000/tcp ecscompose-foodtrucks:2