Kurtosis: Difference between revisions

Add: isbn, pages, bibcode. | Suggested by Abductive | Category:Articles to be expanded from December 2009 | #UCB_Category 380/470

(21 intermediate revisions by 13 users not shown)

== Pearson moments ==

The kurtosis is the fourth [[standardized moment]], defined as
<math display="block">
\operatorname{Kurt}[X] = \operatorname{E}\left[\left(\frac{X - \mu}{\sigma}\right)^4\right] = \frac{\operatorname{E}\left[(X - \mu)^4\right]}{\left(\operatorname{E}\left[(X - \mu)^2\right]\right)^2} = \frac{\mu_4}{\sigma^4},
</math>
where ''μ''<sub>4</sub> is the fourth [[central moment]] and ''σ'' is the [[standard deviation]]. Several letters are used in the literature to denote the kurtosis. A very common choice is ''κ'', which is fine as long as it is clear that it does not refer to a [[cumulant]]. Other choices include ''γ''<sub>2</sub>, to be similar to the notation for skewness, although sometimes this is instead reserved for the excess kurtosis.
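The definition translates directly into code. The following is a minimal sketch, not drawn from the cited sources, for a discrete distribution given as parallel lists of values and probabilities; the function name `pearson_kurtosis` is hypothetical.

```python
def pearson_kurtosis(values, probs):
    """Kurt[X] = mu_4 / sigma^4 for a discrete distribution.

    `values` and `probs` are parallel sequences; `probs` should sum to 1.
    """
    values, probs = list(values), list(probs)
    mean = sum(p * x for x, p in zip(values, probs))
    mu2 = sum(p * (x - mean) ** 2 for x, p in zip(values, probs))  # sigma^2
    mu4 = sum(p * (x - mean) ** 4 for x, p in zip(values, probs))  # fourth central moment
    return mu4 / mu2 ** 2


# A fair coin (Bernoulli with p = 1/2) and a fair six-sided die.
print(pearson_kurtosis([0, 1], [0.5, 0.5]))        # 1.0
print(pearson_kurtosis(range(1, 7), [1 / 6] * 6))  # 2121/1225, about 1.7314
```

The coin gives kurtosis 1, the smallest value allowed by the bound of squared skewness plus 1.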

The kurtosis is bounded below by the squared [[skewness]] plus 1:{{r|Pearson1916|p=432}}
<math display="block"> \frac{\mu_4}{\sigma^4} \geq \left(\frac{\mu_3}{\sigma^3}\right)^2 + 1,</math>
where ''μ''<sub>3</sub> is the third [[central moment]]. The lower bound is realized by the [[Bernoulli distribution]]. There is no upper limit to the kurtosis of a general probability distribution, and it may be infinite.
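A quick numerical check of the bound, offered as an illustrative sketch with hypothetical helper names: for Bernoulli distributions the kurtosis and the squared skewness plus 1 should coincide, while an arbitrary three-point distribution (values and probabilities chosen only for illustration) should lie strictly above the bound.

```python
def central_moment(values, probs, k):
    """k-th central moment of a discrete distribution given as parallel lists."""
    mean = sum(p * x for x, p in zip(values, probs))
    return sum(p * (x - mean) ** k for x, p in zip(values, probs))


def kurtosis_and_bound(values, probs):
    """Return (mu_4 / sigma^4, (mu_3 / sigma^3)^2 + 1)."""
    mu2 = central_moment(values, probs, 2)
    mu3 = central_moment(values, probs, 3)
    mu4 = central_moment(values, probs, 4)
    return mu4 / mu2 ** 2, (mu3 / mu2 ** 1.5) ** 2 + 1


# Two-point (Bernoulli) distributions attain the bound.
for p in (0.1, 0.3, 0.5, 0.9):
    kurt, bound = kurtosis_and_bound([0, 1], [1 - p, p])
    print(f"Bernoulli({p}): kurtosis = {kurt:.6f}, skewness^2 + 1 = {bound:.6f}")

# A three-point distribution lies strictly above it.
print(kurtosis_and_bound([0, 1, 5], [0.5, 0.3, 0.2]))
```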

A reason why some authors favor the excess kurtosis is that cumulants are [[intensive and extensive properties|extensive]]. Formulas related to the extensive property are more naturally expressed in terms of the excess kurtosis. For example, let ''X''<sub>1</sub>, ..., ''X''<sub>''n''</sub> be independent random variables for which the fourth moment exists, and let ''Y'' be the random variable defined by the sum of the ''X''<sub>''i''</sub>. The excess kurtosis of ''Y'' is
<math display="block">\operatorname{Kurt}[Y] - 3 = \frac{1}{\left( \sum_{j=1}^n \sigma_j^{\,2}\right)^2} \sum_{i=1}^n \sigma_i^{\,4} \cdot \left(\operatorname{Kurt}\left[X_i\right] - 3\right),</math>
where <math>\sigma_i</math> is the standard deviation of <math>X_i</math>. In particular, if all of the ''X''<sub>''i''</sub> have the same variance, then this simplifies to
<math display="block">\operatorname{Kurt}[Y] - 3 = \frac{1}{n^2} \sum_{i=1}^n \left(\operatorname{Kurt}\left[X_i\right] - 3\right).</math>
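Because cumulants add over independent summands, the excess kurtosis of a sum can be predicted from the summands alone, via <math>\operatorname{Kurt}[Y] - 3 = \sum_i \sigma_i^4 \left(\operatorname{Kurt}[X_i] - 3\right) / \left(\sum_j \sigma_j^2\right)^2</math>. The sketch below, with hypothetical helper names and distributions stored as dictionaries mapping values to probabilities, builds the exact distribution of a sum by convolution and compares both sides. The second check uses ''n'' identical Bernoulli summands, whose sum is binomial, for the equal-variance case.

```python
from math import comb


def moments(dist):
    """Return (variance, excess kurtosis) of a discrete distribution {value: prob}."""
    mean = sum(x * p for x, p in dist.items())
    mu2 = sum((x - mean) ** 2 * p for x, p in dist.items())
    mu4 = sum((x - mean) ** 4 * p for x, p in dist.items())
    return mu2, mu4 / mu2 ** 2 - 3


def convolve(d1, d2):
    """Distribution of X1 + X2 for independent discrete X1 and X2."""
    out = {}
    for x1, p1 in d1.items():
        for x2, p2 in d2.items():
            out[x1 + x2] = out.get(x1 + x2, 0.0) + p1 * p2
    return out


def excess_of_sum_formula(parts):
    """sum_i sigma_i^4 (Kurt[X_i] - 3) / (sum_j sigma_j^2)^2."""
    stats = [moments(d) for d in parts]
    total_var = sum(var for var, _ in stats)
    return sum(var ** 2 * ex for var, ex in stats) / total_var ** 2


# Unequal variances: Bernoulli(0.2), 3 * Bernoulli(0.5), uniform on {0, ..., 4}.
parts = [{0: 0.8, 1: 0.2}, {0: 0.5, 3: 0.5}, {k: 0.2 for k in range(5)}]
y = parts[0]
for d in parts[1:]:
    y = convolve(y, d)
print(moments(y)[1], excess_of_sum_formula(parts))

# Equal variances: n Bernoulli(p) summands add up to Binomial(n, p).
n, p = 10, 0.2
binom = {k: comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)}
bern_excess = moments({0: 1 - p, 1: p})[1]
print(moments(binom)[1], n * bern_excess / n ** 2)
```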

The reason not to subtract 3 is that the bare [[moment (statistics)|moment]] better generalizes to [[multivariate distribution]]s, especially when independence is not assumed. The [[cokurtosis]] between pairs of variables is an order four [[tensor]]. For a bivariate normal distribution, the cokurtosis tensor has off-diagonal terms that are neither 0 nor 3 in general, so attempting to "correct" for an excess becomes confusing. It is true, however, that the joint cumulants of degree greater than two for any [[multivariate normal distribution]] are zero.

For two random variables, ''X'' and ''Y'', not necessarily independent, the kurtosis of the sum, ''X'' + ''Y'', is
<math display="block">
\begin{align}
\operatorname{Kurt}[X+Y] = {1 \over \sigma_{X+Y}^4} \big( & \sigma_X^4\operatorname{Kurt}[X] + 4\sigma_X^3\sigma_Y\operatorname{Cokurt}[X,X,X,Y] \\
& {} + 6\sigma_X^2\sigma_Y^2\operatorname{Cokurt}[X,X,Y,Y] \\[6pt]
& {} + 4\sigma_X\sigma_Y^3\operatorname{Cokurt}[X,Y,Y,Y] + \sigma_Y^4\operatorname{Kurt}[Y] \big).
\end{align}
</math>
Note that the fourth-power [[binomial coefficient]]s (1, 4, 6, 4, 1) appear in the above equation.
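Because the expansion of the kurtosis of ''X'' + ''Y'' holds for any joint distribution, it also holds exactly for the empirical distribution of paired data (moments computed with 1/''n''). The sketch below, with a hypothetical function name and arbitrary illustrative numbers, takes each cokurtosis as the standardized mixed fourth central moment, sums the five terms weighted by the binomial coefficients 1, 4, 6, 4, 1, and compares the result with the kurtosis of ''X'' + ''Y'' computed directly.

```python
def mean(xs):
    return sum(xs) / len(xs)


def kurtosis_of_sum_two_ways(x, y):
    """Return (direct Kurt[X+Y], right-hand side of the cokurtosis expansion)."""
    mx, my = mean(x), mean(y)
    cx = [v - mx for v in x]
    cy = [v - my for v in y]
    sx = mean([v * v for v in cx]) ** 0.5
    sy = mean([v * v for v in cy]) ** 0.5
    s_sum = mean([(a + b) ** 2 for a, b in zip(cx, cy)]) ** 0.5

    def cokurt(i):
        """Cokurtosis with i copies of X and 4 - i copies of Y.

        i = 4 gives Kurt[X] and i = 0 gives Kurt[Y].
        """
        raw = mean([a ** i * b ** (4 - i) for a, b in zip(cx, cy)])
        return raw / (sx ** i * sy ** (4 - i))

    # (binomial coefficient, number of X factors)
    terms = [(1, 4), (4, 3), (6, 2), (4, 1), (1, 0)]
    rhs = sum(c * sx ** i * sy ** (4 - i) * cokurt(i) for c, i in terms) / s_sum ** 4
    direct = mean([(a + b) ** 4 for a, b in zip(cx, cy)]) / s_sum ** 4
    return direct, rhs


x = [1.0, 2.0, 4.0, 7.0, 11.0, 3.0]
y = [2.0, 1.5, 5.0, 3.0, 9.0, 0.5]  # deliberately correlated with x
print(kurtosis_of_sum_two_ways(x, y))
```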

In 1986 Moors gave an interpretation of kurtosis.{{r|Moors1986}} Let
<math display="block"> Z = \frac{X - \mu}{\sigma}, </math>
where ''X'' is a random variable, ''μ'' is the mean and ''σ'' is the standard deviation.

Now by definition of the kurtosis <math> \kappa </math>, and by the well-known identity <math> E\left[V^2\right] = \operatorname{var}[V] + [E[V]]^2, </math>
<math display="block">
\kappa = E\left[ Z^4 \right] =
\operatorname{var}\left[ Z^2 \right] + \left[E\left[Z^2\right]\right]^2 =
\operatorname{var}\left[ Z^2 \right] + [\operatorname{var}[Z]]^2 = \operatorname{var}\left[ Z^2 \right] + 1,
</math>
where the last two steps use <math>E[Z] = 0</math> and <math>\operatorname{var}[Z] = 1</math>. The kurtosis can therefore be seen as a measure of the dispersion of <math>Z^2</math> around its expectation 1.
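Moors' identity <math>\kappa = \operatorname{var}[Z^2] + 1</math> can be verified on any finite data set treated as an empirical distribution (moments with 1/''n''). In this sketch, with a hypothetical function name and arbitrary data, the second example is a symmetric two-point data set, for which ''Z''<sup>2</sup> is constant, so the variance term vanishes and the kurtosis takes its minimum value 1.

```python
def moors_identity(xs):
    """Return (kappa, var[Z^2] + 1) for the empirical distribution of xs."""
    n = len(xs)
    mu = sum(xs) / n
    sigma = (sum((x - mu) ** 2 for x in xs) / n) ** 0.5
    z = [(x - mu) / sigma for x in xs]
    kappa = sum(v ** 4 for v in z) / n
    z2 = [v * v for v in z]
    mean_z2 = sum(z2) / n  # equals 1 up to rounding, since var[Z] = 1
    var_z2 = sum((w - mean_z2) ** 2 for w in z2) / n
    return kappa, var_z2 + 1


print(moors_identity([0.3, 1.7, 2.2, 2.9, 4.1, 8.8]))
print(moors_identity([2.0, 8.0, 2.0, 8.0]))  # symmetric two-point data: (1.0, 1.0)
```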

High values of ''κ'' arise in two circumstances:
* where the probability mass is concentrated around the mean and the data-generating process produces occasional values far from the mean,
* where the probability mass is concentrated in the tails of the distribution.

=== Maximal entropy ===
The entropy of a distribution with density <math>p</math> is <math>-\int p(x) \ln p(x) \, dx</math>.

For any <math>\mu \in \R^n, \Sigma \in \R^{n\times n}</math> with <math>\Sigma</math> positive definite, among all probability distributions on <math>\R^n</math> with mean <math>\mu</math> and covariance <math>\Sigma</math>, the normal distribution <math>\mathcal N(\mu, \Sigma)</math> has the largest entropy.

Since mean <math>\mu</math> and covariance <math>\Sigma</math> are the first two moments, it is natural to consider extension to higher moments. In fact, by the [[Lagrange multiplier]] method, for any prescribed first n moments, if there exists a probability distribution of the form <math>p(x) \propto e^{\sum_i a_i x_i + \sum_{ij}b_{ij}x_ix_j + \cdots + \sum_{i_1\cdots i_n} c_{i_1\cdots i_n} x_{i_1}\cdots x_{i_n}}</math> that has the prescribed moments, then it is the maximal entropy distribution under the given constraints.<ref>{{Cite journal |last=Tagliani |first=A. |date=1990-12-01 |title=On the existence of maximum entropy distributions with four and more assigned moments |url=https://dx.doi.org/10.1016/0266-8920%2890%2990017-E |journal=Probabilistic Engineering Mechanics |volume=5 |issue=4 |pages=167–170 |doi=10.1016/0266-8920(90)90017-E |bibcode=1990PEngM...5..167T |issn=0266-8920}}</ref><ref>{{Cite journal |last1=Rockinger |first1=Michael |last2=Jondeau |first2=Eric |date=2002-01-01 |title=Entropy densities with an application to autoregressive conditional skewness and kurtosis |url=https://www.sciencedirect.com/science/article/pii/S0304407601000926 |journal=Journal of Econometrics |volume=106 |issue=1 |pages=119–142 |doi=10.1016/S0304-4076(01)00092-6 |issn=0304-4076}}</ref>

By a series expansion in ''g'' (formal, since the resulting series is only asymptotic),
<math display="block">
\begin{align}
& \int \frac{1}{\sqrt{2\pi}} e^{-\frac 12 x^2 - \frac 14 gx^4} x^{2n} \, dx \\[6pt]
= {} & \frac{1}{\sqrt{2\pi}} \int e^{-\frac 12 x^2 - \frac 14 gx^4} x^{2n} \, dx \\[6pt]
= {} & \sum_k \frac{1}{k!} (-g/4)^k (2n+4k-1)!! \\[6pt]
= {} & (2n-1)!! - \frac 14 g (2n+3)!! + O(g^2)
\end{align}
</math>
so if a random variable has probability density <math>p(x) = e^{-\frac 12 x^2 - \frac 14 gx^4}/Z</math>, where <math>Z</math> is a normalization constant, then its kurtosis is {{nowrap|<math>3 - 6g + O(g^2)</math>.}}<ref>{{Cite journal |last1=Bradde |first1=Serena |last2=Bialek |first2=William |date=2017-05-01 |title=PCA Meets RG |url=https://doi.org/10.1007/s10955-017-1770-6 |journal=Journal of Statistical Physics |language=en |volume=167 |issue=3 |pages=462–475 |doi=10.1007/s10955-017-1770-6 |issn=1572-9613 |pmc=6054449 |pmid=30034029 |arxiv=1610.09733 |bibcode=2017JSP...167..462B }}</ref>
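The first-order result can be checked numerically. The sketch below is an illustration under stated assumptions, not the method of the cited paper: it integrates the unnormalized density <math>e^{-x^2/2 - g x^4/4}</math> with the trapezoidal rule on a truncated grid and compares the resulting kurtosis with 3 − 6''g''. The function name, the truncation at |''x''| ≤ 12 and the grid step are arbitrary choices that are ample for small ''g'' ≥ 0.

```python
from math import exp


def kurtosis_quartic(g, half_width=12.0, step=1e-3):
    """Kurtosis of p(x) proportional to exp(-x^2/2 - g x^4/4), g >= 0.

    Uses the trapezoidal rule on [-half_width, half_width]; the mean is 0 by
    symmetry, and the normalisation constant cancels in the final ratio.
    """
    n = int(round(2 * half_width / step))
    z = m2 = m4 = 0.0
    for i in range(n + 1):
        x = -half_width + i * step
        w = 0.5 if i in (0, n) else 1.0  # trapezoid end-point weights
        f = w * exp(-0.5 * x * x - 0.25 * g * x ** 4)
        z += f
        m2 += f * x * x
        m4 += f * x ** 4
    return (m4 / z) / (m2 / z) ** 2


for g in (0.0, 0.001, 0.01, 0.05):
    print(f"g = {g}: kurtosis = {kurtosis_quartic(g):.5f}, 3 - 6g = {3 - 6 * g:.5f}")
```

As ''g'' grows the two columns drift apart, consistent with the <math>O(g^2)</math> remainder.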

== Excess kurtosis ==

=== Mesokurtic ===
Distributions with zero excess kurtosis are called '''mesokurtic''', or '''mesokurtotic'''. The most prominent example of a mesokurtic distribution is the normal distribution family, regardless of the values of its [[parameter]]s. A few other well-known distributions can be mesokurtic, depending on parameter values: for example, the [[binomial distribution]] is mesokurtic for <math display="inline">p = 1/2 \pm \sqrt{1/12}</math>.
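The mesokurtic parameter values can be confirmed by computing the binomial excess kurtosis directly from the probability mass function. This sketch uses a hypothetical function name and only the standard library; at <math>p = 1/2 \pm \sqrt{1/12}</math> the result should vanish up to rounding for every ''n'', while ''p'' = 1/2 gives a negative value.

```python
from math import comb, sqrt


def binomial_excess_kurtosis(n, p):
    """Excess kurtosis of Binomial(n, p), computed directly from its pmf."""
    pmf = [comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)]
    mean = sum(k * q for k, q in enumerate(pmf))
    mu2 = sum((k - mean) ** 2 * q for k, q in enumerate(pmf))
    mu4 = sum((k - mean) ** 4 * q for k, q in enumerate(pmf))
    return mu4 / mu2 ** 2 - 3


mesokurtic_ps = (0.5 - sqrt(1 / 12), 0.5 + sqrt(1 / 12))
for p in mesokurtic_ps:
    for n in (1, 5, 20):
        print(f"p = {p:.4f}, n = {n:2d}: excess kurtosis = {binomial_excess_kurtosis(n, p):+.1e}")
print("p = 0.5, n = 5:", binomial_excess_kurtosis(5, 0.5))  # negative: platykurtic
```

This agrees with the closed form (1 − 6''p''(1 − ''p''))/(''np''(1 − ''p'')), which is zero exactly when ''p''(1 − ''p'') = 1/6.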

=== Leptokurtic ===
A distribution with [[Positive number|positive]] excess kurtosis is called '''leptokurtic''', or '''leptokurtotic'''. "Lepto-" means "slender".<ref>{{Cite web | url=http://medical-dictionary.thefreedictionary.com/lepto- | title=Lepto-}}</ref> In terms of shape, a leptokurtic distribution has ''[[Fat-tailed distribution|fatter tails]]''. Examples of leptokurtic distributions include the [[Student's t-distribution]], [[Rayleigh distribution]], [[Laplace distribution]], [[exponential distribution]], [[Poisson distribution]] and the [[logistic distribution]]. Such distributions are sometimes termed ''super-Gaussian''.{{r|Beneviste1980}}
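Two of the listed examples can be checked numerically in a short sketch (hypothetical helper names, standard library only): the Poisson distribution, whose excess kurtosis is known to be 1/''λ'', using its probability mass function truncated at ''k'' = 200, and the Laplace distribution, whose excess kurtosis is 3, using a fine grid on [−60, 60]. The truncation points and grid step are assumptions chosen to keep the discretization error negligible.

```python
from math import exp


def excess_from_points(points):
    """Excess kurtosis of a discrete distribution given as (value, probability) pairs."""
    mean = sum(x * p for x, p in points)
    mu2 = sum((x - mean) ** 2 * p for x, p in points)
    mu4 = sum((x - mean) ** 4 * p for x, p in points)
    return mu4 / mu2 ** 2 - 3


def poisson_points(lam, kmax=200):
    """Poisson(lam) pmf truncated at kmax, via p_{k+1} = p_k * lam / (k + 1)."""
    points, p = [], exp(-lam)
    for k in range(kmax + 1):
        points.append((k, p))
        p *= lam / (k + 1)
    return points


def laplace_points(b=1.0, half_width=60.0, step=1e-3):
    """Laplace density exp(-|x|/b) / (2b) discretised with trapezoid weights."""
    n = int(round(2 * half_width / step))
    points = []
    for i in range(n + 1):
        x = -half_width + i * step
        w = 0.5 if i in (0, n) else 1.0
        points.append((x, w * step * exp(-abs(x) / b) / (2 * b)))
    total = sum(p for _, p in points)  # renormalise away the small quadrature error
    return [(x, p / total) for x, p in points]


print("Poisson(4):", excess_from_points(poisson_points(4.0)))  # theory: 1/4
print("Laplace:   ", excess_from_points(laplace_points()))     # theory: 3
```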

[[File:Three probability density functions.png|thumb|Three symmetric increasingly leptokurtic probability density functions; their intersections are indicated by vertical lines.]]

=== Platykurtic ===
[[File:1909 US Penny.jpg|thumb|The [[coin toss]] is the most platykurtic distribution]]

A distribution with [[Negative number|negative]] excess kurtosis is called '''platykurtic''', or '''platykurtotic'''. "Platy-" means "broad".<ref>{{cite web| url = http://www.yourdictionary.com/platy-prefix| url-status = dead| archive-url = https://web.archive.org/web/20071020202653/http://www.yourdictionary.com/platy-prefix| archive-date = 2007-10-20| title = platy-: definition, usage and pronunciation - YourDictionary.com}}</ref> In terms of shape, a platykurtic distribution has ''thinner tails''. Examples of platykurtic distributions include the [[Continuous uniform distribution|continuous]] and [[discrete uniform distribution]]s, and the [[raised cosine distribution]]. The most platykurtic distribution of all is the [[Bernoulli distribution]] with ''p'' = 1/2 (for example the number of times one obtains "heads" when flipping a coin once, a [[coin toss]]), for which the excess kurtosis is −2.
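The extremal role of the coin toss, and the negative excess kurtosis of uniform distributions, can be illustrated numerically. The sketch below (hypothetical function name) scans Bernoulli parameters on a grid and evaluates discrete uniform distributions; a discrete uniform distribution on many equally spaced points is used as a stand-in for the continuous uniform, whose excess kurtosis is −6/5.

```python
def excess_kurtosis(values, probs):
    """Excess kurtosis mu_4 / sigma^4 - 3 of a discrete distribution."""
    values, probs = list(values), list(probs)
    mean = sum(x * p for x, p in zip(values, probs))
    mu2 = sum((x - mean) ** 2 * p for x, p in zip(values, probs))
    mu4 = sum((x - mean) ** 4 * p for x, p in zip(values, probs))
    return mu4 / mu2 ** 2 - 3


# Scan Bernoulli(p) on a grid: the most negative value occurs at p = 1/2.
grid = [i / 1000 for i in range(1, 1000)]
best_p = min(grid, key=lambda p: excess_kurtosis([0, 1], [1 - p, p]))
print(best_p, excess_kurtosis([0, 1], [0.5, 0.5]))  # 0.5 -2.0

# Discrete uniform on {1, ..., 6} (a fair die) is also platykurtic.
print(excess_kurtosis(range(1, 7), [1 / 6] * 6))  # about -1.2686

# A discrete uniform on many points approaches the continuous value -6/5.
m = 10_000
print(excess_kurtosis(range(m), [1 / m] * m))
```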

== Graphical examples ==

The effects of kurtosis are illustrated using a [[parametric family]] of distributions whose kurtosis can be adjusted while their lower-order moments and cumulants remain constant. Consider the [[Pearson distribution|Pearson type VII family]], which is a special case of the [[Pearson distribution|Pearson type IV family]] restricted to symmetric densities. The [[probability density function]] is given by
<math display="block">f(x; a, m) = \frac{\Gamma(m)}{a\,\sqrt{\pi}\,\Gamma(m-1/2)} \left[1+\left(\frac{x}{a}\right)^2 \right]^{-m}, </math>
where ''a'' is a [[scale parameter]] and ''m'' is a [[shape parameter]].

All densities in this family are symmetric. The ''k''th moment exists provided ''m'' > (''k'' + 1)/2. For the kurtosis to exist, we require ''m'' > 5/2. Then the mean and [[skewness]] exist and are both identically zero. Setting ''a''<sup>2</sup> = 2''m'' − 3 makes the variance equal to unity. Then the only free parameter is ''m'', which controls the fourth moment (and cumulant) and hence the kurtosis. One can reparameterize with <math>m = 5/2 + 3/\gamma_2</math>, where <math>\gamma_2</math> is the excess kurtosis as defined above. This yields a one-parameter leptokurtic family with zero mean, unit variance, zero skewness, and arbitrary positive excess kurtosis. The reparameterized density is
<math display="block">g(x; \gamma_2) = \frac{\Gamma\left(\frac{5}{2} + \frac{3}{\gamma_2}\right)}{\sqrt{\pi\left(2 + \frac{6}{\gamma_2}\right)}\,\Gamma\left(2 + \frac{3}{\gamma_2}\right)} \left[1 + \frac{x^2}{2 + 6/\gamma_2}\right]^{-\frac{5}{2} - \frac{3}{\gamma_2}}.</math>
In the limit as <math>\gamma_2 \to \infty</math> one obtains the density
<math display="block">g(x) = 3\left(2 + x^2\right)^{-5/2},</math>
which is shown as the red curve in the images on the right.
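As a sanity check on the parameterization, the density can be integrated numerically. This sketch writes out the Pearson type VII density in the standard form <math>\frac{\Gamma(m)}{a\sqrt{\pi}\,\Gamma(m-1/2)}\left[1+(x/a)^2\right]^{-m}</math>, sets ''m'' = 5/2 + 3/''γ''<sub>2</sub> and ''a''<sup>2</sup> = 2''m'' − 3, and checks that the total mass and the variance are 1 and that the excess kurtosis equals ''γ''<sub>2</sub>. The function name, the truncation at |''x''| ≤ 200 and the grid step are assumptions for illustration.

```python
from math import gamma, pi, sqrt


def pearson_vii_moments(gamma2, half_width=200.0, step=0.01):
    """Integrate the reparameterised Pearson VII density numerically.

    Returns (total mass, variance, excess kurtosis); the mean is zero by symmetry.
    """
    m = 2.5 + 3 / gamma2        # shape parameter
    a = sqrt(2 + 6 / gamma2)    # scale chosen so that a^2 = 2m - 3
    const = gamma(m) / (a * sqrt(pi) * gamma(m - 0.5))
    n = int(round(2 * half_width / step))
    mass = m2 = m4 = 0.0
    for i in range(n + 1):
        x = -half_width + i * step
        w = (0.5 if i in (0, n) else 1.0) * step  # trapezoid weight
        f = w * const * (1 + (x / a) ** 2) ** (-m)
        mass += f
        m2 += f * x * x
        m4 += f * x ** 4
    return mass, m2 / mass, m4 * mass / m2 ** 2 - 3


for g2 in (0.5, 1.0):
    print(g2, pearson_vii_moments(g2))
```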

Also, there exist platykurtic densities with infinite peakedness,
*e.g., an equal mixture of the [[beta distribution]] with parameters 0.5 and 1 with its reflection about 0.0
and there exist leptokurtic densities that appear flat-topped,
*e.g., a mixture of a distribution that is uniform between −1 and 1 with a T(4.0000001) [[Student's t-distribution]], with mixing probabilities 0.999 and 0.001.

{{clear}}
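Both examples can be checked from closed-form moments in a short sketch. It assumes the standard moment formulas <math>E[X^k] = \prod_{r=0}^{k-1} \frac{\alpha + r}{\alpha + \beta + r}</math> for the beta distribution, <math>E[T^2] = \nu/(\nu-2)</math> and <math>E[T^4] = 3\nu^2/((\nu-2)(\nu-4))</math> for Student's t with ''ν'' > 4, and <math>E[U^2] = 1/3</math>, <math>E[U^4] = 1/5</math> for the uniform distribution on [−1, 1]. In each mixture the overall mean is zero, so its central moments are the weighted averages of the components' raw moments. The function name is hypothetical.

```python
def beta_raw_moment(alpha, beta, k):
    """E[X^k] for X ~ Beta(alpha, beta)."""
    out = 1.0
    for r in range(k):
        out *= (alpha + r) / (alpha + beta + r)
    return out


# (1) Equal mixture of Beta(0.5, 1) and its reflection -X: the density is
#     0.25 * |x|**-0.5 on [-1, 1], unbounded at 0, and the mixture mean is 0.
e2 = beta_raw_moment(0.5, 1, 2)
e4 = beta_raw_moment(0.5, 1, 4)
beta_mix_excess = e4 / e2 ** 2 - 3

# (2) 0.999 * Uniform(-1, 1) + 0.001 * Student t with nu > 4 degrees of freedom.
nu = 4.0000001
t2 = nu / (nu - 2)
t4 = 3 * nu ** 2 / ((nu - 2) * (nu - 4))
mix2 = 0.999 * (1 / 3) + 0.001 * t2
mix4 = 0.999 * (1 / 5) + 0.001 * t4
flat_top_excess = mix4 / mix2 ** 2 - 3

print(f"beta mixture: excess kurtosis = {beta_mix_excess:.4f}")  # -2/9, about -0.2222
print(f"uniform/t mixture: excess kurtosis = {flat_top_excess:.3e}")
```

The sharply peaked beta mixture comes out platykurtic, while the almost perfectly flat uniform/t mixture has an excess kurtosis in the millions, because the rare t component dominates the fourth moment.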

==== A natural but biased estimator ====
For a [[sample (statistics)|sample]] of ''n'' values, a [[Method of moments (statistics)|method of moments]] estimator of the population excess kurtosis can be defined as
<math display="block"> g_2 = \frac{m_4}{m_2^2} -3 = \frac{\tfrac{1}{n} \sum_{i=1}^n (x_i - \overline{x})^4}{\left[\tfrac{1}{n} \sum_{i=1}^n (x_i - \overline{x})^2\right]^2} - 3, </math>
where ''m''<sub>4</sub> is the fourth sample [[moment about the mean]], ''m''<sub>2</sub> is the second sample moment about the mean (that is, the [[sample variance]]), ''x''<sub>''i''</sub> is the ''i''<sup>th</sup> value, and <math>\overline{x}</math> is the [[sample mean]].

This formula has the simpler representation
<math display="block"> g_2 = \frac{1}{n} \sum_{i=1}^n z_i^4 - 3, </math>
where the <math> z_i </math> values are the data values standardized using the standard deviation defined with ''n'' rather than ''n'' − 1 in the denominator.
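The two expressions for ''g''<sub>2</sub> (central moments divided by ''n'', and the mean of <math>z_i^4</math> minus 3) can be compared in a minimal sketch; the function names and data are hypothetical. The second call gives −1.5 for the three-point sample −1, 0, 1.

```python
def g2_moments(xs):
    """g2 = m4 / m2**2 - 3 with central moments divided by n."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / m2 ** 2 - 3


def g2_standardized(xs):
    """Same statistic as the mean of z_i**4 minus 3, with z_i using the n-denominator SD."""
    n = len(xs)
    mean = sum(xs) / n
    sd = (sum((x - mean) ** 2 for x in xs) / n) ** 0.5
    return sum(((x - mean) / sd) ** 4 for x in xs) / n - 3


data = [2.0, 3.5, 3.9, 4.1, 4.4, 5.0, 9.7]  # made-up sample with one large value
print(g2_moments(data), g2_standardized(data))
print(g2_moments([-1.0, 0.0, 1.0]))  # -1.5 up to rounding
```

For comparison, SciPy's `scipy.stats.kurtosis` with its defaults (`fisher=True`, `bias=True`) is documented to compute this same biased statistic; the sketch itself does not need SciPy.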

==== Standard unbiased estimator ====
Given a subset of samples from a population, the sample excess kurtosis <math>g_2</math> above is a [[biased estimator]] of the population excess kurtosis. An alternative estimator of the population excess kurtosis, which is unbiased in random samples of a normal distribution, is defined as follows:{{r|Joanes1998}}
<math display="block">
\begin{align}
G_2 & = \frac{k_4}{k_2^2} \\[6pt]
& = \frac{n^2\,((n+1)\,m_4 - 3\,(n-1)\,m_2^2)}{(n-1)\,(n-2)\,(n-3)} \; \frac{(n-1)^2}{n^2\,m_2^2} \\[6pt]
& = \frac{n-1}{(n-2)\,(n-3)} \left[ (n+1)\,\frac{m_4}{m_2^2} - 3\,(n-1) \right] \\[6pt]
& = \frac{n-1}{(n-2)\,(n-3)} \left[ (n+1)\,g_2 + 6 \right] \\[6pt]
& = \frac{(n+1)\,n\,(n-1)}{(n-2)\,(n-3)} \; \frac{\sum_{i=1}^n (x_i - \bar{x})^4}{\left(\sum_{i=1}^n (x_i - \bar{x})^2\right)^2} - 3\,\frac{(n-1)^2}{(n-2)\,(n-3)}
\end{align}
</math>
where ''k''<sub>4</sub> is the unique symmetric [[bias of an estimator|unbiased]] estimator of the fourth [[cumulant]], ''k''<sub>2</sub> is the unbiased estimate of the second cumulant (identical to the unbiased estimate of the sample variance), ''m''<sub>4</sub> is the fourth sample moment about the mean, ''m''<sub>2</sub> is the second sample moment about the mean, ''x''<sub>''i''</sub> is the ''i''<sup>th</sup> value, and <math>\bar{x}</math> is the sample mean. This adjusted Fisher–Pearson standardized moment coefficient <math> G_2 </math> is the version found in [[Microsoft Excel|Excel]] and several statistical packages including [[Minitab]], [[SAS (software)|SAS]], and [[SPSS]].<ref name=Doane2011>Doane DP, Seward LE (2011) J Stat Educ 19 (2)</ref>
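A sketch of the adjusted estimator, assuming the standard k-statistic formulas <math>k_2 = n m_2/(n-1)</math> and <math>k_4 = n^2\left[(n+1) m_4 - 3(n-1) m_2^2\right]/\left[(n-1)(n-2)(n-3)\right]</math>. It computes ''G''<sub>2</sub> both as <math>k_4/k_2^2</math> and via the equivalent form <math>\frac{n-1}{(n-2)(n-3)}\left[(n+1) g_2 + 6\right]</math>; the two should agree up to rounding. The function name and data are hypothetical.

```python
def G2_two_ways(xs):
    """Return G2 from g2 and from the k-statistics k4 / k2**2 (needs n >= 4)."""
    n = len(xs)
    if n < 4:
        raise ValueError("G2 requires at least four observations")
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    g2 = m4 / m2 ** 2 - 3
    from_g2 = (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
    # Unbiased k-statistics for the second and fourth cumulants.
    k2 = n * m2 / (n - 1)
    k4 = n ** 2 * ((n + 1) * m4 - 3 * (n - 1) * m2 ** 2) / ((n - 1) * (n - 2) * (n - 3))
    return from_g2, k4 / k2 ** 2


data = [2.0, 3.5, 3.9, 4.1, 4.4, 5.0, 9.7]
print(G2_two_ways(data))
```

Among Python libraries, `pandas.Series.kurt` and `scipy.stats.kurtosis(x, bias=False)` are documented to return this adjusted statistic, though it is worth confirming against the installed versions.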

=== Upper bound ===
An upper bound for the sample excess kurtosis <math>g_2</math> of ''n'' (''n'' > 2) real numbers is{{r|Sharma2015}}
<math display="block"> g_2 \le \frac{1}{2} \frac{n-3}{n-2} g_1^2 + \frac{n}{2} - 3,</math>
where <math>g_1=m_3/m_2^{3/2}</math> is the corresponding sample skewness.
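The bound can be probed empirically. The sketch below (hypothetical names; the heavy-tailed test samples, seed and sample sizes are arbitrary choices) records the smallest slack between the bound and ''g''<sub>2</sub> over many random samples, which should never be negative beyond rounding. It then evaluates a sample with ''n'' − 1 equal values and one outlier; for such samples a direct calculation shows that the bound holds with equality.

```python
import random


def sample_stats(xs):
    """Return (g1, g2): sample skewness and sample excess kurtosis (1/n moments)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


def sharma_bound(n, g1):
    return 0.5 * (n - 3) / (n - 2) * g1 ** 2 + n / 2 - 3


rng = random.Random(12345)
worst_gap = float("inf")
for _ in range(2000):
    n = rng.randint(3, 30)
    xs = [rng.expovariate(1.0) ** 3 for _ in range(n)]  # heavy right tail
    g1, g2 = sample_stats(xs)
    worst_gap = min(worst_gap, sharma_bound(n, g1) - g2)
print("smallest slack over random samples:", worst_gap)

# One outlier among n - 1 equal values attains the bound.
xs = [0.0] * 9 + [1.0]
g1, g2 = sample_stats(xs)
print(g2, sharma_bound(len(xs), g1))
```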

=== Variance under normality ===
The variance of the sample kurtosis of a sample of size ''n'' from the [[normal distribution]] is{{r|Fisher1930}}
<math display="block">\operatorname{var}(g_2) = \frac{24n(n-1)^2}{(n-3)(n-2)(n+3)(n+5)}.</math>
In the large-sample limit, under the assumption that the underlying random variable <math>X</math> is normally distributed, it can be shown that <math>\sqrt{n} g_2 \,\xrightarrow{d}\, \mathcal{N}(0, 24)</math>.{{r|Kendall1969|p=Page number needed}}

For non-normal samples, the variance of the sample variance depends on the kurtosis; for details, see [[Variance#Distribution of the sample variance|variance]].

Pearson's definition of kurtosis is used as an indicator of intermittency in [[turbulence]].{{r|Sandborn1959}} It is also used in magnetic resonance imaging to quantify non-Gaussian diffusion.<ref>{{cite journal |last1=Jensen |first1=J. |last2=Helpern |first2=J. |last3=Ramani |first3=A. |last4=Lu |first4=H. |first5=K. |last5=Kaczynski |title=Diffusional kurtosis imaging: The quantification of non-Gaussian water diffusion by means of magnetic resonance imaging |journal=Magn Reson Med |date=19 May 2005 |volume=53 |issue=6 |pages=1432–1440 |doi = 10.1002/mrm.20508 |pmid=15906300 |s2cid=11865594 |url=https://onlinelibrary.wiley.com/doi/full/10.1002/mrm.20508}}</ref>

A concrete example is the following lemma by He, Zhang, and Zhang:<ref name=He2010>{{cite journal | last1 = He | first1 = Simai | last2 = Zhang | first2 = Jiawei | last3 = Zhang | first3 = Shuzhong | year = 2010 | title = Bounding probability of small deviation: A fourth moment approach | journal = [[Mathematics of Operations Research]] | volume = 35 | issue = 1 | pages = 208–232 | doi = 10.1287/moor.1090.0438 | s2cid = 11298475 }}</ref>

Assume a random variable <math>X</math> has expectation <math>E[X] = \mu</math>, variance <math>E\left[(X - \mu)^2\right] = \sigma^2</math> and kurtosis <math>\kappa = \tfrac{1}{\sigma^4}E\left[(X - \mu)^4\right].</math>

Assume we sample <math>n = \tfrac{2\sqrt{3} + 3}{3}\kappa\log\tfrac{1}{\delta}</math> many independent copies. Then

<math display="block">
\Pr\left[\max_{i=1}^n X_i \le \mu\right] \le \delta
\quad\text{and}\quad
\Pr\left[\min_{i=1}^n X_i \ge \mu\right] \le \delta.
</math>
This shows that with <math>\Theta(\kappa\log\tfrac{1}\delta)</math> many samples, we will see one that is above the expectation with probability at least <math>1-\delta</math>.
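For a Bernoulli(''p'') variable the probabilities in the lemma have closed forms, which makes the statement easy to check in a sketch with hypothetical function names. Since ''μ'' = ''p'', the maximum of ''n'' draws is at most ''μ'' only if every draw is 0, which has probability (1 − ''p'')<sup>''n''</sup>, and the minimum is at least ''μ'' only if every draw is 1, with probability ''p''<sup>''n''</sup>. The sketch uses the Bernoulli kurtosis (1 − 3''p''(1 − ''p''))/(''p''(1 − ''p'')), takes log as the natural logarithm (an assumption), rounds the sample size up to an integer, and picks ''δ'' = 0.01 arbitrarily.

```python
from math import ceil, log, sqrt


def hzz_sample_size(kappa, delta):
    """n = (2*sqrt(3) + 3) / 3 * kappa * log(1/delta), rounded up to an integer."""
    return ceil((2 * sqrt(3) + 3) / 3 * kappa * log(1 / delta))


def bernoulli_kurtosis(p):
    """Pearson kurtosis (not excess) of a Bernoulli(p) variable."""
    q = 1 - p
    return (1 - 3 * p * q) / (p * q)


delta = 0.01
for p in (0.5, 0.1, 0.01, 0.001):
    n = hzz_sample_size(bernoulli_kurtosis(p), delta)
    # mu = p, so max X_i <= mu iff all draws are 0, and min X_i >= mu iff all are 1.
    prob_max_at_most_mu = (1 - p) ** n
    prob_min_at_least_mu = p ** n
    print(f"p = {p}: n = {n}, Pr[max <= mu] = {prob_max_at_most_mu:.2e}, "
          f"Pr[min >= mu] = {prob_min_at_least_mu:.2e}")
```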

| Line 275: | Line 265: | ||

|doi=10.1098/rsta.1916.0009 |jstor=91092
|bibcode=1916RSPTA.216..429P
|doi-access=free}}</ref>

<ref name="Balanda1988">
{{citation

| Line 349: | Line 339: | ||

|doi=10.1098/rspa.1930.0185 |jstor=95586
|year=1930 |volume=130 |issue=812 |pages=16–28
|bibcode=1930RSPSA.130...16F |s2cid=121520301 |hdl=2440/15205 |hdl-access=free}}</ref>

<ref name=Kendall1969>
{{citation

| Line 372: | Line 362: | ||

|journal=[[Rocky Mountain Journal of Mathematics]]
|year=2015 |volume=45 |issue=5 |pages=1639–1643
|doi=10.1216/RMJ-2015-45-5-1639<!-- arxiv entry removed, as the article was withdrawn from arXiv.org by the author-->
|s2cid=88513237
|url=http://projecteuclid.org/euclid.rmjm/1453817258
|arxiv=1309.2896}}</ref>

<ref name=Sandborn1959>
{{citation

| Line 395: | Line 384: | ||

|title=2012 IEEE International Conference on Computational Photography (ICCP)
|year=2012 <!-- |pages=no page numbers defined-->
|pages=1–10
|doi=10.1109/ICCPhot.2012.6215223
|publisher=IEEE |location=28-29 April 2012; Seattle, WA, USA
|isbn=978-1-4673-1662-0
|s2cid=14386924
}}</ref>
<ref name=Hosking1992>

| Line 429: | Line 420: | ||

* [http://faculty.etsu.edu/seier/doc/Kurtosis100years.doc Celebrating 100 years of Kurtosis] a history of the topic, with different measures of kurtosis.

{{Clear}}

{{Statistics|descriptive}}

Latest revision as of 21:25, 23 May 2024

Inprobability theoryandstatistics,kurtosis(fromGreek:κυρτός,kyrtosorkurtos,meaning "curved, arching" ) is a measure of the "tailedness" of theprobability distributionof areal-valuedrandom variable.Likeskewness,kurtosis describes a particular aspect of a probability distribution. There are different ways to quantify kurtosis for a theoretical distribution, and there are corresponding ways of estimating it using a sample from a population. Different measures of kurtosis may have differentinterpretations.

The standard measure of a distribution's kurtosis, originating withKarl Pearson,[1]is a scaled version of the fourthmomentof the distribution. This number is related to the tails of the distribution, not its peak;[2]hence, the sometimes-seen characterization of kurtosis as "peakedness"is incorrect. For this measure, higher kurtosis corresponds to greater extremity ofdeviations(oroutliers), and not the configuration of data nearthe mean.

It is common to compare the excess kurtosis (defined below) of a distribution to 0. This value 0 is the excess kurtosis of any univariatenormal distribution.Distributions with negative excess kurtosis are said to beplatykurtic,although this does not imply the distribution is "flat-topped" as is sometimes stated. Rather, it means the distribution produces fewer and/or less extreme outliers than the normal distribution. An example of a platykurtic distribution is theuniform distribution,which does not produce outliers. Distributions with a positive excess kurtosis are said to beleptokurtic.An example of a leptokurtic distribution is theLaplace distribution,which has tails that asymptotically approach zero more slowly than a Gaussian, and therefore produces more outliers than the normal distribution. It is common practice to use excess kurtosis, which is defined as Pearson's kurtosis minus 3, to provide a simple comparison to thenormal distribution.Some authors and software packages use "kurtosis" by itself to refer to the excess kurtosis. For clarity and generality, however, this article explicitly indicates where non-excess kurtosis is meant.

Alternative measures of kurtosis are: the L-kurtosis, which is a scaled version of the fourth L-moment; and measures based on four population or sample quantiles.[3] These are analogous to the alternative measures of skewness that are not based on ordinary moments.[3]

Pearson moments

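The kurtosis is the fourth standardized moment, defined as

$$
\operatorname{Kurt}[X] = \operatorname{E}\left[\left(\frac{X - \mu}{\sigma}\right)^4\right] = \frac{\operatorname{E}\left[(X - \mu)^4\right]}{\left(\operatorname{E}\left[(X - \mu)^2\right]\right)^2} = \frac{\mu_4}{\sigma^4},
$$

where $\mu_4$ is the fourth central moment and $\sigma$ is the standard deviation. Several letters are used in the literature to denote the kurtosis. A very common choice is $\kappa$, which is fine as long as it is clear that it does not refer to a cumulant. Other choices include $\gamma_2$, to be similar to the notation for skewness, although sometimes this is instead reserved for the excess kurtosis.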

The kurtosis is bounded below by the squared skewness plus 1:[4]: 432

$$
\frac{\mu_4}{\sigma^4} \geq \left(\frac{\mu_3}{\sigma^3}\right)^2 + 1,
$$

where $\mu_3$ is the third central moment. The lower bound is realized by the Bernoulli distribution. There is no upper limit to the kurtosis of a general probability distribution, and it may be infinite.
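The following is an illustrative sketch, not taken from the cited source. It checks the bound in exact rational arithmetic for discrete distributions written as (value, probability) pairs. For Bernoulli variables the two sides coincide. A symmetric three-point distribution lands strictly above the bound. The helper names (`central_moment`, `kurtosis`, `squared_skewness`, `bernoulli`) are hypothetical.

```python
from fractions import Fraction


def central_moment(dist, k):
    """k-th central moment of a discrete distribution given as (value, probability) pairs."""
    mean = sum(p * x for x, p in dist)
    return sum(p * (x - mean) ** k for x, p in dist)


def kurtosis(dist):
    """Pearson (non-excess) kurtosis mu_4 / sigma^4."""
    return central_moment(dist, 4) / central_moment(dist, 2) ** 2


def squared_skewness(dist):
    """(mu_3 / sigma^3)^2, written as mu_3^2 / mu_2^3 so the arithmetic stays exact."""
    return central_moment(dist, 3) ** 2 / central_moment(dist, 2) ** 3


def bernoulli(p):
    return [(0, 1 - p), (1, p)]


# Bernoulli(p): the bound is attained, so kurtosis == squared skewness + 1.
for p in (Fraction(1, 2), Fraction(1, 10), Fraction(3, 4)):
    d = bernoulli(p)
    print(p, kurtosis(d), squared_skewness(d) + 1)

# A symmetric three-point distribution sits strictly above the bound.
three_point = [(-1, Fraction(1, 3)), (0, Fraction(1, 3)), (1, Fraction(1, 3))]
print(kurtosis(three_point), squared_skewness(three_point) + 1)
```

With p = 1/2 the kurtosis is 1, so the excess kurtosis is −2. This is the value quoted for the coin toss in the platykurtic section.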

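A reason why some authors favor the excess kurtosis is that cumulants are extensive. Formulas related to the extensive property are more naturally expressed in terms of the excess kurtosis. For example, let $X_1, \ldots, X_n$ be independent random variables for which the fourth moment exists, and let $Y$ be the random variable defined by the sum of the $X_i$. The excess kurtosis of $Y$ is

$$
\operatorname{Kurt}[Y] - 3 = \frac{1}{\left(\sum_{j=1}^n \sigma_j^{2}\right)^2} \sum_{i=1}^n \sigma_i^{4} \cdot \left(\operatorname{Kurt}[X_i] - 3\right),
$$

where $\sigma_i$ is the standard deviation of $X_i$. In particular, if all of the $X_i$ have the same variance, then this simplifies to

$$
\operatorname{Kurt}[Y] - 3 = \frac{1}{n^2} \sum_{i=1}^n \left(\operatorname{Kurt}[X_i] - 3\right).
$$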
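The sum formula can be sketched directly from each summand's variance and excess kurtosis. The sketch assumes the standard facts that Uniform(0, 1) has variance 1/12 and excess kurtosis −6/5. It also cross-checks against the triangular density 1 − |t| that two independent uniforms produce about their mean. The function name `excess_kurtosis_of_sum` is hypothetical.

```python
from fractions import Fraction


def excess_kurtosis_of_sum(variances, excess_kurtoses):
    """Excess kurtosis of Y = X_1 + ... + X_n for independent X_i.

    variances[i] is sigma_i^2 and excess_kurtoses[i] is Kurt[X_i] - 3.
    Implements sum_i sigma_i^4 (Kurt[X_i] - 3) / (sum_j sigma_j^2)^2.
    """
    total_variance = sum(variances)
    weighted = sum(v ** 2 * g for v, g in zip(variances, excess_kurtoses))
    return weighted / total_variance ** 2


# Uniform(0, 1): variance 1/12, excess kurtosis -6/5 (assumed standard values).
u_var, u_exc = Fraction(1, 12), Fraction(-6, 5)

# Two independent uniforms have equal variances, so the result is (1/2^2) * 2 * (-6/5).
print(excess_kurtosis_of_sum([u_var, u_var], [u_exc, u_exc]))

# Cross-check: U1 + U2 has the triangular density 1 - |t| about its mean,
# with E[t^2] = 1/6 and E[t^4] = 1/15.
print(Fraction(1, 15) / Fraction(1, 6) ** 2 - 3)
```

Both lines give −3/5. Because excess kurtoses add after weighting, summing more independent copies drives the excess toward 0. This is the extensive behaviour that motivates subtracting 3.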

A reason not to subtract 3 is that the bare moment generalizes better to multivariate distributions, especially when independence is not assumed. The cokurtosis between pairs of variables is an order four tensor. For a bivariate normal distribution, the cokurtosis tensor has off-diagonal terms that are neither 0 nor 3 in general, so attempting to "correct" for an excess becomes confusing. It is true, however, that the joint cumulants of degree greater than two for any multivariate normal distribution are zero.

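For two random variables, $X$ and $Y$, not necessarily independent, the kurtosis of the sum, $X + Y$, is

$$
\begin{aligned}
\operatorname{Kurt}[X+Y] = \frac{1}{\sigma_{X+Y}^4} \big( &\sigma_X^4 \operatorname{Kurt}[X] + 4\sigma_X^3\sigma_Y \operatorname{Cokurt}[X,X,X,Y] \\
&{} + 6\sigma_X^2\sigma_Y^2 \operatorname{Cokurt}[X,X,Y,Y] \\
&{} + 4\sigma_X\sigma_Y^3 \operatorname{Cokurt}[X,Y,Y,Y] + \sigma_Y^4 \operatorname{Kurt}[Y] \big).
\end{aligned}
$$

Note that the fourth-power binomial coefficients (1, 4, 6, 4, 1) appear in the above equation.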
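The decomposition can be checked on data by using plug-in (1/n) sample moments throughout. In that setting the identity holds exactly up to floating-point error, because it is just the binomial expansion of the fourth power of the centered sum. The sketch below assumes that Cokurt[A, B, C, D] means the standardized cross-moment E[(A − μ_A)(B − μ_B)(C − μ_C)(D − μ_D)] / (σ_A σ_B σ_C σ_D). The data are synthetic and deliberately correlated. All helper names are hypothetical.

```python
import math
import random


def mean(a):
    return sum(a) / len(a)


def centered(a):
    m = mean(a)
    return [x - m for x in a]


def std(a):
    """Population (1/n) standard deviation."""
    return math.sqrt(mean([d * d for d in centered(a)]))


def cokurt(a, b, c, d):
    """Sample analogue of E[(A-mu_A)(B-mu_B)(C-mu_C)(D-mu_D)] / (s_A s_B s_C s_D)."""
    cols = (a, b, c, d)
    cs = [centered(col) for col in cols]
    prod = [w * x * y * z for w, x, y, z in zip(*cs)]
    return mean(prod) / math.prod(std(col) for col in cols)


def kurt_of_sum_via_cokurtosis(x, y):
    """Right-hand side of the decomposition, using Kurt[X] = Cokurt[X, X, X, X]."""
    sx, sy = std(x), std(y)
    s_sum = std([a + b for a, b in zip(x, y)])
    total = (sx**4 * cokurt(x, x, x, x)
             + 4 * sx**3 * sy * cokurt(x, x, x, y)
             + 6 * sx**2 * sy**2 * cokurt(x, x, y, y)
             + 4 * sx * sy**3 * cokurt(x, y, y, y)
             + sy**4 * cokurt(y, y, y, y))
    return total / s_sum**4


random.seed(0)
x = [random.expovariate(1.0) for _ in range(2000)]
y = [0.5 * a + random.gauss(0.0, 1.0) for a in x]  # correlated with x on purpose
s = [a + b for a, b in zip(x, y)]

direct = cokurt(s, s, s, s)  # kurtosis of X + Y computed directly
print(direct, kurt_of_sum_via_cokurtosis(x, y))
```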

Interpretation

The exact interpretation of the Pearson measure of kurtosis (or excess kurtosis) used to be disputed, but is now settled. As Westfall noted in 2014,[2] "...its only unambiguous interpretation is in terms of tail extremity; i.e., either existing outliers (for the sample kurtosis) or propensity to produce outliers (for the kurtosis of a probability distribution)." The logic is simple: kurtosis is the average (or expected value) of the standardized data raised to the fourth power. Standardized values that are less than 1 in absolute value (i.e., data within one standard deviation of the mean, where the "peak" would be) contribute virtually nothing to kurtosis, since raising a number whose absolute value is less than 1 to the fourth power makes it closer to zero. The only data values (observed or observable) that contribute to kurtosis in any meaningful way are those outside the region of the peak, i.e., the outliers. Therefore, kurtosis measures outliers only; it measures nothing about the "peak".

Many incorrect interpretations of kurtosis that involve notions of peakedness have been given. One is that kurtosis measures both the "peakedness" of the distribution and the heaviness of its tail.[5] Various other incorrect interpretations have been suggested, such as "lack of shoulders" (where the "shoulder" is defined vaguely as the area between the peak and the tail, or more specifically as the area about one standard deviation from the mean) or "bimodality".[6] Balanda and MacGillivray assert that the standard definition of kurtosis "is a poor measure of the kurtosis, peakedness, or tail weight of a distribution"[5]: 114 and instead propose to "define kurtosis vaguely as the location- and scale-free movement of probability mass from the shoulders of a distribution into its center and tails".[5]

Moors' interpretation

In 1986 Moors gave an interpretation of kurtosis.[7] Let

$$
Z = \frac{X - \mu}{\sigma},
$$

where $X$ is a random variable, $\mu$ is the mean and $\sigma$ is the standard deviation.

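Now by definition of the kurtosis, $\kappa = E\left[Z^4\right]$, and by the well-known identity

$$
E\left[V^2\right] = \operatorname{var}[V] + \left[E[V]\right]^2,
$$

applied first with $V = Z^2$ and then with $V = Z$ (where $E[Z] = 0$ and $\operatorname{var}[Z] = 1$),

$$
\kappa = E\left[Z^4\right] = \operatorname{var}\left[Z^2\right] + \left[E\left[Z^2\right]\right]^2 = \operatorname{var}\left[Z^2\right] + \left[\operatorname{var}[Z]\right]^2 = \operatorname{var}\left[Z^2\right] + 1.
$$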

The kurtosis can now be seen as a measure of the dispersion of $Z^2$ around its expectation. Alternatively, it can be seen as a measure of the dispersion of $Z$ around +1 and −1. $\kappa$ attains its minimal value in a symmetric two-point distribution. In terms of the original variable $X$, the kurtosis is a measure of the dispersion of $X$ around the two values $\mu \pm \sigma$.
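Moors' identity can be verified exactly for any discrete distribution. Only Z² = (X − μ)²/σ² is needed, so no square roots appear. The sketch below uses a fair six-sided die and a symmetric two-point distribution at ±1, both chosen purely for illustration. The helper name `moors_decomposition` is hypothetical.

```python
from fractions import Fraction


def moors_decomposition(dist):
    """For (value, probability) pairs, return (kappa, var[Z^2] + 1) with Z = (X - mu) / sigma.

    Z^2 = (X - mu)^2 / sigma^2 needs no square root, so Fraction arithmetic stays exact.
    """
    mu = sum(p * x for x, p in dist)
    var = sum(p * (x - mu) ** 2 for x, p in dist)
    z2 = [((x - mu) ** 2 / var, p) for x, p in dist]   # values of Z^2 with their probabilities
    e_z2 = sum(p * v for v, p in z2)                   # E[Z^2] = var[Z] = 1
    var_z2 = sum(p * (v - e_z2) ** 2 for v, p in z2)
    kappa = sum(p * v ** 2 for v, p in z2)             # E[Z^4]
    return kappa, var_z2 + 1


die = [(k, Fraction(1, 6)) for k in range(1, 7)]
print(moors_decomposition(die))

# Symmetric two-point distribution at mu +/- sigma: Z^2 is constant, so kappa reaches its minimum of 1.
print(moors_decomposition([(-1, Fraction(1, 2)), (1, Fraction(1, 2))]))
```

For the die, both components equal 303/175 (excess kurtosis −222/175). For the two-point case var[Z²] = 0, which is why no distribution can have kurtosis below 1.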

High values of $\kappa$ arise in two circumstances:

- where the probability mass is concentrated around the mean and the data-generating process produces occasional values far from the mean,

- where the probability mass is concentrated in the tails of the distribution.

Maximal entropy

The entropy of a distribution with density $p$ is $H(p) = -\int p(x) \ln p(x)\,dx$.

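For any $\mu \in \mathbb{R}^n$ and $\Sigma \in \mathbb{R}^{n \times n}$ with $\Sigma$ positive definite, among all probability distributions on $\mathbb{R}^n$ with mean $\mu$ and covariance $\Sigma$, the normal distribution $\mathcal{N}(\mu, \Sigma)$ has the largest entropy.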

Since the mean $\mu$ and covariance $\Sigma$ are the first two moments, it is natural to consider extension to higher moments. In fact, by the Lagrange multiplier method, for any prescribed first $n$ moments, if there exists some probability distribution of the form $\rho(x) \propto e^{\sum_i a_i x_i + \sum_{ij} b_{ij} x_i x_j + \cdots + \sum_{i_1 \cdots i_n} c_{i_1 \cdots i_n} x_{i_1} \cdots x_{i_n}}$ that has the prescribed moments (if it is feasible), then it is the maximal entropy distribution under the given constraints.[8][9]

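By serial expansion,

$$
\begin{aligned}
\int \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}x^2 - \frac{1}{4} g x^4} x^{2n}\,dx
&= \sum_k \frac{1}{k!} \left(-\frac{g}{4}\right)^k (2n + 4k - 1)!! \\
&= (2n - 1)!! - \frac{1}{4} g\, (2n + 3)!! + O(g^2),
\end{aligned}
$$

so if a random variable has probability distribution $q(x) = e^{-\frac{1}{2}x^2 - \frac{1}{4} g x^4} / Z$, where $Z$ is a normalization constant, then its kurtosis is $3 - 6g + O(g^2)$.[10]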
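The first-order result can be checked numerically. The sketch computes the kurtosis of q(x) ∝ exp(−x²/2 − g x⁴/4) by the trapezoidal rule for a few small g ≥ 0 and compares it with 3 − 6g. The integration range [−12, 12] and step count are assumptions chosen so that truncation and discretization errors are negligible. The function name is hypothetical.

```python
import math


def quartic_perturbed_kurtosis(g, half_width=12.0, steps=24_000):
    """Kurtosis of q(x) proportional to exp(-x^2/2 - g*x^4/4), g >= 0, by the trapezoidal rule.

    q is symmetric, so its mean is 0 and raw moments are central moments.
    The step size and the normalization constant cancel in m4 * m0 / m2^2.
    """
    h = 2 * half_width / steps
    m0 = m2 = m4 = 0.0
    for i in range(steps + 1):
        x = -half_width + i * h
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoid end-point weights
        f = weight * math.exp(-0.5 * x * x - 0.25 * g * x**4)
        m0 += f
        m2 += f * x * x
        m4 += f * x**4
    return m4 * m0 / m2**2


for g in (0.0, 0.001, 0.01):
    print(g, quartic_perturbed_kurtosis(g), 3 - 6 * g)
```

At g = 0 the quadrature reproduces the normal value 3. As g grows, the gap to 3 − 6g widens roughly like g², consistent with the O(g²) remainder. A positive quartic term thus makes the distribution platykurtic.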

Excess kurtosis

The excess kurtosis is defined as kurtosis minus 3. There are three distinct regimes, as described below.

Mesokurtic

Distributions with zero excess kurtosis are called mesokurtic, or mesokurtotic. The most prominent example of a mesokurtic distribution is the normal distribution family, regardless of the values of its parameters. A few other well-known distributions can be mesokurtic, depending on parameter values: for example, the binomial distribution is mesokurtic for $p = 1/2 \pm \sqrt{1/12}$.
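The binomial case can be confirmed directly from the probability mass function, without relying on a closed-form kurtosis formula. At p = 1/2 ± √(1/12) we have p(1 − p) = 1/6, and the excess kurtosis vanishes for every n. The helper name below is hypothetical.

```python
import math


def binomial_excess_kurtosis(n, p):
    """Excess kurtosis of Binomial(n, p), computed directly from the pmf."""
    pmf = [math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    mean = sum(k * w for k, w in enumerate(pmf))
    mu2 = sum(w * (k - mean) ** 2 for k, w in enumerate(pmf))
    mu4 = sum(w * (k - mean) ** 4 for k, w in enumerate(pmf))
    return mu4 / mu2**2 - 3


p_meso = 0.5 + math.sqrt(1 / 12)
for n in (1, 5, 40):
    # Mesokurtic at p_meso (up to rounding); platykurtic at p = 1/2.
    print(n, binomial_excess_kurtosis(n, p_meso), binomial_excess_kurtosis(n, 0.5))
```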

Leptokurtic

A distribution with positive excess kurtosis is called leptokurtic, or leptokurtotic. "Lepto-" means "slender".[11] In terms of shape, a leptokurtic distribution has fatter tails. Examples of leptokurtic distributions include the Student's t-distribution, Rayleigh distribution, Laplace distribution, exponential distribution, Poisson distribution and the logistic distribution. Such distributions are sometimes termed super-Gaussian.[12]

Platykurtic

A distribution with negative excess kurtosis is called platykurtic, or platykurtotic. "Platy-" means "broad".[13] In terms of shape, a platykurtic distribution has thinner tails. Examples of platykurtic distributions include the continuous and discrete uniform distributions, and the raised cosine distribution. The most platykurtic distribution of all is the Bernoulli distribution with p = 1/2 (for example, the number of times one obtains "heads" when flipping a coin once, a coin toss), for which the excess kurtosis is −2.

Graphical examples

The Pearson type VII family

The effects of kurtosis are illustrated using a parametric family of distributions whose kurtosis can be adjusted while their lower-order moments and cumulants remain constant. Consider the Pearson type VII family, which is a special case of the Pearson type IV family restricted to symmetric densities. The probability density function is given by

$$
f(x; a, m) = \frac{\Gamma(m)}{a\,\sqrt{\pi}\,\Gamma(m - 1/2)} \left[1 + \left(\frac{x}{a}\right)^2\right]^{-m},
$$

where $a$ is a scale parameter and $m$ is a shape parameter.

All densities in this family are symmetric. The $k$th moment exists provided $m > (k + 1)/2$. For the kurtosis to exist, we require $m > 5/2$. Then the mean and skewness exist and are both identically zero. Setting $a^2 = 2m - 3$ makes the variance equal to unity. Then the only free parameter is $m$, which controls the fourth moment (and cumulant) and hence the kurtosis. One can reparameterize with $m = 5/2 + 3/\gamma_2$, where $\gamma_2$ is the excess kurtosis as defined above. This yields a one-parameter leptokurtic family with zero mean, unit variance, zero skewness, and arbitrary non-negative excess kurtosis. The reparameterized density is

$$
g(x; \gamma_2) = f\left(x;\ a = \sqrt{2 + 6/\gamma_2},\ m = 5/2 + 3/\gamma_2\right).
$$

In the limit as the excess kurtosis $\gamma_2$ tends to infinity, one obtains the density

$$
3\left(2 + x^2\right)^{-5/2},
$$

which is shown as the red curve in the images on the right.

In the other direction, as the excess kurtosis $\gamma_2$ tends to 0, one obtains the standard normal density as the limiting distribution, shown as the black curve.

In the images on the right, the blue curve represents the member of the family with excess kurtosis of 2. The top image shows that leptokurtic densities in this family have a higher peak than the mesokurtic normal density, although this conclusion is only valid for this select family of distributions. The comparatively fatter tails of the leptokurtic densities are illustrated in the second image, which plots the natural logarithm of the Pearson type VII densities: the black curve is the logarithm of the standard normal density, which is a parabola. One can see that the normal density allocates little probability mass to the regions far from the mean ("has thin tails"), compared with the blue curve of the leptokurtic Pearson type VII density with excess kurtosis of 2. Between the blue curve and the black are other Pearson type VII densities with $\gamma_2 = 1, 1/2, 1/4, 1/8$, and $1/16$. The red curve again shows the upper limit of the Pearson type VII family, with infinite excess kurtosis (which, strictly speaking, means that the fourth moment does not exist). The red curve decreases the slowest as one moves outward from the origin ("has fat tails").
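The reparameterization can be sanity-checked numerically. The density with a = √(2 + 6/γ₂) and m = 5/2 + 3/γ₂ should integrate to 1, have unit variance, and have excess kurtosis γ₂. Because the tails are only polynomial, the sketch integrates over a wide range [−200, 200]. That range and the step count are assumptions, adequate for the moderate γ₂ values tested here but not for very large γ₂, where the fourth-moment tail decays too slowly. The function names are hypothetical.

```python
import math


def pearson7(a, m):
    """Pearson type VII density
    f(x; a, m) = Gamma(m) / (a sqrt(pi) Gamma(m - 1/2)) * (1 + (x/a)^2)^(-m), as a function of x."""
    c = math.gamma(m) / (a * math.sqrt(math.pi) * math.gamma(m - 0.5))
    return lambda x: c * (1.0 + (x / a) ** 2) ** (-m)


def pearson7_unit_variance(gamma2):
    """Member with a^2 = 2m - 3 (unit variance) and excess kurtosis gamma2 > 0."""
    return pearson7(math.sqrt(2 + 6 / gamma2), 2.5 + 3 / gamma2)


def mass_variance_excess(pdf, half_width=200.0, steps=80_000):
    """Trapezoidal estimates of total mass, variance and excess kurtosis of a symmetric density."""
    h = 2 * half_width / steps
    m0 = m2 = m4 = 0.0
    for i in range(steps + 1):
        x = -half_width + i * h
        f = (0.5 if i in (0, steps) else 1.0) * pdf(x)
        m0 += f
        m2 += f * x * x
        m4 += f * x**4
    return m0 * h, m2 / m0, m4 * m0 / m2**2 - 3


for gamma2 in (1.0, 2.0):
    print(gamma2, mass_variance_excess(pearson7_unit_variance(gamma2)))

# Limiting case (m = 5/2, a = sqrt(2)) reduces to 3 (2 + x^2)^(-5/2).
limit = pearson7(math.sqrt(2), 2.5)
print(limit(1.0), 3 * (2 + 1.0**2) ** -2.5)
```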

Other well-known distributions

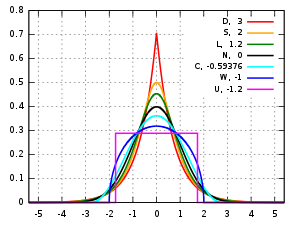

Several well-known, unimodal, and symmetric distributions from different parametric families are compared here. Each has a mean and skewness of zero. The parameters have been chosen to result in a variance equal to 1 in each case. The images on the right show curves for the following seven densities, on a linear scale and logarithmic scale:

- D: Laplace distribution, also known as the double exponential distribution, red curve (two straight lines in the log-scale plot), excess kurtosis = 3

- S: hyperbolic secant distribution, orange curve, excess kurtosis = 2

- L: logistic distribution, green curve, excess kurtosis = 1.2

- N: normal distribution, black curve (inverted parabola in the log-scale plot), excess kurtosis = 0

- C: raised cosine distribution, cyan curve, excess kurtosis = −0.593762...

- W: Wigner semicircle distribution, blue curve, excess kurtosis = −1

- U: uniform distribution, magenta curve (shown for clarity as a rectangle in both images), excess kurtosis = −1.2.

Note that in these cases the platykurtic densities have bounded support, whereas the densities with positive or zero excess kurtosis are supported on the whole real line.
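Kurtosis does not depend on location or scale. The listed values can therefore be reproduced numerically from each density in an unnormalized, unit-scale form, with no need to match the unit-variance parameters used in the plots. The sketch below uses the trapezoidal rule. The integration ranges and the closed form 6(90 − π⁴)/(5(π² − 6)²) for the raised cosine are assumptions on my part (the latter gives −0.593762...). The helper name `excess_kurtosis` is hypothetical.

```python
import math


def excess_kurtosis(pdf, lo, hi, steps=20_000):
    """Excess kurtosis of a density on [lo, hi] by the trapezoidal rule.

    The density need not be normalized; only ratios of moments are used.
    """
    h = (hi - lo) / steps
    m = [0.0] * 5  # unnormalized raw moments of order 0..4
    for i in range(steps + 1):
        x = lo + i * h
        w = (0.5 if i in (0, steps) else 1.0) * pdf(x)
        for k in range(5):
            m[k] += w * x**k
    m1, m2, m3, m4 = (m[k] / m[0] for k in range(1, 5))
    mu2 = m2 - m1**2
    mu4 = m4 - 4 * m1 * m3 + 6 * m1**2 * m2 - 3 * m1**4
    return mu4 / mu2**2 - 3


densities = {  # unnormalized, unit-scale forms (kurtosis is scale-free)
    "D Laplace": (lambda x: math.exp(-abs(x)), -40, 40),
    "S hyperbolic secant": (lambda x: 1 / math.cosh(math.pi * x / 2), -40, 40),
    "L logistic": (lambda x: math.exp(-abs(x)) / (1 + math.exp(-abs(x))) ** 2, -40, 40),
    "N normal": (lambda x: math.exp(-x * x / 2), -12, 12),
    "C raised cosine": (lambda x: 1 + math.cos(math.pi * x), -1, 1),
    "W Wigner semicircle": (lambda x: math.sqrt(max(0.0, 1 - x * x)), -1, 1),
    "U uniform": (lambda x: 1.0, -1, 1),
}

for name, (pdf, lo, hi) in densities.items():
    print(f"{name:22s} {excess_kurtosis(pdf, lo, hi):+.4f}")
```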

One cannot infer that high or low kurtosis distributions have the characteristics indicated by these examples. There exist platykurtic densities with infinite support,

- e.g., exponential power distributions with sufficiently large shape parameter b

and there exist leptokurtic densities with finite support.

- e.g., a distribution that is uniform between −3 and −0.3, between −0.3 and 0.3, and between 0.3 and 3, with the same density in the (−3, −0.3) and (0.3, 3) intervals, but with 20 times more density in the (−0.3, 0.3) interval

Also, there exist platykurtic densities with infinite peakedness,

- e.g., an equal mixture of the beta distribution with parameters 0.5 and 1 with its reflection about 0.0

and there exist leptokurtic densities that appear flat-topped,

- e.g., a mixture of a distribution that is uniform between −1 and 1 with a T(4.0000001) Student's t-distribution, with mixing probabilities 0.999 and 0.001.

Sample kurtosis

Definitions

A natural but biased estimator

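For a sample of $n$ values, a method of moments estimator of the population excess kurtosis can be defined as

$$
g_2 = \frac{m_4}{m_2^2} - 3 = \frac{\tfrac{1}{n}\sum_{i=1}^n (x_i - \bar{x})^4}{\left[\tfrac{1}{n}\sum_{i=1}^n (x_i - \bar{x})^2\right]^2} - 3,
$$

where $m_4$ is the fourth sample moment about the mean, $m_2$ is the second sample moment about the mean (that is, the sample variance), $x_i$ is the $i$th value, and $\bar{x}$ is the sample mean.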

This formula has the simpler representation $g_2 = \frac{1}{n}\sum_{i=1}^n z_i^4 - 3$, where the $z_i$ values are the standardized data values using the standard deviation defined using $n$ rather than $n - 1$ in the denominator.

For example, suppose the data values are 0, 3, 4, 1, 2, 3, 0, 2, 1, 3, 2, 0, 2, 2, 3, 2, 5, 2, 3, 999.

Then the $z_i$ values are −0.239, −0.225, −0.221, −0.234, −0.230, −0.225, −0.239, −0.230, −0.234, −0.225, −0.230, −0.239, −0.230, −0.230, −0.225, −0.230, −0.216, −0.230, −0.225, 4.359

and the $z_i^4$ values are 0.003, 0.003, 0.002, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.003, 0.002, 0.003, 0.003, 360.976.

The average of these values is 18.05 and the excess kurtosis is thus 18.05 − 3 = 15.05. This example makes it clear that data near the "middle" or "peak" of the distribution do not contribute to the kurtosis statistic, hence kurtosis does not measure "peakedness". It is simply a measure of the outlier, 999 in this example.
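The worked example can be reproduced in a few lines. The sketch computes g₂ both as m₄/m₂² − 3 and as the mean of z⁴ minus 3. It also shows what share of the fourth-power total comes from the single value 999. The function name is hypothetical.

```python
def sample_excess_kurtosis(xs):
    """Method-of-moments estimator g2 = m4 / m2^2 - 3, using 1/n sample moments."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / m2**2 - 3


data = [0, 3, 4, 1, 2, 3, 0, 2, 1, 3, 2, 0, 2, 2, 3, 2, 5, 2, 3, 999]

# Equivalent form: average of z^4, with z standardized by the 1/n standard deviation.
n = len(data)
mean = sum(data) / n
sd = (sum((x - mean) ** 2 for x in data) / n) ** 0.5
z4 = [((x - mean) / sd) ** 4 for x in data]

print(round(sample_excess_kurtosis(data), 2), round(sum(z4) / n - 3, 2))
print(z4[-1] / sum(z4))  # fraction of the fourth-power total due to the outlier alone
```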

Standard unbiased estimator

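Given a sample from a population, the sample excess kurtosis $g_2$ above is a biased estimator of the population excess kurtosis. An alternative estimator of the population excess kurtosis, which is unbiased in random samples of a normal distribution, is defined as follows:[3]

$$
\begin{aligned}
G_2 &= \frac{k_4}{k_2^2} \\
&= \frac{n^2\,[(n+1)\,m_4 - 3\,(n-1)\,m_2^2]}{(n-1)\,(n-2)\,(n-3)} \; \frac{(n-1)^2}{n^2\,m_2^2} \\
&= \frac{n-1}{(n-2)\,(n-3)} \left[(n+1)\,\frac{m_4}{m_2^2} - 3\,(n-1)\right] \\
&= \frac{n-1}{(n-2)\,(n-3)} \left[(n+1)\,g_2 + 6\right] \\
&= \frac{(n+1)\,n\,(n-1)}{(n-2)\,(n-3)} \; \frac{\sum_{i=1}^n (x_i - \bar{x})^4}{\left(\sum_{i=1}^n (x_i - \bar{x})^2\right)^2} - 3\,\frac{(n-1)^2}{(n-2)\,(n-3)} \\
&= \frac{(n+1)\,n}{(n-1)\,(n-2)\,(n-3)} \; \frac{\sum_{i=1}^n (x_i - \bar{x})^4}{k_2^2} - 3\,\frac{(n-1)^2}{(n-2)\,(n-3)},
\end{aligned}
$$

where $k_4$ is the unique symmetric unbiased estimator of the fourth cumulant, $k_2$ is the unbiased estimate of the second cumulant (identical to the unbiased estimate of the sample variance), $m_4$ is the fourth sample moment about the mean, $m_2$ is the second sample moment about the mean, $x_i$ is the $i$th value, and $\bar{x}$ is the sample mean. This adjusted Fisher–Pearson standardized moment coefficient $G_2$ is the version found in Excel and several statistical packages including Minitab, SAS, and SPSS.[14]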

Unfortunately, in nonnormal samples $G_2$ is itself generally biased.
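The adjusted estimator G₂ can be sketched in two algebraically equivalent ways. One form rescales g₂ as (n − 1)/((n − 2)(n − 3)) · ((n + 1) g₂ + 6). The other works from raw sums with k₂ taken as the sample variance with denominator n − 1. Agreement between the two is a useful check on an implementation. The dataset is the outlier example from the previous subsection, and both function names are hypothetical.

```python
import math


def adjusted_excess_kurtosis(xs):
    """G2 via (n - 1) / ((n - 2)(n - 3)) * ((n + 1) * g2 + 6); requires n >= 4."""
    n = len(xs)
    if n < 4:
        raise ValueError("G2 needs at least 4 observations")
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    g2 = m4 / m2**2 - 3
    return (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)


def adjusted_via_sums(xs):
    """Same estimator from raw sums, with k2 = sum of squared deviations / (n - 1)."""
    n = len(xs)
    mean = sum(xs) / n
    s4 = sum((x - mean) ** 4 for x in xs)
    k2 = sum((x - mean) ** 2 for x in xs) / (n - 1)
    return ((n + 1) * n / ((n - 1) * (n - 2) * (n - 3)) * s4 / k2**2
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))


data = [0, 3, 4, 1, 2, 3, 0, 2, 1, 3, 2, 0, 2, 2, 3, 2, 5, 2, 3, 999]
print(adjusted_excess_kurtosis(data), adjusted_via_sums(data))
print(math.isclose(adjusted_excess_kurtosis(data), adjusted_via_sums(data), rel_tol=1e-9))
```

For this sample G₂ comes out noticeably larger than g₂ (about 20.0 versus about 15.05). Small-sample corrections of this kind can move the estimate substantially when n is small.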

Upper bound

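An upper bound for the sample kurtosis of $n$ ($n > 2$) real numbers is[15]

$$
g_2 \leq \frac{1}{2} \, \frac{n-3}{n-2} \, g_1^2 + \frac{n}{2} - 3,
$$

where $g_1 = m_3 / m_2^{3/2}$ is the corresponding sample skewness.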
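The sketch below checks the bound on a few small samples, using 1/n sample moments for both g₁ and g₂. The samples are illustrative choices. The two-point sample [0, 0, 0, 1] meets the bound with equality (g₂ = −2/3), and the others fall below it. Function names are hypothetical.

```python
def sample_skewness_and_excess_kurtosis(xs):
    """Return (g1, g2) using 1/n sample moments about the mean."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m3 / m2**1.5, m4 / m2**2 - 3


def excess_kurtosis_upper_bound(n, g1):
    """Upper bound on g2 for n > 2 real numbers with sample skewness g1."""
    return 0.5 * (n - 3) / (n - 2) * g1**2 + n / 2 - 3


samples = {
    "outlier data": [0, 3, 4, 1, 2, 3, 0, 2, 1, 3, 2, 0, 2, 2, 3, 2, 5, 2, 3, 999],
    "two-point": [0, 0, 0, 1],
    "spike with +/-1": [-1, 1, 0, 0, 0, 0],
    "moderate": [2.1, 3.4, 1.9, 5.6, 4.4, 3.3, 2.8],
}

for name, xs in samples.items():
    g1, g2 = sample_skewness_and_excess_kurtosis(xs)
    print(f"{name:16s} g2 = {g2:+.4f}  bound = {excess_kurtosis_upper_bound(len(xs), g1):+.4f}")
```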

Variance under normality

The variance of the sample kurtosis of a sample of size $n$ from the normal distribution is[16]

$$
\operatorname{var}(g_2) = \frac{24\,n\,(n-1)^2}{(n-3)\,(n-2)\,(n+3)\,(n+5)}.
$$

Stated differently, under the assumption that the underlying random variable $X$ is normally distributed, it can be shown that $\sqrt{n}\, g_2 \xrightarrow{d} \mathcal{N}(0, 24)$.[17]
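Evaluating the exact variance for a few sample sizes shows how quickly n·var(g₂) approaches the asymptotic value 24. This motivates the rough standard error √(24/n) for g₂ under normality. The sketch only evaluates the formula. The function name is hypothetical.

```python
import math


def var_g2_normal(n):
    """Variance of the sample excess kurtosis g2 for an i.i.d. normal sample of size n (n > 3)."""
    return 24 * n * (n - 1) ** 2 / ((n - 3) * (n - 2) * (n + 3) * (n + 5))


for n in (10, 100, 10_000):
    # n * var(g2) should approach 24, consistent with sqrt(n) * g2 -> N(0, 24).
    print(n, var_g2_normal(n), n * var_g2_normal(n), math.sqrt(24 / n))
```

At n = 10 the exact variance is still well below 24/n. Asymptotic normality tests based on g₂ are therefore usually reserved for fairly large samples.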

Applications


The sample kurtosis is a useful measure of whether there is a problem with outliers in a data set. Larger kurtosis indicates a more serious outlier problem, and may lead the researcher to choose alternative statistical methods.

D'Agostino's K-squared test is a goodness-of-fit normality test based on a combination of the sample skewness and sample kurtosis, as is the Jarque–Bera test for normality.

For non-normal samples, the variance of the sample variance depends on the kurtosis; for details, please see variance.

Pearson's definition of kurtosis is used as an indicator of intermittency in turbulence.[18] It is also used in magnetic resonance imaging to quantify non-Gaussian diffusion.[19]

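A concrete example is the following lemma by He, Zhang, and Zhang:[20] Assume a random variable $X$ has expectation $E[X] = \mu$, variance $E\left[(X - \mu)^2\right] = \sigma^2$ and kurtosis $\kappa = \tfrac{1}{\sigma^4} E\left[(X - \mu)^4\right]$. Assume we sample $n = \frac{2\sqrt{3} + 3}{3}\,\kappa \log\frac{1}{\delta}$ many independent copies. Then

$$
\Pr\left[\max_{i=1}^n X_i \leq \mu\right] \leq \delta \quad\text{and}\quad \Pr\left[\min_{i=1}^n X_i \geq \mu\right] \leq \delta.
$$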

This shows that with $\Theta(\kappa \log \frac{1}{\delta})$ many samples, we will see one that is above the expectation with probability at least $1 - \delta$. In other words, if the kurtosis is large, we might see many values that are all below or all above the mean.
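As an illustration, the lemma can be checked on a Bernoulli(p) variable, where both probabilities have closed forms. The sketch assumes the standard Bernoulli kurtosis (1 − 3p(1 − p))/(p(1 − p)), which is not stated in the text. Since μ = p, the event max Xᵢ ≤ μ means every draw is 0, and min Xᵢ ≥ μ means every draw is 1. The values p = 0.01 and δ = 0.05 and the function name are illustrative.

```python
import math


def lemma_sample_size(kappa, delta):
    """n = (2*sqrt(3) + 3) / 3 * kappa * log(1/delta), rounded up to an integer."""
    return math.ceil((2 * math.sqrt(3) + 3) / 3 * kappa * math.log(1 / delta))


# Bernoulli(p) with 0 < p < 1: mu = p, so
#   max_i X_i <= mu  <=>  every X_i = 0,   min_i X_i >= mu  <=>  every X_i = 1.
p, delta = 0.01, 0.05
kappa = (1 - 3 * p * (1 - p)) / (p * (1 - p))  # Pearson kurtosis of Bernoulli(p)
n = lemma_sample_size(kappa, delta)
prob_all_below = (1 - p) ** n  # Pr[max X_i <= mu]
prob_all_above = p ** n        # Pr[min X_i >= mu]
print(n, kappa, prob_all_below, prob_all_above)
```

Here the rare value 1 is the "outlier". A kurtosis near 98 forces a sample size in the hundreds before the maximum reliably exceeds the mean.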

Kurtosis convergence

When band-pass filters are applied to digital images, the kurtosis values of the filtered images tend to be uniform, independent of the range of the filter. This behavior, termed kurtosis convergence, can be used to detect image splicing in forensic analysis.[21]

Other measures

A different measure of "kurtosis" is provided by using L-moments instead of the ordinary moments.[22][23]
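As a sketch of the L-moment alternative, the sample L-kurtosis τ₄ = l₄/l₂ can be computed from unbiased probability-weighted moments b₀ to b₃, using l₂ = 2b₁ − b₀ and l₄ = 20b₃ − 30b₂ + 12b₁ − b₀. These estimator formulas follow the usual L-moment conventions and are an assumption here, not taken from the text. Equally spaced data give τ₄ = 0. The outlier sample from the sample-kurtosis section gives a value close to the upper limit of 1. For comparison, the normal distribution's population L-kurtosis is about 0.123. The function name is hypothetical.

```python
def sample_l_kurtosis(xs):
    """Sample L-kurtosis tau_4 = l_4 / l_2 from unbiased probability-weighted moments."""
    x = sorted(xs)
    n = len(x)
    if n < 4:
        raise ValueError("need at least 4 observations")
    b = [0.0] * 4
    for i, v in enumerate(x):  # i = rank - 1 (0-based position in sorted order)
        b[0] += v
        b[1] += v * i / (n - 1)
        b[2] += v * i * (i - 1) / ((n - 1) * (n - 2))
        b[3] += v * i * (i - 1) * (i - 2) / ((n - 1) * (n - 2) * (n - 3))
    b = [s / n for s in b]
    l2 = 2 * b[1] - b[0]
    l4 = 20 * b[3] - 30 * b[2] + 12 * b[1] - b[0]
    return l4 / l2


print(sample_l_kurtosis(range(1, 11)))  # equally spaced data
print(sample_l_kurtosis([0, 3, 4, 1, 2, 3, 0, 2, 1, 3, 2, 0, 2, 2, 3, 2, 5, 2, 3, 999]))
```

Because L-moments are linear in the ordered data rather than built from fourth powers, a single extreme value cannot push τ₄ past 1. This is one reason they are sometimes preferred for heavy-tailed data.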


References

- Pearson, Karl (1905), "Das Fehlergesetz und seine Verallgemeinerungen durch Fechner und Pearson. A Rejoinder" [The Error Law and its Generalizations by Fechner and Pearson. A Rejoinder], Biometrika, 4 (1–2): 169–212, doi:10.1093/biomet/4.1-2.169, JSTOR 2331536

- Westfall, Peter H. (2014), "Kurtosis as Peakedness, 1905 - 2014. R.I.P.", The American Statistician, 68 (3): 191–195, doi:10.1080/00031305.2014.917055, PMC 4321753, PMID 25678714

- Joanes, Derrick N.; Gill, Christine A. (1998), "Comparing measures of sample skewness and kurtosis", Journal of the Royal Statistical Society, Series D, 47 (1): 183–189, doi:10.1111/1467-9884.00122, JSTOR 2988433

- Pearson, Karl (1916), "Mathematical Contributions to the Theory of Evolution. — XIX. Second Supplement to a Memoir on Skew Variation.", Philosophical Transactions of the Royal Society of London A, 216 (546): 429–457, Bibcode:1916RSPTA.216..429P, doi:10.1098/rsta.1916.0009, JSTOR 91092

- Balanda, Kevin P.; MacGillivray, Helen L. (1988), "Kurtosis: A Critical Review", The American Statistician, 42 (2): 111–119, doi:10.2307/2684482, JSTOR 2684482

- Darlington, Richard B. (1970), "Is Kurtosis Really 'Peakedness'?", The American Statistician, 24 (2): 19–22, doi:10.1080/00031305.1970.10478885, JSTOR 2681925

- Moors, J. J. A. (1986), "The meaning of kurtosis: Darlington reexamined", The American Statistician, 40 (4): 283–284, doi:10.1080/00031305.1986.10475415, JSTOR 2684603

- Tagliani, A. (1990-12-01), "On the existence of maximum entropy distributions with four and more assigned moments", Probabilistic Engineering Mechanics, 5 (4): 167–170, Bibcode:1990PEngM...5..167T, doi:10.1016/0266-8920(90)90017-E, ISSN 0266-8920

- Rockinger, Michael; Jondeau, Eric (2002-01-01), "Entropy densities with an application to autoregressive conditional skewness and kurtosis", Journal of Econometrics, 106 (1): 119–142, doi:10.1016/S0304-4076(01)00092-6, ISSN 0304-4076

- Bradde, Serena; Bialek, William (2017-05-01), "PCA Meets RG", Journal of Statistical Physics, 167 (3): 462–475, arXiv:1610.09733, Bibcode:2017JSP...167..462B, doi:10.1007/s10955-017-1770-6, ISSN 1572-9613, PMC 6054449, PMID 30034029

- "Lepto-"

- Benveniste, Albert; Goursat, Maurice; Ruget, Gabriel (1980), "Robust identification of a nonminimum phase system: Blind adjustment of a linear equalizer in data communications", IEEE Transactions on Automatic Control, 25 (3): 385–399, doi:10.1109/tac.1980.1102343

- "platy-: definition, usage and pronunciation - YourDictionary.com", archived from the original on 2007-10-20

- Doane, D. P.; Seward, L. E. (2011), J Stat Educ, 19 (2)

- Sharma, Rajesh; Bhandari, Rajeev K. (2015), "Skewness, kurtosis and Newton's inequality", Rocky Mountain Journal of Mathematics, 45 (5): 1639–1643, arXiv:1309.2896, doi:10.1216/RMJ-2015-45-5-1639, S2CID 88513237

- Fisher, Ronald A. (1930), "The Moments of the Distribution for Normal Samples of Measures of Departure from Normality", Proceedings of the Royal Society A, 130 (812): 16–28, Bibcode:1930RSPSA.130...16F, doi:10.1098/rspa.1930.0185, hdl:2440/15205, JSTOR 95586, S2CID 121520301

- Kendall, Maurice G.; Stuart, Alan (1969), The Advanced Theory of Statistics, Volume 1: Distribution Theory (3rd ed.), London, UK: Charles Griffin & Company Limited, ISBN 0-85264-141-9

- Sandborn, Virgil A. (1959), "Measurements of Intermittency of Turbulent Motion in a Boundary Layer", Journal of Fluid Mechanics, 6 (2): 221–240, Bibcode:1959JFM.....6..221S, doi:10.1017/S0022112059000581, S2CID 121838685

- Jensen, J.; Helpern, J.; Ramani, A.; Lu, H.; Kaczynski, K. (19 May 2005), "Diffusional kurtosis imaging: The quantification of non-Gaussian water diffusion by means of magnetic resonance imaging", Magn Reson Med, 53 (6): 1432–1440, doi:10.1002/mrm.20508, PMID 15906300, S2CID 11865594

- He, Simai; Zhang, Jiawei; Zhang, Shuzhong (2010), "Bounding probability of small deviation: A fourth moment approach", Mathematics of Operations Research, 35 (1): 208–232, doi:10.1287/moor.1090.0438, S2CID 11298475

- Pan, Xunyu; Zhang, Xing; Lyu, Siwei (2012), "Exposing Image Splicing with Inconsistent Local Noise Variances", 2012 IEEE International Conference on Computational Photography (ICCP), 28–29 April 2012; Seattle, WA, USA: IEEE, pp. 1–10, doi:10.1109/ICCPhot.2012.6215223, ISBN 978-1-4673-1662-0, S2CID 14386924

- Hosking, Jonathan R. M. (1992), "Moments or L moments? An example comparing two measures of distributional shape", The American Statistician, 46 (3): 186–189, doi:10.1080/00031305.1992.10475880, JSTOR 2685210

- Hosking, Jonathan R. M. (2006), "On the characterization of distributions by their L-moments", Journal of Statistical Planning and Inference, 136 (1): 193–198, doi:10.1016/j.jspi.2004.06.004

Further reading

- Kim, Tae-Hwan; White, Halbert (2003), "On More Robust Estimation of Skewness and Kurtosis: Simulation and Application to the S&P500 Index", Finance Research Letters, 1: 56–70, doi:10.1016/S1544-6123(03)00003-5, S2CID 16913409. Alternative source (Comparison of kurtosis estimators)

- Seier, E.; Bonett, D. G. (2003), "Two families of kurtosis measures", Metrika, 58: 59–70, doi:10.1007/s001840200223, S2CID 115990880

External links

- "Excess coefficient", Encyclopedia of Mathematics, EMS Press, 2001 [1994]

- Kurtosis calculator

- Free Online Software (Calculator) computes various types of skewness and kurtosis statistics for any dataset (includes small and large sample tests).

- Kurtosis on the Earliest known uses of some of the words of mathematics

- Celebrating 100 years of Kurtosis, a history of the topic, with different measures of kurtosis.
