Binomial distribution

|

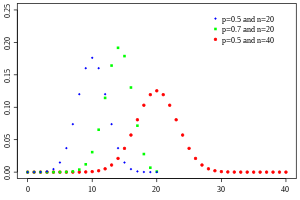

Probability mass function  | |||

|

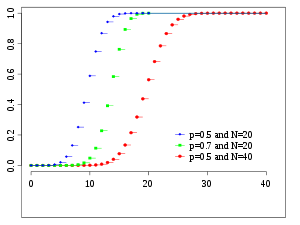

Cumulative distribution function  | |||

| Notation | |||

|---|---|---|---|

| Parameters |

– number of trials – success probability for each trial | ||

| Support | – number of successes | ||

| PMF | |||

| CDF | (theregularized incomplete beta function) | ||

| Mean | |||

| Median | or | ||

| Mode | or | ||

| Variance | |||

| Skewness | |||

| Excess kurtosis | |||

| Entropy |

inshannons.Fornats,use the natural log in the log. | ||

| MGF | |||

| CF | |||

| PGF | |||

| Fisher information |

(for fixed) | ||


Figure: Binomial distribution with n and k as in Pascal's triangle.

The probability that a ball in a Galton box with 8 layers (n = 8) ends up in the central bin (k = 4) is $70/256$.

In probability theory and statistics, the binomial distribution with parameters n and p is the discrete probability distribution of the number of successes in a sequence of n independent experiments. Each experiment asks a yes–no question and has its own Boolean-valued outcome: success (with probability p) or failure (with probability $q = 1 - p$). A single success/failure experiment is also called a Bernoulli trial or Bernoulli experiment, and a sequence of outcomes is called a Bernoulli process. For a single trial, i.e., n = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the popular binomial test of statistical significance.[1]

The binomial distribution is frequently used to model the number of successes in a sample of size n drawn with replacement from a population of size N. If the sampling is carried out without replacement, the draws are not independent, so the resulting distribution is a hypergeometric distribution, not a binomial one. However, for N much larger than n, the binomial distribution remains a good approximation and is widely used.

Definitions

Probability mass function

In general, if the random variable X follows the binomial distribution with parameters $n \in \mathbb{N}$ and $p \in [0,1]$, we write X ~ B(n, p). The probability of getting exactly k successes in n independent Bernoulli trials (with the same rate p) is given by the probability mass function:

$$f(k,n,p) = \Pr(X = k) = \binom{n}{k} p^k (1-p)^{n-k}$$

for k = 0, 1, 2, ..., n, where

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}$$

is the binomial coefficient, hence the name of the distribution. The formula can be understood as follows. $p^k q^{n-k}$ is the probability of obtaining the sequence of n independent Bernoulli trials in which the first k trials are "successes" and the remaining (last) n − k trials are "failures". The trials are independent and the probabilities stay constant between them. Therefore any sequence (permutation) of n trials with k successes (and n − k failures) has the same probability, regardless of where the successes fall within the sequence. There are $\binom{n}{k}$ such sequences, because $\binom{n}{k}$ counts the permutations (possible sequences) of n objects of two types, with k objects of one type and n − k objects of the other. Here "type" means a collection of identical objects, and the two types are "success" and "failure". The binomial distribution gives the probability of obtaining any one of these sequences. So the probability of a single sequence ($p^k q^{n-k}$) must be added $\binom{n}{k}$ times, hence $\Pr(X = k) = \binom{n}{k} p^k (1-p)^{n-k}$.

Reference tables of binomial probabilities are usually filled in only up to n/2 values. For k > n/2, the probability can be calculated from its complement as

$$f(k,n,p) = f(n-k,n,1-p).$$
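Below is a minimal Python sketch of the probability mass function. It assumes Python 3.8+ for `math.comb`, and the helper name `binom_pmf` is our own rather than a library API. It checks numerically that the probabilities sum to 1 and that the complement identity used for reference tables holds.

```python
from math import comb, isclose


def binom_pmf(k: int, n: int, p: float) -> float:
    """Pr(X = k) for X ~ B(n, p), i.e. C(n, k) * p**k * (1 - p)**(n - k)."""
    if not 0 <= k <= n:
        return 0.0  # k lies outside the support {0, ..., n}
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n, p = 10, 0.3
probs = [binom_pmf(k, n, p) for k in range(n + 1)]

# The probabilities over the whole support sum to 1 (up to rounding).
assert isclose(sum(probs), 1.0)

# Complement identity used for tables: f(k, n, p) = f(n - k, n, 1 - p).
for k in range(n + 1):
    assert isclose(binom_pmf(k, n, p), binom_pmf(n - k, n, 1 - p))
```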

Viewed as a function of k, the expression f(k, n, p) has a k value that maximizes it. This k value can be found by calculating

$$\frac{f(k+1,n,p)}{f(k,n,p)} = \frac{(n-k)p}{(k+1)(1-p)}$$

and comparing it to 1. There is always an integer M that satisfies[2]

$$(n+1)p - 1 \le M < (n+1)p.$$

f(k, n, p) is monotone increasing for k < M and monotone decreasing for k > M, except when (n + 1)p is an integer. In that case, there are two values for which f is maximal: (n + 1)p and (n + 1)p − 1. M is the most probable outcome of the Bernoulli trials (that is, the most likely one, although it can still be unlikely overall) and is called the mode.

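Equivalently, $M - p < np \le M + 1 - p$, so the mode lies within one unit of the mean np. Since M is the largest integer strictly less than (n + 1)p, we obtain $M = \lceil (n+1)p \rceil - 1$. This equals $\lfloor (n+1)p \rfloor$ whenever (n + 1)p is not an integer.[note 1]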

Example

Suppose a biased coin comes up heads with probability 0.3 when tossed. The probability of seeing exactly 4 heads in 6 tosses is

$$f(4,6,0.3) = \binom{6}{4} 0.3^4 (1-0.3)^{6-4} = 0.059535.$$
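The same arithmetic can be reproduced exactly with Python's `fractions.Fraction`, which avoids floating-point rounding. This is only an illustrative check of the worked example, not part of the original text.

```python
from fractions import Fraction
from math import comb

# Biased coin: heads with probability 0.3; exactly 4 heads in 6 tosses.
n, k = 6, 4
p = Fraction(3, 10)  # exact rational arithmetic avoids float rounding

prob = comb(n, k) * p**k * (1 - p) ** (n - k)  # 15 * 0.0081 * 0.49
print(prob, float(prob))  # 11907/200000 0.059535
```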

Cumulative distribution function

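The cumulative distribution function can be expressed as:

$$F(k;n,p) = \Pr(X \le k) = \sum_{i=0}^{\lfloor k \rfloor} \binom{n}{i} p^i (1-p)^{n-i},$$

where $\lfloor k \rfloor$ is the "floor" under k, i.e. the greatest integer less than or equal to k.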

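It can also be represented in terms of the regularized incomplete beta function, as follows:[3]

$$F(k;n,p) = \Pr(X \le k) = I_{1-p}(n-k, k+1) = (n-k)\binom{n}{k} \int_0^{1-p} t^{n-k-1}(1-t)^k \, dt,$$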

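which is equivalent to the cumulative distribution function of the F-distribution:[4]

$$F(k;n,p) = F_{F\text{-distribution}}\left(x = \frac{1-p}{p}\,\frac{k+1}{n-k};\ d_1 = 2(n-k),\ d_2 = 2(k+1)\right).$$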
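Here is a standard-library sketch of two of the CDF expressions. `binom_cdf` sums the PMF up to ⌊k⌋. `beta_integral_form` evaluates the incomplete-beta integral with a simple midpoint rule, which is our own choice of quadrature and not a recommended production method. Both function names are hypothetical.

```python
from math import comb, floor, isclose


def binom_cdf(k: float, n: int, p: float) -> float:
    """F(k; n, p) = Pr(X <= k): sum of the PMF for i = 0, ..., floor(k)."""
    upper = min(floor(k), n)
    if upper < 0:
        return 0.0
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(upper + 1))


def beta_integral_form(k: int, n: int, p: float, steps: int = 20_000) -> float:
    """(n - k) * C(n, k) * integral_0^(1-p) t^(n-k-1) (1-t)^k dt, for integer 0 <= k < n.

    The integral is approximated with the composite midpoint rule.
    """
    h = (1 - p) / steps
    total = 0.0
    for j in range(steps):
        t = (j + 0.5) * h  # midpoint of the j-th subinterval
        total += t ** (n - k - 1) * (1 - t) ** k
    return (n - k) * comb(n, k) * total * h


n, p = 10, 0.3
for k in range(n):
    assert isclose(binom_cdf(k, n, p), beta_integral_form(k, n, p), abs_tol=1e-7)
```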

Some closed-form bounds for the cumulative distribution function are given below.

Properties

Expected value and variance

If X ~ B(n, p), that is, X is a binomially distributed random variable with n the total number of experiments and p the probability of each experiment yielding a successful result, then the expected value of X is:[5]

$$\operatorname{E}[X] = np.$$

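This follows from the linearity of the expected value, together with the fact that X is the sum of n identical Bernoulli random variables, each with expected value p. In other words, if $X_1, \ldots, X_n$ are identical (and independent) Bernoulli random variables with parameter p, then $X = X_1 + \cdots + X_n$ and

$$\operatorname{E}[X] = \operatorname{E}[X_1 + \cdots + X_n] = \operatorname{E}[X_1] + \cdots + \operatorname{E}[X_n] = p + \cdots + p = np.$$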

The variance is:

$$\operatorname{Var}(X) = npq = np(1-p).$$

This similarly follows from the fact that the variance of a sum of independent random variables is the sum of the variances.
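As a quick numerical sanity check, computing the mean and variance directly from the PMF reproduces np and np(1 − p). The parameters are arbitrary and chosen only for illustration.

```python
from math import comb, isclose

n, p = 12, 0.35  # arbitrary illustrative parameters
pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

mean = sum(k * f for k, f in enumerate(pmf))                    # E[X]
variance = sum((k - mean) ** 2 * f for k, f in enumerate(pmf))  # E[(X - E[X])^2]

assert isclose(mean, n * p)                # np
assert isclose(variance, n * p * (1 - p))  # np(1 - p)
print(mean, variance)
```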

Higher moments

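The first 6 central moments, defined as $\mu_c = \operatorname{E}\left[(X - \operatorname{E}[X])^c\right]$, are given by

$$\begin{aligned}
\mu_1 &= 0, \\
\mu_2 &= np(1-p), \\
\mu_3 &= np(1-p)(1-2p), \\
\mu_4 &= np(1-p)\bigl(1 + (3n-6)p(1-p)\bigr), \\
\mu_5 &= np(1-p)(1-2p)\bigl(1 + (10n-12)p(1-p)\bigr), \\
\mu_6 &= np(1-p)\bigl(1 - 30p(1-p)(1-4p(1-p)) + 5np(1-p)(5-26p(1-p)) + 15n^2p^2(1-p)^2\bigr).
\end{aligned}$$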

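The non-central moments satisfy

$$\begin{aligned}\operatorname{E}[X] &= np, \\ \operatorname{E}[X^2] &= np(1-p) + n^2p^2,\end{aligned}$$

and in general

$$\operatorname{E}[X^c] = \sum_{k=0}^{c} \left\{ c \atop k \right\} n^{\underline{k}} p^k,$$

where $\left\{ c \atop k \right\}$ are the Stirling numbers of the second kind, and $n^{\underline{k}} = n(n-1)\cdots(n-k+1)$ is the kth falling power of n. A simple bound[8] follows from bounding the binomial moments via the higher Poisson moments:

$$\operatorname{E}[X^c] \le \left(\frac{c}{\log(c/(np)+1)}\right)^c \le (np)^c \exp\left(\frac{c^2}{2np}\right).$$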

This shows that if $c = O(\sqrt{np})$, then $\operatorname{E}[X^c]$ is at most a constant factor away from $\operatorname{E}[X]^c$.
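The Stirling-number formula for the raw moments can be checked against direct summation with exact rational arithmetic. This is a minimal sketch. `stirling2`, `falling` and the two `raw_moment_*` helpers are our own names. The recurrence S(c, k) = k·S(c − 1, k) + S(c − 1, k − 1) is the standard one for Stirling numbers of the second kind.

```python
from fractions import Fraction
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def stirling2(c, k):
    """Stirling number of the second kind {c over k}."""
    if c == k:
        return 1
    if k == 0 or k > c:
        return 0
    return k * stirling2(c - 1, k) + stirling2(c - 1, k - 1)


def falling(n, k):
    """Falling power n(n - 1)...(n - k + 1); equal to 1 when k = 0."""
    result = 1
    for j in range(k):
        result *= n - j
    return result


def raw_moment_stirling(c, n, p):
    """E[X^c] = sum_k {c over k} * n^(falling k) * p^k."""
    return sum(stirling2(c, k) * falling(n, k) * p**k for k in range(c + 1))


def raw_moment_direct(c, n, p):
    """E[X^c] = sum_x x^c Pr(X = x), summed over the support."""
    return sum(Fraction(x) ** c * comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(n + 1))


n, p = 9, Fraction(2, 7)
for c in range(7):
    assert raw_moment_stirling(c, n, p) == raw_moment_direct(c, n, p)
print(raw_moment_stirling(2, n, p))  # equals np(1 - p) + n^2 p^2
```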

Mode

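Usually the mode of a binomial B(n, p) distribution is equal to $\lfloor (n+1)p \rfloor$, where $\lfloor \cdot \rfloor$ is the floor function. However, when (n + 1)p is an integer and p is neither 0 nor 1, the distribution has two modes: (n + 1)p and (n + 1)p − 1. When p is equal to 0 or 1, the mode is 0 or n, respectively. These cases can be summarized as follows:

$$\text{mode} = \begin{cases} \lfloor (n+1)\,p \rfloor & \text{if } (n+1)p \text{ is 0 or a noninteger}, \\ (n+1)\,p \ \text{ and } \ (n+1)\,p - 1 & \text{if } (n+1)p \in \{1, \dots, n\}, \\ n & \text{if } (n+1)p = n + 1. \end{cases}$$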

Proof: Let

$$f(k) = \binom{n}{k} p^k q^{n-k}.$$

For p = 0, only f(0) has a nonzero value, with f(0) = 1. For p = 1, we find f(n) = 1 and f(k) = 0 for k ≠ n. This proves that the mode is 0 for p = 0 and n for p = 1.

Let 0 < p < 1. We find

$$\frac{f(k+1)}{f(k)} = \frac{(n-k)p}{(k+1)(1-p)}.$$

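From this follows

$$\begin{aligned} k > (n+1)p - 1 &\Rightarrow f(k+1) < f(k), \\ k = (n+1)p - 1 &\Rightarrow f(k+1) = f(k), \\ k < (n+1)p - 1 &\Rightarrow f(k+1) > f(k). \end{aligned}$$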

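So when (n + 1)p − 1 is an integer, (n + 1)p − 1 and (n + 1)p are both modes. When $(n+1)p - 1 \notin \mathbb{Z}$, only $\lfloor (n+1)p - 1 \rfloor + 1 = \lfloor (n+1)p \rfloor$ is a mode.[9]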
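The case analysis for the mode can be compared with brute-force enumeration. The sketch below uses hypothetical helper names and exact `Fraction` arithmetic, so that two tied modes compare as exactly equal. It returns every maximizer of the PMF.

```python
from fractions import Fraction
from math import comb, floor


def modes_by_enumeration(n, p):
    """Every k in 0..n that maximises Pr(X = k), using exact arithmetic."""
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    best = max(pmf)
    return [k for k, f in enumerate(pmf) if f == best]


def modes_by_formula(n, p):
    """The case analysis above: floor((n+1)p), or two modes when (n+1)p is in {1, ..., n}."""
    if p == 0:
        return [0]
    if p == 1:
        return [n]
    m = (n + 1) * p
    if m.denominator == 1:  # (n + 1)p is an integer
        return [int(m) - 1, int(m)]
    return [floor(m)]


for n in range(12):
    for j in range(11):
        p = Fraction(j, 10)
        assert modes_by_enumeration(n, p) == modes_by_formula(n, p)

print(modes_by_formula(4, Fraction(2, 5)))  # (n+1)p = 2, so two modes: [1, 2]
```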

Median

In general, there is no single formula for the median of a binomial distribution, and the median may even be non-unique. However, several special results have been established:

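- If np is an integer, then the mean, median, and mode coincide and equal np.[10][11]

- Any median m must lie within the interval $\lfloor np \rfloor \le m \le \lceil np \rceil$.[12]

- A median m cannot lie too far away from the mean: $|m - np| \le \min\{\ln 2, \max\{p, 1-p\}\}$.[13]

- The median is unique and equal to m = round(np) when $|m - np| \le \min\{p, 1-p\}$ (except for the case when p = 1/2 and n is odd).[12]

- When p is a rational number (with the exception of p = 1/2 and n odd), the median is unique.[14]

- When p = 1/2 and n is odd, any number m in the interval $\frac{1}{2}(n-1) \le m \le \frac{1}{2}(n+1)$ is a median of the binomial distribution. If p = 1/2 and n is even, then m = n/2 is the unique median.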
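The integer medians can be found by brute force. The sketch below assumes the usual definition, Pr(X ≤ m) ≥ 1/2 and Pr(X ≥ m) ≥ 1/2, and the function name is our own. It also spot-checks the interval ⌊np⌋ ≤ m ≤ ⌈np⌉ on a small grid of n and p.

```python
from fractions import Fraction
from math import ceil, comb, floor


def integer_medians(n, p):
    """All integers m with Pr(X <= m) >= 1/2 and Pr(X >= m) >= 1/2, computed exactly."""
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    half = Fraction(1, 2)
    return [m for m in range(n + 1) if sum(pmf[: m + 1]) >= half and sum(pmf[m:]) >= half]


for n in range(1, 16):
    for j in range(1, 10):
        p = Fraction(j, 10)
        medians = integer_medians(n, p)
        # Every median lies between floor(np) and ceil(np).
        assert medians and all(floor(n * p) <= m <= ceil(n * p) for m in medians)

print(integer_medians(5, Fraction(1, 2)))  # p = 1/2, n odd: [2, 3]
```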

Tail bounds

For k ≤ np, upper bounds can be derived for the lower tail of the cumulative distribution function $F(k;n,p) = \Pr(X \le k)$, the probability that there are at most k successes. Since $\Pr(X \ge k) = F(n-k;n,1-p)$, these bounds can also be seen as bounds for the upper tail of the cumulative distribution function for k ≥ np.

Hoeffding's inequality yields the simple bound

$$F(k;n,p) \le \exp\left(-2n\left(p - \frac{k}{n}\right)^2\right),$$

which is, however, not very tight. In particular, for p = 1 we have F(k; n, p) = 0 (for fixed k, n with k < n), but Hoeffding's bound evaluates to a positive constant.

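A sharper bound can be obtained from the Chernoff bound:[15]

$$F(k;n,p) \le \exp\left(-nD\left(\frac{k}{n} \,\middle\|\, p\right)\right),$$

where D(a ∥ p) is the relative entropy (or Kullback–Leibler divergence) between an a-coin and a p-coin (i.e. between the Bernoulli(a) and Bernoulli(p) distributions):

$$D(a \parallel p) = a\log\frac{a}{p} + (1-a)\log\frac{1-a}{1-p}.$$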

Asymptotically, this bound is reasonably tight; see [15] for details.

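One can also obtain lower bounds on the tail F(k; n, p), known as anti-concentration bounds. By approximating the binomial coefficient with Stirling's formula, it can be shown that[16]

$$F(k;n,p) \ge \frac{1}{\sqrt{8n\tfrac{k}{n}\left(1-\tfrac{k}{n}\right)}} \exp\left(-nD\left(\frac{k}{n} \,\middle\|\, p\right)\right),$$

which implies the simpler but looser bound

$$F(k;n,p) \ge \frac{1}{\sqrt{2n}} \exp\left(-nD\left(\frac{k}{n} \,\middle\|\, p\right)\right).$$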

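For p = 1/2 and k ≥ 3n/8 for even n, it is possible to make the denominator constant:[17]

$$F\left(k;n,\tfrac{1}{2}\right) \ge \frac{1}{15} \exp\left(-16n\left(\frac{1}{2} - \frac{k}{n}\right)^2\right).$$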
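The Hoeffding, Chernoff and Stirling-based lower bounds can be compared with the exact tail numerically. This sketch uses natural logarithms for D(a ∥ p), and the helper names are hypothetical. The anti-concentration bound is only evaluated for 0 < k < n, where a(1 − a) > 0.

```python
from math import comb, exp, log, sqrt


def lower_tail(k, n, p):
    """Exact F(k; n, p) = Pr(X <= k)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def kl_bernoulli(a, p):
    """D(a || p) in nats, with the convention 0 * log(0) = 0; assumes 0 < p < 1."""
    d = 0.0
    if a > 0:
        d += a * log(a / p)
    if a < 1:
        d += (1 - a) * log((1 - a) / (1 - p))
    return d


def hoeffding(k, n, p):
    return exp(-2 * n * (p - k / n) ** 2)


def chernoff(k, n, p):
    return exp(-n * kl_bernoulli(k / n, p))


def anti_concentration(k, n, p):
    """Stirling-based lower bound; assumes 0 < k < n."""
    a = k / n
    return exp(-n * kl_bernoulli(a, p)) / sqrt(8 * n * a * (1 - a))


n, p = 100, 0.5
for k in (20, 30, 40):
    print(k, anti_concentration(k, n, p), lower_tail(k, n, p), chernoff(k, n, p), hoeffding(k, n, p))
```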

Statistical inference

Estimation of parameters

When n is known, the parameter p can be estimated using the proportion of successes:

$$\hat{p} = \frac{x}{n}.$$

This estimator is obtained both by maximum likelihood estimation and by the method of moments. It is unbiased and has uniformly minimum variance, as proven using the Lehmann–Scheffé theorem, since it is based on a minimal sufficient and complete statistic (i.e., x). It is also consistent both in probability and in MSE.

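A closed-form Bayes estimator for p also exists when using the Beta distribution as a conjugate prior distribution. When using a general $\operatorname{Beta}(\alpha, \beta)$ as a prior, the posterior mean estimator is:

$$\hat{p}_b = \frac{x + \alpha}{n + \alpha + \beta}.$$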

The Bayes estimator is asymptotically efficient, and as the sample size approaches infinity (n → ∞), it approaches the MLE solution.[18] The Bayes estimator is biased (how much depends on the priors), admissible and consistent in probability.

For the special case of using the standard uniform distribution as a non-informative prior, $\operatorname{Beta}(\alpha = 1, \beta = 1) = U(0,1)$, the posterior mean estimator becomes:

$$\hat{p}_b = \frac{x + 1}{n + 2}.$$

(A posterior mode should just lead to the standard estimator.) This method is called the rule of succession, which was introduced in the 18th century by Pierre-Simon Laplace.

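When relying on the Jeffreys prior, the prior is $\operatorname{Beta}(\alpha = \tfrac{1}{2}, \beta = \tfrac{1}{2})$,[19] which leads to the estimator:

$$\hat{p}_{\mathrm{Jeffreys}} = \frac{x + \frac{1}{2}}{n + 1}.$$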

When estimating p with very rare events and a small n (e.g., if x = 0), the standard estimator gives $\hat{p} = 0$, which is sometimes unrealistic and undesirable. In such cases there are various alternative estimators.[20] One way is to use the Bayes estimator $\hat{p}_b$, leading to:

$$\hat{p}_b = \frac{1}{n + 2}.$$

Another method is to use the upper bound of the confidence interval obtained using the rule of three:

$$\hat{p}_{\text{rule of 3}} = \frac{3}{n}.$$
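The point estimators of this section are shown side by side in the minimal sketch below. The function name and dictionary keys are our own labels. The rule-of-three value is only intended for the case x = 0.

```python
def point_estimates(x, n, alpha=1.0, beta=1.0):
    """Point estimates of p from x successes in n trials (formulas from this section)."""
    return {
        "mle": x / n,                               # standard estimator x / n
        "bayes": (x + alpha) / (n + alpha + beta),  # posterior mean under Beta(alpha, beta)
        "laplace": (x + 1) / (n + 2),               # rule of succession, Beta(1, 1) prior
        "jeffreys": (x + 0.5) / (n + 1),            # Jeffreys prior Beta(1/2, 1/2)
        "rule_of_three": 3 / n,                     # only meant for x = 0
    }


# Rare-event case: no successes in 20 trials.
print(point_estimates(0, 20))
```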

Confidence intervals

Even for quite large values of n, the actual distribution of the mean is significantly nonnormal.[21] Because of this problem, several methods to estimate confidence intervals have been proposed.

In the equations for confidence intervals below, the variables have the following meaning:

- $n_1$ is the number of successes out of n, the total number of trials

- $\hat{p} = \frac{n_1}{n}$ is the proportion of successes

- z is the $1 - \frac{1}{2}\alpha$ quantile of a standard normal distribution (i.e., probit) corresponding to the target error rate α. For example, for a 95% confidence level the error α = 0.05, so $1 - \frac{1}{2}\alpha = 0.975$ and z = 1.96.

Wald method

$$\hat{p} \pm z \sqrt{\frac{\hat{p}(1-\hat{p})}{n}}$$

A continuity correction of 0.5/n may be added, that is, the half-width of the interval is increased by 0.5/n.

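Agresti–Coull method

$$\tilde{p} \pm z \sqrt{\frac{\tilde{p}(1-\tilde{p})}{n + z^2}}$$

Here the estimate of p is modified to

$$\tilde{p} = \frac{n_1 + \frac{1}{2}z^2}{n + z^2}.$$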

This method works well for n > 10 and $n_1 \ne 0, n$.[23] For n ≤ 10, see [24]. For $n_1 = 0, n$, use the Wilson (score) method below.

Arcsine method

$$\sin^2\left(\arcsin\left(\sqrt{\hat{p}}\right) \pm \frac{z}{2\sqrt{n}}\right)$$

Wilson (score) method

The notation in the formula below differs from the previous formulas in two respects:[26]

- Firstly, $z_x$ has a slightly different interpretation in the formula below: it has its ordinary meaning of 'the x-th quantile of the standard normal distribution', rather than being a shorthand for 'the (1 − x)-th quantile'.

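- Secondly, this formula does not use a plus-minus to define the two bounds. Instead, one may use $z = z_{\alpha/2}$ to get the lower bound, or $z = z_{1-\alpha/2}$ to get the upper bound. For example, for a 95% confidence level the error α = 0.05, so one gets the lower bound by using $z = z_{\alpha/2} = z_{0.025} = -1.96$, and the upper bound by using $z = z_{1-\alpha/2} = z_{0.975} = 1.96$.

$$\frac{\hat{p} + \frac{z^2}{2n} + z\sqrt{\frac{\hat{p}(1-\hat{p})}{n} + \frac{z^2}{4n^2}}}{1 + \frac{z^2}{n}}$$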
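Here is a compact sketch of the Wald, Agresti–Coull, arcsine and Wilson intervals. It uses `statistics.NormalDist().inv_cdf` (Python 3.8+) for the normal quantile, and the function names are hypothetical. The angle clamping in `arcsine` is our own safeguard, which the arcsine formula itself does not include. `wilson` writes the two bounds with a ± on z = z_{1−α/2}. This gives the same bounds as plugging in z_{α/2} and z_{1−α/2} separately.

```python
from math import asin, pi, sin, sqrt
from statistics import NormalDist


def z_quantile(alpha=0.05):
    """The 1 - alpha/2 quantile of the standard normal (about 1.96 for alpha = 0.05)."""
    return NormalDist().inv_cdf(1 - alpha / 2)


def wald(n1, n, alpha=0.05):
    z, p_hat = z_quantile(alpha), n1 / n
    half = z * sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half, p_hat + half


def agresti_coull(n1, n, alpha=0.05):
    z = z_quantile(alpha)
    n_tilde = n + z**2
    p_tilde = (n1 + z**2 / 2) / n_tilde  # modified estimate of p
    half = z * sqrt(p_tilde * (1 - p_tilde) / n_tilde)
    return p_tilde - half, p_tilde + half


def arcsine(n1, n, alpha=0.05):
    z = z_quantile(alpha)
    centre, half = asin(sqrt(n1 / n)), z / (2 * sqrt(n))
    # Clamp the angle to [0, pi/2] so sin^2 stays monotone (our addition).
    lo, hi = max(centre - half, 0.0), min(centre + half, pi / 2)
    return sin(lo) ** 2, sin(hi) ** 2


def wilson(n1, n, alpha=0.05):
    # z = z_{1 - alpha/2}; subtracting the root term equals using z_{alpha/2} = -z.
    z, p_hat = z_quantile(alpha), n1 / n
    centre = p_hat + z**2 / (2 * n)
    half = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    denom = 1 + z**2 / n
    return (centre - half) / denom, (centre + half) / denom


for name, method in [("Wald", wald), ("Agresti-Coull", agresti_coull), ("arcsine", arcsine), ("Wilson", wilson)]:
    lo, hi = method(7, 40)
    print(f"{name:>13}: ({lo:.3f}, {hi:.3f})")
```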

Comparison

The so-called "exact" (Clopper–Pearson) method is the most conservative.[21] (Exact does not mean perfectly accurate; rather, it indicates that the estimates will not be less conservative than the true value.)

The Wald method, although commonly recommended in textbooks, is the most biased.

Related distributions

Sums of binomials

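If X ~ B(n, p) and Y ~ B(m, p) are independent binomial variables with the same probability p, then X + Y is again a binomial variable; its distribution is Z = X + Y ~ B(n + m, p):[28]

$$\begin{aligned}\operatorname{P}(Z=k) &= \sum_{i=0}^{k}\left[\binom{n}{i}p^i(1-p)^{n-i}\right]\left[\binom{m}{k-i}p^{k-i}(1-p)^{m-k+i}\right] \\ &= \binom{n+m}{k}p^k(1-p)^{n+m-k}\end{aligned}$$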

A binomially distributed random variable X ~ B(n, p) can be considered as the sum of n Bernoulli-distributed random variables. So the sum of two binomially distributed random variables X ~ B(n, p) and Y ~ B(m, p) is equivalent to the sum of n + m Bernoulli-distributed random variables, which means Z = X + Y ~ B(n + m, p). This can also be proven directly using the addition rule.
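The addition-rule argument can be checked by convolving the two PMFs exactly. The sketch below uses hypothetical helper names. It also illustrates the variance remark about unequal success probabilities, taking p̄ to be the trial-weighted average success probability, which is our reading of that remark.

```python
from fractions import Fraction
from math import comb


def pmf(k, n, p):
    """Exact Pr(X = k) for X ~ B(n, p); zero outside the support."""
    if not 0 <= k <= n:
        return Fraction(0)
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def sum_pmf(n, m, p):
    """PMF of X + Y for independent X ~ B(n, p), Y ~ B(m, p), by direct convolution."""
    return [sum(pmf(i, n, p) * pmf(k - i, m, p) for i in range(k + 1)) for k in range(n + m + 1)]


n, m, p = 4, 6, Fraction(1, 3)
assert sum_pmf(n, m, p) == [pmf(k, n + m, p) for k in range(n + m + 1)]

# Different success probabilities: Var(X + Y) = n p1 (1 - p1) + m p2 (1 - p2) ...
p1, p2 = Fraction(1, 5), Fraction(3, 5)
var_sum = n * p1 * (1 - p1) + m * p2 * (1 - p2)
# ... is smaller than the variance of B(n + m, p_bar), p_bar = average success probability.
p_bar = (n * p1 + m * p2) / (n + m)
assert var_sum < (n + m) * p_bar * (1 - p_bar)
print(var_sum, (n + m) * p_bar * (1 - p_bar))
```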

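However, if X and Y do not have the same probability p, then the variance of the sum will be smaller than the variance of a binomial variable distributed as $B(n + m, \bar{p})$, where $\bar{p}$ is the average success probability over all n + m trials.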

Poisson binomial distribution

The binomial distribution is a special case of the Poisson binomial distribution, which is the distribution of a sum of n independent non-identical Bernoulli trials $B(p_i)$.[29]

Ratio of two binomial distributions

This result was first derived by Katz and coauthors in 1978.[30]

Let $X \sim B(n, p_1)$ and $Y \sim B(m, p_2)$ be independent. Let $T = (X/n) / (Y/m)$.

Then log(T) is approximately normally distributed with mean $\log(p_1/p_2)$ and variance $((1/p_1) - 1)/n + ((1/p_2) - 1)/m$.
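One practical use of this approximation is an approximate confidence interval for the ratio p1/p2, in the spirit of the risk-ratio intervals of Katz et al. The sketch below plugs the sample proportions into the variance formula, which is an assumption of this example. It requires x > 0 and y > 0, and the function name is hypothetical.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def log_ratio_interval(x, n, y, m, alpha=0.05):
    """Approximate CI for p1/p2 from X ~ B(n, p1), Y ~ B(m, p2); needs x > 0 and y > 0."""
    p1_hat, p2_hat = x / n, y / m
    t = p1_hat / p2_hat  # T = (X/n) / (Y/m)
    # Plug-in estimate of Var(log T) = ((1/p1) - 1)/n + ((1/p2) - 1)/m
    se = sqrt((1 / p1_hat - 1) / n + (1 / p2_hat - 1) / m)
    z = NormalDist().inv_cdf(1 - alpha / 2)
    # Symmetric interval for log(T), mapped back with exp.
    return exp(log(t) - z * se), exp(log(t) + z * se)


print(log_ratio_interval(30, 100, 15, 100))
```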

Conditional binomials

If X ~ B(n, p) and Y | X ~ B(X, q) (the conditional distribution of Y, given X), then Y is a simple binomial random variable with distribution Y ~ B(n, pq).

For example, imagine throwing n balls at a basket $U_X$, then taking the balls that hit and throwing them at another basket $U_Y$. If p is the probability of hitting $U_X$, then X ~ B(n, p) is the number of balls that hit $U_X$. If q is the probability of hitting $U_Y$, then the number of balls that hit $U_Y$ is Y ~ B(X, q), and therefore Y ~ B(n, pq).

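Since $X \sim B(n, p)$ and $Y \sim B(X, q)$, by the law of total probability,

$$\begin{aligned}\Pr[Y=m] &= \sum_{k=m}^{n}\Pr[Y=m \mid X=k]\Pr[X=k] \\[2pt] &= \sum_{k=m}^{n}\binom{n}{k}\binom{k}{m}p^k q^m (1-p)^{n-k}(1-q)^{k-m}\end{aligned}$$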

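Since $\binom{n}{k}\binom{k}{m} = \binom{n}{m}\binom{n-m}{k-m}$, the equation above can be expressed as

$$\Pr[Y=m] = \sum_{k=m}^{n}\binom{n}{m}\binom{n-m}{k-m}p^k q^m (1-p)^{n-k}(1-q)^{k-m}$$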

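Factoring $p^k = p^m p^{k-m}$ and pulling all the terms that don't depend on k out of the sum now yields

$$\begin{aligned}\Pr[Y=m] &= \binom{n}{m}p^m q^m\left(\sum_{k=m}^{n}\binom{n-m}{k-m}p^{k-m}(1-p)^{n-k}(1-q)^{k-m}\right) \\[2pt] &= \binom{n}{m}(pq)^m\left(\sum_{k=m}^{n}\binom{n-m}{k-m}\left(p(1-q)\right)^{k-m}(1-p)^{n-k}\right)\end{aligned}$$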

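After substituting i = k − m in the expression above, we get

$$\Pr[Y=m] = \binom{n}{m}(pq)^m\left(\sum_{i=0}^{n-m}\binom{n-m}{i}(p-pq)^i(1-p)^{n-m-i}\right)$$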

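Notice that the sum (in the parentheses) above equals $(p - pq + 1 - p)^{n-m}$ by the binomial theorem. Substituting this in finally yields

$$\begin{aligned}\Pr[Y=m] &= \binom{n}{m}(pq)^m(p - pq + 1 - p)^{n-m} \\[4pt] &= \binom{n}{m}(pq)^m(1-pq)^{n-m}\end{aligned}$$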

and thus Y ~ B(n, pq), as desired.
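The identity can also be confirmed numerically with exact fractions. `pmf` and `thinned_pmf` are our own helper names, and the parameter values are arbitrary.

```python
from fractions import Fraction
from math import comb


def pmf(k, n, p):
    """Exact Pr(X = k) for X ~ B(n, p), 0 <= k <= n."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def thinned_pmf(m, n, p, q):
    """Pr[Y = m] via the law of total probability: sum over k of Pr[Y = m | X = k] Pr[X = k]."""
    return sum(pmf(m, k, q) * pmf(k, n, p) for k in range(m, n + 1))


n, p, q = 7, Fraction(2, 5), Fraction(3, 4)
for m in range(n + 1):
    assert thinned_pmf(m, n, p, q) == pmf(m, n, p * q)  # Y ~ B(n, pq)
```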

Bernoulli distribution

The Bernoulli distribution is a special case of the binomial distribution, where n = 1. Symbolically, X ~ B(1, p) has the same meaning as X ~ Bernoulli(p). Conversely, any binomial distribution, B(n, p), is the distribution of the sum of n independent Bernoulli trials, Bernoulli(p), each with the same probability p.[31]

Normal approximation

If n is large enough, then the skew of the distribution is not too great. In this case a reasonable approximation to B(n, p) is given by the normal distribution

$$\mathcal{N}(np,\, np(1-p)),$$

and this basic approximation can be improved in a simple way by using a suitable continuity correction. The basic approximation generally improves as n increases (at least 20) and is better when p is not near to 0 or 1.[32] Various rules of thumb may be used to decide whether n is large enough, and p is far enough from the extremes of zero or one:

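- One rule[32] is that for n > 5 the normal approximation is adequate if the absolute value of the skewness is strictly less than 0.3; that is, if

$$\frac{|1-2p|}{\sqrt{np(1-p)}} = \frac{1}{\sqrt{n}}\left|\sqrt{\frac{1-p}{p}} - \sqrt{\frac{p}{1-p}}\right| < 0.3.$$

This can be made precise using the Berry–Esseen theorem.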

- A stronger rule states that the normal approximation is appropriate only if everything within 3 standard deviations of its mean is within the range of possible values; that is, only if

$$\mu \pm 3\sigma = np \pm 3\sqrt{np(1-p)} \in (0, n).$$

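- This 3-standard-deviation rule is equivalent to the following conditions, which also imply a version of the first rule above (with the threshold 1/3 in place of 0.3):

$$n > 9\left(\frac{1-p}{p}\right) \quad\text{and}\quad n > 9\left(\frac{p}{1-p}\right).$$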

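The rule $np \pm 3\sqrt{np(1-p)} \in (0, n)$ is equivalent to requiring that

$$np - 3\sqrt{np(1-p)} > 0 \quad\text{and}\quad np + 3\sqrt{np(1-p)} < n.$$

Moving terms around yields:

$$np > 3\sqrt{np(1-p)} \quad\text{and}\quad n(1-p) > 3\sqrt{np(1-p)}.$$

Since 0 < p < 1, we can square both sides and divide by the respective factors $np^2$ and $n(1-p)^2$ to obtain the desired conditions:

$$n > 9\left(\frac{1-p}{p}\right) \quad\text{and}\quad n > 9\left(\frac{p}{1-p}\right).$$

Notice that these conditions automatically imply that n > 9 (multiply them together). On the other hand, taking square roots again and dividing by 3,

$$\frac{\sqrt{n}}{3} > \sqrt{\frac{1-p}{p}} > 0 \quad\text{and}\quad \frac{\sqrt{n}}{3} > \sqrt{\frac{p}{1-p}} > 0.$$

Subtracting the second set of inequalities from the first one yields:

$$\frac{\sqrt{n}}{3} > \sqrt{\frac{1-p}{p}} - \sqrt{\frac{p}{1-p}} > -\frac{\sqrt{n}}{3},$$

and so the absolute skewness satisfies

$$\frac{1}{\sqrt{n}}\left|\sqrt{\frac{1-p}{p}} - \sqrt{\frac{p}{1-p}}\right| < \frac{1}{3},$$

which is the first rule with the threshold 1/3 in place of 0.3.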

- Another commonly used rule is that both values np and n(1 − p) must be greater than or equal to 5.[33][34] However, the specific number varies from source to source and depends on how good an approximation one wants. In particular, if one uses 9 instead of 5, the rule implies the results stated in the previous paragraphs.

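Assume that both values np and n(1 − p) are greater than 9. Since 0 < p < 1, we easily have that

$$np > 9 > 9(1-p) \quad\text{and}\quad n(1-p) > 9 > 9p.$$

We now only have to divide by the respective factors p and 1 − p to deduce the alternative form of the 3-standard-deviation rule:

$$n > 9\left(\frac{1-p}{p}\right) \quad\text{and}\quad n > 9\left(\frac{p}{1-p}\right).$$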
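The rules of thumb above can be written as simple predicates. This is a sketch with hypothetical function names, assuming 0 < p < 1. The usage loop prints the verdicts for a few arbitrary parameter pairs.

```python
from math import sqrt


def skewness_rule(n, p):
    """First rule: for n > 5, |skewness| = |1 - 2p| / sqrt(np(1-p)) < 0.3."""
    return n > 5 and abs(1 - 2 * p) / sqrt(n * p * (1 - p)) < 0.3


def three_sigma_rule(n, p):
    """np +/- 3 sqrt(np(1-p)) must lie strictly inside (0, n)."""
    sd = sqrt(n * p * (1 - p))
    return n * p - 3 * sd > 0 and n * p + 3 * sd < n


def three_sigma_rule_alt(n, p):
    """Equivalent form: n > 9(1-p)/p and n > 9p/(1-p)."""
    return n > 9 * (1 - p) / p and n > 9 * p / (1 - p)


def np_rule(n, p, threshold=9):
    """Both np and n(1-p) exceed the threshold (5 is also common; 9 implies the 3-sigma rule)."""
    return n * p > threshold and n * (1 - p) > threshold


for n, p in [(30, 0.5), (30, 0.1), (200, 0.07)]:
    print(n, p, skewness_rule(n, p), three_sigma_rule(n, p), three_sigma_rule_alt(n, p))
```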

The following is an example of applying a continuity correction. Suppose one wishes to calculate Pr(X ≤ 8) for a binomial random variable X. If Y has a distribution given by the normal approximation, then Pr(X ≤ 8) is approximated by Pr(Y ≤ 8.5). The addition of 0.5 is the continuity correction; the uncorrected normal approximation gives considerably less accurate results.
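The effect of the continuity correction can be seen numerically. The parameters n = 20 and p = 0.5 are illustrative choices of ours, since the text does not fix them. The normal CDF comes from `statistics.NormalDist`.

```python
from math import comb, sqrt
from statistics import NormalDist

n, p = 20, 0.5  # illustrative parameters, not from the text
exact = sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(9))  # Pr(X <= 8)

approx = NormalDist(mu=n * p, sigma=sqrt(n * p * (1 - p)))
corrected = approx.cdf(8.5)    # with the continuity correction
uncorrected = approx.cdf(8.0)  # without it

print(f"exact {exact:.4f}, corrected {corrected:.4f}, uncorrected {uncorrected:.4f}")
```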

This approximation, known as the de Moivre–Laplace theorem, is a huge time-saver when undertaking calculations by hand (exact calculations with large n are very onerous). Historically, it was the first use of the normal distribution, introduced in Abraham de Moivre's book The Doctrine of Chances in 1738. Nowadays, it can be seen as a consequence of the central limit theorem, since B(n, p) is a sum of n independent, identically distributed Bernoulli variables with parameter p. This fact is the basis of a hypothesis test, a "proportion z-test", for the value of p using x/n, the sample proportion and estimator of p, in a common test statistic.[35]

For example, suppose one randomly samples n people out of a large population and asks them whether they agree with a certain statement. The proportion of people who agree will of course depend on the sample. If groups of n people were sampled repeatedly and truly randomly, the proportions would follow an approximate normal distribution. Its mean would equal the true proportion p of agreement in the population, and its standard deviation would be

$$\sigma = \sqrt{\frac{p(1-p)}{n}}.$$

Poisson approximation

The binomial distribution converges towards the Poisson distribution as the number of trials goes to infinity while the product np converges to a finite limit. Therefore, the Poisson distribution with parameter λ = np can be used as an approximation to B(n, p) if n is sufficiently large and p is sufficiently small. According to rules of thumb, this approximation is good if n ≥ 20 and p ≤ 0.05 such that np ≤ 1,[36] or if n > 50 and p < 0.1 such that np < 5,[37] or if n ≥ 100 and np ≤ 10.[38][39]

Concerning the accuracy of the Poisson approximation, see Novak,[40] ch. 4, and references therein.
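The two PMFs can be compared quickly in code. We chose n = 100 and p = 0.03 to satisfy the last rule of thumb above (n ≥ 100, np ≤ 10), and the helper names are hypothetical.

```python
from math import comb, exp, factorial


def binom_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, lam):
    return exp(-lam) * lam**k / factorial(k)


n, p = 100, 0.03  # n >= 100 and np = 3 <= 10
lam = n * p
max_gap = max(abs(binom_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(n + 1))
print(f"largest pointwise difference: {max_gap:.4f}")
```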

Limiting distributions

- Poisson limit theorem: As n approaches ∞ and p approaches 0 with the product np held fixed, the Binomial(n, p) distribution approaches the Poisson distribution with expected value λ = np.[38]

- de Moivre–Laplace theorem: As n approaches ∞ while p remains fixed, the distribution of

$$\frac{X - np}{\sqrt{np(1-p)}}$$

approaches the normal distribution with expected value 0 and variance 1. This result is sometimes loosely stated by saying that the distribution of X is asymptotically normal with expected value np and variance np(1 − p). This result is a specific case of the central limit theorem.

Beta distribution

The binomial distribution and beta distribution are different views of the same model of repeated Bernoulli trials. The binomial distribution is the PMF of k successes given n independent events, each with a probability p of success. Mathematically, when α = k + 1 and β = n − k + 1, the beta distribution and the binomial distribution are related by a factor of n + 1:

$$\operatorname{Beta}(p;\alpha;\beta) = (n+1)\,B(k;n;p).$$

Beta distributions also provide a family of prior probability distributions for binomial distributions in Bayesian inference:[41]

$$P(p;\alpha,\beta) = \frac{p^{\alpha-1}(1-p)^{\beta-1}}{\operatorname{B}(\alpha,\beta)}.$$

Given a uniform prior, the posterior distribution for the probability of success p, given n independent events with k observed successes, is a beta distribution.[42]

Computational methods

Random number generation

Methods for random number generation where the marginal distribution is a binomial distribution are well-established.[43][44] One way to generate random samples from a binomial distribution is to use an inversion algorithm. To do so, one first calculates the probability Pr(X = k) for all values k from 0 through n. (These probabilities should sum to a value close to one, in order to encompass the entire sample space.) Then one uses a pseudorandom number generator to produce samples uniformly between 0 and 1. Each uniform sample is transformed into a discrete value by using the probabilities calculated in the first step.
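Below is a minimal sketch of the inversion method described above, using only the standard library. The function name and the seed are our own choices. Tabulating the CDF costs O(n) once, and each draw is then a binary search. For large n, the specialised algorithms cited above are usually preferred.

```python
import random
from bisect import bisect_right
from itertools import accumulate
from math import comb


def make_binomial_sampler(n, p, rng=None):
    """Inversion sampler for B(n, p): tabulate Pr(X = k) for k = 0..n once, then invert the CDF."""
    rng = rng if rng is not None else random.Random()
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    cdf = list(accumulate(pmf))
    # Round-off can leave the total slightly below 1; raising the last entry
    # guarantees every uniform draw u < 1 maps to some k <= n.
    cdf[-1] = max(cdf[-1], 1.0)

    def sample():
        u = rng.random()  # uniform on [0, 1)
        # Smallest k with F(k) > u, so Pr(sample = k) = F(k) - F(k - 1).
        return bisect_right(cdf, u)

    return sample


draw = make_binomial_sampler(10, 0.3, rng=random.Random(12345))
samples = [draw() for _ in range(20_000)]
print(sum(samples) / len(samples))  # should be close to n * p = 3
```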

History

This distribution was derived by Jacob Bernoulli. He considered the case where p = r/(r + s), where p is the probability of success and r and s are positive integers. Blaise Pascal had earlier considered the case where p = 1/2, tabulating the corresponding binomial coefficients in what is now recognized as Pascal's triangle.[45]

See also

- Logistic regression

- Multinomial distribution

- Negative binomial distribution

- Beta-binomial distribution

- Binomial measure, an example of a multifractal measure.[46]

- Statistical mechanics

- Piling-up lemma, the resulting probability when XOR-ing independent Boolean variables

References

- Westland, J. Christopher (2020). Audit Analytics: Data Science for the Accounting Profession. Chicago, IL, USA: Springer. p. 53. ISBN 978-3-030-49091-1.

- Feller, W. (1968). An Introduction to Probability Theory and Its Applications (Third ed.). New York: Wiley. p. 151 (theorem in section VI.3).

- Wadsworth, G. P. (1960). Introduction to Probability and Random Variables. New York: McGraw-Hill. p. 52.

- Jowett, G. H. (1963). "The Relationship Between the Binomial and F Distributions". Journal of the Royal Statistical Society, Series D. 13 (1): 55–57. doi:10.2307/2986663. JSTOR 2986663.

- See Proof Wiki

- Knoblauch, Andreas (2008), "Closed-Form Expressions for the Moments of the Binomial Probability Distribution", SIAM Journal on Applied Mathematics, 69 (1): 197–204, doi:10.1137/070700024, JSTOR 40233780

- Nguyen, Duy (2021), "A probabilistic approach to the moments of binomial random variables and application", The American Statistician, 75 (1): 101–103, doi:10.1080/00031305.2019.1679257, S2CID 209923008

- Ahle, Thomas D. (2022), "Sharp and Simple Bounds for the raw Moments of the Binomial and Poisson Distributions", Statistics & Probability Letters, 182: 109306, arXiv:2103.17027, doi:10.1016/j.spl.2021.109306

- See also Nicolas, André (January 7, 2019). "Finding mode in Binomial distribution". Stack Exchange.

- Neumann, P. (1966). "Über den Median der Binomial- und Poissonverteilung". Wissenschaftliche Zeitschrift der Technischen Universität Dresden (in German). 19: 29–33.

- Lord, Nick (July 2010). "Binomial averages when the mean is an integer", The Mathematical Gazette 94, 331–332.

- Kaas, R.; Buhrman, J. M. (1980). "Mean, Median and Mode in Binomial Distributions". Statistica Neerlandica. 34 (1): 13–18. doi:10.1111/j.1467-9574.1980.tb00681.x.

- Hamza, K. (1995). "The smallest uniform upper bound on the distance between the mean and the median of the binomial and Poisson distributions". Statistics & Probability Letters. 23: 21–25. doi:10.1016/0167-7152(94)00090-U.

- Nowakowski, Sz. (2021). "Uniqueness of a Median of a Binomial Distribution with Rational Probability". Advances in Mathematics: Scientific Journal. 10 (4): 1951–1958. arXiv:2004.03280. doi:10.37418/amsj.10.4.9. ISSN 1857-8365. S2CID 215238991.

- Arratia, R.; Gordon, L. (1989). "Tutorial on large deviations for the binomial distribution". Bulletin of Mathematical Biology. 51 (1): 125–131. doi:10.1007/BF02458840. PMID 2706397. S2CID 189884382.

- Robert B. Ash (1990). Information Theory. Dover Publications. p. 115. ISBN 9780486665214.

- Matoušek, J.; Vondrak, J. "The Probabilistic Method" (PDF). Lecture notes. Archived (PDF) from the original on 2022-10-09.

- Wilcox, Rand R. (1979). "Estimating the Parameters of the Beta-Binomial Distribution". Educational and Psychological Measurement. 39 (3): 527–535. doi:10.1177/001316447903900302. ISSN 0013-1644. S2CID 121331083.

- Marko Lalovic (https://stats.stackexchange.com/users/105848/marko-lalovic), Jeffreys prior for binomial likelihood, URL (version: 2019-03-04): https://stats.stackexchange.com/q/275608

- Razzaghi, Mehdi (2002). "On the estimation of binomial success probability with zero occurrence in sample". Journal of Modern Applied Statistical Methods. 1 (2): 326–332. doi:10.22237/jmasm/1036110000.

- Brown, Lawrence D.; Cai, T. Tony; DasGupta, Anirban (2001), "Interval Estimation for a Binomial Proportion", Statistical Science, 16 (2): 101–133, CiteSeerX 10.1.1.323.7752, doi:10.1214/ss/1009213286, retrieved 2015-01-05

- Agresti, Alan; Coull, Brent A. (May 1998), "Approximate is better than 'exact' for interval estimation of binomial proportions" (PDF), The American Statistician, 52 (2): 119–126, doi:10.2307/2685469, JSTOR 2685469, retrieved 2015-01-05

- Gulotta, Joseph. "Agresti-Coull Interval Method". pellucid.atlassian.net. Retrieved 18 May 2021.

- "Confidence intervals". itl.nist.gov. Retrieved 18 May 2021.

- Pires, M. A. (2002). "Confidence intervals for a binomial proportion: comparison of methods and software evaluation" (PDF). In Klinke, S.; Ahrend, P.; Richter, L. (eds.). Proceedings of the Conference CompStat 2002. Short Communications and Posters. Archived (PDF) from the original on 2022-10-09.

- Wilson, Edwin B. (June 1927), "Probable inference, the law of succession, and statistical inference" (PDF), Journal of the American Statistical Association, 22 (158): 209–212, doi:10.2307/2276774, JSTOR 2276774, archived from the original (PDF) on 2015-01-13, retrieved 2015-01-05

- "Confidence intervals". Engineering Statistics Handbook. NIST/Sematech. 2012. Retrieved 2017-07-23.

- Dekking, F. M.; Kraaikamp, C.; Lopuhaä, H. P.; Meester, L. E. (2005). A Modern Introduction to Probability and Statistics (1 ed.). Springer-Verlag London. ISBN 978-1-84628-168-6.

- Wang, Y. H. (1993). "On the number of successes in independent trials" (PDF). Statistica Sinica. 3 (2): 295–312. Archived from the original (PDF) on 2016-03-03.

- Katz, D.; et al. (1978). "Obtaining confidence intervals for the risk ratio in cohort studies". Biometrics. 34 (3): 469–474. doi:10.2307/2530610. JSTOR 2530610.

- Taboga, Marco. "Lectures on Probability Theory and Mathematical Statistics". statlect.com. Retrieved 18 December 2017.

- Box, Hunter and Hunter (1978). Statistics for experimenters. Wiley. p. 130. ISBN 9780471093152.

- Chen, Zac (2011). H2 Mathematics Handbook (1 ed.). Singapore: Educational Publishing House. p. 350. ISBN 9789814288484.

- "6.4: Normal Approximation to the Binomial Distribution - Statistics LibreTexts". 2023-05-29. Archived from the original on 2023-05-29. Retrieved 2023-10-07.

- NIST/SEMATECH, "7.2.4. Does the proportion of defectives meet requirements?" e-Handbook of Statistical Methods.

- "12.4 - Approximating the Binomial Distribution | STAT 414". 2023-03-28. Archived from the original on 2023-03-28. Retrieved 2023-10-08.

- Chen, Zac (2011). H2 mathematics handbook (1 ed.). Singapore: Educational publishing house. p. 348. ISBN 9789814288484.

- NIST/SEMATECH, "6.3.3.1. Counts Control Charts", e-Handbook of Statistical Methods.

- "The Connection Between the Poisson and Binomial Distributions". 2023-03-13. Archived from the original on 2023-03-13. Retrieved 2023-10-08.

- Novak S.Y. (2011) Extreme value methods with applications to finance. London: CRC/ Chapman & Hall/Taylor & Francis. ISBN 9781-43983-5746.

- MacKay, David (2003). Information Theory, Inference and Learning Algorithms. Cambridge University Press; First Edition. ISBN 978-0521642989.

- "Beta distribution".

- Devroye, Luc (1986) Non-Uniform Random Variate Generation, New York: Springer-Verlag. (See especially Chapter X, Discrete Univariate Distributions)

- Kachitvichyanukul, V.; Schmeiser, B. W. (1988). "Binomial random variate generation". Communications of the ACM. 31 (2): 216–222. doi:10.1145/42372.42381. S2CID 18698828.

- Katz, Victor (2009). "14.3: Elementary Probability". A History of Mathematics: An Introduction. Addison-Wesley. p. 491. ISBN 978-0-321-38700-4.

- Mandelbrot, B. B., Fisher, A. J., & Calvet, L. E. (1997). A multifractal model of asset returns. 3.2 The Binomial Measure is the Simplest Example of a Multifractal

- Note 1: Except the trivial case of p = 0, which must be checked separately.

Further reading

- Hirsch, Werner Z. (1957). "Binomial Distribution—Success or Failure, How Likely Are They?". Introduction to Modern Statistics. New York: MacMillan. pp. 140–153.

- Neter, John; Wasserman, William; Whitmore, G. A. (1988). Applied Statistics (Third ed.). Boston: Allyn & Bacon. pp. 185–192. ISBN 0-205-10328-6.

External links

- Interactive graphic: Univariate Distribution Relationships

- Binomial distribution formula calculator

- Difference of two binomial variables: X − Y or |X − Y|

- Querying the binomial probability distribution in WolframAlpha

- Confidence (credible) intervals for binomial probability, p: online calculator available at causaScientia.org
