Character (computing)

In computer and machine-based telecommunications terminology, a character is a unit of information that roughly corresponds to a grapheme, grapheme-like unit, or symbol, such as in an alphabet or syllabary in the written form of a natural language.[1]

Examples of characters include letters, numerical digits, common punctuation marks (such as "." or "-"), and whitespace. The concept also includes control characters, which do not correspond to visible symbols but rather to instructions to format or process the text. Examples of control characters include carriage return and tab, as well as other instructions to printers or other devices that display or otherwise process text.

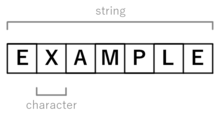

Characters are typically combined into strings.

Historically, the term character was used to denote a specific number of contiguous bits. While a character is most commonly assumed to refer to 8 bits (one byte) today, other options like the 6-bit character code were once popular,[2][3] and the 5-bit Baudot code has been used in the past as well. The term has even been applied to 4 bits[4] with only 16 possible values. Modern systems typically use variable-length sequences of these fixed-size pieces: for instance, UTF-8 uses a varying number of 8-bit code units to encode a "code point", and Unicode uses a varying number of code points to form what a reader perceives as a single "character".

Encoding

Computers and communication equipment represent characters using a character encoding that assigns each character to something, typically an integer quantity represented by a sequence of digits, that can be stored or transmitted through a network. Two common examples are ASCII and the UTF-8 encoding for Unicode. While most character encodings map characters to numbers and/or bit sequences, Morse code instead represents characters using a series of electrical impulses of varying length.

Terminology


Historically, the term character has been widely used by industry professionals to refer to an encoded character, often as defined by the programming language or API. Likewise, character set has been widely used to refer to a specific repertoire of characters that have been mapped to specific bit sequences or numerical codes. The term glyph is used to describe a particular visual appearance of a character. Many computer fonts consist of glyphs that are indexed by the numerical code of the corresponding character.

With the advent and widespread acceptance of Unicode[5] and of coded character sets that are not tied to a particular bit width, a character is increasingly being seen as a unit of information, independent of any particular visual manifestation. The ISO/IEC 10646 (Unicode) International Standard defines character, or abstract character, as "a member of a set of elements used for the organization, control, or representation of data". Unicode's definition supplements this with explanatory notes that encourage the reader to differentiate between characters, graphemes, and glyphs, among other things. Such differentiation is an instance of the wider theme of the separation of presentation and content.

For example, the Hebrew letter aleph ("א") is often used by mathematicians to denote certain kinds of infinity (ℵ), but it is also used in ordinary Hebrew text. In Unicode, these two uses are considered different characters and have two different Unicode numerical identifiers ("code points"), though they may be rendered identically. Conversely, the Chinese logogram for water ("水") may have a slightly different appearance in Japanese texts than it does in Chinese texts, and local typefaces may reflect this. Nonetheless, in Unicode they are considered the same character and share the same code point.

The Unicode standard also differentiates between these abstract characters and coded characters or encoded characters that have been paired with numeric codes that facilitate their representation in computers.

Combining character

Combining characters are also addressed by Unicode. For instance, Unicode allocates a code point to each of

- 'i' (U+0069),

- the combining diaeresis (U+0308), and

- 'ï' (U+00EF).

This makes it possible to code the middle character of the word 'naïve' either as a single character 'ï' or as a combination of the character 'i' with the combining diaeresis (U+0069 LATIN SMALL LETTER I + U+0308 COMBINING DIAERESIS); this is also rendered as 'ï'.

These are considered canonically equivalent by the Unicode standard.

char

A char in the C programming language is a data type with the size of exactly one byte,[6][7] which in turn is defined to be large enough to contain any member of the "basic execution character set". The exact number of bits can be checked via the CHAR_BIT macro. By far the most common size is 8 bits, and the POSIX standard requires it to be 8 bits.[8] In newer C standards, char is required to hold UTF-8 code units,[6][7] which requires a minimum size of 8 bits.

A Unicode code point may require as many as 21 bits.[9] This will not fit in a char on most systems, so more than one is used for some of them, as in the variable-length encoding UTF-8, where each code point takes 1 to 4 bytes. Furthermore, a "character" may require more than one code point (for instance with combining characters), depending on what is meant by the word "character".

The fact that a character was historically stored in a single byte led to the two terms ("char" and "character") being used interchangeably in most documentation. This often makes the documentation confusing or misleading when multibyte encodings such as UTF-8 are used, and has led to inefficient and incorrect implementations of string manipulation functions (such as confusing the number of bytes in a string with the number of characters it contains). Modern POSIX documentation attempts to fix this, defining "character" as a sequence of one or more bytes representing a single graphic symbol or control code, and attempts to use "byte" when referring to char data.[10][11] However, it still contains errors such as defining an array of char as a character array (rather than a byte array).[12]

Unicode can also be stored in strings made up of code units that are larger than char. These are called "wide characters". The original C type was called wchar_t. Because some platforms define wchar_t as 16 bits and others as 32 bits, recent versions have added char16_t and char32_t. Even then, the objects being stored might not be characters; for instance, variable-length UTF-16 is often stored in arrays of char16_t, where a code point above U+FFFF occupies two units (a surrogate pair).

Other languages also have a char type. Some, such as C++, use at least 8 bits like C.[7] Others, such as Java, use 16 bits for char in order to represent UTF-16 values.

See also

- Character literal

- Character (symbol)

- Fill character

- Combining character

- Universal Character Set characters

- Homoglyph

References

- ^ "Definition of CHARACTER". Merriam-Webster. Retrieved 2018-04-01.

- ^ Dreyfus, Phillippe (1958). "System design of the Gamma 60". Managing Requirements Knowledge, International Workshop on, Los Angeles. New York. pp. 130–133. doi:10.1109/AFIPS.1958.32.

[…] Internal data code is used: Quantitative (numerical) data are coded in a 4-bit decimal code; qualitative (alpha-numerical) data are coded in a 6-bit alphanumerical code. The internal instruction code means that the instructions are coded in straight binary code.

As to the internal information length, the information quantum is called a "catena," and it is composed of 24 bits representing either 6 decimal digits, or 4 alphanumerical characters. This quantum must contain a multiple of 4 and 6 bits to represent a whole number of decimal or alphanumeric characters. Twenty-four bits was found to be a good compromise between the minimum 12 bits, which would lead to a too-low transfer flow from a parallel readout core memory, and 36 bits or more, which was judged as too large an information quantum. The catena is to be considered as the equivalent of a character in variable word length machines, but it cannot be called so, as it may contain several characters. It is transferred in series to and from the main memory.

Not wanting to call a "quantum" a word, or a set of characters a letter, (a word is a word, and a quantum is something else), a new word was made, and it was called a "catena." It is an English word and exists in Webster's although it does not in French. Webster's definition of the word catena is, "a connected series;" therefore, a 24-bit information item. The word catena will be used hereafter.

The internal code, therefore, has been defined. Now what are the external data codes? These depend primarily upon the information handling device involved. The Gamma 60 is designed to handle information relevant to any binary coded structure. Thus an 80-column punched card is considered as a 960-bit information item; 12 rows multiplied by 80 columns equals 960 possible punches; is stored as an exact image in 960 magnetic cores of the main memory with 2 card columns occupying one catena. […]

- ^ Blaauw, Gerrit Anne; Brooks Jr., Frederick Phillips; Buchholz, Werner (1962), "4: Natural Data Units" (PDF), in Buchholz, Werner (ed.), Planning a Computer System – Project Stretch, McGraw-Hill Book Company, Inc. / The Maple Press Company, York, PA., pp. 39–40, LCCN 61-10466, archived (PDF) from the original on 2017-04-03, retrieved 2017-04-03,

[…] Terms used here to describe the structure imposed by the machine design, in addition to bit, are listed below.

Byte denotes a group of bits used to encode a character, or the number of bits transmitted in parallel to and from input-output units. A term other than character is used here because a given character may be represented in different applications by more than one code, and different codes may use different numbers of bits (i.e., different byte sizes). In input-output transmission the grouping of bits may be completely arbitrary and have no relation to actual characters. (The term is coined from bite, but respelled to avoid accidental mutation to bit.)

A word consists of the number of data bits transmitted in parallel from or to memory in one memory cycle. Word size is thus defined as a structural property of the memory. (The term catena was coined for this purpose by the designers of the Bull GAMMA 60 computer.)

Block refers to the number of words transmitted to or from an input-output unit in response to a single input-output instruction. Block size is a structural property of an input-output unit; it may have been fixed by the design or left to be varied by the program. […]

- ^ "Terms And Abbreviations". MCS-4 Assembly Language Programming Manual - The INTELLEC 4 Microcomputer System Programming Manual (PDF) (Preliminary ed.). Santa Clara, California, US: Intel Corporation. December 1973. pp. v, 2-6. MCS-030-1273-1. Archived (PDF) from the original on 2020-03-01. Retrieved 2020-03-02.

[…] Bit - The smallest unit of information which can be represented. (A bit may be in one of two states I 0 or 1). […] Byte - A group of 8 contiguous bits occupying a single memory location. […] Character - A group of 4 contiguous bits of data. […]

(NB. This Intel 4004 manual uses the term character referring to 4-bit rather than 8-bit data entities. Intel had already switched to the more common term nibble for 4-bit entities in 1974, in the documentation for the succeeding 4040 processor.)

- ^ Davis, Mark (2008-05-05). "Moving to Unicode 5.1". Google Blog. Retrieved 2008-09-28.

- ^ a b "§5.2.4.2.1 Sizes of integer types <limits.h> / §6.2.5 Types / §6.5.3.4 The sizeof and _Alignof operators". ISO/IEC 9899:2018 - Information technology -- Programming languages -- C.

- ^ a b c "§1.7 The C++ memory model / §5.3.3 Sizeof". ISO/IEC 14882:2011.

- ^ "<limits.h>". pubs.opengroup.org. Retrieved 2018-04-01.

- ^ "Glossary of Unicode Terms – Code Point". Retrieved 2019-05-14.

- ^ "POSIX definition of Character".

- ^ "POSIX strlen reference".

- ^ "POSIX definition of Character Array".

External links

- Characters: A Brief Introduction by The Linux Information Project (LINFO)

- ISO/IEC TR 15285:1998 summarizes the ISO/IEC's character model, focusing on terminology definitions and differentiating between characters and glyphs