Eigenvalues and eigenvectors

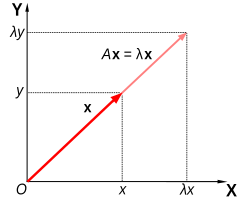

In linear algebra, it is often important to know which vectors have their directions unchanged by a given linear transformation. An eigenvector (/ˈaɪɡən-/ EYE-gən-) or characteristic vector is such a vector. More precisely, an eigenvector $\mathbf{v}$ of a linear transformation $T$ is scaled by a constant factor $\lambda$ when the linear transformation is applied to it: $T\mathbf{v} = \lambda\mathbf{v}$. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor $\lambda$.

Geometrically, vectors are multi-dimensional quantities with magnitude and direction, often pictured as arrows. A linear transformation rotates, stretches, or shears the vectors upon which it acts. Its eigenvectors are those vectors that are only stretched, with neither rotation nor shear. The corresponding eigenvalue is the factor by which an eigenvector is stretched or squished. If the eigenvalue is negative, the eigenvector's direction is reversed.[1]

The eigenvectors and eigenvalues of a linear transformation serve to characterize it, and so they play important roles in all the areas where linear algebra is applied, from geology to quantum mechanics. In particular, it is often the case that a system is represented by a linear transformation whose outputs are fed as inputs to the same transformation (feedback). In such an application, the largest eigenvalue is of particular importance, because it governs the long-term behavior of the system after many applications of the linear transformation, and the associated eigenvector is the steady state of the system.
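The feedback behavior described above can be illustrated with a small sketch. The 2-by-2 matrix below is a hypothetical two-state system chosen only for illustration (it is not taken from the article), and the helper names `mat_vec` and `iterate` are likewise made up for this example. Repeatedly applying the matrix and renormalizing drives an arbitrary starting vector toward the eigenvector of the largest eigenvalue.

```python
import math

def mat_vec(A, v):
    """Multiply a square matrix (list of rows) by a vector (list)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def iterate(A, v, steps):
    """Apply A repeatedly, renormalizing each time to keep the vector at unit length."""
    for _ in range(steps):
        w = mat_vec(A, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

# Hypothetical system: each column sums to 1, so the largest eigenvalue is 1.
A = [[0.9, 0.5],
     [0.1, 0.5]]

state = iterate(A, [1.0, 0.0], 100)
# Ratio of output to input along the converged direction estimates the eigenvalue.
growth = mat_vec(A, state)[0] / state[0]
print(state, growth)
```

For this particular matrix the second eigenvalue is 0.4, so its contribution shrinks by that factor at every step, which is why a modest number of iterations suffices.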

Definition

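Consider a matrix $A$ and a nonzero vector $\mathbf{v}$. If applying $A$ to $\mathbf{v}$ (denoted by $A\mathbf{v}$) simply scales $\mathbf{v}$ by a factor of $\lambda$, where $\lambda$ is a scalar, then $\mathbf{v}$ is an eigenvector of $A$, and $\lambda$ is the corresponding eigenvalue. This relationship can be expressed as: $A\mathbf{v} = \lambda\mathbf{v}$.[2]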
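As a minimal sketch of this definition, the following checks whether a candidate vector and scalar satisfy the eigenvalue relation for a small matrix. The matrix and the helper names (`mat_vec`, `is_eigenpair`) are illustrative assumptions, not part of any library.

```python
def mat_vec(A, v):
    """Multiply a square matrix (list of rows) by a vector (list)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def is_eigenpair(A, v, lam, tol=1e-12):
    """Return True if v is nonzero and A v equals lam * v within tol."""
    if all(abs(x) <= tol for x in v):
        return False  # the zero vector is excluded by definition
    Av = mat_vec(A, v)
    return all(abs(p - lam * x) <= tol for p, x in zip(Av, v))

# Illustrative matrix.
A = [[2, 1],
     [1, 2]]

print(is_eigenpair(A, [1, 1], 3))   # A maps [1, 1] to [3, 3]
print(is_eigenpair(A, [1, 0], 2))   # A maps [1, 0] to [2, 1], which is not a multiple
```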

There is a direct correspondence between $n$-by-$n$ square matrices and linear transformations from an $n$-dimensional vector space into itself, given any basis of the vector space. Hence, in a finite-dimensional vector space, it is equivalent to define eigenvalues and eigenvectors using either the language of matrices, or the language of linear transformations.[3][4]

If the vector space $V$ on which a linear transformation $T$ acts is finite-dimensional, the eigenvalue equation $T\mathbf{v} = \lambda\mathbf{v}$ is equivalent to[5]

$$A\mathbf{u} = \lambda\mathbf{u},$$

where $A$ is the matrix representation of $T$ and $\mathbf{u}$ is the coordinate vector of $\mathbf{v}$.

Overview

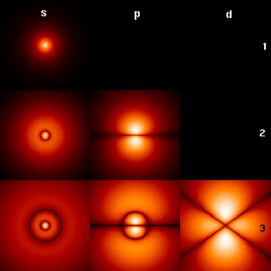

Eigenvalues and eigenvectors feature prominently in the analysis of linear transformations. The prefix eigen- is adopted from the German word eigen (cognate with the English word own) for 'proper', 'characteristic', 'own'.[6][7] Originally used to study principal axes of the rotational motion of rigid bodies, eigenvalues and eigenvectors have a wide range of applications, for example in stability analysis, vibration analysis, atomic orbitals, facial recognition, and matrix diagonalization.

In essence, an eigenvector $\mathbf{v}$ of a linear transformation $T$ is a nonzero vector that, when $T$ is applied to it, does not change direction. Applying $T$ to the eigenvector only scales the eigenvector by the scalar value $\lambda$, called an eigenvalue. This condition can be written as the equation

$$T(\mathbf{v}) = \lambda\mathbf{v},$$

referred to as the eigenvalue equation or eigenequation. In general, $\lambda$ may be any scalar. For example, $\lambda$ may be negative, in which case the eigenvector reverses direction as part of the scaling, or it may be zero or complex.

The example here, based on the Mona Lisa, provides a simple illustration. Each point on the painting can be represented as a vector pointing from the center of the painting to that point. The linear transformation in this example is called a shear mapping. Points in the top half are moved to the right, and points in the bottom half are moved to the left, proportional to how far they are from the horizontal axis that goes through the middle of the painting. The vectors pointing to each point in the original image are therefore tilted right or left, and made longer or shorter by the transformation. Points along the horizontal axis do not move at all when this transformation is applied. Therefore, any vector that points directly to the right or left with no vertical component is an eigenvector of this transformation, because the mapping does not change its direction. Moreover, these eigenvectors all have an eigenvalue equal to one, because the mapping does not change their length either.

Linear transformations can take many different forms, mapping vectors in a variety of vector spaces, so the eigenvectors can also take many forms. For example, the linear transformation could be a differential operator like $\tfrac{d}{dx}$, in which case the eigenvectors are functions called eigenfunctions that are scaled by that differential operator, such as

$$\frac{d}{dx}e^{\lambda x} = \lambda e^{\lambda x}.$$

Alternatively, the linear transformation could take the form of an $n$ by $n$ matrix, in which case the eigenvectors are $n$ by 1 matrices. If the linear transformation is expressed in the form of an $n$ by $n$ matrix $A$, then the eigenvalue equation for a linear transformation above can be rewritten as the matrix multiplication

$$A\mathbf{v} = \lambda\mathbf{v},$$

where the eigenvector $\mathbf{v}$ is an $n$ by 1 matrix. For a matrix, eigenvalues and eigenvectors can be used to decompose the matrix, for example by diagonalizing it.

Eigenvalues and eigenvectors give rise to many closely related mathematical concepts, and the prefix eigen- is applied liberally when naming them:

- The set of all eigenvectors of a linear transformation, each paired with its corresponding eigenvalue, is called the eigensystem of that transformation.[8][9]

- The set of all eigenvectors of $T$ corresponding to the same eigenvalue, together with the zero vector, is called an eigenspace, or the characteristic space of $T$ associated with that eigenvalue.[10]

- If a set of eigenvectors of $T$ forms a basis of the domain of $T$, then this basis is called an eigenbasis.

History

Eigenvalues are often introduced in the context of linear algebra or matrix theory. Historically, however, they arose in the study of quadratic forms and differential equations.

In the 18th century, Leonhard Euler studied the rotational motion of a rigid body, and discovered the importance of the principal axes.[a] Joseph-Louis Lagrange realized that the principal axes are the eigenvectors of the inertia matrix.[11]

In the early 19th century, Augustin-Louis Cauchy saw how their work could be used to classify the quadric surfaces, and generalized it to arbitrary dimensions.[12] Cauchy also coined the term racine caractéristique (characteristic root), for what is now called eigenvalue; his term survives in characteristic equation.[b]

Later, Joseph Fourier used the work of Lagrange and Pierre-Simon Laplace to solve the heat equation by separation of variables in his famous 1822 book Théorie analytique de la chaleur.[13] Charles-François Sturm developed Fourier's ideas further, and brought them to the attention of Cauchy, who combined them with his own ideas and arrived at the fact that real symmetric matrices have real eigenvalues.[12] This was extended by Charles Hermite in 1855 to what are now called Hermitian matrices.[14]

Around the same time, Francesco Brioschi proved that the eigenvalues of orthogonal matrices lie on the unit circle,[12] and Alfred Clebsch found the corresponding result for skew-symmetric matrices.[14] Finally, Karl Weierstrass clarified an important aspect in the stability theory started by Laplace, by realizing that defective matrices can cause instability.[12]

In the meantime, Joseph Liouville studied eigenvalue problems similar to those of Sturm; the discipline that grew out of their work is now called Sturm–Liouville theory.[15] Schwarz studied the first eigenvalue of Laplace's equation on general domains towards the end of the 19th century, while Poincaré studied Poisson's equation a few years later.[16]

At the start of the 20th century, David Hilbert studied the eigenvalues of integral operators by viewing the operators as infinite matrices.[17] He was the first to use the German word eigen, which means "own",[7] to denote eigenvalues and eigenvectors in 1904,[c] though he may have been following a related usage by Hermann von Helmholtz. For some time, the standard term in English was "proper value", but the more distinctive term "eigenvalue" is the standard today.[18]

The first numerical algorithm for computing eigenvalues and eigenvectors appeared in 1929, when Richard von Mises published the power method. One of the most popular methods today, the QR algorithm, was proposed independently by John G. F. Francis[19] and Vera Kublanovskaya[20] in 1961.[21][22]

Eigenvalues and eigenvectors of matrices

Eigenvalues and eigenvectors are often introduced to students in the context of linear algebra courses focused on matrices.[23][24] Furthermore, linear transformations over a finite-dimensional vector space can be represented using matrices,[3][4] which is especially common in numerical and computational applications.[25]

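Consider $n$-dimensional vectors that are formed as a list of $n$ scalars, such as the three-dimensional vectors

$$\mathbf{x} = \begin{bmatrix} 1 \\ -3 \\ 4 \end{bmatrix} \quad\text{and}\quad \mathbf{y} = \begin{bmatrix} -20 \\ 60 \\ -80 \end{bmatrix}.$$

These vectors are said to be scalar multiples of each other, or parallel or collinear, if there is a scalar $\lambda$ such that

$$\mathbf{x} = \lambda\mathbf{y}.$$

In this case, $\lambda = -\tfrac{1}{20}$.

Now consider the linear transformation of $n$-dimensional vectors defined by an $n$ by $n$ matrix $A$,

$$A\mathbf{v} = \mathbf{w},$$

or

$$\begin{bmatrix} A_{11} & A_{12} & \cdots & A_{1n} \\ A_{21} & A_{22} & \cdots & A_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ A_{n1} & A_{n2} & \cdots & A_{nn} \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = \begin{bmatrix} w_1 \\ w_2 \\ \vdots \\ w_n \end{bmatrix},$$

where, for each row,

$$w_i = A_{i1}v_1 + A_{i2}v_2 + \cdots + A_{in}v_n = \sum_{j=1}^{n} A_{ij}v_j.$$

If it occurs that $\mathbf{v}$ and $\mathbf{w}$ are scalar multiples, that is if

$$A\mathbf{v} = \mathbf{w} = \lambda\mathbf{v}, \tag{1}$$

then $\mathbf{v}$ is an eigenvector of the linear transformation $A$ and the scale factor $\lambda$ is the eigenvalue corresponding to that eigenvector. Equation (1) is the eigenvalue equation for the matrix $A$.

Equation (1) can be stated equivalently as

$$(A - \lambda I)\mathbf{v} = \mathbf{0}, \tag{2}$$

where $I$ is the $n$ by $n$ identity matrix and $\mathbf{0}$ is the zero vector.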

Eigenvalues and the characteristic polynomial

Equation (2) has a nonzero solution $\mathbf{v}$ if and only if the determinant of the matrix $(A - \lambda I)$ is zero. Therefore, the eigenvalues of $A$ are values of $\lambda$ that satisfy the equation

$$\det(A - \lambda I) = 0. \tag{3}$$

Using the Leibniz formula for determinants, the left-hand side of equation (3) is a polynomial function of the variable $\lambda$ and the degree of this polynomial is $n$, the order of the matrix $A$. Its coefficients depend on the entries of $A$, except that its term of degree $n$ is always $(-1)^n\lambda^n$. This polynomial is called the characteristic polynomial of $A$. Equation (3) is called the characteristic equation or the secular equation of $A$.

The fundamental theorem of algebra implies that the characteristic polynomial of an $n$-by-$n$ matrix $A$, being a polynomial of degree $n$, can be factored into the product of $n$ linear terms,

$$\det(A - \lambda I) = (\lambda_1 - \lambda)(\lambda_2 - \lambda)\cdots(\lambda_n - \lambda), \tag{4}$$

where each $\lambda_i$ may be real but in general is a complex number. The numbers $\lambda_1, \lambda_2, \ldots, \lambda_n$, which may not all have distinct values, are roots of the polynomial and are the eigenvalues of $A$.

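As a brief example, which is described in more detail in the examples section later, consider the matrix

$$A = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}.$$

Taking the determinant of $(A - \lambda I)$, the characteristic polynomial of $A$ is

$$\det(A - \lambda I) = \begin{vmatrix} 2-\lambda & 1 \\ 1 & 2-\lambda \end{vmatrix} = 3 - 4\lambda + \lambda^2.$$

Setting the characteristic polynomial equal to zero, it has roots at $\lambda = 1$ and $\lambda = 3$, which are the two eigenvalues of $A$. The eigenvectors corresponding to each eigenvalue can be found by solving for the components of $\mathbf{v}$ in the equation $(A - \lambda I)\mathbf{v} = \mathbf{0}$. In this example, the eigenvectors are any nonzero scalar multiples of

$$\mathbf{v}_{\lambda=1} = \begin{bmatrix} 1 \\ -1 \end{bmatrix}, \quad \mathbf{v}_{\lambda=3} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}.$$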
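For a 2-by-2 matrix the characteristic polynomial is the quadratic $\lambda^2 - \operatorname{tr}(A)\lambda + \det(A)$, so its roots follow from the quadratic formula. The sketch below assumes this 2-by-2 special case only, and the function name `eigenvalues_2x2` is made up for illustration.

```python
import cmath

def eigenvalues_2x2(A):
    """Roots of the characteristic polynomial lambda^2 - tr(A)*lambda + det(A)."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    # cmath.sqrt also handles a negative discriminant (complex eigenvalues).
    disc = cmath.sqrt(trace * trace - 4 * det)
    return (trace - disc) / 2, (trace + disc) / 2

# A symmetric matrix with trace 4 and determinant 3.
lam_small, lam_large = eigenvalues_2x2([[2, 1], [1, 2]])
print(lam_small, lam_large)
```

The results are returned as Python complex numbers even when the eigenvalues are real, which keeps the function uniform across both cases.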

If the entries of the matrix $A$ are all real numbers, then the coefficients of the characteristic polynomial will also be real numbers, but the eigenvalues may still have nonzero imaginary parts. The entries of the corresponding eigenvectors therefore may also have nonzero imaginary parts. Similarly, the eigenvalues may be irrational numbers even if all the entries of $A$ are rational numbers or even if they are all integers. However, if the entries of $A$ are all algebraic numbers, which include the rationals, the eigenvalues must also be algebraic numbers.

The non-real roots of a polynomial with real coefficients can be grouped into pairs of complex conjugates, namely with the two members of each pair having imaginary parts that differ only in sign and the same real part. If the degree is odd, then by the intermediate value theorem at least one of the roots is real. Therefore, any real matrix with odd order has at least one real eigenvalue, whereas a real matrix with even order may not have any real eigenvalues. The eigenvectors associated with these complex eigenvalues are also complex and also appear in complex conjugate pairs.
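As a worked illustration of a real matrix of even order with no real eigenvalues, consider the rotation of the plane by a quarter turn (an example chosen here for illustration, not taken from the text above):

$$R = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}, \qquad \det(R - \lambda I) = \begin{vmatrix} -\lambda & -1 \\ 1 & -\lambda \end{vmatrix} = \lambda^2 + 1.$$

The roots are the conjugate pair $\lambda = \pm i$, and the corresponding eigenvectors are also complex conjugates of each other:

$$R\begin{bmatrix} 1 \\ -i \end{bmatrix} = \begin{bmatrix} i \\ 1 \end{bmatrix} = i\begin{bmatrix} 1 \\ -i \end{bmatrix}, \qquad R\begin{bmatrix} 1 \\ i \end{bmatrix} = \begin{bmatrix} -i \\ 1 \end{bmatrix} = -i\begin{bmatrix} 1 \\ i \end{bmatrix}.$$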

Spectrum of a matrix

The spectrum of a matrix is the list of eigenvalues, repeated according to multiplicity; in an alternative notation, it is the set of eigenvalues together with their multiplicities.

An important quantity associated with the spectrum is the maximum absolute value of any eigenvalue. This is known as the spectral radius of the matrix.

Algebraic multiplicity

Let $\lambda_i$ be an eigenvalue of an $n$ by $n$ matrix $A$. The algebraic multiplicity $\mu_A(\lambda_i)$ of the eigenvalue is its multiplicity as a root of the characteristic polynomial, that is, the largest integer $k$ such that $(\lambda - \lambda_i)^k$ divides that polynomial evenly.[10][26][27]

Suppose a matrix $A$ has dimension $n$ and $d \le n$ distinct eigenvalues. Whereas equation (4) factors the characteristic polynomial of $A$ into the product of $n$ linear terms with some terms potentially repeating, the characteristic polynomial can also be written as the product of $d$ terms each corresponding to a distinct eigenvalue and raised to the power of the algebraic multiplicity,

$$\det(A - \lambda I) = (\lambda_1 - \lambda)^{\mu_A(\lambda_1)}(\lambda_2 - \lambda)^{\mu_A(\lambda_2)}\cdots(\lambda_d - \lambda)^{\mu_A(\lambda_d)}.$$

If $d = n$ then the right-hand side is the product of $n$ linear terms and this is the same as equation (4). The size of each eigenvalue's algebraic multiplicity is related to the dimension $n$ as

$$1 \le \mu_A(\lambda_i) \le n, \qquad \mu_A = \sum_{i=1}^{d}\mu_A(\lambda_i) = n.$$

If $\mu_A(\lambda_i) = 1$, then $\lambda_i$ is said to be a simple eigenvalue.[27] If $\mu_A(\lambda_i)$ equals the geometric multiplicity of $\lambda_i$, $\gamma_A(\lambda_i)$, defined in the next section, then $\lambda_i$ is said to be a semisimple eigenvalue.

Eigenspaces, geometric multiplicity, and the eigenbasis for matrices

Given a particular eigenvalue $\lambda$ of the $n$ by $n$ matrix $A$, define the set $E$ to be all vectors $\mathbf{v}$ that satisfy equation (2),

$$E = \{\mathbf{v} : (A - \lambda I)\mathbf{v} = \mathbf{0}\}.$$

On one hand, this set is precisely the kernel or nullspace of the matrix $(A - \lambda I)$. On the other hand, by definition, any nonzero vector that satisfies this condition is an eigenvector of $A$ associated with $\lambda$. So, the set $E$ is the union of the zero vector with the set of all eigenvectors of $A$ associated with $\lambda$, and $E$ equals the nullspace of $(A - \lambda I)$. $E$ is called the eigenspace or characteristic space of $A$ associated with $\lambda$.[28][10] In general $\lambda$ is a complex number and the eigenvectors are complex $n$ by 1 matrices. A property of the nullspace is that it is a linear subspace, so $E$ is a linear subspace of $\mathbb{C}^n$.

Because the eigenspace $E$ is a linear subspace, it is closed under addition. That is, if two vectors $\mathbf{u}$ and $\mathbf{v}$ belong to the set $E$, written $\mathbf{u}, \mathbf{v} \in E$, then $(\mathbf{u} + \mathbf{v}) \in E$ or equivalently $A(\mathbf{u} + \mathbf{v}) = \lambda(\mathbf{u} + \mathbf{v})$. This can be checked using the distributive property of matrix multiplication. Similarly, because $E$ is a linear subspace, it is closed under scalar multiplication. That is, if $\mathbf{v} \in E$ and $\alpha$ is a complex number, $(\alpha\mathbf{v}) \in E$ or equivalently $A(\alpha\mathbf{v}) = \lambda(\alpha\mathbf{v})$. This can be checked by noting that multiplication of complex matrices by complex numbers is commutative. As long as $\mathbf{u} + \mathbf{v}$ and $\alpha\mathbf{v}$ are not zero, they are also eigenvectors of $A$ associated with $\lambda$.

The dimension of the eigenspace $E$ associated with $\lambda$, or equivalently the maximum number of linearly independent eigenvectors associated with $\lambda$, is referred to as the eigenvalue's geometric multiplicity $\gamma_A(\lambda)$. Because $E$ is also the nullspace of $(A - \lambda I)$, the geometric multiplicity of $\lambda$ is the dimension of the nullspace of $(A - \lambda I)$, also called the nullity of $(A - \lambda I)$, which relates to the dimension and rank of $(A - \lambda I)$ as

$$\gamma_A(\lambda) = n - \operatorname{rank}(A - \lambda I).$$
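The rank relation above suggests a direct way to compute a geometric multiplicity when the eigenvalue is known exactly. The following is a minimal sketch using exact rational arithmetic; the helper names `rank` and `geometric_multiplicity` and the two test matrices are assumptions made for illustration.

```python
from fractions import Fraction

def rank(M):
    """Rank of a matrix via Gaussian elimination in exact rational arithmetic."""
    rows = [[Fraction(x) for x in row] for row in M]
    r = 0
    n_cols = len(rows[0]) if rows else 0
    for col in range(n_cols):
        # Find a row at or below position r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def geometric_multiplicity(A, lam):
    """n - rank(A - lam*I); lam is assumed to be an exact eigenvalue of A."""
    n = len(A)
    shifted = [[A[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
    return n - rank(shifted)

# Illustrative matrices: a shear and the identity, both with the single eigenvalue 1.
shear = [[1, 1], [0, 1]]
identity = [[1, 0], [0, 1]]
print(geometric_multiplicity(shear, 1), geometric_multiplicity(identity, 1))
```

Exact fractions are used because, in floating-point arithmetic, deciding whether a pivot is zero requires a tolerance, and the computed rank can depend on that choice.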

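Because of the definition of eigenvalues and eigenvectors, an eigenvalue's geometric multiplicity must be at least one, that is, each eigenvalue has at least one associated eigenvector. Furthermore, an eigenvalue's geometric multiplicity cannot exceed its algebraic multiplicity. Additionally, recall that an eigenvalue's algebraic multiplicity cannot exceed $n$:

$$1 \le \gamma_A(\lambda) \le \mu_A(\lambda) \le n.$$

To prove the inequality $\gamma_A(\lambda) \le \mu_A(\lambda)$, consider how the definition of geometric multiplicity implies the existence of $\gamma_A(\lambda)$ orthonormal eigenvectors $\mathbf{v}_1, \ldots, \mathbf{v}_{\gamma_A(\lambda)}$, such that $A\mathbf{v}_k = \lambda\mathbf{v}_k$. We can therefore find a (unitary) matrix $V$ whose first $\gamma_A(\lambda)$ columns are these eigenvectors, and whose remaining columns can be any orthonormal set of $n - \gamma_A(\lambda)$ vectors orthogonal to these eigenvectors of $A$. Then $V$ has full rank and is therefore invertible. Evaluating $D := V^{*}AV$, where $V^{*}$ is the conjugate transpose of $V$, we get a matrix whose top left block is the diagonal matrix $\lambda I_{\gamma_A(\lambda)}$. This can be seen by evaluating what the left-hand side does to the first $\gamma_A(\lambda)$ standard basis vectors. By reorganizing to $AV = VD$ and adding $-\xi V$ on both sides, we get $(A - \xi I)V = V(D - \xi I)$ since $I$ commutes with $V$. In other words, $A - \xi I$ is similar to $D - \xi I$, and $\det(A - \xi I) = \det(D - \xi I)$. But from the definition of $D$, we know that $\det(D - \xi I)$ contains a factor $(\xi - \lambda)^{\gamma_A(\lambda)}$, which means that the algebraic multiplicity of $\lambda$ must satisfy $\mu_A(\lambda) \ge \gamma_A(\lambda)$.

Suppose $A$ has $d \le n$ distinct eigenvalues $\lambda_1, \ldots, \lambda_d$, where the geometric multiplicity of $\lambda_i$ is $\gamma_A(\lambda_i)$. The total geometric multiplicity of $A$,

$$\gamma_A = \sum_{i=1}^{d}\gamma_A(\lambda_i), \qquad d \le \gamma_A \le n,$$

is the dimension of the sum of all the eigenspaces of $A$'s eigenvalues, or equivalently the maximum number of linearly independent eigenvectors of $A$. If $\gamma_A = n$, then

- The direct sum of the eigenspaces of all of $A$'s eigenvalues is the entire vector space $\mathbb{C}^n$.

- A basis of $\mathbb{C}^n$ can be formed from $n$ linearly independent eigenvectors of $A$; such a basis is called an eigenbasis.

- Any vector in $\mathbb{C}^n$ can be written as a linear combination of eigenvectors of $A$.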

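Additional properties of eigenvalues

Let $A$ be an arbitrary $n \times n$ matrix of complex numbers with eigenvalues $\lambda_1, \ldots, \lambda_n$. Each eigenvalue appears $\mu_A(\lambda_i)$ times in this list, where $\mu_A(\lambda_i)$ is the eigenvalue's algebraic multiplicity. The following are properties of this matrix and its eigenvalues:

- The trace of $A$, defined as the sum of its diagonal elements, is also the sum of all eigenvalues,[29][30][31] $\operatorname{tr}(A) = \sum_{i=1}^{n} a_{ii} = \sum_{i=1}^{n}\lambda_i = \lambda_1 + \lambda_2 + \cdots + \lambda_n$.

- The determinant of $A$ is the product of all its eigenvalues,[29][32][33] $\det(A) = \prod_{i=1}^{n}\lambda_i = \lambda_1\lambda_2\cdots\lambda_n$.

- The eigenvalues of the $k$th power of $A$, i.e., the eigenvalues of $A^k$, for any positive integer $k$, are $\lambda_1^k, \ldots, \lambda_n^k$.

- The matrix $A$ is invertible if and only if every eigenvalue is nonzero.

- If $A$ is invertible, then the eigenvalues of $A^{-1}$ are $\tfrac{1}{\lambda_1}, \ldots, \tfrac{1}{\lambda_n}$ and each eigenvalue's geometric multiplicity coincides. Moreover, since the characteristic polynomial of the inverse is the reciprocal polynomial of the original, the eigenvalues share the same algebraic multiplicity.

- If $A$ is equal to its conjugate transpose $A^{*}$, or equivalently if $A$ is Hermitian, then every eigenvalue is real. The same is true of any symmetric real matrix.

- If $A$ is not only Hermitian but also positive-definite, positive-semidefinite, negative-definite, or negative-semidefinite, then every eigenvalue is positive, non-negative, negative, or non-positive, respectively.

- If $A$ is unitary, every eigenvalue has absolute value $|\lambda_i| = 1$.

- If $A$ is an $n \times n$ matrix and $\{\lambda_1, \ldots, \lambda_k\}$ are its eigenvalues, then the eigenvalues of the matrix $I + A$ (where $I$ is the identity matrix) are $\{\lambda_1 + 1, \ldots, \lambda_k + 1\}$. Moreover, if $\alpha \in \mathbb{C}$, the eigenvalues of $\alpha I + A$ are $\{\lambda_1 + \alpha, \ldots, \lambda_k + \alpha\}$. More generally, for a polynomial $P$ the eigenvalues of the matrix $P(A)$ are $\{P(\lambda_1), \ldots, P(\lambda_k)\}$.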
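Some of the properties listed above can be spot-checked numerically. The sketch below uses an illustrative 2-by-2 matrix whose eigenvalues are 1 and 3 (an assumption made for this example) and confirms the trace, determinant, and power rules for its cube; `mat_mul` is a made-up helper.

```python
def mat_mul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

A = [[2, 1],
     [1, 2]]              # illustrative matrix with eigenvalues 1 and 3
A3 = mat_mul(A, mat_mul(A, A))

# If the eigenvalues of A are 1 and 3, those of A^3 should be 1 and 27.
trace_A3 = A3[0][0] + A3[1][1]                       # expected: 1 + 27
det_A3 = A3[0][0] * A3[1][1] - A3[0][1] * A3[1][0]   # expected: 1 * 27
print(A3, trace_A3, det_A3)
```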

Left and right eigenvectors

Many disciplines traditionally represent vectors as matrices with a single column rather than as matrices with a single row. For that reason, the word "eigenvector" in the context of matrices almost always refers to a right eigenvector, namely a column vector that right multiplies the $n \times n$ matrix $A$ in the defining equation, equation (1),

$$A\mathbf{v} = \lambda\mathbf{v}.$$

The eigenvalue and eigenvector problem can also be defined for row vectors that left multiply matrix $A$. In this formulation, the defining equation is

$$\mathbf{u}A = \kappa\mathbf{u},$$

where $\kappa$ is a scalar and $\mathbf{u}$ is a $1 \times n$ matrix. Any row vector $\mathbf{u}$ satisfying this equation is called a left eigenvector of $A$ and $\kappa$ is its associated eigenvalue. Taking the transpose of this equation,

$$A^{\mathsf T}\mathbf{u}^{\mathsf T} = \kappa\mathbf{u}^{\mathsf T}.$$

Comparing this equation to equation (1), it follows immediately that a left eigenvector of $A$ is the same as the transpose of a right eigenvector of $A^{\mathsf T}$, with the same eigenvalue. Furthermore, since the characteristic polynomial of $A^{\mathsf T}$ is the same as the characteristic polynomial of $A$, the left and right eigenvectors of $A$ are associated with the same eigenvalues.

Diagonalization and the eigendecomposition

Suppose the eigenvectors of $A$ form a basis, or equivalently $A$ has $n$ linearly independent eigenvectors $\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n$ with associated eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_n$. The eigenvalues need not be distinct. Define a square matrix $Q$ whose columns are the $n$ linearly independent eigenvectors of $A$,

$$Q = \begin{bmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \cdots & \mathbf{v}_n \end{bmatrix}.$$

Since each column of $Q$ is an eigenvector of $A$, right multiplying $A$ by $Q$ scales each column of $Q$ by its associated eigenvalue,

$$AQ = \begin{bmatrix} \lambda_1\mathbf{v}_1 & \lambda_2\mathbf{v}_2 & \cdots & \lambda_n\mathbf{v}_n \end{bmatrix}.$$

With this in mind, define a diagonal matrix $\Lambda$ where each diagonal element $\Lambda_{ii}$ is the eigenvalue associated with the $i$th column of $Q$. Then

$$AQ = Q\Lambda.$$

Because the columns of $Q$ are linearly independent, $Q$ is invertible. Right multiplying both sides of the equation by $Q^{-1}$,

$$A = Q\Lambda Q^{-1},$$

or by instead left multiplying both sides by $Q^{-1}$,

$$Q^{-1}AQ = \Lambda.$$

$A$ can therefore be decomposed into a matrix composed of its eigenvectors, a diagonal matrix with its eigenvalues along the diagonal, and the inverse of the matrix of eigenvectors. This is called the eigendecomposition and it is a similarity transformation. Such a matrix $A$ is said to be similar to the diagonal matrix $\Lambda$ or diagonalizable. The matrix $Q$ is the change of basis matrix of the similarity transformation. Essentially, the matrices $A$ and $\Lambda$ represent the same linear transformation expressed in two different bases. The eigenvectors are used as the basis when representing the linear transformation as $\Lambda$.
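A small sketch can make the decomposition concrete. It assumes an illustrative pair of eigenvectors, $[1, -1]$ and $[1, 1]$, with eigenvalues 1 and 3, and rebuilds the matrix from $Q$, $\Lambda$, and $Q^{-1}$; the helpers `mat_mul` and `inverse_2x2` are made up for this example and cover only the 2-by-2 case.

```python
from fractions import Fraction

def mat_mul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def inverse_2x2(M):
    """Exact inverse of an invertible 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

Q = [[1, 1],
     [-1, 1]]             # columns are the eigenvectors [1, -1] and [1, 1]
Lam = [[1, 0],
       [0, 3]]            # matching eigenvalues on the diagonal

A = mat_mul(mat_mul(Q, Lam), inverse_2x2(Q))         # Q * Lam * Q^-1
Lam_back = mat_mul(mat_mul(inverse_2x2(Q), A), Q)    # Q^-1 * A * Q
print(A, Lam_back)
```

The order of the columns of $Q$ must match the order of the diagonal entries of $\Lambda$; swapping one without the other produces a different matrix.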

Conversely, suppose a matrix $A$ is diagonalizable. Let $P$ be a non-singular square matrix such that $P^{-1}AP$ is some diagonal matrix $D$. Left multiplying both by $P$, $AP = PD$. Each column of $P$ must therefore be an eigenvector of $A$ whose eigenvalue is the corresponding diagonal element of $D$. Since the columns of $P$ must be linearly independent for $P$ to be invertible, there exist $n$ linearly independent eigenvectors of $A$. It then follows that the eigenvectors of $A$ form a basis if and only if $A$ is diagonalizable.

A matrix that is not diagonalizable is said to be defective. For defective matrices, the notion of eigenvectors generalizes to generalized eigenvectors and the diagonal matrix of eigenvalues generalizes to the Jordan normal form. Over an algebraically closed field, any matrix $A$ has a Jordan normal form and therefore admits a basis of generalized eigenvectors and a decomposition into generalized eigenspaces.

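Variational characterization

In the Hermitian case, eigenvalues can be given a variational characterization. The largest eigenvalue of a Hermitian matrix $H$ is the maximum value of the quadratic form $\mathbf{x}^{*}H\mathbf{x} / \mathbf{x}^{*}\mathbf{x}$ over nonzero vectors $\mathbf{x}$, where $\mathbf{x}^{*}$ denotes the conjugate transpose. A value of $\mathbf{x}$ that realizes that maximum is an eigenvector.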
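The variational characterization can be explored numerically in the real symmetric 2-by-2 case by sampling directions on the unit circle. This is a brute-force sketch for illustration only, not a practical algorithm; the matrix and the function name `rayleigh` are assumptions made for this example.

```python
import math

def rayleigh(H, x):
    """Quotient x^T H x / x^T x for a real symmetric H and nonzero real x."""
    Hx = [sum(h * xi for h, xi in zip(row, x)) for row in H]
    return sum(a * b for a, b in zip(x, Hx)) / sum(a * a for a in x)

H = [[2, 1],
     [1, 2]]              # illustrative symmetric matrix

# Sample directions at 0.1 degree steps and keep the one maximizing the quotient.
angles = [2 * math.pi * k / 3600 for k in range(3600)]
best = max(angles, key=lambda t: rayleigh(H, [math.cos(t), math.sin(t)]))
max_value = rayleigh(H, [math.cos(best), math.sin(best)])
print(best, max_value)
```

For this matrix the maximizing direction has equal components, which is the direction of the eigenvector belonging to the larger eigenvalue.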

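Matrix examples

Two-dimensional matrix example

Consider the matrix

$$A = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}.$$

The figure on the right shows the effect of this transformation on point coordinates in the plane. The eigenvectors $\mathbf{v}$ of this transformation satisfy equation (1), and the values of $\lambda$ for which the determinant of the matrix $(A - \lambda I)$ equals zero are the eigenvalues.

Taking the determinant to find the characteristic polynomial of $A$,

$$\det(A - \lambda I) = \begin{vmatrix} 2-\lambda & 1 \\ 1 & 2-\lambda \end{vmatrix} = 3 - 4\lambda + \lambda^2 = (\lambda - 3)(\lambda - 1).$$

Setting the characteristic polynomial equal to zero, it has roots at $\lambda = 1$ and $\lambda = 3$, which are the two eigenvalues of $A$.

For $\lambda = 1$, equation (2) becomes

$$(A - I)\mathbf{v}_{\lambda=1} = \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix}\begin{bmatrix} v_1 \\ v_2 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \quad\text{that is,}\quad v_1 + v_2 = 0.$$

Any nonzero vector with $v_1 = -v_2$ solves this equation. Therefore,

$$\mathbf{v}_{\lambda=1} = \begin{bmatrix} 1 \\ -1 \end{bmatrix}$$

is an eigenvector of $A$ corresponding to $\lambda = 1$, as is any scalar multiple of this vector.

For $\lambda = 3$, equation (2) becomes

$$(A - 3I)\mathbf{v}_{\lambda=3} = \begin{bmatrix} -1 & 1 \\ 1 & -1 \end{bmatrix}\begin{bmatrix} v_1 \\ v_2 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \quad\text{that is,}\quad -v_1 + v_2 = 0.$$

Any nonzero vector with $v_1 = v_2$ solves this equation. Therefore,

$$\mathbf{v}_{\lambda=3} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$$

is an eigenvector of $A$ corresponding to $\lambda = 3$, as is any scalar multiple of this vector.

Thus, the vectors $\mathbf{v}_{\lambda=1}$ and $\mathbf{v}_{\lambda=3}$ are eigenvectors of $A$ associated with the eigenvalues $\lambda = 1$ and $\lambda = 3$, respectively.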

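Three-dimensional matrix example

Consider the matrix

$$A = \begin{bmatrix} 2 & 0 & 0 \\ 0 & 3 & 4 \\ 0 & 4 & 9 \end{bmatrix}.$$

The characteristic polynomial of $A$ is

$$\det(A - \lambda I) = (2 - \lambda)\bigl[(3 - \lambda)(9 - \lambda) - 16\bigr] = -\lambda^3 + 14\lambda^2 - 35\lambda + 22.$$

The roots of the characteristic polynomial are 2, 1, and 11, which are the only three eigenvalues of $A$. These eigenvalues correspond to the eigenvectors $\begin{bmatrix} 1 & 0 & 0 \end{bmatrix}^{\mathsf T}$, $\begin{bmatrix} 0 & -2 & 1 \end{bmatrix}^{\mathsf T}$, and $\begin{bmatrix} 0 & 1 & 2 \end{bmatrix}^{\mathsf T}$, or any nonzero multiple thereof.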

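Three-dimensional matrix example with complex eigenvalues

Consider the cyclic permutation matrix

$$A = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \end{bmatrix}.$$

This matrix shifts the coordinates of the vector up by one position and moves the first coordinate to the bottom. Its characteristic polynomial is $1 - \lambda^3$, whose roots are

$$\lambda_1 = 1, \qquad \lambda_2 = -\frac{1}{2} + i\frac{\sqrt{3}}{2}, \qquad \lambda_3 = \lambda_2^{*} = -\frac{1}{2} - i\frac{\sqrt{3}}{2},$$

where $i$ is an imaginary unit with $i^2 = -1$.

For the real eigenvalue $\lambda_1 = 1$, any vector with three equal nonzero entries is an eigenvector. For example,

$$A\begin{bmatrix} 5 \\ 5 \\ 5 \end{bmatrix} = \begin{bmatrix} 5 \\ 5 \\ 5 \end{bmatrix} = 1 \cdot \begin{bmatrix} 5 \\ 5 \\ 5 \end{bmatrix}.$$

For the complex conjugate pair of non-real eigenvalues,

$$\lambda_2\lambda_3 = 1, \qquad \lambda_2^2 = \lambda_3, \qquad \lambda_3^2 = \lambda_2.$$

Then

$$A\begin{bmatrix} 1 \\ \lambda_2 \\ \lambda_3 \end{bmatrix} = \begin{bmatrix} \lambda_2 \\ \lambda_3 \\ 1 \end{bmatrix} = \lambda_2 \cdot \begin{bmatrix} 1 \\ \lambda_2 \\ \lambda_3 \end{bmatrix}$$

and

$$A\begin{bmatrix} 1 \\ \lambda_3 \\ \lambda_2 \end{bmatrix} = \begin{bmatrix} \lambda_3 \\ \lambda_2 \\ 1 \end{bmatrix} = \lambda_3 \cdot \begin{bmatrix} 1 \\ \lambda_3 \\ \lambda_2 \end{bmatrix}.$$

Therefore, the other two eigenvectors of $A$ are complex and are $\mathbf{v}_{\lambda_2} = \begin{bmatrix} 1 & \lambda_2 & \lambda_3 \end{bmatrix}^{\mathsf T}$ and $\mathbf{v}_{\lambda_3} = \begin{bmatrix} 1 & \lambda_3 & \lambda_2 \end{bmatrix}^{\mathsf T}$ with eigenvalues $\lambda_2$ and $\lambda_3$, respectively. The two complex eigenvectors also appear in a complex conjugate pair,

$$\mathbf{v}_{\lambda_2} = \mathbf{v}_{\lambda_3}^{*}.$$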
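The complex eigenpair can be checked with Python's built-in complex numbers. This sketch assumes the cyclic permutation matrix that shifts coordinates up by one position, as described in this example, and takes the non-real eigenvalue to be the primitive cube root of unity $e^{2\pi i/3}$.

```python
import cmath

# Assumed form of the cyclic permutation matrix: shifts coordinates up by one.
A = [[0, 1, 0],
     [0, 0, 1],
     [1, 0, 0]]

lam2 = cmath.exp(2j * cmath.pi / 3)   # -1/2 + i*sqrt(3)/2
lam3 = lam2.conjugate()               # -1/2 - i*sqrt(3)/2

v2 = [1, lam2, lam3]
Av2 = [sum(a * x for a, x in zip(row, v2)) for row in A]

# Largest componentwise deviation between A v2 and lam2 * v2.
residual = max(abs(p - lam2 * x) for p, x in zip(Av2, v2))
print(residual)
```

The residual is not exactly zero because the cube root of unity is only approximated in floating point.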

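Diagonal matrix example

Matrices with entries only along the main diagonal are called diagonal matrices. The eigenvalues of a diagonal matrix are the diagonal elements themselves. Consider the matrix

$$A = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 3 \end{bmatrix}.$$

The characteristic polynomial of $A$ is

$$\det(A - \lambda I) = (1 - \lambda)(2 - \lambda)(3 - \lambda),$$

which has the roots $\lambda_1 = 1$, $\lambda_2 = 2$, and $\lambda_3 = 3$. These roots are the diagonal elements as well as the eigenvalues of $A$.

Each diagonal element corresponds to an eigenvector whose only nonzero component is in the same row as that diagonal element. In the example, the eigenvalues correspond to the eigenvectors,

$$\mathbf{v}_{\lambda_1} = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad \mathbf{v}_{\lambda_2} = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad \mathbf{v}_{\lambda_3} = \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix},$$

respectively, as well as scalar multiples of these vectors.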

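Triangular matrix example

A matrix whose elements above the main diagonal are all zero is called a lower triangular matrix, while a matrix whose elements below the main diagonal are all zero is called an upper triangular matrix. As with diagonal matrices, the eigenvalues of triangular matrices are the elements of the main diagonal.

Consider the lower triangular matrix,

$$A = \begin{bmatrix} 1 & 0 & 0 \\ 1 & 2 & 0 \\ 2 & 3 & 3 \end{bmatrix}.$$

The characteristic polynomial of $A$ is

$$\det(A - \lambda I) = (1 - \lambda)(2 - \lambda)(3 - \lambda),$$

which has the roots $\lambda_1 = 1$, $\lambda_2 = 2$, and $\lambda_3 = 3$. These roots are the diagonal elements as well as the eigenvalues of $A$.

These eigenvalues correspond to the eigenvectors,

$$\mathbf{v}_{\lambda_1} = \begin{bmatrix} 1 \\ -1 \\ \tfrac{1}{2} \end{bmatrix}, \quad \mathbf{v}_{\lambda_2} = \begin{bmatrix} 0 \\ 1 \\ -3 \end{bmatrix}, \quad \mathbf{v}_{\lambda_3} = \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix},$$

respectively, as well as scalar multiples of these vectors.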

Matrix with repeated eigenvalues example

As in the previous example, the lower triangular matrix

$$A = \begin{bmatrix} 2 & 0 & 0 & 0 \\ 1 & 2 & 0 & 0 \\ 0 & 1 & 3 & 0 \\ 0 & 0 & 1 & 3 \end{bmatrix}$$

has a characteristic polynomial that is the product of the factors $(a_{ii} - \lambda)$ formed from its diagonal elements,

$$\det(A - \lambda I) = (2 - \lambda)^2(3 - \lambda)^2.$$

The roots of this polynomial, and hence the eigenvalues, are 2 and 3. The algebraic multiplicity of each eigenvalue is 2; in other words they are both double roots. The sum of the algebraic multiplicities of all distinct eigenvalues is $\mu_A = 4 = n$, the order of the characteristic polynomial and the dimension of $A$.

On the other hand, the geometric multiplicity of the eigenvalue 2 is only 1, because its eigenspace is spanned by just one vector $\begin{bmatrix} 0 & 1 & -1 & 1 \end{bmatrix}^{\mathsf T}$ and is therefore 1-dimensional. Similarly, the geometric multiplicity of the eigenvalue 3 is 1 because its eigenspace is spanned by just one vector $\begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}^{\mathsf T}$. The total geometric multiplicity $\gamma_A$ is 2, which is the smallest it could be for a matrix with two distinct eigenvalues. Geometric multiplicities are defined in a later section.

Eigenvector-eigenvalue identity

For a Hermitian matrix, the norm squared of the $j$th component of a normalized eigenvector can be calculated using only the matrix eigenvalues and the eigenvalues of the corresponding minor matrix,

$$|v_{i,j}|^2 = \frac{\prod_{k}\bigl(\lambda_i - \lambda_k(M_j)\bigr)}{\prod_{k \neq i}(\lambda_i - \lambda_k)},$$

where $M_j$ is the submatrix formed by removing the $j$th row and column from the original matrix.[34][35][36] This identity also extends to diagonalizable matrices, and has been rediscovered many times in the literature.[35][37]

Eigenvalues and eigenfunctions of differential operators

The definitions of eigenvalue and eigenvectors of a linear transformation $T$ remain valid even if the underlying vector space is an infinite-dimensional Hilbert or Banach space. A widely used class of linear transformations acting on infinite-dimensional spaces are the differential operators on function spaces. Let $D$ be a linear differential operator on the space $C^{\infty}$ of infinitely differentiable real functions of a real argument $t$. The eigenvalue equation for $D$ is the differential equation

$$Df(t) = \lambda f(t).$$

The functions that satisfy this equation are eigenvectors of $D$ and are commonly called eigenfunctions.

Derivative operator example

Consider the derivative operator $\tfrac{d}{dt}$ with eigenvalue equation

$$\frac{d}{dt}f(t) = \lambda f(t).$$

This differential equation can be solved by multiplying both sides by $dt/f(t)$ and integrating. Its solution, the exponential function

$$f(t) = f(0)e^{\lambda t},$$

is the eigenfunction of the derivative operator. In this case the eigenfunction is itself a function of its associated eigenvalue. In particular, for $\lambda = 0$ the eigenfunction $f(t)$ is a constant.
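The eigenfunction property of the exponential can be checked approximately with a finite-difference derivative. In this sketch the eigenvalue, the scale factor, and the sample points are arbitrary illustrative choices, and `derivative` is a made-up helper.

```python
import math

def derivative(f, t, h=1e-6):
    """Central finite-difference approximation of f'(t)."""
    return (f(t + h) - f(t - h)) / (2 * h)

lam = -0.5                                   # arbitrary illustrative eigenvalue

def f(t):
    """Candidate eigenfunction f(0) * exp(lam * t) with the arbitrary scale f(0) = 2."""
    return 2.0 * math.exp(lam * t)

# If f is an eigenfunction of d/dt, then f'(t) / f(t) equals lam at every t.
ratios = [derivative(f, t) / f(t) for t in (0.0, 1.0, 2.5)]
print(ratios)
```

The ratios agree with the eigenvalue only up to the truncation and rounding error of the finite difference.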

The main eigenfunction article gives other examples.

General definition

The concept of eigenvalues and eigenvectors extends naturally to arbitrary linear transformations on arbitrary vector spaces. Let $V$ be any vector space over some field $K$ of scalars, and let $T$ be a linear transformation mapping $V$ into $V$,

$$T : V \to V.$$

We say that a nonzero vector $\mathbf{v} \in V$ is an eigenvector of $T$ if and only if there exists a scalar $\lambda \in K$ such that

$$T(\mathbf{v}) = \lambda\mathbf{v}. \tag{5}$$

This equation is called the eigenvalue equation for $T$, and the scalar $\lambda$ is the eigenvalue of $T$ corresponding to the eigenvector $\mathbf{v}$. $T(\mathbf{v})$ is the result of applying the transformation $T$ to the vector $\mathbf{v}$, while $\lambda\mathbf{v}$ is the product of the scalar $\lambda$ with $\mathbf{v}$.[38][39]

Eigenspaces, geometric multiplicity, and the eigenbasis

Given an eigenvalue $\lambda$, consider the set

$$E = \{\mathbf{v} : T(\mathbf{v}) = \lambda\mathbf{v}\},$$

which is the union of the zero vector with the set of all eigenvectors associated with $\lambda$. $E$ is called the eigenspace or characteristic space of $T$ associated with $\lambda$.[40]

By definition of a linear transformation,

$$T(\mathbf{x} + \mathbf{y}) = T(\mathbf{x}) + T(\mathbf{y}), \qquad T(\alpha\mathbf{x}) = \alpha T(\mathbf{x}),$$

for $\mathbf{x}, \mathbf{y} \in V$ and $\alpha \in K$. Therefore, if $\mathbf{u}$ and $\mathbf{v}$ are eigenvectors of $T$ associated with eigenvalue $\lambda$, namely $\mathbf{u}, \mathbf{v} \in E$, then

$$T(\mathbf{u} + \mathbf{v}) = \lambda(\mathbf{u} + \mathbf{v}), \qquad T(\alpha\mathbf{v}) = \lambda(\alpha\mathbf{v}).$$

So, both $\mathbf{u} + \mathbf{v}$ and $\alpha\mathbf{v}$ are either zero or eigenvectors of $T$ associated with $\lambda$, namely $\mathbf{u} + \mathbf{v}, \alpha\mathbf{v} \in E$, and $E$ is closed under addition and scalar multiplication. The eigenspace $E$ associated with $\lambda$ is therefore a linear subspace of $V$.[41] If that subspace has dimension 1, it is sometimes called an eigenline.[42]

The geometric multiplicity $\gamma_T(\lambda)$ of an eigenvalue $\lambda$ is the dimension of the eigenspace associated with $\lambda$, i.e., the maximum number of linearly independent eigenvectors associated with that eigenvalue.[10][27][43] By the definition of eigenvalues and eigenvectors, $\gamma_T(\lambda) \ge 1$ because every eigenvalue has at least one eigenvector.

The eigenspaces of $T$ always form a direct sum. As a consequence, eigenvectors of different eigenvalues are always linearly independent. Therefore, the sum of the dimensions of the eigenspaces cannot exceed the dimension $n$ of the vector space on which $T$ operates, and there cannot be more than $n$ distinct eigenvalues.[d]

Any subspace spanned by eigenvectors of $T$ is an invariant subspace of $T$, and the restriction of $T$ to such a subspace is diagonalizable. Moreover, if the entire vector space $V$ can be spanned by the eigenvectors of $T$, or equivalently if the direct sum of the eigenspaces associated with all the eigenvalues of $T$ is the entire vector space $V$, then a basis of $V$ called an eigenbasis can be formed from linearly independent eigenvectors of $T$. When $T$ admits an eigenbasis, $T$ is diagonalizable.

Spectral theory

If $\lambda$ is an eigenvalue of $T$, then the operator $(T - \lambda I)$ is not one-to-one, and therefore its inverse $(T - \lambda I)^{-1}$ does not exist. The converse is true for finite-dimensional vector spaces, but not for infinite-dimensional vector spaces. In general, the operator $(T - \lambda I)$ may not have an inverse even if $\lambda$ is not an eigenvalue.

For this reason, in functional analysis eigenvalues can be generalized to the spectrum of a linear operator $T$ as the set of all scalars $\lambda$ for which the operator $(T - \lambda I)$ has no bounded inverse. The spectrum of an operator always contains all its eigenvalues but is not limited to them.

Associative algebras and representation theory

One can generalize the algebraic object that is acting on the vector space, replacing a single operator acting on a vector space with an algebra representation: an associative algebra acting on a module. The study of such actions is the field of representation theory.

The representation-theoretical concept of weight is an analog of eigenvalues, while weight vectors and weight spaces are the analogs of eigenvectors and eigenspaces, respectively.

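Dynamic equations

The simplest difference equations have the form

$$x_t = a_1 x_{t-1} + a_2 x_{t-2} + \cdots + a_k x_{t-k}.$$

The solution of this equation for $x$ in terms of $t$ is found by using its characteristic equation

$$\lambda^k - a_1\lambda^{k-1} - a_2\lambda^{k-2} - \cdots - a_{k-1}\lambda - a_k = 0,$$

which can be found by stacking into matrix form a set of equations consisting of the above difference equation and the $k - 1$ equations $x_{t-1} = x_{t-1},\ \ldots,\ x_{t-k+1} = x_{t-k+1}$, giving a $k$-dimensional system of the first order in the stacked variable vector $\begin{bmatrix} x_t & \cdots & x_{t-k+1} \end{bmatrix}$ in terms of its once-lagged value, and taking the characteristic equation of this system's matrix. This equation gives $k$ characteristic roots $\lambda_1, \ldots, \lambda_k$ for use in the solution equation

$$x_t = c_1\lambda_1^t + \cdots + c_k\lambda_k^t.$$

A similar procedure is used for solving a differential equation of the form

$$\frac{d^k x}{dt^k} + a_{k-1}\frac{d^{k-1}x}{dt^{k-1}} + \cdots + a_1\frac{dx}{dt} + a_0 x = 0.$$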
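For the difference-equation case described in this section, the Fibonacci recurrence $x_t = x_{t-1} + x_{t-2}$ is a convenient illustration (chosen here as an example; it does not appear in the text above). Its characteristic equation is $\lambda^2 - \lambda - 1 = 0$, and the constants in the solution are fixed by the initial conditions $x_0 = 0$, $x_1 = 1$. The function names are made up for this sketch.

```python
import math

def fib_closed_form(t):
    """Solve x_t = x_{t-1} + x_{t-2}, x_0 = 0, x_1 = 1 using the characteristic roots."""
    sqrt5 = math.sqrt(5)
    lam1 = (1 + sqrt5) / 2            # roots of lambda^2 - lambda - 1 = 0
    lam2 = (1 - sqrt5) / 2
    c1, c2 = 1 / sqrt5, -1 / sqrt5    # fixed by the two initial conditions
    # Rounding removes the small floating-point error in the powers.
    return round(c1 * lam1 ** t + c2 * lam2 ** t)

def fib_iterative(t):
    """Reference values obtained by running the recurrence directly."""
    a, b = 0, 1
    for _ in range(t):
        a, b = b, a + b
    return a

print([fib_closed_form(t) for t in range(10)])
```

Because the closed form is evaluated in floating point, it matches the recurrence only while the rounding error stays below one half, so the sketch is meant for small $t$.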

Calculation

The calculation of eigenvalues and eigenvectors is a topic where theory, as presented in elementary linear algebra textbooks, is often very far from practice.

Classical method

The classical method is to first find the eigenvalues, and then calculate the eigenvectors for each eigenvalue. It is in several ways poorly suited for non-exact arithmetics such as floating-point.

Eigenvalues[edit]

The eigenvalues of a matrixcan be determined by finding the roots of the characteristic polynomial. This is easy formatrices, but the difficulty increases rapidly with the size of the matrix.

In theory, the coefficients of the characteristic polynomial can be computed exactly, since they are sums of products of matrix elements; and there are algorithms that can find all the roots of a polynomial of arbitrary degree to any required accuracy.[44] However, this approach is not viable in practice because the coefficients would be contaminated by unavoidable round-off errors, and the roots of a polynomial can be an extremely sensitive function of the coefficients (as exemplified by Wilkinson's polynomial).[44] Even for matrices whose elements are integers the calculation becomes nontrivial, because the sums are very long; the constant term is the determinant, which for an $n \times n$ matrix is a sum of $n!$ different products.[e]

Explicit algebraic formulas for the roots of a polynomial exist only if the degree $n$ is 4 or less. According to the Abel–Ruffini theorem there is no general, explicit and exact algebraic formula for the roots of a polynomial with degree 5 or more. (Generality matters because any polynomial with degree $n$ is the characteristic polynomial of some companion matrix of order $n$.) Therefore, for matrices of order 5 or more, the eigenvalues and eigenvectors cannot be obtained by an explicit algebraic formula, and must therefore be computed by approximate numerical methods. Even the exact formula for the roots of a degree 3 polynomial is numerically impractical.

Eigenvectors

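Once the (exact) value of an eigenvalue is known, the corresponding eigenvectors can be found by finding nonzero solutions of the eigenvalue equation, which becomes a system of linear equations with known coefficients. For example, once it is known that 6 is an eigenvalue of the matrix

$$A = \begin{bmatrix} 4 & 1 \\ 6 & 3 \end{bmatrix}$$

we can find its eigenvectors by solving the equation $A\mathbf{v} = 6\mathbf{v}$, that is

$$\begin{bmatrix} 4 & 1 \\ 6 & 3 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = 6 \begin{bmatrix} x \\ y \end{bmatrix}.$$

This matrix equation is equivalent to two linear equations

$$\begin{aligned} 4x + y &= 6x \\ 6x + 3y &= 6y \end{aligned}$$

that is

$$\begin{aligned} -2x + y &= 0 \\ 6x - 3y &= 0. \end{aligned}$$

Both equations reduce to the single linear equation $y = 2x$. Therefore, any vector of the form $\begin{bmatrix} a & 2a \end{bmatrix}^\mathsf{T}$, for any nonzero real number $a$, is an eigenvector of $A$ with eigenvalue $\lambda = 6$.

The matrix $A$ above has another eigenvalue $\lambda = 1$. A similar calculation shows that the corresponding eigenvectors are the nonzero solutions of $3x + y = 0$, that is, any vector of the form $\begin{bmatrix} b & -3b \end{bmatrix}^\mathsf{T}$, for any nonzero real number $b$.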
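The worked example can be checked mechanically. The sketch below takes the matrix to be $A = \begin{bmatrix} 4 & 1 \\ 6 & 3 \end{bmatrix}$, whose eigenvalues are 6 and 1 (the matrix is restated in the code so the snippet is self-contained), and verifies $A\mathbf{v} = \lambda\mathbf{v}$ exactly using integers and `fractions.Fraction`. The helper names are illustrative.

```python
from fractions import Fraction

A = [[4, 1],
     [6, 3]]

def matvec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

def is_eigenpair(M, lam, v):
    """True if v is nonzero and M v equals lam * v exactly."""
    return any(v) and matvec(M, v) == [lam * x for x in v]

# Eigenvalue 6: vectors of the form (a, 2a) with a nonzero.
for a in (1, -3, Fraction(1, 2)):
    print(a, is_eigenpair(A, 6, [a, 2 * a]))

# Eigenvalue 1: vectors of the form (b, -3b) with b nonzero.
for b in (1, 5, Fraction(-2, 7)):
    print(b, is_eigenpair(A, 1, [b, -3 * b]))

# A vector on neither line is not an eigenvector for either eigenvalue.
print(is_eigenpair(A, 6, [1, 1]), is_eigenpair(A, 1, [1, 1]))
```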

Simple iterative methods

The converse approach, of first seeking the eigenvectors and then determining each eigenvalue from its eigenvector, turns out to be far more tractable for computers. The easiest algorithm here consists of picking an arbitrary starting vector and then repeatedly multiplying it with the matrix (optionally normalizing the vector to keep its elements of reasonable size); this makes the vector converge towards an eigenvector, typically one belonging to the eigenvalue of largest magnitude. A variation is to instead multiply the vector by $(A - \mu I)^{-1}$; this causes it to converge to an eigenvector of the eigenvalue closest to $\mu \in \mathbb{C}$.

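If $\mathbf{v}$ is (a good approximation of) an eigenvector of $A$, then the corresponding eigenvalue can be computed as

$$\lambda = \frac{\mathbf{v}^* A \mathbf{v}}{\mathbf{v}^* \mathbf{v}}$$

where $\mathbf{v}^*$ denotes the conjugate transpose of $\mathbf{v}$.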
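A minimal sketch of this approach for a real matrix is shown below: repeated multiplication with normalization (often called power iteration), followed by the quotient $\mathbf{v}^\mathsf{T} A \mathbf{v} / \mathbf{v}^\mathsf{T} \mathbf{v}$ to recover the eigenvalue. The function names, the sample matrix, and the fixed iteration count are illustrative assumptions; practical code would test for convergence instead.

```python
import math

def matvec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rayleigh_quotient(M, v):
    """lambda = (v^T M v) / (v^T v) for a real vector v."""
    Mv = matvec(M, v)
    return sum(a * b for a, b in zip(v, Mv)) / sum(a * a for a in v)

def power_iteration(M, v, steps=100):
    """Repeatedly multiply by M, normalizing to unit length at each step."""
    for _ in range(steps):
        w = matvec(M, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

A = [[2.0, 1.0],
     [1.0, 2.0]]
v = power_iteration(A, [1.0, 0.0])
print(v, rayleigh_quotient(A, v))
```

For this matrix the iterate approaches the direction $(1, 1)$ and the quotient approaches the eigenvalue 3; the starting vector must have a nonzero component along that direction for the iteration to find it.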

Modern methods

Efficient, accurate methods to compute eigenvalues and eigenvectors of arbitrary matrices were not known until the QR algorithm was designed in 1961.[44] Combining the Householder transformation with the LU decomposition results in an algorithm with better convergence than the QR algorithm.[citation needed] For large Hermitian sparse matrices, the Lanczos algorithm is one example of an efficient iterative method to compute eigenvalues and eigenvectors, among several other possibilities.[44]

Most numeric methods that compute the eigenvalues of a matrix also determine a set of corresponding eigenvectors as a by-product of the computation, although sometimes implementors choose to discard the eigenvector information as soon as it is no longer needed.
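The basic idea of the QR algorithm can be sketched in a few lines: factor the current matrix as $A_k = Q_k R_k$ and form $A_{k+1} = R_k Q_k$, which is similar to $A_k$. For suitable matrices the iterates approach a triangular form with the eigenvalues on the diagonal. The code below is only an unshifted illustration using Gram–Schmidt orthogonalization on an assumed symmetric test matrix with eigenvalues 1, 2 and 11. Production implementations instead use Hessenberg reduction, shifts, and deflation, none of which are shown here.

```python
import math

def qr_gram_schmidt(A):
    """QR factorization of a square, nonsingular matrix by modified Gram-Schmidt."""
    n = len(A)
    cols = [[A[i][j] for i in range(n)] for j in range(n)]  # columns of A
    q_cols = []
    R = [[0.0] * n for _ in range(n)]
    for j in range(n):
        v = cols[j][:]
        for k, q in enumerate(q_cols):
            R[k][j] = sum(qi * vi for qi, vi in zip(q, v))
            v = [vi - R[k][j] * qi for vi, qi in zip(v, q)]
        R[j][j] = math.sqrt(sum(vi * vi for vi in v))
        q_cols.append([vi / R[j][j] for vi in v])
    Q = [[q_cols[j][i] for j in range(n)] for i in range(n)]
    return Q, R

def matmul(X, Y):
    """Product of two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def qr_eigenvalues(A, iterations=200):
    """Unshifted QR iteration A_{k+1} = R_k Q_k; returns the final diagonal."""
    Ak = [row[:] for row in A]
    for _ in range(iterations):
        Q, R = qr_gram_schmidt(Ak)
        Ak = matmul(R, Q)
    return [Ak[i][i] for i in range(len(Ak))]

A = [[2.0, 0.0, 0.0],
     [0.0, 3.0, 4.0],
     [0.0, 4.0, 9.0]]
print(sorted(qr_eigenvalues(A)))  # approximately [1.0, 2.0, 11.0]
```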

Applications

Geometric transformations

Eigenvectors and eigenvalues can be useful for understanding linear transformations of geometric shapes. The following table presents some example transformations in the plane along with their 2×2 matrices, eigenvalues, and eigenvectors.

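| | Scaling | Unequal scaling | Rotation | Horizontal shear | Hyperbolic rotation |
|---|---|---|---|---|---|
| Matrix | $\begin{bmatrix} k & 0 \\ 0 & k \end{bmatrix}$ | $\begin{bmatrix} k_1 & 0 \\ 0 & k_2 \end{bmatrix}$ | $\begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$ | $\begin{bmatrix} 1 & k \\ 0 & 1 \end{bmatrix}$ | $\begin{bmatrix} \cosh\varphi & \sinh\varphi \\ \sinh\varphi & \cosh\varphi \end{bmatrix}$ |
| Characteristic polynomial | $(\lambda - k)^2$ | $(\lambda - k_1)(\lambda - k_2)$ | $\lambda^2 - 2\cos(\theta)\lambda + 1$ | $(\lambda - 1)^2$ | $\lambda^2 - 2\cosh(\varphi)\lambda + 1$ |
| Eigenvalues, $\lambda_i$ | $\lambda_1 = \lambda_2 = k$ | $\lambda_1 = k_1$, $\lambda_2 = k_2$ | $\lambda_1 = e^{i\theta} = \cos\theta + i\sin\theta$, $\lambda_2 = e^{-i\theta} = \cos\theta - i\sin\theta$ | $\lambda_1 = \lambda_2 = 1$ | $\lambda_1 = e^{\varphi} = \cosh\varphi + \sinh\varphi$, $\lambda_2 = e^{-\varphi} = \cosh\varphi - \sinh\varphi$ |
| Algebraic mult., $\mu_i = \mu(\lambda_i)$ | $\mu_1 = 2$ | $\mu_1 = 1$, $\mu_2 = 1$ | $\mu_1 = 1$, $\mu_2 = 1$ | $\mu_1 = 2$ | $\mu_1 = 1$, $\mu_2 = 1$ |
| Geometric mult., $\gamma_i = \gamma(\lambda_i)$ | $\gamma_1 = 2$ | $\gamma_1 = 1$, $\gamma_2 = 1$ | $\gamma_1 = 1$, $\gamma_2 = 1$ | $\gamma_1 = 1$ | $\gamma_1 = 1$, $\gamma_2 = 1$ |
| Eigenvectors | All nonzero vectors | $\mathbf{u}_1 = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$, $\mathbf{u}_2 = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$ | $\mathbf{u}_1 = \begin{bmatrix} 1 \\ -i \end{bmatrix}$, $\mathbf{u}_2 = \begin{bmatrix} 1 \\ i \end{bmatrix}$ | $\mathbf{u}_1 = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$ | $\mathbf{u}_1 = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$, $\mathbf{u}_2 = \begin{bmatrix} 1 \\ -1 \end{bmatrix}$ |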

The characteristic equation for a rotation is a quadratic equation with discriminant $D = -4(\sin\theta)^2$, which is a negative number whenever θ is not an integer multiple of 180°. Therefore, except for these special cases, the two eigenvalues are complex numbers, $\cos\theta \pm i\sin\theta$; and all eigenvectors have non-real entries. Indeed, except for those special cases, a rotation changes the direction of every nonzero vector in the plane.

A linear transformation that takes a square to a rectangle of the same area (a squeeze mapping) has reciprocal eigenvalues.
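The rotation case can be checked numerically. The sketch below solves the characteristic equation $\lambda^2 - 2\cos(\theta)\lambda + 1 = 0$ of a plane rotation with the quadratic formula and prints the roots next to $e^{i\theta}$ for a few angles; the function name is an illustrative choice.

```python
import cmath
import math

def rotation_eigenvalues(theta):
    """Roots of lambda^2 - 2*cos(theta)*lambda + 1 = 0 for a plane rotation."""
    b = 2 * math.cos(theta)
    disc = b * b - 4          # equals -4*sin(theta)**2, so it is never positive
    root = cmath.sqrt(disc)
    return (b + root) / 2, (b - root) / 2

for degrees in (0, 60, 90, 180):
    theta = math.radians(degrees)
    lam1, lam2 = rotation_eigenvalues(theta)
    print(degrees, lam1, lam2, cmath.exp(1j * theta))
```

Only at 0° and 180° are the roots real (1 and −1 respectively); at the other angles they form a complex-conjugate pair on the unit circle.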

Principal component analysis

The eigendecomposition of a symmetric positive semidefinite (PSD) matrix yields an orthogonal basis of eigenvectors, each of which has a nonnegative eigenvalue. The orthogonal decomposition of a PSD matrix is used in multivariate analysis, where the sample covariance matrices are PSD. This orthogonal decomposition is called principal component analysis (PCA) in statistics. PCA studies linear relations among variables. PCA is performed on the covariance matrix or the correlation matrix (in which each variable is scaled to have its sample variance equal to one). For the covariance or correlation matrix, the eigenvectors correspond to principal components and the eigenvalues to the variance explained by the principal components. Principal component analysis of the correlation matrix provides an orthogonal basis for the space of the observed data: in this basis, the largest eigenvalues correspond to the principal components that are associated with most of the covariability among a number of observed data.

Principal component analysis is used as a means of dimensionality reduction in the study of large data sets, such as those encountered in bioinformatics. In Q methodology, the eigenvalues of the correlation matrix determine the Q-methodologist's judgment of practical significance (which differs from the statistical significance of hypothesis testing; cf. criteria for determining the number of factors). More generally, principal component analysis can be used as a method of factor analysis in structural equation modeling.
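To make the link between eigenvalues and explained variance concrete, the sketch below performs PCA on a small two-variable data set. The data values are hypothetical, and the closed-form eigen-decomposition used here applies only to a symmetric $2 \times 2$ covariance matrix with a nonzero off-diagonal entry; it is an illustration, not a general PCA routine.

```python
import math

# Hypothetical 2-D observations (illustrative data only).
data = [(2.0, 1.9), (0.5, 0.7), (3.1, 3.0), (1.2, 0.9), (2.4, 2.6)]
n = len(data)
mean_x = sum(x for x, _ in data) / n
mean_y = sum(y for _, y in data) / n

# Sample covariance matrix S = [[sxx, sxy], [sxy, syy]] (divisor n - 1).
sxx = sum((x - mean_x) ** 2 for x, _ in data) / (n - 1)
syy = sum((y - mean_y) ** 2 for _, y in data) / (n - 1)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in data) / (n - 1)

# Eigenvalues of a symmetric 2x2 matrix in closed form.
half_trace = (sxx + syy) / 2
radius = math.hypot((sxx - syy) / 2, sxy)
lam1, lam2 = half_trace + radius, half_trace - radius

# Unit eigenvector for lam1: (sxy, lam1 - sxx) solves (S - lam1*I) v = 0
# provided sxy is nonzero, which holds for this data.
vx, vy = sxy, lam1 - sxx
norm = math.hypot(vx, vy)
pc1 = (vx / norm, vy / norm)

print("eigenvalues:", lam1, lam2)
print("first principal component:", pc1)
print("fraction of variance explained by PC1:", lam1 / (lam1 + lam2))
```

Because the two variables in this sample are strongly correlated, nearly all of the total variance falls along the first principal component.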

Graphs

In spectral graph theory, an eigenvalue of a graph is defined as an eigenvalue of the graph's adjacency matrix $A$, or (increasingly) of the graph's Laplacian matrix due to its discrete Laplace operator, which is either $D - A$ (sometimes called the combinatorial Laplacian) or $I - D^{-1/2} A D^{-1/2}$ (sometimes called the normalized Laplacian), where $D$ is a diagonal matrix with $D_{ii}$ equal to the degree of vertex $v_i$, and in $D^{-1/2}$, the $i$th diagonal entry is $1/\sqrt{\deg(v_i)}$. The $k$th principal eigenvector of a graph is defined as either the eigenvector corresponding to the $k$th largest or $k$th smallest eigenvalue of the Laplacian. The first principal eigenvector of the graph is also referred to merely as the principal eigenvector.

The principal eigenvector is used to measure the centrality of its vertices. An example is Google's PageRank algorithm. The principal eigenvector of a modified adjacency matrix of the World Wide Web graph gives the page ranks as its components. This vector corresponds to the stationary distribution of the Markov chain represented by the row-normalized adjacency matrix; however, the adjacency matrix must first be modified to ensure a stationary distribution exists. The eigenvector of the second smallest eigenvalue can be used to partition the graph into clusters, via spectral clustering. Other methods are also available for clustering.
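A toy version of the PageRank computation is sketched below. The four-page link structure and the damping factor of 0.85 are assumptions made for illustration; mixing in a uniform jump with probability $1 - 0.85$ is one common way of modifying the row-normalized adjacency matrix so that a unique stationary distribution exists. The sketch also assumes every page has at least one outgoing link.

```python
# Hypothetical 4-page web: links[i] lists the pages that page i links to.
links = {0: [1, 2], 1: [2], 2: [0], 3: [0, 2]}
n = len(links)
damping = 0.85  # assumed damping factor

rank = [1.0 / n] * n
for _ in range(100):
    # Every page receives a uniform share from the random-jump term ...
    new_rank = [(1.0 - damping) / n] * n
    # ... plus an equal split of each linking page's damped rank.
    for page, outgoing in links.items():
        share = damping * rank[page] / len(outgoing)
        for target in outgoing:
            new_rank[target] += share
    rank = new_rank

print([round(r, 4) for r in rank])
```

The resulting vector approximates the principal eigenvector (with eigenvalue 1) of the modified transition matrix; page 3, which no other page links to, keeps only the random-jump share.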

Markov chains

A Markov chain is represented by a matrix whose entries are the transition probabilities between states of a system. In particular the entries are non-negative, and every row of the matrix sums to one, being the sum of probabilities of transitions from one state to some other state of the system. The Perron–Frobenius theorem gives sufficient conditions for a Markov chain to have a unique dominant eigenvalue, which governs the convergence of the system to a steady state.
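The role of the eigenvalues in convergence can be seen in a two-state example. In the sketch below the transition matrix `P` is hypothetical; for a $2 \times 2$ row-stochastic matrix the eigenvalues are 1 and $\operatorname{tr}(P) - 1$, and the distance to the stationary distribution shrinks by the second eigenvalue (0.4 here) at every step.

```python
P = [[0.9, 0.1],
     [0.5, 0.5]]   # hypothetical transition matrix; each row sums to one

def step(dist, P):
    """One step of the chain: row vector times transition matrix."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

# For a 2x2 row-stochastic matrix the eigenvalues are 1 and trace - 1.
second_eigenvalue = P[0][0] + P[1][1] - 1.0

dist = [0.0, 1.0]              # start in the second state with certainty
stationary = [5 / 6, 1 / 6]    # solves pi = pi P for this particular P
for k in range(1, 6):
    dist = step(dist, P)
    error = abs(dist[0] - stationary[0])
    print(k, dist, error)
```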

Vibration analysis

Eigenvalue problems occur naturally in the vibration analysis of mechanical structures with many degrees of freedom. The eigenvalues determine the natural frequencies (or eigenfrequencies) of vibration, and the eigenvectors are the shapes of these vibrational modes. In particular, undamped vibration is governed by

$$m\ddot{x} + kx = 0$$

or

$$m\ddot{x} = -kx.$$

That is, acceleration is proportional to position (i.e., we expect $x$ to be sinusoidal in time).

In $n$ dimensions, $m$ becomes a mass matrix and $k$ a stiffness matrix. Substituting a solution of the form $x(t) = x\,e^{i\omega t}$, admissible solutions are then a linear combination of solutions to the generalized eigenvalue problem

$$kx = \omega^2 m x,$$

where $\omega^2$ is the eigenvalue and $\omega$ is the angular frequency. The principal vibration modes are different from the principal compliance modes, which are the eigenvectors of $k$ alone. Furthermore, damped vibration, governed by

$$m\ddot{x} + c\dot{x} + kx = 0,$$

leads, with the same substitution, to a so-called quadratic eigenvalue problem,

$$\left(-\omega^2 m + i\omega c + k\right)x = 0,$$

in which $\omega$ is in general complex.

This can be reduced to a generalized eigenvalue problem by algebraic manipulation at the cost of solving a larger system.
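As an illustration of the undamped generalized eigenvalue problem, the sketch below considers a hypothetical system of two equal masses joined to each other and to two fixed walls by three identical springs. The numerical values of `m` and `k` are assumed, and the closed-form solution relies on the mass matrix being diagonal and the system being $2 \times 2$.

```python
import math

m = 2.0    # kg, assumed mass of each block
k = 50.0   # N/m, assumed stiffness of each spring

# Mass and stiffness matrices for wall-spring-mass-spring-mass-spring-wall.
M = [[m, 0.0], [0.0, m]]
K = [[2 * k, -k], [-k, 2 * k]]

# With diagonal M, K x = w^2 M x is equivalent to (M^-1 K) x = w^2 x.
B = [[K[i][j] / M[i][i] for j in range(2)] for i in range(2)]
trace = B[0][0] + B[1][1]
det = B[0][0] * B[1][1] - B[0][1] * B[1][0]
root = math.sqrt(trace * trace - 4 * det)
omega_sq = [(trace - root) / 2, (trace + root) / 2]

for w2 in omega_sq:
    # Mode shape from the first row of (B - w2*I) x = 0.
    mode = (B[0][1], w2 - B[0][0])
    print("omega =", math.sqrt(w2), "rad/s, mode shape proportional to", mode)
```

The lower frequency, $\omega^2 = k/m$, belongs to the mode in which both masses move together, and the higher one, $\omega^2 = 3k/m$, to the mode in which they move in opposition.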

The orthogonality properties of the eigenvectors allow decoupling of the differential equations so that the system can be represented as a linear summation of the eigenvectors. The eigenvalue problems of complex structures are often solved using finite element analysis, but they neatly generalize the solution to scalar-valued vibration problems.

Tensor of moment of inertia

In mechanics, the eigenvectors of the moment of inertia tensor define the principal axes of a rigid body. The tensor of moment of inertia is a key quantity required to determine the rotation of a rigid body around its center of mass.

Stress tensor

In solid mechanics, the stress tensor is symmetric and so can be decomposed into a diagonal tensor with the eigenvalues on the diagonal and eigenvectors as a basis. Because it is diagonal, in this orientation, the stress tensor has no shear components; the components it does have are the principal components.

Schrödinger equation

An example of an eigenvalue equation where the transformation $T$ is represented in terms of a differential operator is the time-independent Schrödinger equation in quantum mechanics:

$$H\psi_E = E\psi_E$$

where $H$, the Hamiltonian, is a second-order differential operator and $\psi_E$, the wavefunction, is one of its eigenfunctions corresponding to the eigenvalue $E$, interpreted as its energy.

However, in the case where one is interested only in the bound state solutions of the Schrödinger equation, one looks for $\psi_E$ within the space of square integrable functions. Since this space is a Hilbert space with a well-defined scalar product, one can introduce a basis set in which $\psi_E$ and $H$ can be represented as a one-dimensional array (i.e., a vector) and a matrix respectively. This allows one to represent the Schrödinger equation in a matrix form.

The bra–ket notation is often used in this context. A vector, which represents a state of the system, in the Hilbert space of square integrable functions is represented by $|\Psi_E\rangle$. In this notation, the Schrödinger equation is:

$$H|\Psi_E\rangle = E|\Psi_E\rangle$$

where $|\Psi_E\rangle$ is an eigenstate of $H$ and $E$ represents the eigenvalue. $H$ is an observable self-adjoint operator, the infinite-dimensional analog of Hermitian matrices. As in the matrix case, in the equation above $H|\Psi_E\rangle$ is understood to be the vector obtained by application of the transformation $H$ to $|\Psi_E\rangle$.
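One simple way to see the Schrödinger equation as a matrix eigenvalue problem is to sample the wavefunction on a grid, which is a finite-difference discretization rather than a basis-set expansion. The sketch below does this for a particle in a one-dimensional box, in assumed units where $\hbar = m = 1$, and checks that the sampled sine function is an eigenvector of the discretized Hamiltonian. The grid size and box length are arbitrary illustrative values.

```python
import math

N = 50     # number of interior grid points (assumed)
L = 1.0    # box length, in units where hbar = m = 1 (assumed)
h = L / (N + 1)

def apply_H(psi):
    """Apply H = -(1/2) d^2/dx^2 by central differences, with psi = 0 at the walls."""
    out = []
    for i in range(N):
        left = psi[i - 1] if i > 0 else 0.0
        right = psi[i + 1] if i < N - 1 else 0.0
        out.append(-(left - 2.0 * psi[i] + right) / (2.0 * h * h))
    return out

n = 1  # ground state
psi = [math.sin(n * math.pi * (i + 1) * h / L) for i in range(N)]
H_psi = apply_H(psi)

# If psi is an eigenvector, H_psi[i] / psi[i] is the same number E at every point.
ratios = [hp / p for hp, p in zip(H_psi, psi)]
E_numeric = ratios[0]
E_exact = (n * math.pi / L) ** 2 / 2.0   # continuum particle-in-a-box energy
print(min(ratios), max(ratios), E_exact)
```

The ratio is constant across the grid up to rounding, and its value lies close to the continuum energy $n^2\pi^2/(2L^2)$; the small gap is the discretization error and shrinks as the grid is refined.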

Wave transport

Light, acoustic waves, and microwaves are randomly scattered numerous times when traversing a static disordered system. Even though multiple scattering repeatedly randomizes the waves, ultimately coherent wave transport through the system is a deterministic process which can be described by a field transmission matrix $\mathbf{t}$.[45][46] The eigenvectors of the transmission operator $\mathbf{t}^\dagger\mathbf{t}$ form a set of disorder-specific input wavefronts which enable waves to couple into the disordered system's eigenchannels: the independent pathways waves can travel through the system. The eigenvalues, $\tau$, of $\mathbf{t}^\dagger\mathbf{t}$ correspond to the intensity transmittance associated with each eigenchannel. One of the remarkable properties of the transmission operator of diffusive systems is its bimodal eigenvalue distribution with $\tau_{\max} = 1$ and $\tau_{\min} = 0$.[46] Furthermore, one of the striking properties of open eigenchannels, beyond the perfect transmittance, is the statistically robust spatial profile of the eigenchannels.[47]

Molecular orbitals

In quantum mechanics, and in particular in atomic and molecular physics, within the Hartree–Fock theory, the atomic and molecular orbitals can be defined by the eigenvectors of the Fock operator. The corresponding eigenvalues are interpreted as ionization potentials via Koopmans' theorem. In this case, the term eigenvector is used in a somewhat more general meaning, since the Fock operator is explicitly dependent on the orbitals and their eigenvalues. Thus, if one wants to underline this aspect, one speaks of nonlinear eigenvalue problems. Such equations are usually solved by an iteration procedure, called in this case the self-consistent field method. In quantum chemistry, one often represents the Hartree–Fock equation in a non-orthogonal basis set. This particular representation is a generalized eigenvalue problem called the Roothaan equations.

Geology and glaciology

In geology, especially in the study of glacial till, eigenvectors and eigenvalues are used as a method by which a mass of information of a clast fabric's constituents' orientation and dip can be summarized in a 3-D space by six numbers. In the field, a geologist may collect such data for hundreds or thousands of clasts in a soil sample, which can only be compared graphically such as in a Tri-Plot (Sneed and Folk) diagram,[48][49] or as a Stereonet on a Wulff Net.[50]

The output for the orientation tensor is in the three orthogonal (perpendicular) axes of space. The three eigenvectors are ordered $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3$ by their eigenvalues $E_1 \geq E_2 \geq E_3$;[51] $\mathbf{v}_1$ then is the primary orientation/dip of clast, $\mathbf{v}_2$ is the secondary and $\mathbf{v}_3$ is the tertiary, in terms of strength. The clast orientation is defined as the direction of the eigenvector, on a compass rose of 360°. Dip is measured as the eigenvalue, the modulus of the tensor: this is valued from 0° (no dip) to 90° (vertical). The relative values of $E_1$, $E_2$, and $E_3$ are dictated by the nature of the sediment's fabric. If $E_1 = E_2 = E_3$, the fabric is said to be isotropic. If $E_1 = E_2 > E_3$, the fabric is said to be planar. If $E_1 > E_2 > E_3$, the fabric is said to be linear.[52]

Basic reproduction number

The basic reproduction number ($R_0$) is a fundamental number in the study of how infectious diseases spread. If one infectious person is put into a population of completely susceptible people, then $R_0$ is the average number of people that one typical infectious person will infect. The generation time of an infection is the time, $t_G$, from one person becoming infected to the next person becoming infected. In a heterogeneous population, the next generation matrix defines how many people in the population will become infected after time $t_G$ has passed. The value $R_0$ is then the largest eigenvalue of the next generation matrix.[53][54]
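For a population split into two groups, the largest eigenvalue of the next generation matrix can be written down directly. The matrix entries in the sketch below are hypothetical and chosen only so that the arithmetic is easy to follow.

```python
import math

# Hypothetical next generation matrix for two groups: entry K[i][j] is the
# expected number of new infections in group i caused by one case in group j.
K = [[1.2, 0.3],
     [0.4, 0.8]]

trace = K[0][0] + K[1][1]
det = K[0][0] * K[1][1] - K[0][1] * K[1][0]

# The entries are non-negative, so the discriminant is non-negative and the
# largest eigenvalue is real.
r0 = (trace + math.sqrt(trace * trace - 4 * det)) / 2
print("R0 =", r0)
```

Here the result is approximately 1.4, above the threshold value of 1, so in this illustrative model the number of cases would grow from one generation to the next.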

Eigenfaces

In image processing, processed images of faces can be seen as vectors whose components are the brightnesses of each pixel.[55] The dimension of this vector space is the number of pixels. The eigenvectors of the covariance matrix associated with a large set of normalized pictures of faces are called eigenfaces; this is an example of principal component analysis. They are very useful for expressing any face image as a linear combination of some of them. In the facial recognition branch of biometrics, eigenfaces provide a means of applying data compression to faces for identification purposes. Research has also been carried out on eigen vision systems for determining hand gestures.

Similar to this concept, eigenvoices represent the general direction of variability in human pronunciations of a particular utterance, such as a word in a language. Based on a linear combination of such eigenvoices, a new voice pronunciation of the word can be constructed. These concepts have been found useful in automatic speech recognition systems for speaker adaptation.

See also

- Antieigenvalue theory

- Eigenoperator

- Eigenplane

- Eigenmoments

- Eigenvalue algorithm

- Quantum states

- Jordan normal form

- List of numerical-analysis software

- Nonlinear eigenproblem

- Normal eigenvalue

- Quadratic eigenvalue problem

- Singular value

- Spectrum of a matrix

Notes

- Note:

  - In 1751, Leonhard Euler proved that any body has a principal axis of rotation: Leonhard Euler (presented: October 1751; published: 1760) "Du mouvement d'un corps solide quelconque lorsqu'il tourne autour d'un axe mobile" (On the movement of any solid body while it rotates around a moving axis), Histoire de l'Académie royale des sciences et des belles lettres de Berlin, pp. 176–227. On p. 212, Euler proves that any body contains a principal axis of rotation: "Théorem. 44. De quelque figure que soit le corps, on y peut toujours assigner un tel axe, qui passe par son centre de gravité, autour duquel le corps peut tourner librement & d'un mouvement uniforme." (Theorem. 44. Whatever be the shape of the body, one can always assign to it such an axis, which passes through its center of gravity, around which it can rotate freely and with a uniform motion.)

  - In 1755, Johann Andreas Segner proved that any body has three principal axes of rotation: Johann Andreas Segner, Specimen theoriae turbinum [Essay on the theory of tops (i.e., rotating bodies)] (Halle ("Halae"), (Germany): Gebauer, 1755). (https://books.google.com/books?id=29p. xxviiii [29]), Segner derives a third-degree equation in t, which proves that a body has three principal axes of rotation. He then states (on the same page): "Non autem repugnat tres esse eiusmodi positiones plani HM, quia in aequatione cubica radices tres esse possunt, et tres tangentis t valores." (However, it is not inconsistent [that there] be three such positions of the plane HM, because in cubic equations, [there] can be three roots, and three values of the tangent t.)

  - The relevant passage of Segner's work was discussed briefly by Arthur Cayley. See: A. Cayley (1862) "Report on the progress of the solution of certain special problems of dynamics," Report of the Thirty-second meeting of the British Association for the Advancement of Science; held at Cambridge in October 1862, 32: 184–252; see especially pp. 225–226.

- Kline 1972, pp. 807–808. Augustin Cauchy (1839) "Mémoire sur l'intégration des équations linéaires" (Memoir on the integration of linear equations), Comptes rendus, 8: 827–830, 845–865, 889–907, 931–937. From p. 827: "On sait d'ailleurs qu'en suivant la méthode de Lagrange, on obtient pour valeur générale de la variable prinicipale une fonction dans laquelle entrent avec la variable principale les racines d'une certaine équation que j'appellerai l'équation caractéristique, le degré de cette équation étant précisément l'order de l'équation différentielle qu'il s'agit d'intégrer." (One knows, moreover, that by following Lagrange's method, one obtains for the general value of the principal variable a function in which there appear, together with the principal variable, the roots of a certain equation that I will call the "characteristic equation", the degree of this equation being precisely the order of the differential equation that must be integrated.)

- See:

  - David Hilbert (1904) "Grundzüge einer allgemeinen Theorie der linearen Integralgleichungen. (Erste Mitteilung)" (Fundamentals of a general theory of linear integral equations. (First report)), Nachrichten von der Gesellschaft der Wissenschaften zu Göttingen, Mathematisch-Physikalische Klasse (News of the Philosophical Society at Göttingen, mathematical-physical section), pp. 49–91. From p. 51: "Insbesondere in dieser ersten Mitteilung gelange ich zu Formeln, die die Entwickelung einer willkürlichen Funktion nach gewissen ausgezeichneten Funktionen, die ich 'Eigenfunktionen' nenne, liefern:..." (In particular, in this first report I arrive at formulas that provide the [series] development of an arbitrary function in terms of some distinctive functions, which I call eigenfunctions:...) Later on the same page: "Dieser Erfolg ist wesentlich durch den Umstand bedingt, daß ich nicht, wie es bisher geschah, in erster Linie auf den Beweis für die Existenz der Eigenwerte ausgehe,..." (This success is mainly attributable to the fact that I do not, as it has happened until now, first of all aim at a proof of the existence of eigenvalues,...)

  - For the origin and evolution of the terms eigenvalue, characteristic value, etc., see: Earliest Known Uses of Some of the Words of Mathematics (E)

- For a proof of this lemma, see Roman 2008, Theorem 8.2 on p. 186; Shilov 1977, p. 109; Hefferon 2001, p. 364; Beezer 2006, Theorem EDELI on p. 469; and Lemma for linear independence of eigenvectors

- By doing Gaussian elimination over formal power series truncated to $n$ terms it is possible to get away with $O(n^4)$ operations, but that does not take combinatorial explosion into account.

Citations

- Burden & Faires 1993, p. 401.

- Gilbert Strang. "6: Eigenvalues and Eigenvectors". Introduction to Linear Algebra (PDF) (5 ed.). Wellesley-Cambridge Press.

- Herstein 1964, pp. 228, 229.

- Nering 1970, p. 38.

- Weisstein n.d.

- Betteridge 1965.

- "Eigenvector and Eigenvalue". www.mathsisfun.com. Retrieved 19 August 2020.

- Press et al. 2007, p. 536.

- Wolfram.com: Eigenvector.

- Nering 1970, p. 107.

- Hawkins 1975, §2.

- Hawkins 1975, §3.

- Kline 1972, p. 673.

- Kline 1972, pp. 807–808.

- Kline 1972, pp. 715–716.

- Kline 1972, pp. 706–707.

- Kline 1972, p. 1063.

- Aldrich 2006.

- Francis 1961, pp. 265–271.

- Kublanovskaya 1962.

- Golub & Van Loan 1996, §7.3.

- Meyer 2000, §7.3.

- Cornell University Department of Mathematics (2016) Lower-Level Courses for Freshmen and Sophomores. Accessed on 2016-03-27.

- University of Michigan Mathematics (2016) Math Course Catalogue. Archived 2015-11-01 at the Wayback Machine. Accessed on 2016-03-27.

- Press et al. 2007, p. 38.

- Fraleigh 1976, p. 358.

- Golub & Van Loan 1996, p. 316.

- Anton 1987, pp. 305, 307.

- Beauregard & Fraleigh 1973, p. 307.

- Herstein 1964, p. 272.

- Nering 1970, pp. 115–116.

- Herstein 1964, p. 290.

- Nering 1970, p. 116.

- Wolchover 2019.

- Denton et al. 2022.

- Van Mieghem 2014.

- Van Mieghem 2024.

- Korn & Korn 2000, Section 14.3.5a.

- Friedberg, Insel & Spence 1989, p. 217.

- Roman 2008, p. 186 §8.

- Nering 1970, p. 107; Shilov 1977, p. 109; Lemma for the eigenspace.

- Lipschutz & Lipson 2002, p. 111.

- Roman 2008, p. 189 §8.

- Trefethen & Bau 1997.

- Vellekoop & Mosk 2007, pp. 2309–2311.

- Rotter & Gigan 2017, p. 15005.

- Bender et al. 2020, p. 165901.

- Graham & Midgley 2000, pp. 1473–1477.

- Sneed & Folk 1958, pp. 114–150.

- Knox-Robinson & Gardoll 1998, p. 243.

- Busche, Christian; Schiller, Beate. "Endogene Geologie - Ruhr-Universität Bochum". www.ruhr-uni-bochum.de.

- Benn & Evans 2004, pp. 103–107.

- Diekmann, Heesterbeek & Metz 1990, pp. 365–382.

- Heesterbeek & Diekmann 2000.

- Xirouhakis, Votsis & Delopoulus 2004.

Sources

- Aldrich, John (2006), "Eigenvalue, eigenfunction, eigenvector, and related terms", in Miller, Jeff (ed.), Earliest Known Uses of Some of the Words of Mathematics

- Anton, Howard (1987), Elementary Linear Algebra (5th ed.), New York: Wiley, ISBN 0-471-84819-0

- Beauregard, Raymond A.; Fraleigh, John B. (1973), A First Course In Linear Algebra: with Optional Introduction to Groups, Rings, and Fields, Boston: Houghton Mifflin Co., ISBN 0-395-14017-X

- Beezer, Robert A. (2006), A first course in linear algebra, Free online book under GNU licence, University of Puget Sound

- Bender, Nicholas; Yamilov, Alexey; Yilmaz, Hasan; Cao, Hui (14 October 2020). "Fluctuations and Correlations of Transmission Eigenchannels in Diffusive Media". Physical Review Letters. 125 (16): 165901. arXiv:2004.12167. Bibcode:2020PhRvL.125p5901B. doi:10.1103/physrevlett.125.165901. ISSN 0031-9007. PMID 33124845. S2CID 216553547.

- Benn, D.; Evans, D. (2004), A Practical Guide to the study of Glacial Sediments, London: Arnold, pp. 103–107

- Betteridge, Harold T. (1965), The New Cassell's German Dictionary, New York: Funk & Wagnall, LCCN 58-7924

- Burden, Richard L.; Faires, J. Douglas (1993), Numerical Analysis (5th ed.), Boston: Prindle, Weber and Schmidt, ISBN 0-534-93219-3

- Denton, Peter B.; Parke, Stephen J.; Tao, Terence; Zhang, Xining (January 2022). "Eigenvectors from Eigenvalues: A Survey of a Basic Identity in Linear Algebra" (PDF). Bulletin of the American Mathematical Society. 59 (1): 31–58. arXiv:1908.03795. doi:10.1090/bull/1722. S2CID 213918682. Archived (PDF) from the original on 19 January 2022.

- Diekmann, O; Heesterbeek, JA; Metz, JA (1990), "On the definition and the computation of the basic reproduction ratio R0 in models for infectious diseases in heterogeneous populations", Journal of Mathematical Biology, 28 (4): 365–382, doi:10.1007/BF00178324, hdl:1874/8051, PMID 2117040, S2CID 22275430

- Fraleigh, John B. (1976), A First Course In Abstract Algebra (2nd ed.), Reading: Addison-Wesley, ISBN 0-201-01984-1

- Francis, J. G. F. (1961), "The QR Transformation, I (part 1)", The Computer Journal, 4 (3): 265–271, doi:10.1093/comjnl/4.3.265

- Francis, J. G. F. (1962), "The QR Transformation, II (part 2)", The Computer Journal, 4 (4): 332–345, doi:10.1093/comjnl/4.4.332

- Friedberg, Stephen H.; Insel, Arnold J.; Spence, Lawrence E. (1989), Linear algebra (2nd ed.), Englewood Cliffs, NJ: Prentice Hall, ISBN 0-13-537102-3

- Golub, Gene H.; Van Loan, Charles F. (1996), Matrix computations (3rd ed.), Baltimore, MD: Johns Hopkins University Press, ISBN 978-0-8018-5414-9

- Graham, D.; Midgley, N. (2000), "Graphical representation of particle shape using triangular diagrams: an Excel spreadsheet method", Earth Surface Processes and Landforms, 25 (13): 1473–1477, Bibcode:2000ESPL...25.1473G, doi:10.1002/1096-9837(200012)25:13<1473::AID-ESP158>3.0.CO;2-C, S2CID 128825838

- Hawkins, T. (1975), "Cauchy and the spectral theory of matrices", Historia Mathematica, 2: 1–29, doi:10.1016/0315-0860(75)90032-4

- Heesterbeek, J. A. P.; Diekmann, Odo (2000), Mathematical epidemiology of infectious diseases, Wiley series in mathematical and computational biology, West Sussex, England: John Wiley & Sons

- Hefferon, Jim (2001), Linear Algebra, Colchester, VT: Online book, St Michael's College

- Herstein, I. N. (1964), Topics In Algebra, Waltham: Blaisdell Publishing Company, ISBN 978-1114541016

- Kline, Morris (1972), Mathematical thought from ancient to modern times, Oxford University Press, ISBN 0-19-501496-0

- Knox-Robinson, C.; Gardoll, Stephen J. (1998), "GIS-stereoplot: an interactive stereonet plotting module for ArcView 3.0 geographic information system", Computers & Geosciences, 24 (3): 243, Bibcode:1998CG.....24..243K, doi:10.1016/S0098-3004(97)00122-2

- Korn, Granino A.; Korn, Theresa M. (2000), "Mathematical Handbook for Scientists and Engineers: Definitions, Theorems, and Formulas for Reference and Review", New York: McGraw-Hill (2nd Revised ed.), Bibcode:1968mhse.book.....K, ISBN 0-486-41147-8

- Kublanovskaya, Vera N. (1962), "On some algorithms for the solution of the complete eigenvalue problem", USSR Computational Mathematics and Mathematical Physics, 1 (3): 637–657, doi:10.1016/0041-5553(63)90168-X

- Lipschutz, Seymour; Lipson, Marc (12 August 2002). Schaum's Easy Outline of Linear Algebra. McGraw Hill Professional. p. 111. ISBN 978-007139880-0.

- Meyer, Carl D. (2000), Matrix analysis and applied linear algebra, Philadelphia: Society for Industrial and Applied Mathematics (SIAM), ISBN 978-0-89871-454-8

- Nering, Evar D. (1970), Linear Algebra and Matrix Theory (2nd ed.), New York: Wiley, LCCN 76091646

- Press, William H.; Teukolsky, Saul A.; Vetterling, William T.; Flannery, Brian P. (2007), Numerical Recipes: The Art of Scientific Computing (3rd ed.), Cambridge University Press, ISBN 978-0521880688

- Roman, Steven (2008), Advanced linear algebra (3rd ed.), New York: Springer Science + Business Media, ISBN 978-0-387-72828-5

- Rotter, Stefan; Gigan, Sylvain (2 March 2017). "Light fields in complex media: Mesoscopic scattering meets wave control". Reviews of Modern Physics. 89 (1): 015005. arXiv:1702.05395. Bibcode:2017RvMP...89a5005R. doi:10.1103/RevModPhys.89.015005. S2CID 119330480.

- Shilov, Georgi E. (1977), Linear algebra, Translated and edited by Richard A. Silverman, New York: Dover Publications, ISBN 0-486-63518-X

- Sneed, E. D.; Folk, R. L. (1958), "Pebbles in the lower Colorado River, Texas, a study of particle morphogenesis", Journal of Geology, 66 (2): 114–150, Bibcode:1958JG.....66..114S, doi:10.1086/626490, S2CID 129658242

- Trefethen, Lloyd N.; Bau, David (1997), Numerical Linear Algebra, SIAM

- Van Mieghem, Piet (18 January 2014). "Graph eigenvectors, fundamental weights and centrality metrics for nodes in networks". arXiv:1401.4580 [math.SP].

- Vellekoop, I. M.; Mosk, A. P. (15 August 2007). "Focusing coherent light through opaque strongly scattering media". Optics Letters. 32 (16): 2309–2311. Bibcode:2007OptL...32.2309V. doi:10.1364/OL.32.002309. ISSN 1539-4794. PMID 17700768. S2CID 45359403.

- Weisstein, Eric W. "Eigenvector". mathworld.wolfram.com. Retrieved 4 August 2019.

- Weisstein, Eric W. (n.d.). "Eigenvalue". mathworld.wolfram.com. Retrieved 19 August 2020.

- Wolchover, Natalie (13 November 2019). "Neutrinos Lead to Unexpected Discovery in Basic Math". Quanta Magazine. Retrieved 27 November 2019.

- Xirouhakis, A.; Votsis, G.; Delopoulus, A. (2004), Estimation of 3D motion and structure of human faces (PDF), National Technical University of Athens

- Van Mieghem, P. (2024). "Eigenvector components of symmetric, graph-related matrices". Linear Algebra and Its Applications. 692: 91–134. doi:10.1016/j.laa.2024.03.035.

Further reading

- Golub, Gene F.; van der Vorst, Henk A. (2000), "Eigenvalue Computation in the 20th Century" (PDF), Journal of Computational and Applied Mathematics, 123 (1–2): 35–65, Bibcode:2000JCoAM.123...35G, doi:10.1016/S0377-0427(00)00413-1, hdl:1874/2663

- Hill, Roger (2009). "λ – Eigenvalues". Sixty Symbols. Brady Haran for the University of Nottingham.

- Kuttler, Kenneth (2017), An introduction to linear algebra (PDF), Brigham Young University

- Strang, Gilbert (1993), Introduction to linear algebra, Wellesley, MA: Wellesley-Cambridge Press, ISBN 0-9614088-5-5

- Strang, Gilbert (2006), Linear algebra and its applications, Belmont, CA: Thomson, Brooks/Cole, ISBN 0-03-010567-6

External links

- What are Eigen Values? – non-technical introduction from PhysLink.com's "Ask the Experts"

- Eigen Values and Eigen Vectors Numerical Examples – Tutorial and Interactive Program from Revoledu.

- Introduction to Eigen Vectors and Eigen Values – lecture from Khan Academy

- Eigenvectors and eigenvalues | Essence of linear algebra, chapter 10 – A visual explanation with 3Blue1Brown

- Matrix Eigenvectors Calculator from Symbolab (Click on the bottom right button of the 2×12 grid to select a matrix size. Select an $n \times n$ size (for a square matrix), then fill out the entries numerically and click on the Go button. It can accept complex numbers as well.)

Wikiversity uses introductory physics to introduce Eigenvalues and eigenvectors

Theory

- Computation of Eigenvalues

- Numerical solution of eigenvalue problems. Edited by Zhaojun Bai, James Demmel, Jack Dongarra, Axel Ruhe, and Henk van der Vorst
