Fibonacci heap

| Fibonacci heap | |
|---|---|
| Type | heap |
| Invented | 1984 |
| Invented by | Michael L. Fredman and Robert E. Tarjan |

In computer science, a Fibonacci heap is a data structure for priority queue operations, consisting of a collection of heap-ordered trees. It has a better amortized running time than many other priority queue data structures, including the binary heap and binomial heap. Michael L. Fredman and Robert E. Tarjan developed Fibonacci heaps in 1984 and published them in a scientific journal in 1987. Fibonacci heaps are named after the Fibonacci numbers, which are used in their running time analysis.

The amortized time of every operation on a Fibonacci heap is constant, except delete-min.[1][2] Deleting an element (most often used in the special case of deleting the minimum element) works in $O(\log n)$ amortized time, where $n$ is the size of the heap.[2] This means that, starting from an empty data structure, any sequence of $a$ insert and decrease-key operations and $b$ delete-min operations would take $O(a + b \log n)$ worst-case time, where $n$ is the maximum heap size. In a binary or binomial heap, such a sequence of operations would take $O((a + b) \log n)$ time. A Fibonacci heap is thus better than a binary or binomial heap when $b$ is smaller than $a$ by a non-constant factor. It is also possible to merge two Fibonacci heaps in constant amortized time, improving on the logarithmic merge time of a binomial heap, and improving on binary heaps, which cannot handle merges efficiently.
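To make the comparison of the two bounds concrete, the following sketch evaluates $a + b \log n$ and $(a + b) \log n$ for one workload. The workload figures and the function names are invented for illustration, and the constant factors hidden by the O-notation are ignored, so the result says nothing about measured running times.

```python
import math

def fibonacci_heap_estimate(a: int, b: int, n: int) -> float:
    """Order-of-growth estimate a + b*log2(n); constants are ignored."""
    return a + b * math.log2(n)

def binary_heap_estimate(a: int, b: int, n: int) -> float:
    """Order-of-growth estimate (a + b)*log2(n); constants are ignored."""
    return (a + b) * math.log2(n)

# Hypothetical workload: many insert/decrease-key calls, few delete-min calls.
a, b, n = 1_000_000, 1_000, 1_000_000
ratio = binary_heap_estimate(a, b, n) / fibonacci_heap_estimate(a, b, n)
print(f"binary / Fibonacci estimate ratio: {ratio:.1f}")
```

The gap only appears because $b$ is much smaller than $a$ here; with $a = b$ both estimates grow as $a \log n$.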

Using Fibonacci heaps improves the asymptotic running time of algorithms that use priority queues. For example, Dijkstra's algorithm and Prim's algorithm can be made to run in $O(|E| + |V| \log |V|)$ time.

Structure

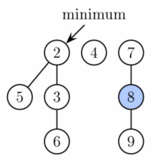

A Fibonacci heap is a collection of trees satisfying the minimum-heap property, that is, the key of a child is always greater than or equal to the key of the parent. This implies that the minimum key is always at the root of one of the trees. Compared with binomial heaps, the structure of a Fibonacci heap is more flexible. The trees do not have a prescribed shape, and in the extreme case the heap can have every element in a separate tree. This flexibility allows some operations to be executed in a lazy manner, postponing the work for later operations. For example, merging heaps is done simply by concatenating the two lists of trees, and the operation decrease-key sometimes cuts a node from its parent and forms a new tree.

However, at some point order needs to be introduced to the heap to achieve the desired running time. In particular, degrees of nodes (here degree means the number of direct children) are kept quite low: every node has degree at most $O(\log n)$, and the size of a subtree rooted in a node of degree $k$ is at least $F_{k+2}$, where $F_i$ is the $i$th Fibonacci number. This is achieved by the rule that at most one child can be cut off each non-root node. When a second child is cut, the node itself needs to be cut from its parent and becomes the root of a new tree (see Proof of degree bounds, below). The number of trees is decreased in the operation delete-min, where trees are linked together.

As a result of a relaxed structure, some operations can take a long time while others are done very quickly. For the amortized running time analysis, we use the potential method, in that we pretend that very fast operations take a little bit longer than they actually do. This additional time is then later combined and subtracted from the actual running time of slow operations. The amount of time saved for later use is measured at any given moment by a potential function. The potential $\phi$ of a Fibonacci heap is given by

$$\phi = t + 2m,$$

where $t$ is the number of trees in the Fibonacci heap, and $m$ is the number of marked nodes. A node is marked if at least one of its children was cut since this node was made a child of another node (all roots are unmarked). The amortized time for an operation is given by the sum of the actual time and $c$ times the difference in potential, where $c$ is a constant (chosen to match the constant factors in the big O notation for the actual time).

Thus, the root of each tree in a heap has one unit of time stored. This unit of time can be used later to link this tree with another tree at amortized time 0. Also, each marked node has two units of time stored. One can be used to cut the node from its parent. If this happens, the node becomes a root and the second unit of time will remain stored in it as in any other root.

Operations

To allow fast deletion and concatenation, the roots of all trees are linked using a circular doubly linked list. The children of each node are also linked using such a list. For each node, we maintain its number of children and whether the node is marked.
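A minimal sketch of such a node layout follows. The field and function names (`left`, `right`, `insert_right`, `unlink`, `ring`) are illustrative choices rather than names taken from the original papers. It shows why a circular doubly linked list permits constant-time insertion and removal without knowing where the list starts.

```python
class Node:
    """One heap node; all field names here are illustrative assumptions."""
    def __init__(self, key):
        self.key = key
        self.parent = None
        self.child = None      # any one child; its siblings form a ring
        self.left = self       # a lone node is a ring of length one
        self.right = self
        self.degree = 0        # number of children
        self.marked = False

def insert_right(anchor, node):
    """Place a lone node immediately to the right of anchor in O(1)."""
    node.left, node.right = anchor, anchor.right
    anchor.right.left = node
    anchor.right = node

def unlink(node):
    """Remove node from its ring in O(1), leaving it as a ring of one."""
    node.left.right = node.right
    node.right.left = node.left
    node.left = node.right = node

def ring(start):
    """Yield every node of the ring containing start exactly once."""
    node = start
    while True:
        yield node
        node = node.right
        if node is start:
            break

a, b, c = Node(3), Node(1), Node(2)
insert_right(a, b)                  # ring: 3 -> 1
insert_right(a, c)                  # ring: 3 -> 2 -> 1
unlink(c)                           # ring: 3 -> 1
keys = [n.key for n in ring(a)]
```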

Find-min

We maintain a pointer to the root containing the minimum key, allowing $O(1)$ access to the minimum. This pointer must be updated during the other operations, which adds only a constant time overhead.

Merge

The merge operation simply concatenates the root lists of the two heaps and sets the minimum pointer to the smaller of the two minima. This can be done in constant time, and the potential does not change, leading again to constant amortized time.

Insert

The insertion operation can be considered a special case of the merge operation, with a single node. The node is simply appended to the root list, increasing the potential by one. The amortized cost is thus still constant.
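The merge and insert operations described above can be sketched as follows. This is a simplified illustration, not a complete heap: child lists, marks and delete-min are left out, and the class and method names (`FibHeap`, `merge`, `insert`, `root_keys`) are assumptions made for the example.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = self    # root ring links; a lone node points at itself
        self.right = self

class FibHeap:
    """Root ring plus minimum pointer only; children are omitted here."""
    def __init__(self):
        self.min = None
        self.size = 0

    def merge(self, other):
        """Absorb other in O(1) by splicing the two root rings together."""
        if other.min is None:
            return
        if self.min is None:
            self.min = other.min
        else:
            a, b = self.min, other.min
            a_right, b_left = a.right, b.left
            a.right, b.left = b, a                    # a now precedes b
            b_left.right, a_right.left = a_right, b_left
            if b.key < a.key:
                self.min = b                          # keep the smaller minimum
        self.size += other.size
        other.min, other.size = None, 0               # other is now empty

    def insert(self, key):
        """Insert as a merge with a one-node heap."""
        single = FibHeap()
        single.min, single.size = Node(key), 1
        node = single.min
        self.merge(single)
        return node

    def root_keys(self):
        """Keys in the root ring, starting at the minimum."""
        keys, node = [], self.min
        while node is not None:
            keys.append(node.key)
            node = node.right
            if node is self.min:
                break
        return keys

h1, h2 = FibHeap(), FibHeap()
for k in (5, 2):
    h1.insert(k)
for k in (7, 1):
    h2.insert(k)
h1.merge(h2)
```

Neither operation touches more than a fixed number of pointers, which is where the constant actual cost comes from.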

Delete-min

The delete-min operation does most of the work in restoring the structure of the heap. It has three phases:

- The root containing the minimum element is removed, and each of its $d$ children becomes a new root. It takes time $O(d)$ to process these new roots, and the potential increases by $d - 1$. Therefore, the amortized running time of this phase is $O(d) = O(\log n)$.

- There may be up to $n$ roots. We therefore decrease the number of roots by successively linking together roots of the same degree. When two roots have the same degree, we make the one with the larger key a child of the other, so that the minimum-heap property is observed. The degree of the smaller root increases by one. This is repeated until every root has a different degree. To find trees of the same degree efficiently, we use an array of length $O(\log n)$ in which we keep a pointer to one root of each degree. When a second root is found of the same degree, the two are linked and the array is updated. The actual running time is $O(\log n + m)$, where $m$ is the number of roots at the beginning of the second phase. In the end, we will have at most $O(\log n)$ roots (because each has a different degree). Therefore, the difference in the potential from before to after this phase is $O(\log n) - m$. Thus, the amortized running time is $O(\log n + m) + c\,(O(\log n) - m)$. By choosing a sufficiently large $c$ such that the terms in $m$ cancel out, this simplifies to $O(\log n)$.

- Search the final list of roots to find the minimum, and update the minimum pointer accordingly. This takes $O(\log n)$ time, because the number of roots has been reduced.

Overall, the amortized time of this operation is $O(\log n)$, provided that $d = O(\log n)$. The proof of this is given in the following section.
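The three phases can be sketched as below. To stay short, this illustration deliberately departs from the layout described earlier: roots and children are kept in Python lists instead of circular linked lists, and a dictionary keyed by degree stands in for the array of length $O(\log n)$. Removing the minimum from a Python list is therefore not constant time, so the sketch shows the logic of the phases, not the stated bounds. All names are hypothetical.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.parent = None
        self.children = []     # simplification: a list, not a ring
        self.marked = False

    @property
    def degree(self):
        return len(self.children)

class FibHeap:
    def __init__(self):
        self.roots = []        # simplification: a list, not a ring
        self.min = None

    def insert(self, key):
        node = Node(key)
        self.roots.append(node)
        if self.min is None or key < self.min.key:
            self.min = node
        return node

    def delete_min(self):
        smallest = self.min
        if smallest is None:
            raise IndexError("delete_min from an empty heap")
        # Phase 1: remove the minimum root and promote its children.
        self.roots.remove(smallest)
        for child in smallest.children:
            child.parent = None
            child.marked = False           # roots are always unmarked
            self.roots.append(child)
        # Phase 2: link roots of equal degree until all degrees differ.
        by_degree = {}
        for root in self.roots:
            while root.degree in by_degree:
                other = by_degree.pop(root.degree)
                if other.key < root.key:
                    root, other = other, root
                other.parent = root        # larger key becomes the child
                root.children.append(other)
            by_degree[root.degree] = root
        self.roots = list(by_degree.values())
        # Phase 3: scan the shortened root list for the new minimum.
        self.min = min(self.roots, key=lambda r: r.key, default=None)
        return smallest.key

demo = FibHeap()
for k in (7, 3, 9, 1, 5, 8, 2, 6):
    demo.insert(k)
drained = [demo.delete_min() for _ in range(8)]
```

Draining the heap returns the keys in ascending order, which is a convenient sanity check for the linking step.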

Decrease-key

If decreasing the key of a node $x$ causes it to become smaller than its parent, then it is cut from its parent, becoming a new unmarked root. If it is also less than the minimum key, then the minimum pointer is updated.

We then initiate a series of cascading cuts, starting with the parent of $x$. As long as the current node is marked, it is cut from its parent and made an unmarked root. Its original parent is then considered. This process stops when we reach an unmarked node $y$. If $y$ is not a root, it is marked. In this process we introduce some number, say $k$, of new trees. Except possibly $x$, each of these new trees loses its original mark. The terminating node $y$ may become marked. Therefore, the change in the number of marked nodes is between $-k$ and $-k + 2$. The resulting change in potential is at most $k + 2(-k + 2) = -k + 4$. The actual time required to perform the cutting was $O(k)$. Hence, the amortized time is $O(k) + c\,(-k + 4)$, which is constant, provided $c$ is sufficiently large.
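The cut and cascading-cut logic can be sketched as follows. As a simplification, children are stored in a Python list, so removing a child is not constant time as it would be with the circular list described earlier. The names `_cut` and `decrease_key` and the hand-built example tree are assumptions for illustration.

```python
class Node:
    def __init__(self, key, parent=None):
        self.key = key
        self.parent = parent
        self.children = []             # simplification: a list, not a ring
        self.marked = False
        if parent is not None:
            parent.children.append(self)

class FibHeap:
    def __init__(self):
        self.roots = []
        self.min = None

    def _cut(self, node):
        """Detach node from its parent and make it an unmarked root."""
        node.parent.children.remove(node)
        node.parent = None
        node.marked = False
        self.roots.append(node)

    def decrease_key(self, x, new_key):
        if new_key > x.key:
            raise ValueError("new key is larger than the current key")
        x.key = new_key
        parent = x.parent
        if parent is not None and x.key < parent.key:
            self._cut(x)
            # Cascading cuts: move upward while the current node is marked.
            y = parent
            while y.parent is not None and y.marked:
                next_up = y.parent
                self._cut(y)
                y = next_up
            # y is the unmarked terminating node; mark it unless it is a root.
            if y.parent is not None:
                y.marked = True
        if self.min is None or x.key < self.min.key:
            self.min = x

# Hand-built chain 1 -> 5 -> 10 -> 20 in which 5 and 10 have each lost a child.
heap = FibHeap()
r = Node(1)
a = Node(5, r)
b = Node(10, a)
c = Node(20, b)
a.marked = b.marked = True
heap.roots, heap.min = [r], r
heap.decrease_key(c, 0)                # cuts c, then b, then a
root_keys = [n.key for n in heap.roots]
```

In this example three new trees are created and two marks are removed, consistent with the stated range of $-k$ to $-k + 2$ for $k = 3$.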

Proof of degree bounds

The amortized performance of a Fibonacci heap depends on the degree (number of children) of any tree root being $O(\log n)$, where $n$ is the size of the heap. Here we show that the (sub)tree rooted at any node $x$ of degree $d$ in the heap must have size at least $F_{d+2}$, where $F_i$ is the $i$th Fibonacci number. The degree bound follows from this and the fact (easily proved by induction) that $F_{d+2} \ge \varphi^d$ for all integers $d \ge 0$, where $\varphi = (1 + \sqrt{5})/2 \approx 1.618$ is the golden ratio. We then have $n \ge F_{d+2} \ge \varphi^d$, and taking the log to base $\varphi$ of both sides gives $d \le \log_\varphi n$ as required.

Let $x$ be an arbitrary node in a Fibonacci heap, not necessarily a root. Define $\mathrm{size}(x)$ to be the size of the tree rooted at $x$ (the number of descendants of $x$, including $x$ itself). We prove by induction on the height of $x$ (the length of the longest path from $x$ to a descendant leaf) that $\mathrm{size}(x) \ge F_{d+2}$, where $d$ is the degree of $x$.

Base case: If $x$ has height $0$, then $d = 0$, and $\mathrm{size}(x) = 1 \ge F_2$.

Inductive case: Suppose $x$ has positive height and degree $d > 0$. Let $y_1, y_2, \dots, y_d$ be the children of $x$, indexed in order of the times they were most recently made children of $x$ ($y_1$ being the earliest and $y_d$ the latest), and let $c_1, c_2, \dots, c_d$ be their respective degrees.

We claim that $c_i \ge i - 2$ for each $i$. Just before $y_i$ was made a child of $x$, the nodes $y_1, \dots, y_{i-1}$ were already children of $x$, and so $x$ must have had degree at least $i - 1$ at that time. Since trees are combined only when the degrees of their roots are equal, it must have been the case that $y_i$ also had degree at least $i - 1$ at the time when it became a child of $x$. From that time to the present, $y_i$ could have lost at most one child (as guaranteed by the marking process), and so its current degree $c_i$ is at least $i - 2$. This proves the claim.

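Since the heights of all the $y_i$ are strictly less than that of $x$, we can apply the inductive hypothesis to them to get

$$\mathrm{size}(y_i) \ge F_{c_i + 2} \ge F_{(i-2)+2} = F_i.$$

The nodes $x$ and $y_1$ each contribute at least 1 to $\mathrm{size}(x)$, and so we have

$$\mathrm{size}(x) \ge 2 + \sum_{i=2}^{d} \mathrm{size}(y_i) \ge 2 + \sum_{i=2}^{d} F_i = 1 + \sum_{i=0}^{d} F_i = F_{d+2},$$

where the last step is an identity for Fibonacci numbers. This gives the desired lower bound on $\mathrm{size}(x)$.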
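The two numerical facts the proof relies on can be spot-checked for small $d$. This is only a sanity check over a finite range, not a substitute for the inductive arguments. The helper names `fib` and `max_degree` are illustrative, and the convention $F_0 = 0$, $F_1 = 1$ is assumed.

```python
import math

PHI = (1 + math.sqrt(5)) / 2   # golden ratio

def fib(i: int) -> int:
    """Return F_i with F_0 = 0 and F_1 = 1."""
    a, b = 0, 1
    for _ in range(i):
        a, b = b, a + b
    return a

for d in range(40):
    # Fact used for the degree bound: F_{d+2} >= phi^d.
    assert fib(d + 2) >= PHI ** d
    # Identity used in the last step: 1 + F_0 + ... + F_d = F_{d+2}.
    assert 1 + sum(fib(i) for i in range(d + 1)) == fib(d + 2)

def max_degree(n: int) -> int:
    """Largest degree allowed by d <= log_phi(n) for a heap of n >= 1 nodes."""
    return math.floor(math.log(n, PHI))

bound_for_million = max_degree(1_000_000)
```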

Performance

Although Fibonacci heaps look very efficient, they have the following two drawbacks:[3]

- They are complicated to implement.

- They are not as efficient in practice when compared with theoretically less efficient forms of heaps.[4] In their simplest version, they require manipulation of four pointers per node, whereas only two or three pointers per node are needed in other structures, such as the binomial heap or pairing heap. This results in large memory consumption per node and high constant factors on all operations.

Although the total running time of a sequence of operations starting with an empty structure is bounded by the bounds given above, some (very few) operations in the sequence can take very long to complete (in particular, delete-min has linear running time in the worst case). For this reason, Fibonacci heaps and other amortized data structures may not be appropriate for real-time systems.

It is possible to create a data structure which has the same worst-case performance as the Fibonacci heap has amortized performance. One such structure, the Brodal queue,[5] is, in the words of the creator, "quite complicated" and "[not] applicable in practice." Invented in 2012, the strict Fibonacci heap[6] is a simpler (compared to Brodal's) structure with the same worst-case bounds. Despite being simpler, experiments show that in practice the strict Fibonacci heap performs slower than the more complicated Brodal queue and also slower than the basic Fibonacci heap.[7][8] The run-relaxed heaps of Driscoll et al. give good worst-case performance for all Fibonacci heap operations except merge.[9] Recent experimental results suggest that the Fibonacci heap is more efficient in practice than most of its later derivatives, including quake heaps, violation heaps, strict Fibonacci heaps, and rank pairing heaps, but less efficient than pairing heaps or array-based heaps.[8]

Summary of running times

Here are time complexities[10] of various heap data structures. The abbreviation am. indicates that the given complexity is amortized; otherwise it is a worst-case complexity. For the meaning of "O(f)" and "Θ(f)" see Big O notation. Names of operations assume a min-heap.

| Operation | find-min | delete-min | decrease-key | insert | meld | make-heap[a] |
|---|---|---|---|---|---|---|
| Binary[10] | Θ(1) | Θ(log n) | Θ(log n) | Θ(log n) | Θ(n) | Θ(n) |
| Skew[11] | Θ(1) | O(log n) am. | O(log n) am. | O(log n) am. | O(log n) am. | Θ(n) am. |
| Leftist[12] | Θ(1) | Θ(log n) | Θ(log n) | Θ(log n) | Θ(log n) | Θ(n) |
| Binomial[10][14] | Θ(1) | Θ(log n) | Θ(log n) | Θ(1) am. | Θ(log n)[b] | Θ(n) |
| Skew binomial[15] | Θ(1) | Θ(log n) | Θ(log n) | Θ(1) | Θ(log n)[b] | Θ(n) |
| 2–3 heap[17] | Θ(1) | O(log n) am. | Θ(1) | Θ(1) am. | O(log n)[b] | Θ(n) |
| Bottom-up skew[11] | Θ(1) | O(log n) am. | O(log n) am. | Θ(1) am. | Θ(1) am. | Θ(n) am. |
| Pairing[18] | Θ(1) | O(log n) am. | o(log n) am.[c] | Θ(1) | Θ(1) | Θ(n) |
| Rank-pairing[21] | Θ(1) | O(log n) am. | Θ(1) am. | Θ(1) | Θ(1) | Θ(n) |
| Fibonacci[10][2] | Θ(1) | O(log n) am. | Θ(1) am. | Θ(1) | Θ(1) | Θ(n) |
| Strict Fibonacci[22][d] | Θ(1) | Θ(log n) | Θ(1) | Θ(1) | Θ(1) | Θ(n) |
| Brodal[23][d] | Θ(1) | Θ(log n) | Θ(1) | Θ(1) | Θ(1) | Θ(n)[24] |

- [a] make-heap is the operation of building a heap from a sequence of n unsorted elements. It can be done in Θ(n) time whenever meld runs in O(log n) time (where both complexities can be amortized).[11][12] Another algorithm achieves Θ(n) for binary heaps.[13]

- [b] For persistent heaps (not supporting decrease-key), a generic transformation reduces the cost of meld to that of insert, while the new cost of delete-min is the sum of the old costs of delete-min and meld.[16] Here, it makes meld run in Θ(1) time (amortized, if the cost of insert is amortized) while delete-min still runs in O(log n). Applied to skew binomial heaps, it yields Brodal-Okasaki queues, persistent heaps with optimal worst-case complexities.[15]

- [c] Lower bound of $\Omega(\log \log n)$,[19] upper bound of $O(2^{2\sqrt{\log \log n}})$.[20]

- [d] Brodal queues and strict Fibonacci heaps achieve optimal worst-case complexities for heaps. They were first described as imperative data structures. The Brodal-Okasaki queue is a persistent data structure achieving the same optimum, except that decrease-key is not supported.

References

- [1] Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L.; Stein, Clifford (2001) [1990]. "Chapter 20: Fibonacci Heaps". Introduction to Algorithms (2nd ed.). MIT Press and McGraw-Hill. pp. 476–497. ISBN 0-262-03293-7. Third edition p. 518.

- [2] Fredman, Michael Lawrence; Tarjan, Robert E. (July 1987). "Fibonacci heaps and their uses in improved network optimization algorithms" (PDF). Journal of the Association for Computing Machinery. 34 (3): 596–615. CiteSeerX 10.1.1.309.8927. doi:10.1145/28869.28874.

- [3] Fredman, Michael L.; Sedgewick, Robert; Sleator, Daniel D.; Tarjan, Robert E. (1986). "The pairing heap: a new form of self-adjusting heap" (PDF). Algorithmica. 1 (1–4): 111–129. doi:10.1007/BF01840439. S2CID 23664143.

- [4] http://www.cs.princeton.edu/~wayne/kleinberg-tardos/pdf/FibonacciHeaps.pdf, p. 79

- [5] Brodal, Gerth Stølting (1996). "Worst-Case Efficient Priority Queues". Proc. 7th ACM-SIAM Symposium on Discrete Algorithms. Society for Industrial and Applied Mathematics: 52–58. CiteSeerX 10.1.1.43.8133. ISBN 0-89871-366-8.

- [6] Brodal, G. S. L.; Lagogiannis, G.; Tarjan, R. E. (2012). Strict Fibonacci heaps (PDF). Proceedings of the 44th symposium on Theory of Computing - STOC '12. p. 1177. doi:10.1145/2213977.2214082. ISBN 978-1-4503-1245-5.

- [7] Mrena, Michal; Sedlacek, Peter; Kvassay, Miroslav (June 2019). "Practical Applicability of Advanced Implementations of Priority Queues in Finding Shortest Paths". 2019 International Conference on Information and Digital Technologies (IDT). Zilina, Slovakia: IEEE. pp. 335–344. doi:10.1109/DT.2019.8813457. ISBN 9781728114019. S2CID 201812705.

- [8] Larkin, Daniel; Sen, Siddhartha; Tarjan, Robert (2014). "A Back-to-Basics Empirical Study of Priority Queues". Proceedings of the Sixteenth Workshop on Algorithm Engineering and Experiments: 61–72. arXiv:1403.0252. Bibcode:2014arXiv1403.0252L. doi:10.1137/1.9781611973198.7. ISBN 978-1-61197-319-8. S2CID 15216766.

- [9] Driscoll, James R.; Gabow, Harold N.; Shrairman, Ruth; Tarjan, Robert E. (November 1988). "Relaxed heaps: An alternative to Fibonacci heaps with applications to parallel computation". Communications of the ACM. 31 (11): 1343–1354. doi:10.1145/50087.50096. S2CID 16078067.

- [10] Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L. (1990). Introduction to Algorithms (1st ed.). MIT Press and McGraw-Hill. ISBN 0-262-03141-8.

- [11] Sleator, Daniel Dominic; Tarjan, Robert Endre (February 1986). "Self-Adjusting Heaps". SIAM Journal on Computing. 15 (1): 52–69. CiteSeerX 10.1.1.93.6678. doi:10.1137/0215004. ISSN 0097-5397.

- [12] Tarjan, Robert (1983). "3.3. Leftist heaps". Data Structures and Network Algorithms. pp. 38–42. doi:10.1137/1.9781611970265. ISBN 978-0-89871-187-5.

- [13] Hayward, Ryan; McDiarmid, Colin (1991). "Average Case Analysis of Heap Building by Repeated Insertion" (PDF). J. Algorithms. 12: 126–153. CiteSeerX 10.1.1.353.7888. doi:10.1016/0196-6774(91)90027-v. Archived from the original (PDF) on 2016-02-05. Retrieved 2016-01-28.

- [14] "Binomial Heap | Brilliant Math & Science Wiki". brilliant.org. Retrieved 2019-09-30.

- [15] Brodal, Gerth Stølting; Okasaki, Chris (November 1996). "Optimal purely functional priority queues". Journal of Functional Programming. 6 (6): 839–857. doi:10.1017/s095679680000201x.

- [16] Okasaki, Chris (1998). "10.2. Structural Abstraction". Purely Functional Data Structures (1st ed.). pp. 158–162. ISBN 9780521631242.

- [17] Takaoka, Tadao (1999). Theory of 2–3 Heaps (PDF). p. 12.

- [18] Iacono, John (2000). "Improved upper bounds for pairing heaps". Proc. 7th Scandinavian Workshop on Algorithm Theory (PDF). Lecture Notes in Computer Science, vol. 1851. Springer-Verlag. pp. 63–77. arXiv:1110.4428. CiteSeerX 10.1.1.748.7812. doi:10.1007/3-540-44985-X_5. ISBN 3-540-67690-2.

- [19] Fredman, Michael Lawrence (July 1999). "On the Efficiency of Pairing Heaps and Related Data Structures" (PDF). Journal of the Association for Computing Machinery. 46 (4): 473–501. doi:10.1145/320211.320214.

- [20] Pettie, Seth (2005). Towards a Final Analysis of Pairing Heaps (PDF). FOCS '05 Proceedings of the 46th Annual IEEE Symposium on Foundations of Computer Science. pp. 174–183. CiteSeerX 10.1.1.549.471. doi:10.1109/SFCS.2005.75. ISBN 0-7695-2468-0.

- [21] Haeupler, Bernhard; Sen, Siddhartha; Tarjan, Robert E. (November 2011). "Rank-pairing heaps" (PDF). SIAM J. Computing. 40 (6): 1463–1485. doi:10.1137/100785351.

- [22] Brodal, Gerth Stølting; Lagogiannis, George; Tarjan, Robert E. (2012). Strict Fibonacci heaps (PDF). Proceedings of the 44th symposium on Theory of Computing - STOC '12. pp. 1177–1184. CiteSeerX 10.1.1.233.1740. doi:10.1145/2213977.2214082. ISBN 978-1-4503-1245-5.

- [23] Brodal, Gerth S. (1996). "Worst-Case Efficient Priority Queues" (PDF). Proc. 7th Annual ACM-SIAM Symposium on Discrete Algorithms. pp. 52–58.

- [24] Goodrich, Michael T.; Tamassia, Roberto (2004). "7.3.6. Bottom-Up Heap Construction". Data Structures and Algorithms in Java (3rd ed.). pp. 338–341. ISBN 0-471-46983-1.