Transpose

In linear algebra, the transpose of a matrix is an operator which flips a matrix over its diagonal; that is, it switches the row and column indices of the matrix $\mathbf{A}$ by producing another matrix, often denoted by $\mathbf{A}^{\operatorname{T}}$ (among other notations).[1]

The transpose of a matrix was introduced in 1858 by the British mathematician Arthur Cayley.[2] In the case of a logical matrix representing a binary relation $R$, the transpose corresponds to the converse relation $R^{\operatorname{T}}$.

Transpose of a matrix

Definition

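The transpose of a matrix $\mathbf{A}$, denoted by $\mathbf{A}^{\operatorname{T}}$,[3] ${}^{\top}\mathbf{A}$, $\mathbf{A}^{\top}$,[4][5] $\mathbf{A}'$,[6] $\mathbf{A}^{\operatorname{tr}}$, ${}^{t}\mathbf{A}$ or $\mathbf{A}^{t}$, may be constructed by any one of the following methods: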

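- Reflect $\mathbf{A}$ over its main diagonal (which runs from top-left to bottom-right) to obtain $\mathbf{A}^{\operatorname{T}}$

- Write the rows of $\mathbf{A}$ as the columns of $\mathbf{A}^{\operatorname{T}}$

- Write the columns of $\mathbf{A}$ as the rows of $\mathbf{A}^{\operatorname{T}}$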

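Formally, the $i$-th row, $j$-th column element of $\mathbf{A}^{\operatorname{T}}$ is the $j$-th row, $i$-th column element of $\mathbf{A}$:

$$\left[\mathbf{A}^{\operatorname{T}}\right]_{ij} = \left[\mathbf{A}\right]_{ji}.$$

If $\mathbf{A}$ is an $m \times n$ matrix, then $\mathbf{A}^{\operatorname{T}}$ is an $n \times m$ matrix.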
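The formal definition translates directly into code. The following is a minimal sketch, assuming a matrix is stored as a list of equal-length rows; the function name `transpose` is chosen here for illustration only.

```python
def transpose(a):
    """Return the transpose of a matrix given as a list of equal-length rows."""
    if not a:
        return []
    m, n = len(a), len(a[0])
    # Entry (i, j) of the result is entry (j, i) of the input,
    # so an m x n input produces an n x m output.
    return [[a[j][i] for j in range(m)] for i in range(n)]


A = [[1, 2, 3],
     [4, 5, 6]]      # a 2 x 3 matrix
At = transpose(A)    # a 3 x 2 matrix
print(At)            # [[1, 4], [2, 5], [3, 6]]
```

The rows of `A` appear as the columns of `At`, matching the second construction method listed above.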

In the case of square matrices, $\mathbf{A}^{\operatorname{T}}$ may also denote the $T$th power of the matrix $\mathbf{A}$. To avoid possible confusion, many authors use left superscripts; that is, they denote the transpose as ${}^{\operatorname{T}}\mathbf{A}$. An advantage of this notation is that no parentheses are needed when exponents are involved: as $({}^{\operatorname{T}}\mathbf{A})^{n} = {}^{\operatorname{T}}(\mathbf{A}^{n})$, the notation ${}^{\operatorname{T}}\mathbf{A}^{n}$ is not ambiguous.

In this article this confusion is avoided by never using the symbol $T$ as a variable name.

Matrix definitions involving transposition

A square matrix whose transpose is equal to itself is called a symmetric matrix; that is, $\mathbf{A}$ is symmetric if

$$\mathbf{A}^{\operatorname{T}} = \mathbf{A}.$$

A square matrix whose transpose is equal to its negative is called a skew-symmetric matrix; that is, $\mathbf{A}$ is skew-symmetric if

$$\mathbf{A}^{\operatorname{T}} = -\mathbf{A}.$$

A square complex matrix whose transpose is equal to the matrix with every entry replaced by its complex conjugate (denoted here with an overline) is called a Hermitian matrix (equivalent to the matrix being equal to its conjugate transpose); that is, $\mathbf{A}$ is Hermitian if

$$\mathbf{A}^{\operatorname{T}} = \overline{\mathbf{A}}.$$

A square complex matrix whose transpose is equal to the negation of its complex conjugate is called a skew-Hermitian matrix; that is, $\mathbf{A}$ is skew-Hermitian if

$$\mathbf{A}^{\operatorname{T}} = -\overline{\mathbf{A}}.$$

A square matrix whose transpose is equal to its inverse is called an orthogonal matrix; that is, $\mathbf{A}$ is orthogonal if

$$\mathbf{A}^{\operatorname{T}} = \mathbf{A}^{-1}.$$

A square complex matrix whose transpose is equal to its conjugate inverse is called a unitary matrix; that is, $\mathbf{A}$ is unitary if

$$\mathbf{A}^{\operatorname{T}} = \overline{\mathbf{A}^{-1}}.$$
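Several of these definitions can be checked mechanically. The sketch below assumes matrices stored as lists of rows with exactly representable entries (integers or small complex numbers), so exact equality is meaningful; with floating-point data a tolerance would be needed instead. All function names are illustrative, and orthogonality is tested through the equivalent condition that the transpose times the matrix is the identity.

```python
def transpose(a):
    return [list(col) for col in zip(*a)]

def conjugate(a):
    # Entrywise complex conjugate (the overline in the definitions).
    return [[complex(x).conjugate() for x in row] for row in a]

def negate(a):
    return [[-x for x in row] for row in a]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def is_symmetric(a):
    return transpose(a) == a

def is_skew_symmetric(a):
    return transpose(a) == negate(a)

def is_hermitian(a):
    return transpose(a) == conjugate(a)

def is_skew_hermitian(a):
    return transpose(a) == negate(conjugate(a))

def is_orthogonal(a):
    n = len(a)
    identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    return matmul(transpose(a), a) == identity


S = [[1, 2], [2, 3]]                  # symmetric
K = [[0, 2], [-2, 0]]                 # skew-symmetric
H = [[2, 1 + 1j], [1 - 1j, 3]]        # Hermitian but not symmetric
R = [[0, -1], [1, 0]]                 # rotation by 90 degrees, orthogonal

assert is_symmetric(S) and is_skew_symmetric(K)
assert is_hermitian(H) and not is_symmetric(H)
assert is_orthogonal(R)
```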

Examples
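A few small illustrative cases, worked directly from the definition (rows become columns): a row vector, a square matrix, and a $3 \times 2$ matrix.

$$\begin{bmatrix} 1 & 2 \end{bmatrix}^{\operatorname{T}} = \begin{bmatrix} 1 \\ 2 \end{bmatrix}, \qquad \begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix}^{\operatorname{T}} = \begin{bmatrix} 1 & 3 \\ 2 & 4 \end{bmatrix}, \qquad \begin{bmatrix} 1 & 2 \\ 3 & 4 \\ 5 & 6 \end{bmatrix}^{\operatorname{T}} = \begin{bmatrix} 1 & 3 & 5 \\ 2 & 4 & 6 \end{bmatrix}.$$

In the square case the diagonal entries 1 and 4 stay in place while the off-diagonal entries 2 and 3 swap.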

Properties

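Let $\mathbf{A}$ and $\mathbf{B}$ be matrices and $c$ be a scalar.

- $\left(\mathbf{A}^{\operatorname{T}}\right)^{\operatorname{T}} = \mathbf{A}$. The operation of taking the transpose is an involution (self-inverse).

- $\left(\mathbf{A} + \mathbf{B}\right)^{\operatorname{T}} = \mathbf{A}^{\operatorname{T}} + \mathbf{B}^{\operatorname{T}}$. The transpose respects addition.

- $\left(c\mathbf{A}\right)^{\operatorname{T}} = c\,\mathbf{A}^{\operatorname{T}}$. The transpose of a scalar is the same scalar. Together with the preceding property, this implies that the transpose is a linear map from the space of $m \times n$ matrices to the space of the $n \times m$ matrices.

- $\left(\mathbf{A}\mathbf{B}\right)^{\operatorname{T}} = \mathbf{B}^{\operatorname{T}}\mathbf{A}^{\operatorname{T}}$. The order of the factors reverses. By induction, this result extends to the general case of multiple matrices, so $\left(\mathbf{A}_1\mathbf{A}_2\cdots\mathbf{A}_{k-1}\mathbf{A}_k\right)^{\operatorname{T}} = \mathbf{A}_k^{\operatorname{T}}\mathbf{A}_{k-1}^{\operatorname{T}}\cdots\mathbf{A}_2^{\operatorname{T}}\mathbf{A}_1^{\operatorname{T}}$.

- $\det\left(\mathbf{A}^{\operatorname{T}}\right) = \det(\mathbf{A})$. The determinant of a square matrix is the same as the determinant of its transpose.

- The dot product of two column vectors $\mathbf{a}$ and $\mathbf{b}$ can be computed as the single entry of the matrix product $\mathbf{a} \cdot \mathbf{b} = \mathbf{a}^{\operatorname{T}}\mathbf{b}$.

- If $\mathbf{A}$ has only real entries, then $\mathbf{A}^{\operatorname{T}}\mathbf{A}$ is a positive-semidefinite matrix.

- $\left(\mathbf{A}^{\operatorname{T}}\right)^{-1} = \left(\mathbf{A}^{-1}\right)^{\operatorname{T}}$. The transpose of an invertible matrix is also invertible, and its inverse is the transpose of the inverse of the original matrix. The notation $\mathbf{A}^{-\operatorname{T}}$ is sometimes used to represent either of these equivalent expressions.

- If $\mathbf{A}$ is a square matrix, then its eigenvalues are equal to the eigenvalues of its transpose, since they share the same characteristic polynomial.

- Over any field $k$, a square matrix $\mathbf{A}$ is similar to $\mathbf{A}^{\operatorname{T}}$.

  - This implies that $\mathbf{A}$ and $\mathbf{A}^{\operatorname{T}}$ have the same invariant factors, which implies they share the same minimal polynomial, characteristic polynomial, and eigenvalues, among other properties.

  - A proof of this property uses the following two observations.

    - Let $\mathbf{A}$ and $\mathbf{B}$ be $n \times n$ matrices over some base field $k$ and let $L$ be a field extension of $k$. If $\mathbf{A}$ and $\mathbf{B}$ are similar as matrices over $L$, then they are similar over $k$. In particular this applies when $L$ is the algebraic closure of $k$.

    - If $\mathbf{A}$ is a matrix over an algebraically closed field in Jordan normal form with respect to some basis, then $\mathbf{A}$ is similar to $\mathbf{A}^{\operatorname{T}}$. This further reduces to proving the same fact when $\mathbf{A}$ is a single Jordan block, which is a straightforward exercise.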
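Several of the algebraic properties listed above (involution, compatibility with addition and scalar multiplication, reversal of products, invariance of the determinant, and the dot product as a matrix product) can be spot-checked numerically. This is a minimal sketch on small integer matrices; the helper names are illustrative, and a check on one example is of course not a proof.

```python
def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(c, a):
    return [[c * x for x in row] for row in a]

def det2(a):
    # Determinant of a 2 x 2 matrix.
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]


A = [[1, 2], [3, 4]]
B = [[0, 1], [5, -2]]
c = 7

assert transpose(transpose(A)) == A                                   # involution
assert transpose(add(A, B)) == add(transpose(A), transpose(B))        # addition
assert transpose(scale(c, A)) == scale(c, transpose(A))               # scalars
assert transpose(matmul(A, B)) == matmul(transpose(B), transpose(A))  # order reverses
assert transpose(matmul(A, B)) != matmul(transpose(A), transpose(B))  # order matters here
assert det2(transpose(A)) == det2(A)                                  # determinant

a = [[1], [2], [3]]   # column vectors as 3 x 1 matrices
b = [[4], [5], [6]]
assert matmul(transpose(a), b) == [[32]]                              # dot product
```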

Products

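If $\mathbf{A}$ is an $m \times n$ matrix and $\mathbf{A}^{\operatorname{T}}$ is its transpose, then the result of matrix multiplication with these two matrices gives two square matrices: $\mathbf{A}\mathbf{A}^{\operatorname{T}}$ is $m \times m$ and $\mathbf{A}^{\operatorname{T}}\mathbf{A}$ is $n \times n$. Furthermore, these products are symmetric matrices. Indeed, the matrix product $\mathbf{A}\mathbf{A}^{\operatorname{T}}$ has entries that are the inner product of a row of $\mathbf{A}$ with a column of $\mathbf{A}^{\operatorname{T}}$. But the columns of $\mathbf{A}^{\operatorname{T}}$ are the rows of $\mathbf{A}$, so the entry corresponds to the inner product of two rows of $\mathbf{A}$. If $p_{ij}$ is the entry of the product, it is obtained from rows $i$ and $j$ in $\mathbf{A}$. The entry $p_{ji}$ is also obtained from these rows, thus $p_{ij} = p_{ji}$, and the product matrix $(p_{ij})$ is symmetric. Similarly, the product $\mathbf{A}^{\operatorname{T}}\mathbf{A}$ is a symmetric matrix.

A quick proof of the symmetry of $\mathbf{A}\mathbf{A}^{\operatorname{T}}$ results from the fact that it is its own transpose:

$$\left(\mathbf{A}\mathbf{A}^{\operatorname{T}}\right)^{\operatorname{T}} = \left(\mathbf{A}^{\operatorname{T}}\right)^{\operatorname{T}}\mathbf{A}^{\operatorname{T}} = \mathbf{A}\mathbf{A}^{\operatorname{T}}.$$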
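As a concrete instance, the two products can be computed for a small $2 \times 3$ matrix. The sketch below uses illustrative helper names and integer entries so that the symmetry check is exact.

```python
def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


A = [[1, 2, 3],
     [4, 5, 6]]                       # m = 2, n = 3

AAt = matmul(A, transpose(A))         # m x m: inner products of rows of A
AtA = matmul(transpose(A), A)         # n x n: inner products of columns of A

print(AAt)   # [[14, 32], [32, 77]]
print(AtA)   # [[17, 22, 27], [22, 29, 36], [27, 36, 45]]

# Each product equals its own transpose.
assert transpose(AAt) == AAt
assert transpose(AtA) == AtA
```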

Implementation of matrix transposition on computers

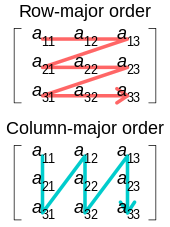

On a computer, one can often avoid explicitly transposing a matrix in memory by simply accessing the same data in a different order. For example, software libraries for linear algebra, such as BLAS, typically provide options to specify that certain matrices are to be interpreted in transposed order to avoid the necessity of data movement.

However, there remain a number of circumstances in which it is necessary or desirable to physically reorder a matrix in memory to its transposed ordering. For example, with a matrix stored in row-major order, the rows of the matrix are contiguous in memory and the columns are discontiguous. If repeated operations need to be performed on the columns, for example in a fast Fourier transform algorithm, transposing the matrix in memory (to make the columns contiguous) may improve performance by increasing memory locality.

Ideally, one might hope to transpose a matrix with minimal additional storage. This leads to the problem of transposing an $n \times m$ matrix in-place, with $O(1)$ additional storage or at most storage much less than $mn$. For $n \neq m$, this involves a complicated permutation of the data elements that is non-trivial to implement in-place. Therefore, efficient in-place matrix transposition has been the subject of numerous research publications in computer science, starting in the late 1950s, and several algorithms have been developed.
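The idea of reading the same data in a different order can be sketched as follows. The class `TransposedView` is hypothetical and written only for illustration; it is not the interface of BLAS or of any particular library. It assumes the matrix is stored row-major in a flat list.

```python
class TransposedView:
    """Read-only transposed view of a row-major matrix stored in a flat list."""

    def __init__(self, data, rows, cols):
        if len(data) != rows * cols:
            raise ValueError("data length does not match rows * cols")
        self._data = data            # shared with the caller, never copied
        self._rows = rows            # shape of the stored matrix
        self._cols = cols
        self.shape = (cols, rows)    # shape seen through the view

    def __getitem__(self, index):
        i, j = index
        if not (0 <= i < self._cols and 0 <= j < self._rows):
            raise IndexError(index)
        # Element (i, j) of the view is element (j, i) of the stored matrix,
        # which sits at offset j * cols + i in row-major order.
        return self._data[j * self._cols + i]


storage = [1, 2, 3,
           4, 5, 6]                  # the 2 x 3 matrix [[1, 2, 3], [4, 5, 6]]
view = TransposedView(storage, rows=2, cols=3)

print(view.shape)    # (3, 2)
print(view[2, 0])    # 3
print(view[0, 1])    # 4
```

No element is moved: only the index arithmetic changes, which is why this approach costs nothing up front but gives strided rather than contiguous access along the rows of the view.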
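The permutation involved can be made concrete with a cycle-following sketch. It assumes a row-major matrix with `rows` rows and `cols` columns stored in a flat list; the element at flat index `k` must move to index `(k * rows) mod (rows * cols - 1)`, while the first and last elements stay put. For simplicity this version tracks visited positions in an auxiliary list of flags, so it does not achieve the $O(1)$ storage bound discussed above; it only illustrates the data movement, and the function name is illustrative.

```python
def transpose_in_place(data, rows, cols):
    """Reorder a flat row-major rows x cols matrix into its cols x rows transpose."""
    size = rows * cols
    if len(data) != size:
        raise ValueError("data length does not match rows * cols")
    last = size - 1
    visited = [False] * size         # auxiliary storage: one flag per element
    for start in range(1, last):     # indices 0 and last are fixed points
        if visited[start]:
            continue
        k = start
        carried = data[start]
        while True:
            dest = (k * rows) % last         # where the carried element belongs
            data[dest], carried = carried, data[dest]
            visited[dest] = True
            k = dest
            if k == start:                   # cycle closed
                break


flat = [1, 2, 3,
        4, 5, 6]                     # 2 x 3, row-major
transpose_in_place(flat, rows=2, cols=3)
print(flat)                          # [1, 4, 2, 5, 3, 6], i.e. 3 x 2 row-major
```

For a square matrix every cycle has length at most two, which is why the square case reduces to swapping pairs across the diagonal; for non-square shapes the cycles can be long and irregular.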

Transposes of linear maps and bilinear forms

As the main use of matrices is to represent linear maps between finite-dimensional vector spaces, the transpose is an operation on matrices that may be seen as the representation of some operation on linear maps.

This leads to a much more general definition of the transpose that works on every linear map, even when linear maps cannot be represented by matrices (such as in the case of infinite-dimensional vector spaces). In the finite-dimensional case, the matrix representing the transpose of a linear map is the transpose of the matrix representing the linear map, independently of the choice of basis.

Transpose of a linear map

Let $X^{\#}$ denote the algebraic dual space of an $R$-module $X$. Let $X$ and $Y$ be $R$-modules. If $u : X \to Y$ is a linear map, then its algebraic adjoint or dual,[8] is the map $u^{\#} : Y^{\#} \to X^{\#}$ defined by $f \mapsto f \circ u$. The resulting functional $u^{\#}(f)$ is called the pullback of $f$ by $u$. The following relation characterizes the algebraic adjoint of $u$:[9]

- $\langle u^{\#}(f), x \rangle = \langle f, u(x) \rangle$ for all $f \in Y^{\#}$ and $x \in X$

where $\langle \cdot, \cdot \rangle$ is the natural pairing (i.e. defined by $\langle h, z \rangle := h(z)$). This definition also applies unchanged to left modules and to vector spaces.[10]

The definition of the transpose may be seen to be independent of any bilinear form on the modules, unlike the adjoint (below).

The continuous dual space of a topological vector space (TVS) $X$ is denoted by $X'$. If $X$ and $Y$ are TVSs then a linear map $u : X \to Y$ is weakly continuous if and only if $u^{\#}(Y') \subseteq X'$, in which case we let ${}^{t}u : Y' \to X'$ denote the restriction of $u^{\#}$ to $Y'$. The map ${}^{t}u$ is called the transpose[11] of $u$.

If the matrix $\mathbf{A}$ describes a linear map with respect to bases of $V$ and $W$, then the matrix $\mathbf{A}^{\operatorname{T}}$ describes the transpose of that linear map with respect to the dual bases.
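In coordinates, the characterizing relation $\langle u^{\#}(f), x \rangle = \langle f, u(x) \rangle$ can be checked directly. The sketch below assumes finite-dimensional spaces with fixed bases, represents a functional by its list of coefficients in the dual basis, and uses illustrative helper names; the pairing then becomes a sum of products.

```python
def transpose(a):
    return [list(col) for col in zip(*a)]

def apply(a, v):
    """Multiply matrix a by the coordinate vector v."""
    return [sum(r * c for r, c in zip(row, v)) for row in a]

def pair(f, x):
    """Natural pairing <f, x> = f(x), with f given in dual-basis coordinates."""
    return sum(fi * xi for fi, xi in zip(f, x))


A = [[1, 2, 0],
     [3, -1, 4]]        # matrix of u, from a 3-dimensional to a 2-dimensional space
f = [5, -2]             # a functional on the codomain
x = [1, 0, 2]           # a vector in the domain

pullback = apply(transpose(A), f)    # coordinates of the pullback of f by u
print(pullback)                      # [-1, 12, -8]

# <u#(f), x> equals <f, u(x)>
assert pair(pullback, x) == pair(f, apply(A, x))
```

Both sides evaluate to -17 here, illustrating that applying the transposed matrix to the coefficients of `f` yields the coefficients of the functional `f` composed with `u`.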

Transpose of a bilinear form

Every linear map to the dual space $u : X \to X^{\#}$ defines a bilinear form $B : X \times X \to F$, with the relation $B(x, y) = u(x)(y)$. By defining the transpose of this bilinear form as the bilinear form ${}^{t}B$ defined by the transpose ${}^{t}u : X^{\#\#} \to X^{\#}$, i.e. ${}^{t}B(y, x) = {}^{t}u(\Psi(y))(x)$, we find that $B(x, y) = {}^{t}B(y, x)$. Here, $\Psi$ is the natural homomorphism $X \to X^{\#\#}$ into the double dual.

Adjoint

If the vector spaces $X$ and $Y$ have respectively nondegenerate bilinear forms $B_X$ and $B_Y$, a concept known as the adjoint, which is closely related to the transpose, may be defined:

If $u : X \to Y$ is a linear map between vector spaces $X$ and $Y$, we define $g$ as the adjoint of $u$ if $g : Y \to X$ satisfies

- $B_X(x, g(y)) = B_Y(u(x), y)$ for all $x \in X$ and $y \in Y$.

These bilinear forms define an isomorphism between $X$ and $X^{\#}$, and between $Y$ and $Y^{\#}$, resulting in an isomorphism between the transpose and adjoint of $u$. The matrix of the adjoint of a map is the transposed matrix only if the bases are orthonormal with respect to their bilinear forms. In this context, however, many authors use the term transpose to refer to the adjoint as defined here.

The adjoint allows us to consider whether $g : Y \to X$ is equal to $u^{-1} : Y \to X$. In particular, this allows the orthogonal group over a vector space $X$ with a quadratic form to be defined without reference to matrices (nor the components thereof) as the set of all linear maps $X \to X$ for which the adjoint equals the inverse.

Over a complex vector space, one often works with sesquilinear forms (conjugate-linear in one argument) instead of bilinear forms. The Hermitian adjoint of a map between such spaces is defined similarly, and the matrix of the Hermitian adjoint is given by the conjugate transpose matrix if the bases are orthonormal.
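The role of orthonormal bases can be seen from a short coordinate computation. As an illustrative assumption, fix bases of $X$ and $Y$, write $\mathbf{A}$ for the matrix of $u$, $\mathbf{M}$ for the matrix of the adjoint $g$, and $\mathbf{G}_X$, $\mathbf{G}_Y$ for the Gram matrices of the two forms, so that $B_X(x, x') = x^{\operatorname{T}}\mathbf{G}_X x'$ and $B_Y(y, y') = y^{\operatorname{T}}\mathbf{G}_Y y'$ (these symbols are introduced here and do not appear elsewhere in the article). The defining condition $B_X(x, g(y)) = B_Y(u(x), y)$ then reads

$$x^{\operatorname{T}}\mathbf{G}_X\mathbf{M}\,y = (\mathbf{A}x)^{\operatorname{T}}\mathbf{G}_Y\,y = x^{\operatorname{T}}\mathbf{A}^{\operatorname{T}}\mathbf{G}_Y\,y \quad \text{for all } x, y,$$

so that

$$\mathbf{G}_X\mathbf{M} = \mathbf{A}^{\operatorname{T}}\mathbf{G}_Y, \qquad \mathbf{M} = \mathbf{G}_X^{-1}\mathbf{A}^{\operatorname{T}}\mathbf{G}_Y,$$

where $\mathbf{G}_X$ is invertible because $B_X$ is nondegenerate. When both bases are orthonormal, $\mathbf{G}_X$ and $\mathbf{G}_Y$ are identity matrices and the expression reduces to $\mathbf{M} = \mathbf{A}^{\operatorname{T}}$.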

See also

- Adjugate matrix, the transpose of the cofactor matrix

- Conjugate transpose

- Moore–Penrose pseudoinverse

- Projection (linear algebra)

References

- Nykamp, Duane. "The transpose of a matrix". Math Insight. Retrieved September 8, 2020.

- Arthur Cayley (1858). "A memoir on the theory of matrices", Philosophical Transactions of the Royal Society of London, 148: 17–37. The transpose (or "transposition") is defined on page 31.

- T.A. Whitelaw (1 April 1991). Introduction to Linear Algebra, 2nd edition. CRC Press. ISBN 978-0-7514-0159-2.

- "Transpose of a Matrix Product (ProofWiki)". ProofWiki. Retrieved 4 Feb 2021.

- "What is the best symbol for vector/matrix transpose?". Stack Exchange. Retrieved 4 Feb 2021.

- Weisstein, Eric W. "Transpose". mathworld.wolfram.com. Retrieved 2020-09-08.

- Gilbert Strang (2006). Linear Algebra and its Applications, 4th edition, page 51. Thomson Brooks/Cole. ISBN 0-03-010567-6.

- Schaefer & Wolff 1999, p. 128.

- Halmos 1974, §44.

- Bourbaki 1989, II §2.5.

- Trèves 2006, p. 240.

Further reading

- Bourbaki, Nicolas (1989) [1970]. Algebra I Chapters 1-3 [Algèbre: Chapitres 1 à 3] (PDF). Éléments de mathématique. Berlin New York: Springer Science & Business Media. ISBN 978-3-540-64243-5. OCLC 18588156.

- Halmos, Paul (1974), Finite dimensional vector spaces, Springer, ISBN 978-0-387-90093-3.

- Maruskin, Jared M. (2012). Essential Linear Algebra. San José: Solar Crest. pp. 122–132. ISBN 978-0-9850627-3-6.

- Schaefer, Helmut H.; Wolff, Manfred P. (1999). Topological Vector Spaces. GTM. Vol. 8 (Second ed.). New York, NY: Springer New York Imprint Springer. ISBN 978-1-4612-7155-0. OCLC 840278135.

- Trèves, François (2006) [1967]. Topological Vector Spaces, Distributions and Kernels. Mineola, N.Y.: Dover Publications. ISBN 978-0-486-45352-1. OCLC 853623322.

- Schwartz, Jacob T. (2001). Introduction to Matrices and Vectors. Mineola: Dover. pp. 126–132. ISBN 0-486-42000-0.

External links

- Gilbert Strang (Spring 2010) Linear Algebra from MIT Open Courseware

![{\displaystyle \left[\mathbf {A} ^{\operatorname {T} }\right]_{ij}=\left[\mathbf {A} \right]_{ji}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/9b0864ad54decb7f1b251512de895b40545facf5)