High Bandwidth Memory


High Bandwidth Memory (HBM) is a computer memory interface for 3D-stacked synchronous dynamic random-access memory (SDRAM), initially from Samsung, AMD and SK Hynix. It is used with high-performance graphics accelerators, network devices and high-performance datacenter AI ASICs; as on-package cache in CPUs[1] and on-package RAM in upcoming CPUs; in FPGAs; and in some supercomputers (such as the NEC SX-Aurora TSUBASA and Fujitsu A64FX).[2] The first HBM memory chip was produced by SK Hynix in 2013,[3] and the first devices to use HBM were the AMD Fiji GPUs in 2015.[4][5]

HBM was adopted by JEDEC as an industry standard in October 2013.[6] The second generation, HBM2, was accepted by JEDEC in January 2016.[7] JEDEC officially announced the HBM3 standard on January 27, 2022.[8]

Technology

| Type | Release | Clock (GHz) | Channels per stack | Capacity per stack (GiB, 2^30 bytes) | Data rate per stack (GB/s) |
|---|---|---|---|---|---|
| HBM 1 | Oct 2013 | 0.5 | 8 × 128 bit | 1 × 4 = 4 | 128 |
| HBM 2 | Jan 2016 | 1.0…1.2 | 8 × 128 bit | 1 × 8 = 8 | 256…307 |
| HBM 2E | Aug 2019 | 1.8 | 8 × 128 bit | 2 × 8 = 16 | 461 |
| HBM 3 | Oct 2021 | 3.2 | 16 × 64 bit | 2 × 12 = 24 | 819 |
| HBM 4 | 2026 | 5.6 | 16 × 64 bit | 2 × 16 = 32 | 1434 |

Capacity and data rate are given per 1024-bit stack.
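Each entry in the data-rate column can be reproduced from the clock column, assuming a 1024-bit interface per stack and double-data-rate signalling (two transfers per clock cycle). The sketch below is a quick consistency check rather than anything from the standard; the function name `stack_bandwidth_gbs` is hypothetical, and GB/s means 10^9 bytes per second.

```python
# Consistency check for the table: per-stack data rate from the clock rate.
# Assumptions: 1024-bit interface per stack, double data rate (2 transfers/cycle).
STACK_WIDTH_BITS = 1024


def stack_bandwidth_gbs(clock_ghz: float, width_bits: int = STACK_WIDTH_BITS) -> float:
    """Peak per-stack bandwidth in GB/s (10**9 bytes per second)."""
    pin_rate_gts = 2 * clock_ghz          # DDR: one transfer on each clock edge
    return pin_rate_gts * width_bits / 8  # bits per second -> bytes per second


# Clock values taken from the table; HBM 2 is listed as a 1.0...1.2 GHz range.
CLOCKS_GHZ = {
    "HBM 1": 0.5,
    "HBM 2 (low)": 1.0,
    "HBM 2 (high)": 1.2,
    "HBM 2E": 1.8,
    "HBM 3": 3.2,
    "HBM 4": 5.6,
}

for name, clock in CLOCKS_GHZ.items():
    print(f"{name}: {stack_bandwidth_gbs(clock):.1f} GB/s")
```

The computed values (128.0, 256.0, 307.2, 460.8, 819.2 and 1433.6 GB/s) match the table once rounded to whole GB/s.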

HBM achieves higher bandwidth than DDR4 or GDDR5 while using less power, and in a substantially smaller form factor.[9] This is achieved by stacking up to eight DRAM dies and an optional base die, which can include buffer circuitry and test logic.[10] The stack is often connected to the memory controller on a GPU or CPU through a substrate, such as a silicon interposer.[11][12] Alternatively, the memory dies could be stacked directly on the CPU or GPU chip. Within the stack the dies are vertically interconnected by through-silicon vias (TSVs) and microbumps. The HBM technology is similar in principle to, but incompatible with, the Hybrid Memory Cube (HMC) interface developed by Micron Technology.[13]

The HBM memory bus is very wide in comparison to other DRAM memories such as DDR4 or GDDR5. An HBM stack of four DRAM dies (4‑Hi) has two 128‑bit channels per die, for a total of 8 channels and a width of 1024 bits. A graphics card or GPU with four 4‑Hi HBM stacks would therefore have a memory bus 4096 bits wide. In comparison, the bus width of GDDR memories is 32 bits, with 16 channels for a graphics card with a 512‑bit memory interface.[14] HBM supports up to 4 GB per package.
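The bus widths above are products of stack count, channels per stack and channel width. A minimal sketch, assuming the 4‑Hi layout described here (two 128‑bit channels per die); the helper name `bus_width_bits` is illustrative only, and the GDDR case is modelled as 16 channels of 32 bits.

```python
def bus_width_bits(stacks: int, channels_per_stack: int, channel_width_bits: int) -> int:
    """Total memory bus width in bits across all stacks (or chips)."""
    return stacks * channels_per_stack * channel_width_bits


# 4-Hi HBM stack: 4 dies x 2 channels per die = 8 channels of 128 bits each.
CHANNELS_4HI = 4 * 2
one_stack = bus_width_bits(1, CHANNELS_4HI, 128)
four_stacks = bus_width_bits(4, CHANNELS_4HI, 128)

# GDDR: 16 channels of 32 bits each form a 512-bit interface.
gddr = bus_width_bits(1, 16, 32)

print(one_stack, four_stacks, gddr)  # 1024 4096 512
```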

The larger number of connections to the memory, relative to DDR4 or GDDR5, required a new method of connecting the HBM memory to the GPU (or other processor).[15] AMD and Nvidia have both used purpose-built silicon chips, called interposers, to connect the memory and GPU. The interposer has the added advantage of requiring the memory and processor to be physically close, shortening memory paths. However, as semiconductor device fabrication is significantly more expensive than printed circuit board manufacture, this adds cost to the final product.

-

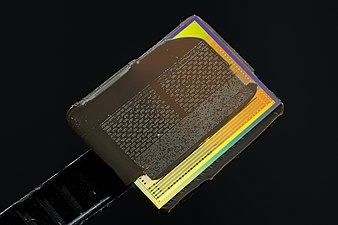

HBM DRAM die

-

HBM controller die

-

HBM memory on an AMDRadeon R9 Nanographics card's GPU package

Interface


The HBM DRAM is tightly coupled to the host compute die with a distributed interface. The interface is divided into channels that are completely independent of one another and are not necessarily synchronous to each other. The HBM DRAM uses a wide-interface architecture to achieve high-speed, low-power operation. It uses a 500 MHz differential clock CK_t / CK_c (where the suffix "_t" denotes the "true", or "positive", component of the differential pair, and "_c" stands for the "complementary" one). Commands are registered at the rising edge of CK_t, CK_c. Each channel interface maintains a 128‑bit data bus operating at double data rate (DDR). HBM supports transfer rates of 1 GT/s per pin (transferring 1 bit), yielding an overall package bandwidth of 128 GB/s.[16]
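The 128 GB/s figure follows from the clock rate, DDR signalling and the channel layout. The Python sketch below walks through that arithmetic, assuming eight 128‑bit channels per stack as in the 4‑Hi configuration described in the Technology section; all constant names are illustrative.

```python
# First-generation HBM interface arithmetic, per stack.
CLOCK_MHZ = 500           # differential clock CK_t / CK_c
TRANSFERS_PER_CYCLE = 2   # double data rate
CHANNELS = 8              # assumption: 8 independent channels per stack
CHANNEL_WIDTH_BITS = 128  # data bus width of each channel

# 500 MHz x 2 transfers per cycle = 1 GT/s on every data pin.
pin_rate_gts = CLOCK_MHZ * TRANSFERS_PER_CYCLE / 1000

# Each 128-bit channel moves 16 bytes per transfer: 1 GT/s x 16 B = 16 GB/s.
channel_gbs = pin_rate_gts * CHANNEL_WIDTH_BITS / 8

# Channels are independent, so the peak package bandwidth is their sum.
package_gbs = channel_gbs * CHANNELS

print(pin_rate_gts, channel_gbs, package_gbs)  # 1.0 16.0 128.0
```

Summing per-channel bandwidth reflects the point made above: because channels operate independently, each contributes its full share to the package total.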

HBM2

The second generation of High Bandwidth Memory, HBM2, also specifies up to eight dies per stack and doubles pin transfer rates, up to 2 GT/s. Retaining 1024‑bit wide access, HBM2 is able to reach 256 GB/s memory bandwidth per package. The HBM2 spec allows up to 8 GB per package. HBM2 was expected to be especially useful for performance-sensitive consumer applications such as virtual reality.[17]

On January 19, 2016, Samsung announced early mass production of HBM2, at up to 8 GB per stack.[18][19] SK Hynix also announced availability of 4 GB stacks in August 2016.[20]

Figures: HBM2 DRAM die; HBM2 controller die; the HBM2 interposer of a Radeon RX Vega 64 GPU, with the HBM dies removed and the GPU still in place.

HBM2E

In late 2018, JEDEC announced an update to the HBM2 specification, providing for increased bandwidth and capacities.[21] Up to 307 GB/s per stack (2.5 Tbit/s effective data rate) is now supported in the official specification, though products operating at this speed had already been available. Additionally, the update added support for 12‑Hi stacks (12 dies), making capacities of up to 24 GB per stack possible.
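The two figures in this paragraph can be cross-checked: converting about 307 GB/s to bits gives the quoted 2.5 Tbit/s, and a 24 GB 12‑Hi stack implies 2 GB (16 Gbit) per die. A short Python check; the exact 307.2 GB/s value assumes 2.4 GT/s on each of 1024 pins (the 1.2 GHz HBM 2 clock in the table), which the paragraph does not state.

```python
PINS = 1024
PIN_RATE_GTS = 2.4            # assumption: 2.4 GT/s per pin (1.2 GHz, DDR)

stack_gbs = PIN_RATE_GTS * PINS / 8        # GB/s per stack
stack_tbits = stack_gbs * 8 / 1000         # bytes -> bits, giga -> tera

print(round(stack_gbs, 1), round(stack_tbits, 2))  # 307.2 2.46

# 12-Hi stack with 24 GB total capacity.
DIES = 12
STACK_CAPACITY_GB = 24
per_die_gb = STACK_CAPACITY_GB / DIES      # 2.0 GB per die
per_die_gbit = per_die_gb * 8              # 16.0 Gbit per die
print(per_die_gb, per_die_gbit)            # 2.0 16.0
```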

On March 20, 2019, Samsung announced its Flashbolt HBM2E, featuring eight dies per stack and a transfer rate of 3.2 GT/s, providing a total of 16 GB and 410 GB/s per stack.[22]

On August 12, 2019, SK Hynix announced its HBM2E, featuring eight dies per stack and a transfer rate of 3.6 GT/s, providing a total of 16 GB and 460 GB/s per stack.[23][24] On July 2, 2020, SK Hynix announced that mass production had begun.[25]

HBM3

In August 2020, Micron revealed that the HBM2E standard would be updated, and alongside that it unveiled the next standard, known as HBMnext (later renamed HBM3). This was to be a big generational leap from HBM2 and the replacement for HBM2E. The new VRAM was expected to come to market in Q4 2022, likely introducing a new architecture, as the naming suggests.

While the architecture might be overhauled, leaks pointed toward performance similar to that of the updated HBM2E standard. This RAM was expected to be used mostly in data center GPUs.[26][27][28][29]

In mid-2021, SK Hynix unveiled some specifications of the HBM3 standard, with 5.2 Gbit/s I/O speeds and bandwidth of 665 GB/s per package, as well as up to 16-high 2.5D and 3D solutions.[30][31]

On 20 October 2021, before the JEDEC standard for HBM3 was finalised, SK Hynix became the first memory vendor to announce that it had finished development of HBM3 memory devices. According to SK Hynix, the memory would run as fast as 6.4 Gbps/pin, double the data rate of JEDEC-standard HBM2E, which formally tops out at 3.2 Gbps/pin, or 78% faster than SK Hynix’s own 3.6 Gbps/pin HBM2E. At 6.4 Gbit/s per pin, a single HBM3 stack may therefore provide a bandwidth of up to 819 GB/s. The basic bus width for HBM3 remains unchanged, with a single stack of memory being 1024 bits wide. SK Hynix would offer its memory in two capacities, 16 GB and 24 GB, corresponding to 8-Hi and 12-Hi stacks respectively. The stacks consist of 8 or 12 16 Gb DRAMs that are each 30 μm thick and interconnected using through-silicon vias (TSVs).[32][33][34]
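The ratios and capacities quoted for SK Hynix's HBM3 follow from the per-pin rates and die counts. A Python check under the paragraph's own figures (1024 data pins per stack, 16 Gb dies); it also reproduces the 665 GB/s figure from the mid-2021 announcement at 5.2 Gbit/s. The helper `stack_gbs` is an illustrative name.

```python
PINS = 1024          # HBM3 stack data width, unchanged from HBM2/HBM2E
DIE_GBIT = 16        # 16 Gb DRAM dies


def stack_gbs(pin_gbps: float) -> float:
    """Per-stack bandwidth in GB/s for a given per-pin rate in Gbit/s."""
    return pin_gbps * PINS / 8


hbm3 = 6.4
print(hbm3 / 3.2)                        # 2.0: double JEDEC HBM2E
print(round((hbm3 / 3.6 - 1) * 100))     # 78: % faster than 3.6 Gbps HBM2E
print(round(stack_gbs(hbm3), 1))         # 819.2 GB/s per stack
print(round(stack_gbs(5.2), 1))          # 665.6 GB/s (mid-2021 figure)

# Capacity: 8-Hi and 12-Hi stacks of 16 Gb dies.
capacities_gb = {dies: dies * DIE_GBIT / 8 for dies in (8, 12)}
print(capacities_gb)                     # {8: 16.0, 12: 24.0}
```

The mid-2021 value works out to 665.6 GB/s, which the announcement quotes as 665 GB/s.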

According to Ryan Smith of AnandTech, SK Hynix's first-generation HBM3 memory has the same density as its latest-generation HBM2E memory, meaning that device vendors looking to increase their total memory capacities for their next-generation parts would need to use memory with 12 dies/layers, up from the 8-layer stacks they typically used until then.[32] According to Anton Shilov of Tom's Hardware, high-performance compute GPUs or FPGAs typically use four or six HBM stacks, so with SK Hynix's 24 GB HBM3 stacks they would get 3.2 TB/s or 4.9 TB/s of memory bandwidth respectively. He also noted that SK Hynix's HBM3 chips are square, not rectangular like HBM2 and HBM2E chips.[33] According to Chris Mellor of The Register, the fact that JEDEC had not yet developed its HBM3 standard might mean that SK Hynix would need to retrofit its design to a future, faster one.[34]
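The aggregate figures scale the per-stack HBM3 bandwidth by the number of stacks. A Python sketch, assuming every stack is a 24 GB HBM3 stack running at 6.4 Gbps/pin over 1024 pins; the capacity totals are derived here and are not quoted in the source.

```python
STACK_GBS = 6.4 * 1024 / 8   # ~819.2 GB/s per HBM3 stack at 6.4 Gbps/pin
STACK_GB = 24                # 12-Hi stack capacity

for stacks in (4, 6):
    bandwidth_tbs = stacks * STACK_GBS / 1000
    capacity_gb = stacks * STACK_GB
    print(f"{stacks} stacks: {bandwidth_tbs:.2f} TB/s, {capacity_gb} GB")
```

This prints 3.28 TB/s (96 GB) for four stacks and 4.92 TB/s (144 GB) for six; the paragraph truncates the bandwidths to 3.2 and 4.9 TB/s.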

JEDEC officially announced the HBM3 standard on January 27, 2022.[8] The number of memory channels was doubled from 8 channels of 128 bits with HBM2E to 16 channels of 64 bits with HBM3. Therefore, the total number of data pins of the interface is still 1024.[35]
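The channel reorganisation keeps the data width constant: twice as many channels at half the width. A Python sketch, assuming 6.4 Gbps/pin for illustration, showing that per-stack bandwidth depends only on the total pin count and pin rate, not on how the pins are split into channels. The function `stack_gbs` is a hypothetical helper.

```python
def stack_gbs(channels: int, channel_bits: int, pin_gbps: float) -> float:
    """Per-stack bandwidth in GB/s: channels x width x per-pin rate / 8."""
    return channels * channel_bits * pin_gbps / 8


# HBM2E: 8 x 128-bit channels; HBM3: 16 x 64-bit channels.
assert 8 * 128 == 16 * 64 == 1024

# At the same per-pin rate, both layouts deliver the same stack bandwidth.
print(stack_gbs(8, 128, 6.4), stack_gbs(16, 64, 6.4))

# A single 64-bit HBM3 channel carries 1/16 of the stack total.
print(stack_gbs(1, 64, 6.4))
```

Splitting the bus into more, narrower channels changes how many independent accesses can be in flight, not the peak bandwidth.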

In June 2022, SK Hynix announced that it had started mass production of the industry's first HBM3 memory, to be used with Nvidia's H100 GPU, which was expected to ship in Q3 2022. The memory would provide the H100 with "up to 819 GB/s" of memory bandwidth.[36]

In August 2022, Nvidia announced that its "Hopper" H100 GPU will ship with five active HBM3 sites (out of six on board) offering 80 GB of RAM and 3 TB/s of memory bandwidth (16 GB and 600 GB/s per site).[37]
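The H100 totals are the per-site figures multiplied by the five active sites. The Python sketch also derives an implied per-pin rate, assuming each site is a full 1024-bit HBM3 stack; that derived number is an inference from the quoted figures, not a published specification.

```python
ACTIVE_SITES = 5
GB_PER_SITE = 16
GBS_PER_SITE = 600
PINS_PER_SITE = 1024   # assumption: full 1024-bit interface per site

total_gb = ACTIVE_SITES * GB_PER_SITE              # 80 GB
total_tbs = ACTIVE_SITES * GBS_PER_SITE / 1000     # 3.0 TB/s

# Implied per-pin rate: 600 GB/s x 8 bits / 1024 pins = 4.6875 Gbit/s,
# below the 6.4 Gbit/s peak quoted for SK Hynix's HBM3.
implied_pin_gbps = GBS_PER_SITE * 8 / PINS_PER_SITE

print(total_gb, total_tbs, implied_pin_gbps)  # 80 3.0 4.6875
```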

HBM3E

On 30 May 2023, SK Hynix unveiled its HBM3E memory with 8 Gbps/pin data processing speed (25% faster than HBM3), which was to enter production in the first half of 2024.[38] At 8 GT/s with a 1024-bit bus, its bandwidth per stack increases from 819.2 GB/s in HBM3 to about 1 TB/s.
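Both HBM3E figures follow from the per-pin rate: the speed-up is 8 / 6.4, and the stack bandwidth is 8 GT/s across 1024 pins. A quick Python check; the 1024 GB/s result is what the paragraph rounds to 1 TB/s.

```python
PINS = 1024
HBM3_PIN_GBPS = 6.4
HBM3E_PIN_GBPS = 8.0

speedup_pct = (HBM3E_PIN_GBPS / HBM3_PIN_GBPS - 1) * 100
hbm3_gbs = HBM3_PIN_GBPS * PINS / 8
hbm3e_gbs = HBM3E_PIN_GBPS * PINS / 8

print(round(speedup_pct), round(hbm3_gbs, 1), hbm3e_gbs)  # 25 819.2 1024.0
```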

On 26 July 2023, Micron announced its HBM3E memory with 9.6 Gbps/pin data processing speed (50% faster than HBM3).[39] Micron's HBM3E uses its 1β DRAM process technology and advanced packaging, for which Micron claims industry-leading performance, capacity and power efficiency. It can store 24 GB per 8-high cube and allows data transfer at 1.2 TB/s. A 12-high cube with 36 GB capacity was planned for 2024.
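Micron's figures are internally consistent: 9.6 / 6.4 gives the 50% speed-up, 9.6 Gbps across 1024 pins gives about 1.2 TB/s, and 24 GB over eight dies implies 3 GB per die, which yields 36 GB in a 12-high cube. A Python check of that arithmetic; the 1024-pin width is assumed from the HBM3 description, not stated in Micron's announcement.

```python
PINS = 1024        # assumption: 1024-bit stack, as for HBM3
pin_gbps = 9.6

speedup_pct = (pin_gbps / 6.4 - 1) * 100       # vs 6.4 Gbps HBM3
stack_tbs = pin_gbps * PINS / 8 / 1000         # TB/s per cube

per_die_gb = 24 / 8                            # 8-high cube holds 24 GB
cube_12_high_gb = 12 * per_die_gb

print(round(speedup_pct), round(stack_tbs, 2), per_die_gb, cube_12_high_gb)  # 50 1.23 3.0 36.0
```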

In August 2023, Nvidia announced a new version of their GH200 Grace Hopper superchip that utilizes 141 GB (144 GiB physical) of HBM3e over a 6144-bit bus providing 50% higher memory bandwidth and 75% higher memory capacity over the HBM3 version.[40]

In May 2023, Samsung announced HBM3P with speeds of up to 7.2 Gbps, slated for production in 2024.[41]

On October 20, 2023, Samsung announced its HBM3E "Shinebolt" memory with speeds of up to 9.8 Gbps.[42]

On February 26, 2024, Micron announced mass production of its HBM3E memory.[43]

On March 18, 2024, Nvidia announced the Blackwell series of GPUs using HBM3E memory.[44]

On March 19, 2024, SK Hynix announced mass production of its HBM3E memory.[45]

HBM-PIM

In February 2021, Samsung announced the development of HBM with processing-in-memory (PIM). This new memory brings AI computing capabilities inside the memory to speed up large-scale data processing. A DRAM-optimised AI engine is placed inside each memory bank to enable parallel processing and minimise data movement. Samsung claims this will deliver twice the system performance and reduce energy consumption by more than 70%, while not requiring any hardware or software changes to the rest of the system.[46]

History

Background

Die-stacked memory was initially commercialized in the flash memory industry. Toshiba introduced a NAND flash memory chip with eight stacked dies in April 2007,[47] followed by Hynix Semiconductor introducing a NAND flash chip with 24 stacked dies in September 2007.[48]

3D-stacked random-access memory (RAM) using through-silicon via (TSV) technology was commercialized by Elpida Memory, which developed the first 8 GB DRAM chip (stacked with four DDR3 SDRAM dies) in September 2009 and released it in June 2011. In 2011, SK Hynix introduced 16 GB DDR3 memory (40 nm class) using TSV technology,[3] Samsung Electronics introduced 3D-stacked 32 GB DDR3 (30 nm class) based on TSV in September, and then Samsung and Micron Technology announced TSV-based Hybrid Memory Cube (HMC) technology in October.[49]

JEDEC first released the JESD229 standard for Wide IO memory,[50] the predecessor of HBM featuring four 128-bit channels with single data rate clocking, in December 2011 after several years of work. The first HBM standard, JESD235, followed in October 2013.

Development


The development of High Bandwidth Memory began at AMD in 2008 to solve the problem of ever-increasing power usage and form factor of computer memory. Over the next several years, AMD developed procedures to solve die-stacking problems with a team led by Senior AMD Fellow Bryan Black.[51] To help AMD realize their vision of HBM, they enlisted partners from the memory industry, particularly Korean company SK Hynix,[51] which had prior experience with 3D-stacked memory,[3][48] as well as partners from the interposer industry (Taiwanese company UMC) and packaging industry (Amkor Technology and ASE).[51]

The development of HBM was completed in 2013, when SK Hynix built the first HBM memory chip.[3] HBM was adopted as industry standard JESD235 by JEDEC in October 2013, following a proposal by AMD and SK Hynix in 2010.[6] High volume manufacturing began at a Hynix facility in Icheon, South Korea, in 2015.

The first GPU utilizing HBM was the AMD Fiji, which was released in June 2015 powering the AMD Radeon R9 Fury X.[4][52][53]

In January 2016, Samsung Electronics began early mass production of HBM2.[18][19] The same month, HBM2 was accepted by JEDEC as standard JESD235a.[7] The first GPU chip utilizing HBM2 is the Nvidia Tesla P100, which was officially announced in April 2016.[54][55]

In June 2016, Intel released a family of Xeon Phi processors with 8 stacks of MCDRAM, Micron's version of HBM. At Hot Chips in August 2016, both Samsung and Hynix announced new-generation HBM memory technologies.[56][57] Both companies announced high-performance products expected to have increased density, increased bandwidth, and lower power consumption. Samsung also announced a lower-cost version of HBM under development targeting mass markets. Removing the buffer die and decreasing the number of TSVs lowers cost, though at the expense of a decreased overall bandwidth (200 GB/s).

On March 22, 2022, Nvidia announced the Hopper H100 GPU, the world's first GPU utilizing HBM3.[58]

See also

- Stacked DRAM

- eDRAM

- Chip stack multi-chip module

- Hybrid Memory Cube (HMC): stacked memory standard from Micron Technology (2011)

References

- Shilov, Anton (December 30, 2020). "Intel Confirms On-Package HBM Memory Support for Sapphire Rapids". Tom's Hardware. Retrieved January 1, 2021.

- ISSCC 2014 Trends. Archived 2015-02-06 at the Wayback Machine. Page 118, "High-Bandwidth DRAM".

- "History: 2010s". SK Hynix. Retrieved 7 March 2023.

- Smith, Ryan (2 July 2015). "The AMD Radeon R9 Fury X Review". AnandTech. Retrieved 1 August 2016.

- Morgan, Timothy Prickett (March 25, 2014). "Future Nvidia 'Pascal' GPUs Pack 3D Memory, Homegrown Interconnect". EnterpriseTech. Retrieved 26 August 2014. "Nvidia will be adopting the High Bandwidth Memory (HBM) variant of stacked DRAM that was developed by AMD and Hynix."

- High Bandwidth Memory (HBM) DRAM (JESD235). JEDEC, October 2013.

- "JESD235a: High Bandwidth Memory 2". 2016-01-12.

- "JEDEC Publishes HBM3 Update to High Bandwidth Memory (HBM) Standard". JEDEC (Press release). Arlington, VA. January 27, 2022. Retrieved December 11, 2022.

- HBM: Memory Solution for Bandwidth-Hungry Processors. Archived 2015-04-24 at the Wayback Machine. Joonyoung Kim and Younsu Kim, SK Hynix. Hot Chips 26, August 2014.

- Sohn et al. (Samsung) (January 2017). "A 1.2 V 20 nm 307 GB/s HBM DRAM With At-Speed Wafer-Level IO Test Scheme and Adaptive Refresh Considering Temperature Distribution". IEEE Journal of Solid-State Circuits. 52 (1): 250–260. Bibcode:2017IJSSC..52..250S. doi:10.1109/JSSC.2016.2602221. S2CID 207783774.

- "What's Next for High Bandwidth Memory". 17 December 2019.

- "Interposers".

- Where Are DRAM Interfaces Headed? Archived 2018-06-15 at the Wayback Machine. EETimes, 4/18/2014. "The Hybrid Memory Cube (HMC) and a competing technology called High-Bandwidth Memory (HBM) are aimed at computing and networking applications. These approaches stack multiple DRAM chips atop a logic chip."

- Highlights of the High Bandwidth Memory (HBM) Standard. Archived 2014-12-13 at the Wayback Machine. Mike O’Connor, Sr. Research Scientist, NVidia. The Memory Forum, June 14, 2014.

- Smith, Ryan (19 May 2015). "AMD Dives Deep On High Bandwidth Memory – What Will HBM Bring to AMD?". AnandTech. Retrieved 12 May 2017.

- "High-Bandwidth Memory (HBM)" (PDF). AMD. 2015-01-01. Retrieved 2016-08-10.

- Valich, Theo (2015-11-16). "NVIDIA Unveils Pascal GPU: 16GB of memory, 1TB/s Bandwidth". VR World. Archived from the original on 2019-07-14. Retrieved 2016-01-24.

- "Samsung Begins Mass Producing World's Fastest DRAM – Based on Newest High Bandwidth Memory (HBM) Interface". news.samsung.

- "Samsung announces mass production of next-generation HBM2 memory – ExtremeTech". 19 January 2016.

- Shilov, Anton (1 August 2016). "SK Hynix Adds HBM2 to Catalog". AnandTech. Retrieved 1 August 2016.

- "JEDEC Updates Groundbreaking High Bandwidth Memory (HBM) Standard" (Press release). JEDEC. 2018-12-17. Retrieved 2018-12-18.

- "Samsung Electronics Introduces New High Bandwidth Memory Technology Tailored to Data Centers, Graphic Applications, and AI | Samsung Semiconductor Global Website". samsung. Retrieved 2019-08-22.

- "SK Hynix Develops World's Fastest High Bandwidth Memory, HBM2E". skhynix. August 12, 2019. Archived from the original on 2019-12-03. Retrieved 2019-08-22.

- "SK Hynix Announces its HBM2E Memory Products, 460 GB/S and 16GB per Stack".

- "SK hynix Starts Mass-Production of High-Speed DRAM, "HBM2E"". 2 July 2020.

- "Micron reveals HBMnext, a successor to HBM2e". VideoCardz. August 14, 2020. Retrieved December 11, 2022.

- Hill, Brandon (August 14, 2020). "Micron Announces HBMnext as Eventual Replacement for HBM2e in High-End GPUs". HotHardware. Retrieved December 11, 2022.

- Hruska, Joel (August 14, 2020). "Micron Introduces HBMnext, GDDR6X, Confirms RTX 3090". ExtremeTech. Retrieved December 11, 2022.

- Garreffa, Anthony (August 14, 2020). "Micron unveils HBMnext, the successor to HBM2e for next-next-gen GPUs". TweakTown. Retrieved December 11, 2022.

- "SK Hynix expects HBM3 memory with 665 GB/s bandwidth".

- Shilov, Anton (June 9, 2021). "HBM3 to Top 665 GBPS Bandwidth per Chip, SK Hynix Says". Tom's Hardware. Retrieved December 11, 2022.

- Smith, Ryan (October 20, 2021). "SK Hynix Announces Its First HBM3 Memory: 24GB Stacks, Clocked at up to 6.4Gbps". AnandTech. Retrieved October 22, 2021.

- Shilov, Anton (October 20, 2021). "SK Hynix Develops HBM3 DRAMs: 24GB at 6.4 GT/s over a 1024-Bit Bus". Tom's Hardware. Retrieved October 22, 2021.

- Mellor, Chris (October 20, 2021). "SK hynix rolls out 819GB/s HBM3 DRAM". The Register. Retrieved October 24, 2021.

- Prickett Morgan, Timothy (April 6, 2022). "The HBM3 roadmap is just getting started". The Next Platform. Retrieved May 4, 2022.

- "SK hynix to Supply Industry's First HBM3 DRAM to NVIDIA". SK Hynix. June 8, 2022. Retrieved December 11, 2022.

- Robinson, Cliff (August 22, 2022). "NVIDIA H100 Hopper Details at HC34 as it Waits for Next-Gen CPUs". ServeTheHome. Retrieved December 11, 2022.

- "SK hynix Enters Industry's First Compatibility Validation Process for 1bnm DDR5 Server DRAM". 30 May 2023.

- "HBM3 Memory HBM3 Gen2". 26 July 2023.

- Bonshor, Gavin (8 August 2023). "NVIDIA Unveils Updated GH200 'Grace Hopper' Superchip with HBM3e Memory, Shipping in Q2'2024". AnandTech. Retrieved 9 August 2023.

- "Samsung To Launch HBM3P Memory, Codenamed "Snowbolt" With Up To 5 TB/s Bandwith Per Stack". Wccftech. 4 May 2023. Retrieved 21 August 2023.

- "Samsung Electronics Holds Memory Tech Day 2023 Unveiling New Innovations to Lead the Hyperscale AI Era".

- "Micron Commences Volume Production of Industry-Leading HBM3E Solution to Accelerate the Growth of AI". investors.micron. 2024-02-26. Retrieved 2024-06-07.

- Walton, Jarred (2024-03-18). "Nvidia's next-gen AI GPU is 4X faster than Hopper: Blackwell B200 GPU delivers up to 20 petaflops of compute and other massive improvements". Tom's Hardware. Retrieved 2024-03-19.

- https://news.skhynix /sk-hynix-begins-volume-production-of-industry-first-hbm3e/

- "Samsung Develops Industry's First High Bandwidth Memory with AI Processing Power".

- "TOSHIBA COMMERCIALIZES INDUSTRY'S HIGHEST CAPACITY EMBEDDED NAND FLASH MEMORY FOR MOBILE CONSUMER PRODUCTS". Toshiba. April 17, 2007. Archived from the original on November 23, 2010. Retrieved 23 November 2010.

- "Hynix Surprises NAND Chip Industry". Korea Times. 5 September 2007. Retrieved 8 July 2019.

- Kada, Morihiro (2015). "Research and Development History of Three-Dimensional Integration Technology". Three-Dimensional Integration of Semiconductors: Processing, Materials, and Applications. Springer. pp. 15–8. ISBN 9783319186757.

- "WIDE I/O SINGLE DATA RATE (WIDE I/O SDR) standard JESD229" (PDF).

- High-Bandwidth Memory (HBM) from AMD: Making Beautiful Memory. AMD.

- Smith, Ryan (19 May 2015). "AMD HBM Deep Dive". AnandTech. Retrieved 1 August 2016.

- AMD Ushers in a New Era of PC Gaming including World’s First Graphics Family with Revolutionary HBM Technology.

- Smith, Ryan (5 April 2016). "Nvidia announces Tesla P100 Accelerator". AnandTech. Retrieved 1 August 2016.

- "NVIDIA Tesla P100: The Most Advanced Data Center GPU Ever Built". nvidia.

- Smith, Ryan (23 August 2016). "Hot Chips 2016: Memory Vendors Discuss Ideas for Future Memory Tech – DDR5, Cheap HBM & More". AnandTech. Retrieved 23 August 2016.

- Walton, Mark (23 August 2016). "HBM3: Cheaper, up to 64GB on-package, and terabytes-per-second bandwidth". Ars Technica. Retrieved 23 August 2016.

- "NVIDIA Announces Hopper Architecture, the Next Generation of Accelerated Computing".

External links

- High Bandwidth Memory (HBM) DRAM (JESD235). JEDEC, October 2013.

- Lee, Dong Uk; Kim, Kyung Whan; Kim, Kwan Weon; Kim, Hongjung; Kim, Ju Young; et al. (9–13 Feb 2014). "25.2 A 1.2V 8 Gb 8-channel 128 GB/s high-bandwidth memory (HBM) stacked DRAM with effective microbump I/O test methods using 29nm process and TSV". 2014 IEEE International Solid-State Circuits Conference Digest of Technical Papers (ISSCC). IEEE (published 6 March 2014). pp. 432–433. doi:10.1109/ISSCC.2014.6757501. ISBN 978-1-4799-0920-9. S2CID 40185587.

- HBM vs HBM2 vs GDDR5 vs GDDR5X Memory Comparison