Half-precision floating-point format

In computing, half precision (sometimes called FP16 or float16) is a binary floating-point computer number format that occupies 16 bits (two bytes in modern computers) in computer memory. It is intended for storage of floating-point values in applications where higher precision is not essential, in particular image processing and neural networks.

Almost all modern uses follow the IEEE 754-2008 standard, where the 16-bit base-2 format is referred to as binary16, and the exponent uses 5 bits. This can express values in the range ±65,504, with the minimum value above 1 being 1 + 1/1024.

Depending on the computer, half-precision can be over an order of magnitude faster than double precision, e.g. 550 PFLOPS for half-precision vs 37 PFLOPS for double precision on one cloud provider.[1]


History

Several earlier 16-bit floating point formats have existed, including that of Hitachi's HD61810 DSP of 1982 (a 4-bit exponent and a 12-bit mantissa),[2] Thomas J. Scott's WIF of 1991 (5 exponent bits, 10 mantissa bits)[3] and the 3dfx Voodoo Graphics processor of 1995 (same as Hitachi).[4]

ILM was searching for an image format that could handle a wide dynamic range, but without the hard drive and memory cost of single or double precision floating point.[5] The hardware-accelerated programmable shading group led by John Airey at SGI (Silicon Graphics) used the s10e5 data type in 1997 as part of the 'bali' design effort. This is described in a SIGGRAPH 2000 paper[6] (see section 4.3) and further documented in US patent 7518615.[7] It was popularized by its use in the open-source OpenEXR image format.

Nvidia and Microsoft defined the half datatype in the Cg language, released in early 2002, and implemented it in silicon in the GeForce FX, released in late 2002.[8] However, hardware support for accelerated 16-bit floating point was later dropped by Nvidia before being reintroduced in the Tegra X1 mobile GPU in 2015.

The F16C extension in 2012 allows x86 processors to convert half-precision floats to and from single-precision floats with a machine instruction.

IEEE 754 half-precision binary floating-point format: binary16

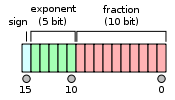

The IEEE 754 standard[9] specifies a binary16 as having the following format:

- Sign bit: 1 bit

- Exponent width: 5 bits

- Significand precision: 11 bits (10 explicitly stored)

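The format is laid out as follows, from the most significant bit to the least: the sign bit (bit 15), the 5-bit exponent field (bits 14 to 10), and the 10-bit stored significand field (bits 9 to 0).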

The format is assumed to have an implicit lead bit with value 1 unless the exponent field is stored with all zeros. Thus, only 10 bits of the significand appear in the memory format but the total precision is 11 bits. In IEEE 754 parlance, there are 10 bits of significand, but there are 11 bits of significand precision ($\log_{10}(2^{11}) \approx 3.311$ decimal digits, or 4 digits ± slightly less than 5 units in the last place).
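The field widths and the 11-bit effective precision can be checked with a short Python sketch. It assumes the standard-library `struct` module, whose `e` format code packs IEEE 754 binary16; the helper names `half_bits`, `half_fields` and `roundtrip` are illustrative and not part of any library.

```python
import struct

def half_bits(x):
    """Round x to the nearest binary16 value and return its 16-bit pattern."""
    return struct.unpack("<H", struct.pack("<e", x))[0]

def half_fields(bits):
    """Split a 16-bit pattern into (sign, stored exponent, stored fraction)."""
    sign = (bits >> 15) & 0x1        # 1 bit
    exponent = (bits >> 10) & 0x1F   # 5 bits, still biased
    fraction = bits & 0x3FF          # 10 explicitly stored significand bits
    return sign, exponent, fraction

def roundtrip(x):
    """Return x after rounding to binary16 and converting back to a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

print(half_fields(half_bits(1.0)))    # (0, 15, 0)
print(half_fields(half_bits(-2.0)))   # (1, 16, 0)

# 11 bits of precision: every integer up to 2**11 = 2048 is exact,
# but 2049 needs 12 significant bits and is rounded.
print(all(roundtrip(float(n)) == n for n in range(2049)))  # True
print(roundtrip(2049.0))                                   # 2048.0
```

The stored exponent is still biased at this point, so `1.0` shows a stored exponent of 15 rather than 0.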

Exponent encoding

The half-precision binary floating-point exponent is encoded using an offset-binary representation, with the zero offset being 15; this offset is also known as the exponent bias in the IEEE 754 standard.[9]

- $E_{\min} = 00001_2 - 01111_2 = -14$

- $E_{\max} = 11110_2 - 01111_2 = 15$

- Exponent bias $= 01111_2 = 15$

Thus, as defined by the offset binary representation, in order to get the true exponent the offset of 15 has to be subtracted from the stored exponent.

The stored exponents $00000_2$ and $11111_2$ are interpreted specially.

| Exponent | Significand = zero | Significand ≠ zero | Equation |
|---|---|---|---|
| $00000_2$ | zero, −0 | subnormal numbers | $(-1)^{\text{signbit}} \times 2^{-14} \times 0.\text{significantbits}_2$ |
| $00001_2, \ldots, 11110_2$ | normalized value | normalized value | $(-1)^{\text{signbit}} \times 2^{\text{exponent}-15} \times 1.\text{significantbits}_2$ |
| $11111_2$ | ±infinity | NaN (quiet, signalling) | |

The minimum strictly positive (subnormal) value is $2^{-24} \approx 5.96 \times 10^{-8}$. The minimum positive normal value is $2^{-14} \approx 6.10 \times 10^{-5}$. The maximum representable value is $(2 - 2^{-10}) \times 2^{15} = 65504$.
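The decoding rules above can be written out directly. The following Python sketch is illustrative only: `decode_half` is a hypothetical helper name, and `decode_with_struct` relies on the standard-library `struct` module's `e` (binary16) format code as an independent cross-check.

```python
import math
import struct

def decode_half(bits):
    """Decode a 16-bit binary16 pattern by applying the table's equations."""
    sign = -1.0 if (bits >> 15) & 1 else 1.0
    exponent = (bits >> 10) & 0x1F   # stored (biased) exponent
    fraction = bits & 0x3FF          # 10 stored significand bits
    if exponent == 0:
        # Zero and subnormals: no implicit leading 1, fixed exponent of -14.
        return sign * 2.0 ** -14 * (fraction / 1024)
    if exponent == 0x1F:
        # All-ones exponent: infinity if the significand is zero, else NaN.
        return sign * math.inf if fraction == 0 else math.nan
    # Normalized values: implicit leading 1, exponent bias of 15.
    return sign * 2.0 ** (exponent - 15) * (1 + fraction / 1024)

def decode_with_struct(bits):
    """Decode the same pattern using struct's binary16 support."""
    return struct.unpack("<e", struct.pack("<H", bits))[0]

print(decode_half(0x0001) == 2.0 ** -24)   # True: smallest positive subnormal
print(decode_half(0x0400) == 2.0 ** -14)   # True: smallest positive normal
print(decode_half(0x7BFF))                 # 65504.0
print(decode_half(0x7BFF) == decode_with_struct(0x7BFF))  # True
```

Because every binary16 value is exactly representable as a double, the arithmetic in `decode_half` introduces no rounding of its own.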

Half precision examples

These examples are given in bit representation of the floating-point value. This includes the sign bit, (biased) exponent, and significand.

| Binary | Hex | Value | Notes |
|---|---|---|---|
| 0 00000 0000000000 | 0000 | 0 | |
| 0 00000 0000000001 | 0001 | $2^{-14} \times (0 + 1/1024) \approx 0.000000059604645$ | smallest positive subnormal number |
| 0 00000 1111111111 | 03ff | $2^{-14} \times (0 + 1023/1024) \approx 0.000060975552$ | largest subnormal number |
| 0 00001 0000000000 | 0400 | $2^{-14} \times (1 + 0/1024) \approx 0.00006103515625$ | smallest positive normal number |
| 0 01101 0101010101 | 3555 | $2^{-2} \times (1 + 341/1024) \approx 0.33325195$ | nearest value to 1/3 |
| 0 01110 1111111111 | 3bff | $2^{-1} \times (1 + 1023/1024) \approx 0.99951172$ | largest number less than one |
| 0 01111 0000000000 | 3c00 | $2^{0} \times (1 + 0/1024) = 1$ | one |
| 0 01111 0000000001 | 3c01 | $2^{0} \times (1 + 1/1024) \approx 1.00097656$ | smallest number larger than one |
| 0 11110 1111111111 | 7bff | $2^{15} \times (1 + 1023/1024) = 65504$ | largest normal number |
| 0 11111 0000000000 | 7c00 | ∞ | infinity |
| 1 00000 0000000000 | 8000 | −0 | |
| 1 10000 0000000000 | c000 | −2 | |
| 1 11111 0000000000 | fc00 | −∞ | negative infinity |

By default, 1/3 rounds down, as it does for double precision, because of the odd number of bits in the significand. The bits beyond the rounding point are 0101... which is less than 1/2 of a unit in the last place.
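The rounding of 1/3 can be reproduced with a small Python sketch. It assumes the standard-library `struct` module's `e` format rounds to nearest, and uses `fractions.Fraction` for exact comparisons; the helper names are illustrative.

```python
import struct
from fractions import Fraction

def to_half(x):
    """Round x to binary16 and return the result as a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def half_bits(x):
    """Return the 16-bit pattern of x rounded to binary16."""
    return struct.unpack("<H", struct.pack("<e", x))[0]

third = to_half(1 / 3)
print(hex(half_bits(1 / 3)))              # 0x3555
print(third)                              # 0.333251953125
print(Fraction(third) < Fraction(1, 3))   # True: the result was rounded down

# The next representable value up (pattern 0x3556) is farther from 1/3.
next_up = struct.unpack("<e", struct.pack("<H", 0x3556))[0]
err_down = Fraction(1, 3) - Fraction(third)
err_up = Fraction(next_up) - Fraction(1, 3)
print(err_down < err_up)                  # True
```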

Precision limitations

| Min | Max | Interval |
|---|---|---|
| 0 | $2^{-13}$ | $2^{-24}$ |
| $2^{-13}$ | $2^{-12}$ | $2^{-23}$ |
| $2^{-12}$ | $2^{-11}$ | $2^{-22}$ |
| $2^{-11}$ | $2^{-10}$ | $2^{-21}$ |
| $2^{-10}$ | $2^{-9}$ | $2^{-20}$ |
| $2^{-9}$ | $2^{-8}$ | $2^{-19}$ |
| $2^{-8}$ | $2^{-7}$ | $2^{-18}$ |
| $2^{-7}$ | $2^{-6}$ | $2^{-17}$ |
| $2^{-6}$ | $2^{-5}$ | $2^{-16}$ |
| $2^{-5}$ | $2^{-4}$ | $2^{-15}$ |
| $2^{-4}$ | 1/8 | $2^{-14}$ |
| 1/8 | 1/4 | $2^{-13}$ |
| 1/4 | 1/2 | $2^{-12}$ |
| 1/2 | 1 | $2^{-11}$ |
| 1 | 2 | $2^{-10}$ |
| 2 | 4 | $2^{-9}$ |
| 4 | 8 | $2^{-8}$ |
| 8 | 16 | $2^{-7}$ |
| 16 | 32 | $2^{-6}$ |
| 32 | 64 | $2^{-5}$ |
| 64 | 128 | $2^{-4}$ |
| 128 | 256 | 1/8 |
| 256 | 512 | 1/4 |
| 512 | 1024 | 1/2 |
| 1024 | 2048 | 1 |
| 2048 | 4096 | 2 |
| 4096 | 8192 | 4 |
| 8192 | 16384 | 8 |
| 16384 | 32768 | 16 |
| 32768 | 65520 | 32 |
| 65520 | ∞ | ∞ |

65520 and larger numbers round to infinity. This cut-off applies to round-to-nearest-even; other rounding strategies will change it.
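The spacing table and the overflow cut-off can be explored with the following Python sketch. It assumes the standard-library `struct` module's `e` format rounds to nearest-even and signals overflow with `OverflowError` (the behaviour expected of CPython); the sketch maps that error to infinity so that it mirrors the IEEE result. `to_half` and `half_spacing` are hypothetical helper names.

```python
import math
import struct

def to_half(x):
    """Round x to binary16; treat struct's overflow error as infinity."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)

def half_spacing(x):
    """Gap between adjacent binary16 values near a finite x (|x| <= 65504)."""
    x = abs(x)
    if x < 2.0 ** -14:                 # zero and subnormals share the smallest gap
        return 2.0 ** -24
    exponent = math.frexp(x)[1] - 1    # floor(log2(x))
    return 2.0 ** (exponent - 10)      # 10 stored significand bits

print(half_spacing(1.0))      # 0.0009765625 (2**-10)
print(half_spacing(1024.0))   # 1.0
print(half_spacing(40000.0))  # 32.0

# Ties go to the value whose last significand bit is even.
print(to_half(2049.0))    # 2048.0
print(to_half(2051.0))    # 2052.0

# 65520 is halfway between 65504 and the next (unrepresentable) step, 65536.
print(to_half(65519.0))   # 65504.0
print(to_half(65520.0))   # inf
```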

ARM alternative half-precision

ARM processors support (via a floating point control register bit) an "alternative half-precision" format, which does away with the special case for an exponent value of 31 ($11111_2$).[10] It is almost identical to the IEEE format, but there is no encoding for infinity or NaNs; instead, an exponent of 31 encodes normalized numbers in the range 65536 to 131008.
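The difference between the two interpretations can be illustrated with a Python sketch. This is not ARM's implementation: `decode_half_bits` and its `alternative` flag are hypothetical names, and the sketch assumes the alternative format treats every other encoding, including subnormals, exactly as IEEE binary16 does.

```python
def decode_half_bits(bits, alternative=False):
    """Decode a 16-bit pattern as IEEE binary16 or as the alternative format."""
    sign = -1.0 if (bits >> 15) & 1 else 1.0
    exponent = (bits >> 10) & 0x1F
    fraction = bits & 0x3FF
    if exponent == 0:
        # Zero and subnormals (assumed identical in both formats).
        return sign * 2.0 ** -14 * (fraction / 1024)
    if exponent == 0x1F and not alternative:
        # IEEE only: exponent 31 is reserved for infinity and NaN.
        return sign * float("inf") if fraction == 0 else float("nan")
    # Normalized values; in the alternative format this includes exponent 31.
    return sign * 2.0 ** (exponent - 15) * (1 + fraction / 1024)

print(decode_half_bits(0x7C00))                     # inf
print(decode_half_bits(0x7C00, alternative=True))   # 65536.0
print(decode_half_bits(0x7FFF, alternative=True))   # 131008.0
```

The last two lines reproduce the endpoints of the 65536 to 131008 range quoted above.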

Uses of half precision

Half precision is used in several computer graphics environments to store pixels, including MATLAB, OpenEXR, JPEG XR, GIMP, OpenGL, Vulkan,[11] Cg, Direct3D, and D3DX. The advantage over 8-bit or 16-bit integers is that the increased dynamic range allows for more detail to be preserved in highlights and shadows for images, and avoids gamma correction. The advantage over 32-bit single-precision floating point is that it requires half the storage and bandwidth (at the expense of precision and range).[5]

Half precision can be useful for mesh quantization. Mesh data is usually stored using 32-bit single precision floats for the vertices; however, in some situations it is acceptable to reduce the precision to only 16-bit half precision, requiring only half the storage at the expense of some precision. Mesh quantization can also be done with 8-bit or 16-bit fixed precision depending on the requirements.[12]

Hardware and software for machine learning or neural networks tend to use half precision: such applications usually do a large amount of calculation, but don't require a high level of precision. Due to hardware typically not supporting 16-bit half precision floats, neural networks often use the bfloat16 format, which is the single precision float format truncated to 16 bits.
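The relationship between bfloat16 and single precision can be sketched in Python by keeping only the upper 16 bits of the float32 encoding. This is a minimal illustration using the standard-library `struct` module; the function names are hypothetical, and practical implementations often round to nearest rather than truncate as done here.

```python
import struct

def float32_to_bfloat16_bits(x):
    """Keep the top 16 bits of the float32 encoding of x (truncation, no rounding)."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    return bits32 >> 16

def bfloat16_bits_to_float(bits16):
    """Expand a bfloat16 pattern back to a float by zero-filling the low 16 bits."""
    (value,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return value

b = float32_to_bfloat16_bits(3.14159)
print(hex(b))                       # 0x4049
print(bfloat16_bits_to_float(b))    # 3.140625

# bfloat16 keeps float32's 8-bit exponent, so values far beyond the
# binary16 maximum of 65504 remain finite.
big = bfloat16_bits_to_float(float32_to_bfloat16_bits(1e38))
print(big > 65504)                  # True
```

The example shows the trade-off: only 7 stored significand bits survive, so 3.14159 becomes 3.140625, but the exponent range of single precision is preserved.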

If the hardware has instructions to compute half-precision math, it is often faster than single or double precision. If the system has SIMD instructions that can handle multiple floating-point numbers within one instruction, half precision can be twice as fast by operating on twice as many numbers simultaneously.[13]

Support by programming languages

Zig provides support for half precision with its f16 type.[14]

.NET 5 introduced half precision floating point numbers with the System.Half standard library type.[15][16] As of January 2024, no .NET language (C#, F#, Visual Basic, and C++/CLI and C++/CX) has literals (e.g. in C#, 1.0f has type System.Single and 1.0m has type System.Decimal) or a keyword for the type.[17][18][19]

Swift introduced half precision floating point numbers in Swift 5.3 with the Float16 type.[20]

OpenCL also supports half precision floating point numbers with the half datatype, which uses the IEEE 754-2008 half-precision storage format.[21]

Hardware support

Several versions of the ARM architecture have support for half precision.[22]

Support for half precision in the x86 instruction set is specified in the F16C instruction set extension, first introduced in 2009 by AMD and fairly broadly adopted by AMD and Intel CPUs by 2012. This was further extended by the AVX-512_FP16 instruction set extension implemented in the Intel Sapphire Rapids processor.[23]

On RISC-V, the Zfh and Zfhmin extensions provide hardware support for 16-bit half precision floats. The Zfhmin extension is a minimal alternative to Zfh.[24]

On Power ISA, VSX and the not-yet-approved SVP64 extension provide hardware support for 16-bit half precision floats as of PowerISA v3.1B and later.[25][26]

See also

- bfloat16 floating-point format: Alternative 16-bit floating-point format with 8 bits of exponent and 7 bits of mantissa

- Minifloat: small floating-point formats

- IEEE 754: IEEE standard for floating-point arithmetic (IEEE 754)

- ISO/IEC 10967, Language Independent Arithmetic

- Primitive data type

- RGBE image format

- Power Management Bus § Linear11 Floating Point Format

References

1. "About ABCI - About ABCI | ABCI". abci.ai. Retrieved 2019-10-06.

2. "hitachi :: dataBooks :: HD61810 Digital Signal Processor Users Manual". Archive.org. Retrieved 2017-07-14.

3. Scott, Thomas J. (March 1991). "Mathematics and computer science at odds over real numbers". Proceedings of the twenty-second SIGCSE technical symposium on Computer science education - SIGCSE '91. Vol. 23. pp. 130–139. doi:10.1145/107004.107029. ISBN 0897913779. S2CID 16648394.

4. "/home/usr/bk/glide/docs2.3.1/GLIDEPGM.DOC". Gamers.org. Retrieved 2017-07-14.

5. "OpenEXR". OpenEXR. Retrieved 2017-07-14.

6. Mark S. Peercy; Marc Olano; John Airey; P. Jeffrey Ungar. "Interactive Multi-Pass Programmable Shading" (PDF). People.csail.mit.edu. Retrieved 2017-07-14.

7. "Patent US7518615 - Display system having floating point rasterization and floating point... - Google Patents". Google. Retrieved 2017-07-14.

8. "vs_2_sw". Cg 3.1 Toolkit Documentation. Nvidia. Retrieved 17 August 2016.

9. IEEE Standard for Floating-Point Arithmetic. IEEE STD 754-2019 (Revision of IEEE 754-2008). July 2019. pp. 1–84. doi:10.1109/ieeestd.2019.8766229. ISBN 978-1-5044-5924-2.

10. "Half-precision floating-point number support". RealView Compilation Tools Compiler User Guide. 10 December 2010. Retrieved 2015-05-05.

11. Garrard, Andrew. "10.1. 16-bit floating-point numbers". Khronos Data Format Specification v1.2 rev 1. Khronos. Retrieved 2023-08-05.

12. "KHR_mesh_quantization". GitHub. Khronos Group. Retrieved 2023-07-02.

13. Ho, Nhut-Minh; Wong, Weng-Fai (September 1, 2017). "Exploiting half precision arithmetic in Nvidia GPUs" (PDF). Department of Computer Science, National University of Singapore. Retrieved July 13, 2020. Quote: "Nvidia recently introduced native half precision floating point support (FP16) into their Pascal GPUs. This was mainly motivated by the possibility that this will speed up data intensive and error tolerant applications in GPUs."

14. "Floats". ziglang.org. Retrieved 7 January 2024.

15. "Half Struct (System)". learn.microsoft. Retrieved 2024-02-01.

16. Govindarajan, Prashanth (2020-08-31). "Introducing the Half type!". .NET Blog. Retrieved 2024-02-01.

17. "Floating-point numeric types ― C# reference". learn.microsoft. 2022-09-29. Retrieved 2024-02-01.

18. "Literals ― F# language reference". learn.microsoft. 2022-06-15. Retrieved 2024-02-01.

19. "Data Type Summary — Visual Basic language reference". learn.microsoft. 2021-09-15. Retrieved 2024-02-01.

20. "swift-evolution/proposals/0277-float16.md at main · apple/swift-evolution". github. Retrieved 13 May 2024.

21. "cl_khr_fp16 extension". registry.khronos.org. Retrieved 31 May 2024.

22. "Half-precision floating-point number format". ARM Compiler armclang Reference Guide Version 6.7. ARM Developer. Retrieved 13 May 2022.

23. Towner, Daniel. "Intel® Advanced Vector Extensions 512 - FP16 Instruction Set for Intel® Xeon® Processor Based Products" (PDF). Intel® Builders Programs. Retrieved 13 May 2022.

24. "RISC-V Instruction Set Manual, Volume I: RISC-V User-Level ISA". Five EmbedDev. Retrieved 2023-07-02.

25. "OPF_PowerISA_v3.1B.pdf". OpenPOWER Files. OpenPOWER Foundation. Retrieved 2023-07-02.

26. "ls005.xlen.mdwn". libre-soc.org Git. Retrieved 2023-07-02.

External links

- Minifloats (in Survey of Floating-Point Formats)

- OpenEXR site

- Half precision constants from D3DX

- OpenGL treatment of half precision

- Fast Half Float Conversions

- Analog Devices variant (four-bit exponent)

- C source code to convert between IEEE double, single, and half precision

- Java source code for half-precision floating-point conversion

- Half precision floating point for one of the extended GCC features