Display resolution

The display resolution or display modes of a digital television, computer monitor, or other display device is the number of distinct pixels in each dimension that can be displayed. It can be an ambiguous term, especially as the displayed resolution is controlled by different factors in cathode ray tube (CRT) displays, flat-panel displays (including liquid-crystal displays) and projection displays using fixed picture-element (pixel) arrays.

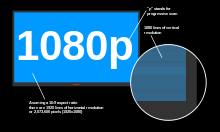

It is usually quoted as width × height, with the units in pixels: for example, 1024 × 768 means the width is 1024 pixels and the height is 768 pixels. This example would normally be spoken as "ten twenty-four by seven sixty-eight" or "ten twenty-four by seven six eight".

One use of the term display resolution applies to fixed-pixel-array displays such as plasma display panels (PDP), liquid-crystal displays (LCD), Digital Light Processing (DLP) projectors, OLED displays, and similar technologies, and is simply the physical number of columns and rows of pixels creating the display (e.g. 1920 × 1080). A consequence of having a fixed-grid display is that, for multi-format video inputs, all displays need a "scaling engine" (a digital video processor that includes a memory array) to match the incoming picture format to the display.

For device displays such as phones, tablets, monitors and televisions, the use of the term display resolution as defined above is a misnomer, though common. The term display resolution is usually used to mean pixel dimensions, the maximum number of pixels in each dimension (e.g. 1920 × 1080), which says nothing about the pixel density of the display on which the image is actually formed: resolution properly refers to the pixel density, the number of pixels per unit distance or area, not the total number of pixels. In digital measurement, the display resolution would be given in pixels per inch (PPI). In analog measurement, if the screen is 10 inches high, then the horizontal resolution is measured across a square 10 inches wide.[1] For television standards, this is typically stated as "lines horizontal resolution, per picture height";[2] for example, analog NTSC TVs can typically display about 340 lines of "per picture height" horizontal resolution from over-the-air sources, which is equivalent to about 440 total lines of actual picture information from left edge to right edge.[2]
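The difference between pixel dimensions and pixel density can be illustrated with a short calculation. The sketch below assumes square pixels and measures density along the screen diagonal; the function name `pixels_per_inch` and the example screen sizes are illustrative choices, not part of any standard.

```python
import math

def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density along the diagonal, assuming square pixels."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in

# Same pixel dimensions, different physical sizes, different densities.
for diagonal in (5.5, 24.0, 55.0):
    ppi = pixels_per_inch(1920, 1080, diagonal)
    print(f"1920 x 1080 at {diagonal} in: {ppi:.1f} PPI")
```

The same 1920 × 1080 pixel array yields a very different density on a phone-sized, monitor-sized and television-sized panel, which is why the pixel dimensions alone do not describe sharpness.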

Background


Some commentators also use display resolution to indicate a range of input formats that the display's input electronics will accept and often include formats greater than the screen's native grid size even though they have to be down-scaled to match the screen's parameters (e.g. accepting a 1920 × 1080 input on a display with a native 1366 × 768 pixel array). In the case of television inputs, many manufacturers will take the input and zoom it out to "overscan" the display by as much as 5%, so input resolution is not necessarily display resolution.

The eye's perception of display resolution can be affected by a number of factors – see image resolution and optical resolution. One factor is the display screen's rectangular shape, which is expressed as the ratio of the physical picture width to the physical picture height. This is known as the aspect ratio. A screen's physical aspect ratio and the individual pixels' aspect ratio may not necessarily be the same. An array of 1280 × 720 on a 16:9 display has square pixels, but an array of 1024 × 768 on a 16:9 display has oblong pixels.
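The two examples above can be checked by dividing the screen's aspect ratio by the ratio of the pixel array. This is a minimal sketch that assumes the array fills the whole screen; `pixel_aspect_ratio` is a name chosen here for illustration.

```python
from fractions import Fraction

def pixel_aspect_ratio(width_px, height_px, display_aspect):
    """Width-to-height ratio of one pixel when the array fills the whole screen."""
    storage_aspect = Fraction(width_px, height_px)
    return display_aspect / storage_aspect

widescreen = Fraction(16, 9)
print(pixel_aspect_ratio(1280, 720, widescreen))   # 1 (square pixels)
print(pixel_aspect_ratio(1024, 768, widescreen))   # 4/3 (pixels wider than tall)
```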

An example of pixel shape affecting "resolution" or perceived sharpness: displaying more information in a smaller area using a higher resolution makes the image much clearer or "sharper". However, most recent screen technologies are fixed at a certain resolution; making the resolution lower on these kinds of screens will greatly decrease sharpness, as an interpolation process is used to "fix" the non-native resolution input into the display's native resolution output.
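The loss of sharpness from interpolation can be seen on a single row of pixels. The sketch below uses plain linear interpolation as a stand-in; real scaling engines use their own, often more elaborate, filters, and the function name `resample_row` is hypothetical.

```python
def resample_row(row, new_width):
    """Resample one row of grey levels to new_width using linear interpolation."""
    old_width = len(row)
    out = []
    for i in range(new_width):
        # Map the centre of output pixel i back into source coordinates.
        pos = (i + 0.5) * old_width / new_width - 0.5
        pos = min(max(pos, 0.0), old_width - 1.0)
        left = int(pos)
        right = min(left + 1, old_width - 1)
        weight = pos - left
        out.append(round(row[left] * (1 - weight) + row[right] * weight))
    return out

native = [0, 255] * 4            # one-pixel black/white stripes, 8 pixels wide
print(resample_row(native, 8))   # same width: stripes are preserved
print(resample_row(native, 12))  # non-native width: intermediate greys appear
```

At the native width every output pixel lands exactly on a source pixel, so the stripes survive. At any other width some output pixels fall between two source pixels and take a blended grey value, which the eye reads as blur.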

While some CRT-based displays may use digital video processing that involves image scaling using memory arrays, ultimately "display resolution" in CRT-type displays is affected by different parameters such as spot size and focus, astigmatic effects in the display corners, the color phosphor pitch shadow mask (such as Trinitron) in color displays, and the video bandwidth.

Aspects


Overscan and underscan

Most television display manufacturers "overscan" the pictures on their displays (CRTs and PDPs, LCDs etc.), so that the effective on-screen picture may be reduced from 720 × 576 (480) to 680 × 550 (450), for example. The size of the invisible area somewhat depends on the display device. Some HD televisions do this as well, to a similar extent.

Computer displays including projectors generally do not overscan although many models (particularly CRT displays) allow it. CRT displays tend to be underscanned in stock configurations, to compensate for the increasing distortions at the corners.
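To put the overscan example above into proportion, the following sketch computes how much of a 720 × 576 picture is hidden when only 680 × 550 remains visible. The function name `overscan_loss` is illustrative only.

```python
def overscan_loss(signal, visible):
    """Return the fraction of width, height and total pixels hidden by overscan."""
    (sw, sh), (vw, vh) = signal, visible
    hidden_w = 1 - vw / sw
    hidden_h = 1 - vh / sh
    hidden_area = 1 - (vw * vh) / (sw * sh)
    return hidden_w, hidden_h, hidden_area

w, h, area = overscan_loss((720, 576), (680, 550))
print(f"hidden width {w:.1%}, hidden height {h:.1%}, hidden pixels {area:.1%}")
```

Losing roughly 5% in each dimension adds up to nearly a tenth of the transmitted pixels, which is why the input resolution and the displayed resolution should not be assumed to match.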

Interlaced versus progressive scan

Interlaced video (also known as interlaced scan) is a technique for doubling the perceived frame rate of a video display without consuming extra bandwidth. The interlaced signal contains two fields of a video frame captured consecutively. This enhances motion perception for the viewer, and reduces flicker by taking advantage of the phi phenomenon.

The European Broadcasting Union has argued against interlaced video in production and broadcasting. The main argument is that no matter how complex the deinterlacing algorithm may be, the artifacts in the interlaced signal cannot be completely eliminated because some information is lost between frames. Despite arguments against it, television standards organizations continue to support interlacing. It is still included in digital video transmission formats such as DV, DVB, and ATSC. New video compression standards like High Efficiency Video Coding are optimized for progressive scan video, but sometimes do support interlaced video.

Progressive scanning (alternatively referred to as noninterlaced scanning) is a format of displaying, storing, or transmitting moving images in which all the lines of each frame are drawn in sequence. This is in contrast to interlaced video used in traditional analog television systems where only the odd lines, then the even lines of each frame (each image called a video field) are drawn alternately, so that only half the number of actual image frames are used to produce video.
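The relationship between a frame and its two fields can be sketched with list slicing. This is a simplified model in which a frame is just a list of scan lines; the zero-based line numbering and the choice of which field comes first are assumptions made for the example.

```python
def split_fields(frame):
    """Split a progressive frame (list of scan lines) into two interlaced fields."""
    top_field = frame[0::2]      # lines 0, 2, 4, ...
    bottom_field = frame[1::2]   # lines 1, 3, 5, ...
    return top_field, bottom_field

def weave(top_field, bottom_field):
    """Re-interleave two fields into one frame (the simplest deinterlacing method)."""
    frame = []
    for top_line, bottom_line in zip(top_field, bottom_field):
        frame.extend([top_line, bottom_line])
    return frame

frame = [f"line {n}" for n in range(480)]
top, bottom = split_fields(frame)
print(len(top), len(bottom))          # 240 240
print(weave(top, bottom) == frame)    # True
```

Weaving restores the frame exactly here only because both fields were taken from the same still frame. In real interlaced video the two fields are captured at different instants, so moving objects do not line up between them, which is the source of the deinterlacing artifacts described above.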

Televisions

Current standards

Televisions are of the following resolutions:

- Standard-definition television (SDTV):

  - 480i (NTSC-compatible digital standard employing two interlaced fields of 240 lines each)

  - 576i (PAL-compatible digital standard employing two interlaced fields of 288 lines each)

- Enhanced-definition television (EDTV):

  - 480p (720 × 480 progressive scan)

  - 576p (720 × 576 progressive scan)

- High-definition television (HDTV):

- Ultra-high-definition television (UHDTV):

Film industry

As far as digital cinematography is concerned, video resolution standards depend first on the frames' aspect ratio in the film stock (which is usually scanned for digital intermediate post-production) and then on the actual points' count. Although there is not a unique set of standardized sizes, it is commonplace within the motion picture industry to refer to "nK" image "quality", where n is a (small, usually even) integer number which translates into a set of actual resolutions, depending on the film format. As a reference consider that, for a 4:3 (around 1.33:1) aspect ratio which a film frame (whatever its format) is expected to horizontally fit in, n is the multiplier of 1024 such that the horizontal resolution is exactly 1024·n points.[citation needed] For example, 2K reference resolution is 2048 × 1536 pixels, whereas 4K reference resolution is 4096 × 3072 pixels. Nevertheless, 2K may also refer to resolutions like 2048 × 1556 (full-aperture), 2048 × 1152 (HDTV, 16:9 aspect ratio) or 2048 × 872 pixels (Cinemascope, 2.35:1 aspect ratio). It is also worth noting that while a frame resolution may be, for example, 3:2 (720 × 480 NTSC), that is not what you will see on-screen (i.e. 4:3 or 16:9 depending on the intended aspect ratio of the original material).
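The "nK" convention described above can be written as a small helper: the width is fixed at 1024·n and the height follows from the aspect ratio. This is a sketch under the assumption that the ratio is exact; nominal ratios such as 2.35:1 or full-aperture are rounded in practice, so they are left out. The function name `reference_resolution` is illustrative.

```python
from fractions import Fraction

def reference_resolution(n, aspect):
    """Width is 1024 * n; height follows from the aspect ratio (width / height)."""
    width = 1024 * n
    height = Fraction(width) / aspect
    if height.denominator != 1:
        raise ValueError("aspect ratio does not give a whole number of lines")
    return width, int(height)

print(reference_resolution(2, Fraction(4, 3)))   # (2048, 1536)
print(reference_resolution(4, Fraction(4, 3)))   # (4096, 3072)
print(reference_resolution(2, Fraction(16, 9)))  # (2048, 1152)
```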

Computer monitors

Computer monitors have traditionally possessed higher resolutions than most televisions.

Evolution of standards

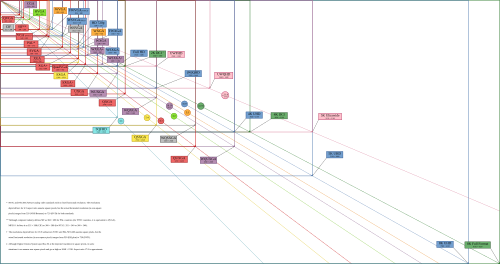


Many personal computers introduced in the late 1970s and the 1980s were designed to use television receivers as their display devices, making the resolutions dependent on the television standards in use, including PAL and NTSC. Picture sizes were usually limited to ensure the visibility of all the pixels in the major television standards and the broad range of television sets with varying amounts of overscan. The actual drawable picture area was, therefore, somewhat smaller than the whole screen, and was usually surrounded by a static-colored border (see image below). Also, the interlace scanning was usually omitted in order to provide more stability to the picture, effectively halving the vertical resolution in the process. 160 × 200, 320 × 200 and 640 × 200 on NTSC were relatively common resolutions in the era (224, 240 or 256 scanlines were also common). In the IBM PC world, these resolutions came to be used by 16-color EGA video cards.

One of the drawbacks of using a classic television is that the computer display resolution is higher than the television could decode. Chroma resolution for NTSC/PAL televisions is bandwidth-limited to a maximum of 1.5 MHz, or approximately 160 pixels wide, which led to blurring of the color for 320- or 640-wide signals, and made text difficult to read (see example image below). Many users upgraded to higher-quality televisions with S-Video or RGBI inputs that helped eliminate chroma blur and produce more legible displays. The earliest, lowest cost solution to the chroma problem was offered in the Atari 2600 Video Computer System and the Apple II+, both of which offered the option to disable the color and view a legacy black-and-white signal. On the Commodore 64, GEOS mirrored the Mac OS method of using black-and-white to improve readability.
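The link between a 1.5 MHz chroma bandwidth and roughly 160 pixels can be approximated with a Nyquist-style estimate, in which one cycle of the highest frequency spans two pixels. The active line duration of about 52.6 µs used below is an assumption for NTSC, not a figure from the text, and the function name is illustrative.

```python
def resolvable_pixels(bandwidth_hz, active_line_s):
    """Nyquist-style estimate: one cycle of the highest frequency spans two pixels."""
    return 2 * bandwidth_hz * active_line_s

CHROMA_BANDWIDTH_HZ = 1.5e6   # figure quoted in the text
ACTIVE_LINE_S = 52.6e-6       # assumed visible part of one NTSC scan line

print(round(resolvable_pixels(CHROMA_BANDWIDTH_HZ, ACTIVE_LINE_S)))  # 158
```

Under these assumptions the estimate lands close to the 160-pixel figure, which is half or a quarter of the 320- and 640-pixel-wide modes whose color detail the television could not reproduce.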

The 640 × 400i resolution (720 × 480i with borders disabled) was first introduced by home computers such as the Commodore Amiga and, later, Atari Falcon. These computers used interlace to boost the maximum vertical resolution. These modes were only suited to graphics or gaming, as the flickering interlace made reading text in word processor, database, or spreadsheet software difficult. (Modern game consoles solve this problem by pre-filtering the 480i video to a lower resolution. For example, Final Fantasy XII suffers from flicker when the filter is turned off, but stabilizes once filtering is restored. The computers of the 1980s lacked sufficient power to run similar filtering software.)

The advantage of a 720 × 480i overscanned computer was an easy interface with interlaced TV production, leading to the development of Newtek's Video Toaster. This device allowed Amigas to be used for CGI creation in various news departments (for example, weather overlays) and in drama programs such as NBC's seaQuest and The WB's Babylon 5.

In the PC world, the IBM PS/2 VGA (multi-color) on-board graphics chips used a non-interlaced (progressive) 640 × 480 × 16 color resolution that was easier to read and thus more useful for office work. It was the standard resolution from 1990 to around 1996.[citation needed] The standard resolution was 800 × 600 until around 2000. Microsoft Windows XP, released in 2001, was designed to run at 800 × 600 minimum, although it is possible to select the original 640 × 480 in the Advanced Settings window.

Programs designed to mimic older hardware such as Atari, Sega, or Nintendo game consoles (emulators), when attached to multiscan CRTs, routinely use much lower resolutions, such as 160 × 200 or 320 × 400, for greater authenticity, though other emulators have taken advantage of pixelation recognition on circle, square, triangle and other geometric features on a lesser resolution for a more scaled vector rendering. Some emulators, at higher resolutions, can even mimic the aperture grille and shadow masks of CRT monitors.

In 2002, 1024 × 768 eXtended Graphics Array was the most common display resolution. Many web sites and multimedia products were re-designed from the previous 800 × 600 format to layouts optimized for 1024 × 768.

The availability of inexpensive LCD monitors made the 5∶4 aspect ratio resolution of 1280 × 1024 more popular for desktop usage during the first decade of the 21st century. Many computer users including CAD users, graphic artists and video game players ran their computers at 1600 × 1200 resolution (UXGA) or higher, such as 2048 × 1536 QXGA, if they had the necessary equipment. Other available resolutions included oversize aspects like 1400 × 1050 SXGA+ and wide aspects like 1280 × 800 WXGA, 1440 × 900 WXGA+, 1680 × 1050 WSXGA+, and 1920 × 1200 WUXGA; monitors built to the 720p and 1080p standard were also not unusual among home media and video game players, due to the perfect screen compatibility with movie and video game releases. A new more-than-HD resolution of 2560 × 1600 WQXGA was released in 30-inch LCD monitors in 2007.

In 2010, 27-inch LCD monitors with the 2560 × 1440 resolution were released by multiple manufacturers, and in 2012, Apple introduced a 2880 × 1800 display on the MacBook Pro. Panels for professional environments, such as medical use and air traffic control, support resolutions up to 4096 × 2160[3] (or, more relevant for control rooms, 1∶1 2048 × 2048 pixels).[4][5]

Common display resolutions

| Standard | Aspect ratio | Width (px) | Height (px) | Megapixels | Steam[6] (%) | StatCounter[7] (%) |
|---|---|---|---|---|---|---|
| nHD | 16:9 | 640 | 360 | 0.230 | N/A | 0.47 |
| VGA | 4:3 | 640 | 480 | 0.307 | N/A | N/A |
| SVGA | 4:3 | 800 | 600 | 0.480 | N/A | 0.76 |
| XGA | 4:3 | 1024 | 768 | 0.786 | 0.38 | 2.78 |
| WXGA | 16:9 | 1280 | 720 | 0.922 | 0.36 | 4.82 |
| WXGA | 16:10 | 1280 | 800 | 1.024 | 0.61 | 3.08 |
| SXGA | 5:4 | 1280 | 1024 | 1.311 | 1.24 | 2.47 |
| HD | ≈16:9 | 1360 | 768 | 1.044 | 1.55 | 1.38 |
| HD | ≈16:9 | 1366 | 768 | 1.049 | 10.22 | 23.26 |
| WXGA+ | 16:10 | 1440 | 900 | 1.296 | 3.12 | 6.98 |
| N/A | 16:9 | 1536 | 864 | 1.327 | N/A | 8.53 |
| HD+ | 16:9 | 1600 | 900 | 1.440 | 2.59 | 4.14 |
| UXGA | 4:3 | 1600 | 1200 | 1.920 | N/A | N/A |
| WSXGA+ | 16:10 | 1680 | 1050 | 1.764 | 1.97 | 2.23 |
| FHD | 16:9 | 1920 | 1080 | 2.074 | 64.81 | 20.41 |
| WUXGA | 16:10 | 1920 | 1200 | 2.304 | 0.81 | 0.93 |
| QWXGA | 16:9 | 2048 | 1152 | 2.359 | N/A | 0.51 |
| QXGA | 4:3 | 2048 | 1536 | 3.145 | | |
| UWFHD | ≈21:9 | 2560 | 1080 | 2.765 | 1.13 | N/A |
| QHD | 16:9 | 2560 | 1440 | 3.686 | 6.23 | 2.15 |
| WQXGA | 16:10 | 2560 | 1600 | 4.096 | <0.58 | <2.4 |
| UWQHD | ≈21:9 | 3440 | 1440 | 4.954 | 0.87 | N/A |
| 4K UHD | 16:9 | 3840 | 2160 | 8.294 | 2.12 | N/A |
| DUHD | 32:9 | 7680 | 2160 | 16.588 | N/A | |
| Other | | | | | 2.00 | 15.09 |
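The megapixel and aspect-ratio columns of the table follow directly from the width and height. The sketch below, with the illustrative helper name `describe`, recomputes them for a few rows.

```python
from math import gcd

def describe(width, height):
    """Return (megapixels rounded to 3 places, exact reduced aspect ratio)."""
    megapixels = round(width * height / 1_000_000, 3)
    divisor = gcd(width, height)
    return megapixels, f"{width // divisor}:{height // divisor}"

print(describe(1920, 1080))  # (2.074, '16:9')
print(describe(1280, 1024))  # (1.311, '5:4')
print(describe(1366, 768))   # (1.049, '683:384')
```

The last result shows why the table marks 1366 × 768 as only approximately 16:9: its exact ratio does not reduce to small integers. Ratios listed as 16:10 reduce further to 8:5 and are written as 16:10 by convention.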

In recent years the 16:9 aspect ratio has become more common in notebook displays, and 1366 × 768 (HD) has become popular for most low-cost notebooks, while 1920 × 1080 (FHD) and higher resolutions are available for more premium notebooks.

When a computer display resolution is set higher than the physical screen resolution (native resolution), some video drivers make the virtual screen scrollable over the physical screen, thus realizing a two-dimensional virtual desktop with its viewport. Most LCD manufacturers do make note of the panel's native resolution, as working in a non-native resolution on LCDs will result in a poorer image, due to dropping of pixels to make the image fit (when using DVI) or insufficient sampling of the analog signal (when using a VGA connector). Few CRT manufacturers will quote the true native resolution, because CRTs are analog in nature and can vary their display from as low as 320 × 200 (emulation of older computers or game consoles) to as high as the internal board will allow, or until the image becomes too detailed for the vacuum tube to recreate (i.e., analog blur). Thus, CRTs provide a variability in resolution that fixed-resolution LCDs cannot provide.
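The scrollable virtual screen mentioned above can be modelled as a viewport whose origin is clamped to the virtual desktop. The following is a hypothetical sketch for one axis, not the behavior of any particular driver; the function name `pan_viewport` and the cursor-following policy are assumptions.

```python
def pan_viewport(cursor, virtual_size, physical_size, origin):
    """Move the viewport origin just enough to keep the cursor visible (one axis)."""
    if cursor < origin:
        origin = cursor
    elif cursor >= origin + physical_size:
        origin = cursor - physical_size + 1
    # Never scroll past the edges of the virtual screen.
    return max(0, min(origin, virtual_size - physical_size))

# 1600-pixel-wide virtual desktop viewed through a 1024-pixel-wide panel.
x = 0
for cursor_x in (500, 1200, 1599, 300):
    x = pan_viewport(cursor_x, 1600, 1024, x)
    print(cursor_x, "->", x)
```

The same function would be applied independently to the vertical axis to obtain the two-dimensional behavior.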

See also

- Display aspect ratio

- Display size

- Pixel density of computer displays – PPI (for example, a 20-inch 1680 × 1050 screen has a PPI of 99.06)

- Resolution independence

- Ultrawide formats

- Video scaler

- Widescreen

References

1. "Screen resolution? Aspect ratio? What do 720p, 1080p, QHD, 4K and 8K mean?". digitalcitizen.life. 2016-05-20. Retrieved 2017-08-28.

2. Robin, Michael (2005-04-01). "Horizontal resolution: Pixels or lines". Broadcast Engineering. Archived from the original on 2012-08-15. Retrieved 2012-07-22.

3. "Eizo industrial monitor does 4K resolution at 36-inches, start saving now". Engadget. 21 June 2011. Retrieved 2021-05-15.

4. "EIZO Releases 5th Generation 2K x 2K Primary Control Monitor with New Design and Extensive Customizability for ATC Centers | EIZO". eizoglobal. Retrieved 2021-05-15.

5. nikolai (February 2010). "Eizo outs Raptor WS3001 30-inch LCD monitor". Retrieved 2021-05-15.

6. "Steam Hardware & Software Survey". Valve. Archived from the original on 2020-07-07. Retrieved 2020-07-16.

7. "Desktop Screen Resolution Stats Worldwide". StatCounter. Retrieved 2020-07-16.