The FPF Center for Artificial Intelligence: Navigating AI Policy, Regulation, and Governance

The rapid deployment of Artificial Intelligence for consumer, enterprise, and government uses has created challenges for policymakers, compliance experts, and regulators. AI policy stakeholders are seeking sophisticated, practical policy information and analysis.

This is where the FPF Center for Artificial Intelligence comes in, expanding FPF’s role as the leading pragmatic and trusted voice for those who seek impartial, practical analysis of the latest challenges for AI-related regulation, compliance, and ethical use.

At the FPF Center for Artificial Intelligence, we help policymakers, privacy experts at organizations, civil society, and academics navigate AI policy and governance. The Center is supported by a Leadership Council of experts from around the globe. The Council consists of members from industry, academia, and civil society, as well as current and former policymakers.

FPF has a long history of AI-related and emerging technology policy work that has focused on data, privacy, and the responsible use of technology to mitigate harms. From FPF’s presentation to global privacy regulators about emerging AI technologies and risks in 2017 to our briefing for U.S. Congressional members detailing the risks and mitigation strategies for AI-powered workplace tech in 2023, FPF has helped policymakers around the world better understand AI risks and opportunities while equipping data, privacy, and AI experts with the information they need to develop and deploy AI responsibly in their organizations.

In 2024, FPF received a grant from the National Science Foundation (NSF) to advance the White House Executive Order on Artificial Intelligence by supporting the use of Privacy Enhancing Technologies (PETs) by government agencies and the private sector through advancing legal certainty, standardization, and equitable uses. FPF is also a member of the U.S. AI Safety Institute at the National Institute of Standards and Technology (NIST), where it focuses on assessing the policy implications of the changing nature of artificial intelligence.

Areas of work within the FPF Center for Artificial Intelligence include:

- Legislative Comparison

- Responsible AI Governance

- AI Policy by Sector

- AI Assessments & Analyses

- Novel AI Policy Issues

- AI and Privacy Enhancing Technologies

FPF Center for AI Leadership Council

The FPF Center for Artificial Intelligence will be supported by a Leadership Council of leading experts from around the globe. The Council will consist of members from industry, academia, civil society, and current and former policymakers.

We are delighted to announce the founding Leadership Council members:

- Estela Aranha, Member of the United Nations High-level Advisory Body on AI; Former State Secretary for Digital Rights, Ministry of Justice and Public Security, Federal Government of Brazil

- Jocelyn Aqua, Principal, Data, Risk, Privacy and AI Governance, PricewaterhouseCoopers LLP

- John Bailey, Nonresident Senior Fellow, American Enterprise Institute

- Lori Baker, Vice President, Data Protection & Regulatory Compliance, Dubai International Financial Centre Authority (DPA)

- Cari Benn, Assistant Chief Privacy Officer, Microsoft Corporation

- Andrew Bloom, Vice President & Chief Privacy Officer, McGraw Hill

- Kate Charlet, Head of Global Privacy, Safety, and Security Policy, Google

- Prof. Simon Chesterman, David Marshall Professor of Law & Vice Provost, National University of Singapore; Principal Researcher, Office of the UNSG’s Envoy on Technology, High-Level Advisory Body on AI

- Barbara Cosgrove, Vice President, Chief Privacy Officer, Workday

- Jo Ann Davaris, Vice President, Global Privacy, Booking Holdings Inc.

- Elizabeth Denham, Chief Policy Strategist, Information Accountability Foundation, Former UK ICO Commissioner and British Columbia Privacy Commissioner

- Lydia F. de la Torre, Senior Lecturer at University of California, Davis; Founder, Golden Data Law, PBC; Former California Privacy Protection Agency Board Member

- Leigh Feldman, SVP, Chief Privacy Officer, Visa Inc.

- Lindsey Finch, Executive Vice President, Global Privacy & Product Legal, Salesforce

- Harvey Jang, Vice President, Chief Privacy Officer, Cisco Systems, Inc.

- Lisa Kohn, Director of Public Policy, Amazon

- Emerald de Leeuw-Goggin, Global Head of AI Governance & Privacy, Logitech

- Caroline Louveaux, Chief Privacy Officer, Mastercard

- Ewa Luger, Professor of Human-Data Interaction, University of Edinburgh; Co-Director, Bridging Responsible AI Divides (BRAID)

- Dr. Gianclaudio Malgieri, Associate Professor of Law & Technology at eLaw, University of Leiden

- State Senator James Maroney, Connecticut

- Christina Montgomery, Chief Privacy & Trust Officer, AI Ethics Board Chair, IBM

- Carolyn Pfeiffer, Senior Director, Privacy, AI & Ethics and DSSPE Operations, Johnson & Johnson Innovative Medicine

- Ben Rossen, Associate General Counsel, AI Policy & Regulation, OpenAI

- Crystal Rugege, Managing Director, Centre for the Fourth Industrial Revolution Rwanda

- Guido Scorza, Member, The Italian Data Protection Authority

- Nubiaa Shabaka, Global Chief Privacy Officer and Chief Cyber Legal Officer, Adobe, Inc.

- Rob Sherman, Vice President and Deputy Chief Privacy Officer for Policy, Meta

- Dr. Anna Zeiter, Vice President & Chief Privacy Officer, Privacy, Data & AI Responsibility, eBay

- Yeong Zee Kin, Chief Executive of Singapore Academy of Law and former Assistant Chief Executive (Data Innovation and Protection Group), Infocomm Media Development Authority of Singapore

For more information on the FPF Center for AI, email [email protected]

Featured

Do LLMs Contain Personal Information? California AB 1008 Highlights Evolving, Complex Techno-Legal Debate

By Jordan Francis, Beth Do, and Stacey Gray, with thanks to Dr. Rob van Eijk and Dr. Gabriela Zanfir-Fortuna for their contributions. California Governor Gavin Newsom signed Assembly Bill (AB) 1008 into law on September 28, amending the definition of “personal information” under the California Consumer Privacy Act (CCPA) to provide that personal information can […]

Future of Privacy Forum Convenes Over 200 State Lawmakers in AI Policy Working Group Focused on 2025 Legislative Sessions

The Multistate AI Policymaker Working Group (MAP-WG) is convened by FPF to help state lawmakers from more than 45 states collaborate on emerging technologies and related policy issues. OCTOBER 21, 2024: In the lead-up to the 2025 legislative session, FPF is excited to convene the expanded Multistate AI Policymaker Working Group (MAP-WG), a bipartisan […]

Synthetic Content: Exploring the Risks, Technical Approaches, and Regulatory Responses

Today, the Future of Privacy Forum (FPF) released a new report, Synthetic Content: Exploring the Risks, Technical Approaches, and Regulatory Responses, which analyzes the various approaches being pursued to address the risks associated with “synthetic” content – material produced by generative artificial intelligence (AI) tools. As more people use generative AI to create synthetic content, […]

FPF Unveils Report on Emerging Trends in U.S. State AI Regulation

Today, the Future of Privacy Forum (FPF) launched a new report—U.S. State AI Legislation: A Look at How U.S. State Policymakers Are Approaching Artificial Intelligence Regulation— analyzing recent proposed and enacted legislation in U.S. states. As artificial intelligence (AI) becomes increasingly embedded in daily life and critical sectors like healthcare and employment, state lawmakers have […]

Privacy Roundup from Summer Developer Conference Season 2024

Ahh, summer. A time for hot dogs, swimming pools, and software developer conferences. For third-party application developers to deliver new tools with the best features for the lucrative fall quarter, they must have access to all the APIs and tools by the summer before. This has meant that early summer has become known as a […]

FPF Highlights Intersection of AI, Privacy, and Civil Rights in Response to California’s Proposed Employment Regulations

On July 18, the Future of Privacy Forum submitted comments to the California Civil Rights Council (Council) in response to their proposed modifications to the state Fair Employment and Housing Act (FEHA) regarding automated-decision systems (ADS). As one of the first state agencies in the U.S. to advance modernized employment regulations to account for automated-decision […]

FPF Responds to the Federal Election Commission Decision on the use of AI in Political Campaign Advertising

The Federal Election Commission’s (FEC) abandoned rulemaking presented an opportunity to better protect the integrity of elections and campaigns, as well as to preserve and increase public trust in the growing use of AI by candidates and in campaigns. When generative AI is used carefully and responsibly, it can reach different segments of the population […]

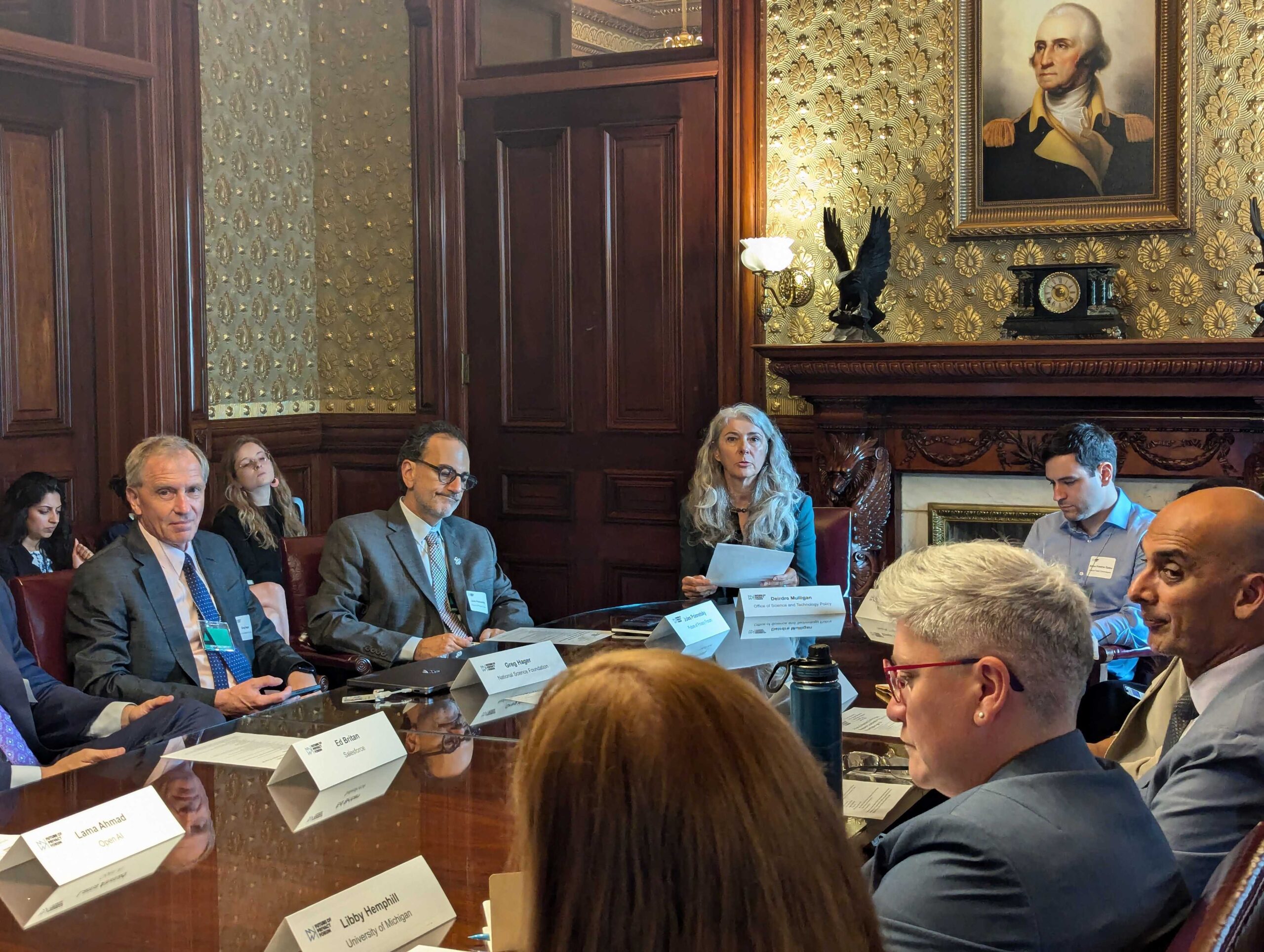

Connecting Experts to Make Privacy-Enhancing Tech and AI Work for Everyone

The Future of Privacy Forum (FPF) launched its Research Coordination Network (RCN) for Privacy-Preserving Data Sharing and Analytics on Tuesday, July 9th. The RCN supports the Biden-Harris Administration’s commitments to privacy, equity, and safety articulated in the administration’s Executive Order on Artificial Intelligence (AI). Industry experts, policymakers, civil society, and academics met to discuss the […]

NEW FPF REPORT: Confidential Computing and Privacy: Policy Implications of Trusted Execution Environments

Written by Judy Wang, FPF Communications Intern. Today, the Future of Privacy Forum (FPF) published a paper on confidential computing, a privacy-enhancing technology (PET) that marks a significant shift in the trustworthiness and verifiability of data processing for the use cases it supports, including training and use of AI models. Confidential computing leverages two key […]

A First for AI: A Close Look at The Colorado AI Act

Colorado made history on May 17, 2024, when Governor Polis signed into law the Colorado Artificial Intelligence Act (“CAIA”), the first law in the United States to comprehensively regulate the development and deployment of high-risk artificial intelligence (“AI”) systems. The law will come into effect on February 1, 2026, preceding the March 2026 effective date […]