Tutorial: Run a Batch job through Data Factory with Batch Explorer, Storage Explorer, and Python

This tutorial walks you through creating and running an Azure Data Factory pipeline that runs an Azure Batch workload. A Python script runs on the Batch nodes to get comma-separated value (CSV) input from an Azure Blob Storage container, manipulate the data, and write the output to a different storage container. You use Batch Explorer to create a Batch pool and nodes, and Azure Storage Explorer to work with storage containers and files.

In this tutorial, you learn how to:

- Use Batch Explorer to create a Batch pool and nodes.

- Use Storage Explorer to create storage containers and upload input files.

- Develop a Python script to manipulate input data and produce output.

- Create a Data Factory pipeline that runs the Batch workload.

- Use Batch Explorer to look at the output log files.

Prerequisites

- An Azure account with an active subscription. If you don't have one, create a free account.

- A Batch account with a linked Azure Storage account. You can create the accounts by using any of the following methods: Azure portal, Azure CLI, Bicep, ARM template, or Terraform.

- A Data Factory instance. To create the data factory, follow the instructions in Create a data factory.

- Batch Explorer downloaded and installed.

- Storage Explorer downloaded and installed.

- Python 3.8 or above, with the azure-storage-blob and pandas packages installed by using pip. The script in this tutorial imports both packages.

- The iris.csv input dataset downloaded from GitHub.

Use Batch Explorer to create a Batch pool and nodes

Use Batch Explorer to create a pool of compute nodes to run your workload.

Sign in to Batch Explorer with your Azure credentials.

Select your Batch account.

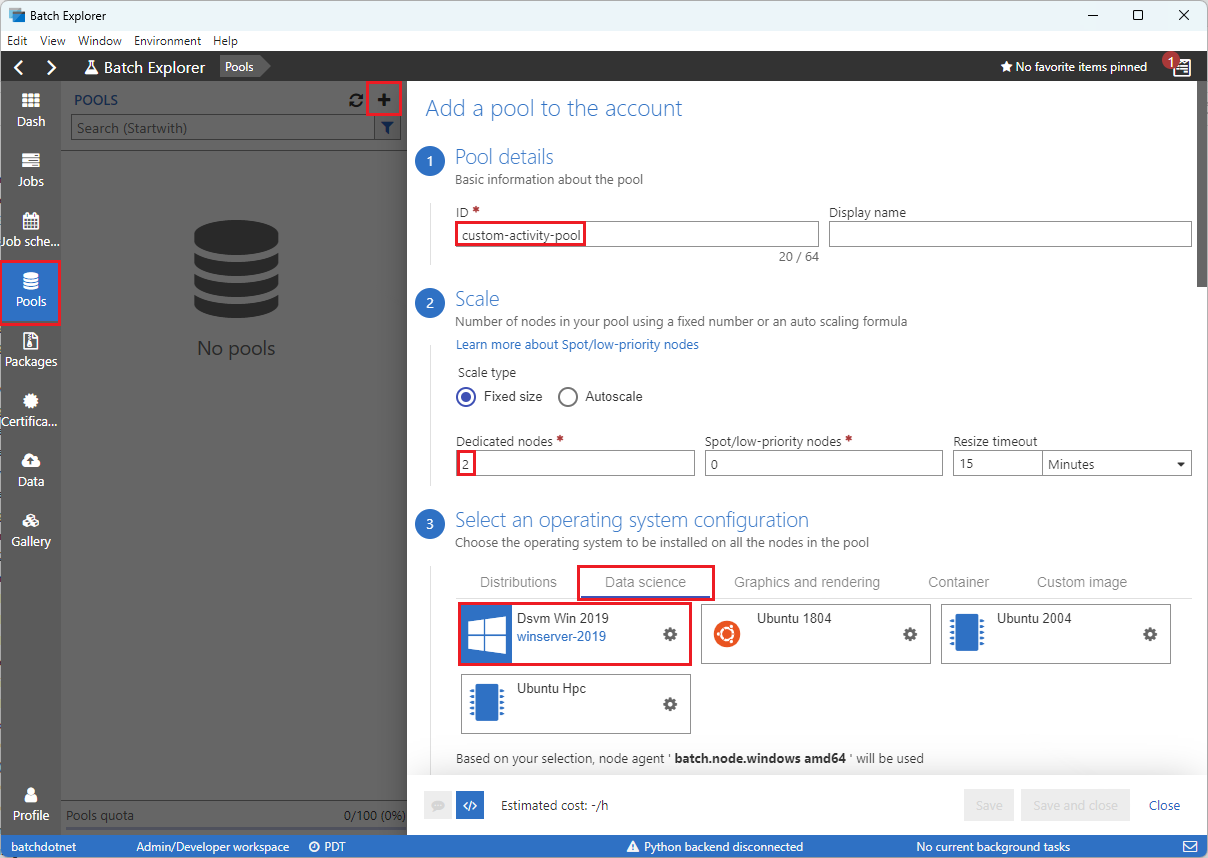

Select Pools on the left sidebar, and then select the + icon to add a pool.

Complete the Add a pool to the account form as follows:

- Under ID, enter custom-activity-pool.

- Under Dedicated nodes, enter 2.

- For Select an operating system configuration, select the Data science tab, and then select Dsvm Win 2019.

- For Choose a virtual machine size, select Standard_F2s_v2.

- For Start Task, select Add a start task.

On the start task screen, under Command line, enter the following command, and then select Select:

cmd /c "pip install azure-storage-blob pandas"

This command installs the azure-storage-blob and pandas packages on each node as it starts up.

Select Save and close.
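For reference, the form values above can be written down as data. The following is a minimal sketch, not what Batch Explorer itself sends: it builds a dictionary whose field names (id, vmSize, targetDedicatedNodes, startTask.commandLine) follow the Batch REST API's pool definition as best understood here. The helper name build_pool_spec is hypothetical, and the operating system image is left out because the tutorial doesn't give its exact image reference.

```python
import json


def build_pool_spec(pool_id, vm_size, dedicated_nodes, start_command):
    """Return a dict shaped like a Batch pool definition (OS image omitted)."""
    return {
        "id": pool_id,
        "vmSize": vm_size,
        "targetDedicatedNodes": dedicated_nodes,
        "startTask": {
            # Runs on each node when it joins the pool.
            "commandLine": start_command,
        },
    }


# Values taken from the form in this tutorial.
pool_spec = build_pool_spec(
    pool_id="custom-activity-pool",
    vm_size="Standard_F2s_v2",
    dedicated_nodes=2,
    start_command='cmd /c "pip install azure-storage-blob pandas"',
)

print(json.dumps(pool_spec, indent=2))
```

Keeping the pool settings in one place like this can make it easier to recreate the same pool later, whichever tool you use to create it.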

Use Storage Explorer to create blob containers

Use Storage Explorer to create blob containers to store input and output files, and then upload your input files.

- Sign in to Storage Explorer with your Azure credentials.

- In the left sidebar, locate and expand the storage account that's linked to your Batch account.

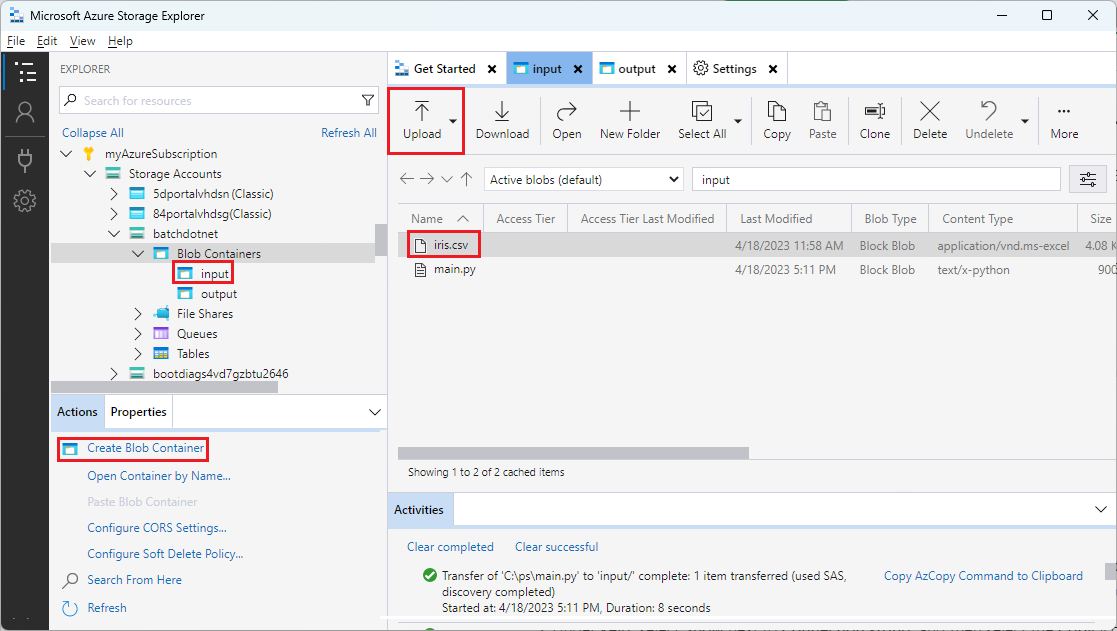

- Right-click Blob Containers, and select Create Blob Container, or select Create Blob Container from Actions at the bottom of the sidebar.

- Enter input in the entry field.

- Create another blob container named output.

- Select the input container, and then select Upload > Upload files in the right pane.

- On the Upload files screen, under Selected files, select the ellipsis ... next to the entry field.

- Browse to the location of your downloaded iris.csv file, select Open, and then select Upload.

Develop a Python script

The following Python script loads the iris.csv dataset file from your Storage Explorer input container, manipulates the data, and saves the results to the output container.

The script needs to use the connection string for the Azure Storage account that's linked to your Batch account. To get the connection string:

- In the Azure portal, search for and select the name of the storage account that's linked to your Batch account.

- On the page for the storage account, select Access keys from the left navigation under Security + networking.

- Under key1, select Show next to Connection string, and then select the Copy icon to copy the connection string.

Paste the connection string into the following script, replacing the <storage-account-connection-string> placeholder. Save the script as a file named main.py.

Important

Exposing account keys in the app source isn't recommended for production use. Restrict access to credentials, and refer to them in your code by using variables or a configuration file. It's best to store Batch and Storage account keys in Azure Key Vault.
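One way to follow this advice when you test locally is to read the connection string from an environment variable instead of pasting it into the file. The following is a minimal sketch under stated assumptions: the variable name AZURE_STORAGE_CONNECTION_STRING and the helper get_connection_string are choices made for this example, not part of the tutorial's script. The check relies only on the fact that a key-based connection string is a semicolon-separated list of key=value pairs that includes AccountName and AccountKey.

```python
import os

# Assumed variable name for this sketch; use whatever name suits your setup.
ENV_VAR = "AZURE_STORAGE_CONNECTION_STRING"


def get_connection_string(environ=os.environ):
    """Return the Storage connection string from the environment, or raise."""
    value = environ.get(ENV_VAR, "").strip()
    if not value:
        raise RuntimeError(f"Set the {ENV_VAR} environment variable first.")

    # Split "Key1=Value1;Key2=Value2" into a dict; values may contain "=".
    parts = dict(p.split("=", 1) for p in value.split(";") if "=" in p)
    if "AccountName" not in parts or "AccountKey" not in parts:
        raise RuntimeError("The value doesn't look like a key-based connection string.")
    return value
```

In main.py, you could then write connectionString = get_connection_string(). For the script to run on the Batch nodes, the same variable would have to be set there as well, which this tutorial doesn't cover.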

# Load libraries

from azure.storage.blob import BlobClient

import pandas as pd

# Define parameters

connectionString = "<storage-account-connection-string>"

containerName = "output"

outputBlobName = "iris_setosa.csv"

# Establish connection with the blob storage account

blob = BlobClient.from_connection_string(conn_str=connectionString, container_name=containerName, blob_name=outputBlobName)

# Load iris dataset from the task node

df = pd.read_csv( "iris.csv" )

# Take a subset of the records

df = df[df['Species'] == "setosa" ]

# Save the subset of the iris dataframe locally in the task node

df.to_csv(outputBlobName, index = False)

with open(outputBlobName, "rb" ) as data:

    blob.upload_blob(data, overwrite=True)

Run the script locally to test and validate functionality.

python main.py

The script should produce an output file named iris_setosa.csv that contains only the data records that have Species = setosa. After you verify that it works correctly, upload the main.py script file to your Storage Explorer input container.
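To check the local output without opening it by hand, a small standard-library helper can confirm that every row has the expected species. This is a sketch only: the helper name only_species is hypothetical, and the sample rows are made up for illustration. Only the Species column name and the setosa value come from the script above.

```python
import csv
import io


def only_species(csv_text, species="setosa", column="Species"):
    """Return True if csv_text has data rows and all of them match species."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return bool(rows) and all(row[column] == species for row in rows)


# Made-up sample rows; the real file has its own columns and values.
sample_ok = "Sepal.Length,Species\n5.1,setosa\n4.9,setosa\n"
sample_bad = "Sepal.Length,Species\n5.1,setosa\n7.0,versicolor\n"

print(only_species(sample_ok))   # True
print(only_species(sample_bad))  # False
```

To run the check against the real output, read the file first, for example only_species(open("iris_setosa.csv").read()).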

Set up a Data Factory pipeline

Create and validate a Data Factory pipeline that uses your Python script.

Get account information

The Data Factory pipeline uses your Batch and Storage account names, account key values, and Batch account endpoint. To get this information from the Azure portal:

From the Azure Search bar, search for and select your Batch account name.

On your Batch account page, select Keys from the left navigation.

On the Keys page, copy the following values:

- Batch account

- Account endpoint

- Primary access key

- Storage account name

- Key1

Create and run the pipeline

If Azure Data Factory Studio isn't already running, select Launch studio on your Data Factory page in the Azure portal.

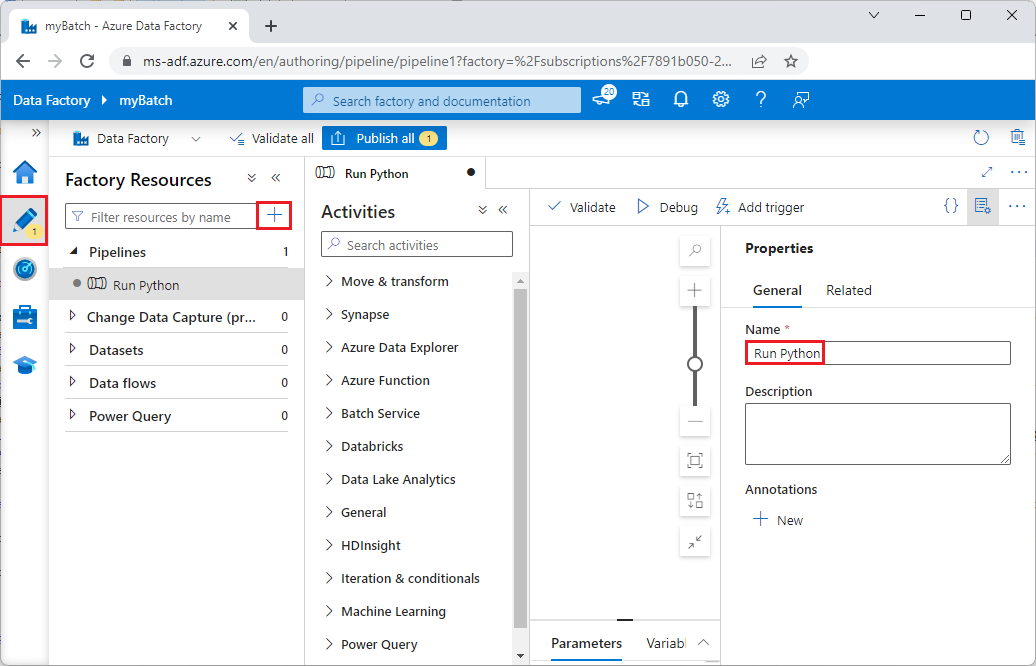

In Data Factory Studio, select the Author pencil icon in the left navigation.

Under Factory Resources, select the + icon, and then select Pipeline.

In the Properties pane on the right, change the name of the pipeline to Run Python.

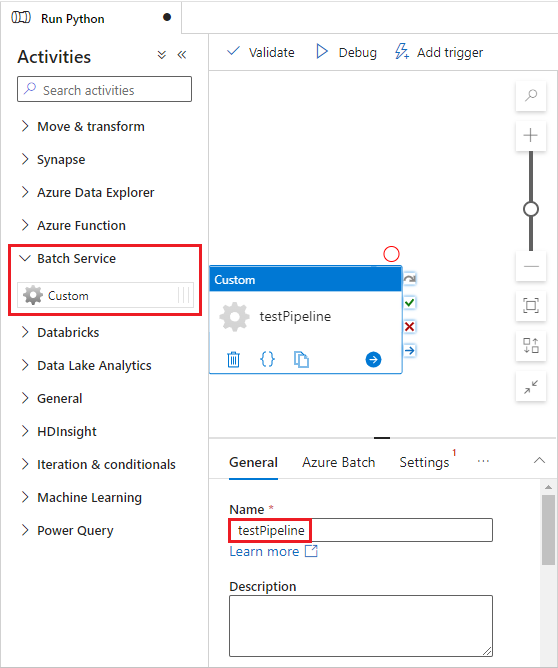

In the Activities pane, expand Batch Service, and drag the Custom activity to the pipeline designer surface.

Below the designer canvas, on the General tab, enter testPipeline under Name.

Select the Azure Batch tab, and then select New.

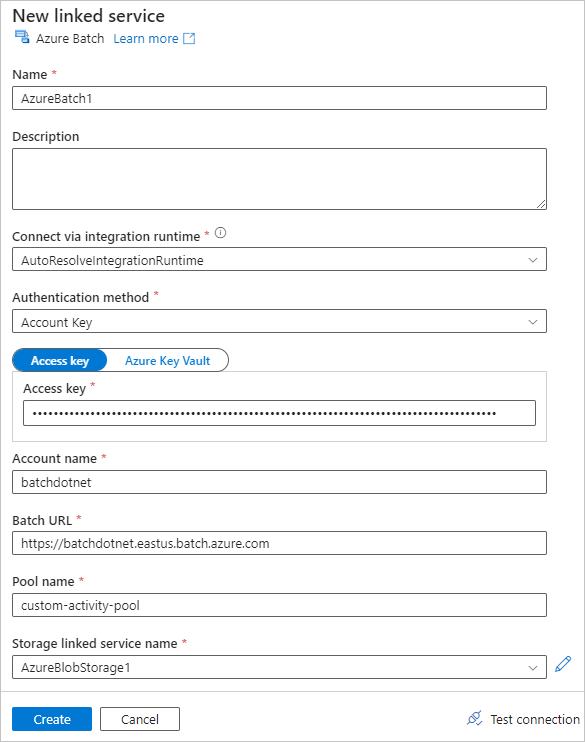

Complete the New linked service form as follows:

- Name: Enter a name for the linked service, such as AzureBatch1.

- Access key: Enter the primary access key you copied from your Batch account.

- Account name: Enter your Batch account name.

- Batch URL: Enter the account endpoint you copied from your Batch account, such as https://batchdotnet.eastus.batch.azure.com.

- Pool name: Enter custom-activity-pool, the pool you created in Batch Explorer.

- Storage account linked service name: Select New. On the next screen, enter a Name for the linked storage service, such as AzureBlobStorage1, select your Azure subscription and linked storage account, and then select Create.

At the bottom of the Batch New linked service screen, select Test connection. When the connection is successful, select Create.

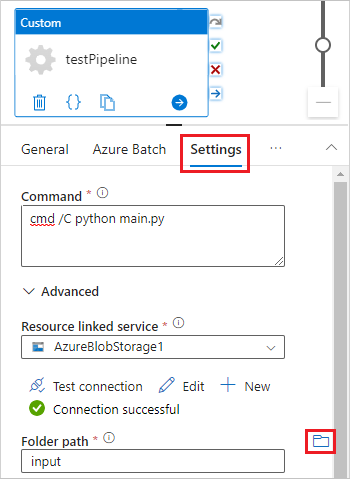
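Behind the form, Data Factory stores a linked service as a JSON definition. The following sketch builds a dictionary that mirrors the form fields above. The property names (accountName, accessKey, batchUri, poolName, linkedServiceName) follow the Azure Batch linked service schema as best recalled here, so treat them as assumptions and compare them with the JSON that Data Factory Studio shows for your own linked service. The helper name batch_linked_service is hypothetical, the account name batchdotnet is inferred from the example URL, and the access key is a placeholder.

```python
import json


def batch_linked_service(name, account_name, access_key, batch_url,
                         pool_name, storage_linked_service):
    """Return a dict shaped like an Azure Batch linked service definition."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBatch",
            "typeProperties": {
                "accountName": account_name,
                # Keys are stored as secure strings rather than plain text.
                "accessKey": {"type": "SecureString", "value": access_key},
                "batchUri": batch_url,
                "poolName": pool_name,
                "linkedServiceName": {
                    "referenceName": storage_linked_service,
                    "type": "LinkedServiceReference",
                },
            },
        },
    }


linked_service = batch_linked_service(
    name="AzureBatch1",
    account_name="batchdotnet",  # assumed from the example URL
    access_key="<batch-primary-access-key>",  # placeholder, not a real key
    batch_url="https://batchdotnet.eastus.batch.azure.com",
    pool_name="custom-activity-pool",
    storage_linked_service="AzureBlobStorage1",
)

print(json.dumps(linked_service, indent=2))
```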

Select the Settings tab, and enter or select the following settings:

- Command: Enter cmd /C python main.py.

- Resource linked service: Select the linked storage service you created, such as AzureBlobStorage1, and test the connection to make sure it's successful.

- Folder path: Select the folder icon, and then select the input container and select OK. The files from this folder download from the container to the pool nodes before the Python script runs.

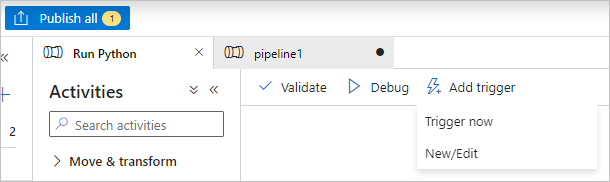
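The settings above correspond to a Custom activity entry in the pipeline's JSON. The following sketch shows roughly what that entry might look like, using the names chosen in this tutorial. The property names (command, resourceLinkedService, folderPath) follow the Custom activity schema as best understood here, and the helper custom_activity is hypothetical, so compare the result with the pipeline code view in Data Factory Studio before relying on it.

```python
import json


def custom_activity(name, batch_linked_service, storage_linked_service,
                    command, folder_path):
    """Return a dict shaped like a Data Factory Custom activity definition."""

    def ref(linked_service_name):
        return {"referenceName": linked_service_name,
                "type": "LinkedServiceReference"}

    return {
        "name": name,
        "type": "Custom",
        # The Batch linked service decides which pool runs the command.
        "linkedServiceName": ref(batch_linked_service),
        "typeProperties": {
            "command": command,
            # Files under folderPath are copied to the node before the command runs.
            "resourceLinkedService": ref(storage_linked_service),
            "folderPath": folder_path,
        },
    }


activity = custom_activity(
    name="testPipeline",
    batch_linked_service="AzureBatch1",
    storage_linked_service="AzureBlobStorage1",
    command="cmd /C python main.py",
    folder_path="input",
)

print(json.dumps(activity, indent=2))
```

Because both main.py and iris.csv are in the input container, both files are on the node when the command runs, which is why the script can open iris.csv by its file name alone.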

Select Validate on the pipeline toolbar to validate the pipeline.

Select Debug to test the pipeline and ensure it works correctly.

Select Publish all to publish the pipeline.

Select Add trigger, and then select Trigger now to run the pipeline, or New/Edit to schedule it.

Use Batch Explorer to view log files

If running your pipeline produces warnings or errors, you can use Batch Explorer to look at the stdout.txt and stderr.txt output files for more information.

- In Batch Explorer, select Jobs from the left sidebar.

- Select the adfv2-custom-activity-pool job.

- Select a task that had a failure exit code.

- View the stdout.txt and stderr.txt files to investigate and diagnose your problem.
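When a Python task fails, the last non-empty line of stderr.txt usually names the exception, because a Python traceback ends with the exception type and message. The following sketch pulls out that line from text you've saved or copied from Batch Explorer. The helper name last_error_line and the sample traceback are made up for illustration.

```python
def last_error_line(stderr_text):
    """Return the last non-empty line of stderr output, or None if there is none."""
    lines = [line.strip() for line in stderr_text.splitlines() if line.strip()]
    return lines[-1] if lines else None


# Hypothetical stderr.txt content for a task that couldn't find its input file.
sample_stderr = """Traceback (most recent call last):
  File "main.py", line 12, in <module>
    df = pd.read_csv("iris.csv")
FileNotFoundError: [Errno 2] No such file or directory: 'iris.csv'
"""

print(last_error_line(sample_stderr))
```

An error like the one in this sample would suggest that iris.csv wasn't in the folder selected under Folder path.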

Clean up resources

Batch accounts, jobs, and tasks are free, but compute nodes incur charges even when they're not running jobs. It's best to allocate node pools only as needed, and delete the pools when you're done with them. Deleting pools deletes all task output on the nodes, and the nodes themselves.

Input and output files remain in the storage account and can incur charges. When you no longer need the files, you can delete the files or containers. When you no longer need your Batch account or linked storage account, you can delete them.

Next steps

In this tutorial, you learned how to use a Python script with Batch Explorer, Storage Explorer, and Data Factory to run a Batch workload. For more information about Data Factory, see What is Azure Data Factory?
